Apache Iceberg Alternatives: What Are Your Options for Lakehouse Architectures?


Apache Iceberg is a table format for data lake and lakehouse architectures. While Apache Iceberg leads in popularity because of its general-purpose nature, alternatives offer similar features for slightly different use cases.


This article will take you through Apache Iceberg alternatives, such as Apache Hudi, Delta Lake, and Apache Paimon. We’ll explore how these alternatives stack up against Apache Iceberg, and look at tools (Apache XTable and Delta Lake UniForm) that seem like Iceberg alternatives but aren’t.

Table of Contents

- Apache Iceberg alternatives: An overview

- Apache Iceberg alternatives: What are your options?

- Apache Iceberg alternative #1: Apache Hudi, Uber’s upgrade from Apache Hive

- Apache Iceberg alternative #2: Delta Lake, Databricks-native open table format

- Apache Iceberg alternative #3: Apache Paimon, Alibaba’s table format for streaming

- Unlock business value from table formats with a metadata control plane

- Bottom line

- Apache Iceberg alternatives: Related reads

Apache Iceberg alternatives: An overview

Apache Iceberg’s primary function is to provide efficient storage, organization, and management of the files where data is stored. Predecessors such as Apache Hive performed similar functions but were closely tied to the Hadoop ecosystem.

Iceberg brings a more platform-agnostic table format that works across multiple catalogs, query engines, and other tools. There are alternatives to Iceberg, but before comparing them, let’s understand the key features of a data lake table format and how it differs from a file format or a storage engine.

Read more → Everything you need to know about Apache Iceberg at a glance

Key features of a data lake table format

A table format is generally file format-agnostic, or at least it supports a range of file formats, such as Parquet, Avro, ORC, etc. On the other hand, a file format is responsible for defining how data is stored on disk or in memory. As a result, a file format is only concerned with the efficient storage of files.

Meanwhile, some of the primary responsibilities of a data lake table format are to:

- Efficiently organize and manage data files

- Allow point-in-time queries by creating snapshots

- Support multiple compute and query engines

- Facilitate concurrent reads and writes using ACID guarantees

- Manage the creation and evolution of schemas and partitions
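To make the snapshot idea in the list above concrete, here is a minimal, in-memory sketch of how a table format's metadata layer might track data files per commit and answer point-in-time queries. `ToyTable`, `Snapshot`, `commit`, and `files_as_of` are hypothetical names chosen for illustration; no real table format exposes this API, and real formats persist this metadata as files next to the data.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """Immutable list of the data files that make up the table at one commit."""
    snapshot_id: int
    data_files: tuple


@dataclass
class ToyTable:
    """Hypothetical stand-in for a table format's metadata layer (illustration only)."""
    snapshots: list = field(default_factory=list)

    def commit(self, add=(), remove=()):
        # Each commit derives a new snapshot from the previous one.
        # Older snapshots are never modified, so they stay readable.
        current = self.snapshots[-1].data_files if self.snapshots else ()
        removed = set(remove)
        files = tuple(f for f in current if f not in removed) + tuple(add)
        snapshot = Snapshot(len(self.snapshots) + 1, files)
        self.snapshots.append(snapshot)
        return snapshot.snapshot_id

    def files_as_of(self, snapshot_id=None):
        # Point-in-time read: resolve the file list for one snapshot (latest by default).
        if not self.snapshots:
            return ()
        if snapshot_id is None:
            return self.snapshots[-1].data_files
        for snapshot in self.snapshots:
            if snapshot.snapshot_id == snapshot_id:
                return snapshot.data_files
        raise KeyError(f"unknown snapshot {snapshot_id}")


table = ToyTable()
first = table.commit(add=["part-0.parquet", "part-1.parquet"])
second = table.commit(add=["part-2.parquet"], remove=["part-0.parquet"])
print(table.files_as_of())       # files in the latest snapshot
print(table.files_as_of(first))  # files as they were after the first commit
```

Because a reader resolves a snapshot once and then only touches the files it lists, a later commit does not change what that reader sees, which is the basis for both time travel and consistent concurrent reads.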

Now, let’s look at some of the main alternatives to Apache Iceberg.

Apache Iceberg alternatives: What are your options?

The key predecessor to Apache Iceberg was the Apache Hive table format, backed by the Hive Metastore, which was tightly coupled with the Hadoop ecosystem. Several attempts were made to solve issues such as schema evolution and support for ACID transactions at the file-format level. That led to the realization that separating file and table formats was a good idea.

Since then, there have been three main alternatives to Apache Iceberg:

- Apache Hudi

- Apache Paimon

- Delta Lake

The above Apache Iceberg alternatives differ in how they fulfill the primary responsibilities of a table format. They also differ in their level of support for open data lakehouse architecture and the use cases. With that in mind, let’s examine each Apache Iceberg alternative in more detail.

Apache Iceberg alternative #1: Apache Hudi, Uber’s upgrade from Apache Hive

In 2016, Uber developed Apache Hudi to overcome the challenges of performing updates and deletes on an Apache Hive-based Hadoop setup. Apache Hudi has been a top-level Apache project since 2020. December 2024 marked the release of Apache Hudi 1.0.

Why Hudi became a viable option for lakehouse setups

Before Hudi, even small updates triggered the copying of whole partitions, which was inefficient. With an increasing need for streaming data, Apache Hive had stopped being a suitable solution.

Hudi solved these problems by offering two table types that differed in their support for various queries:

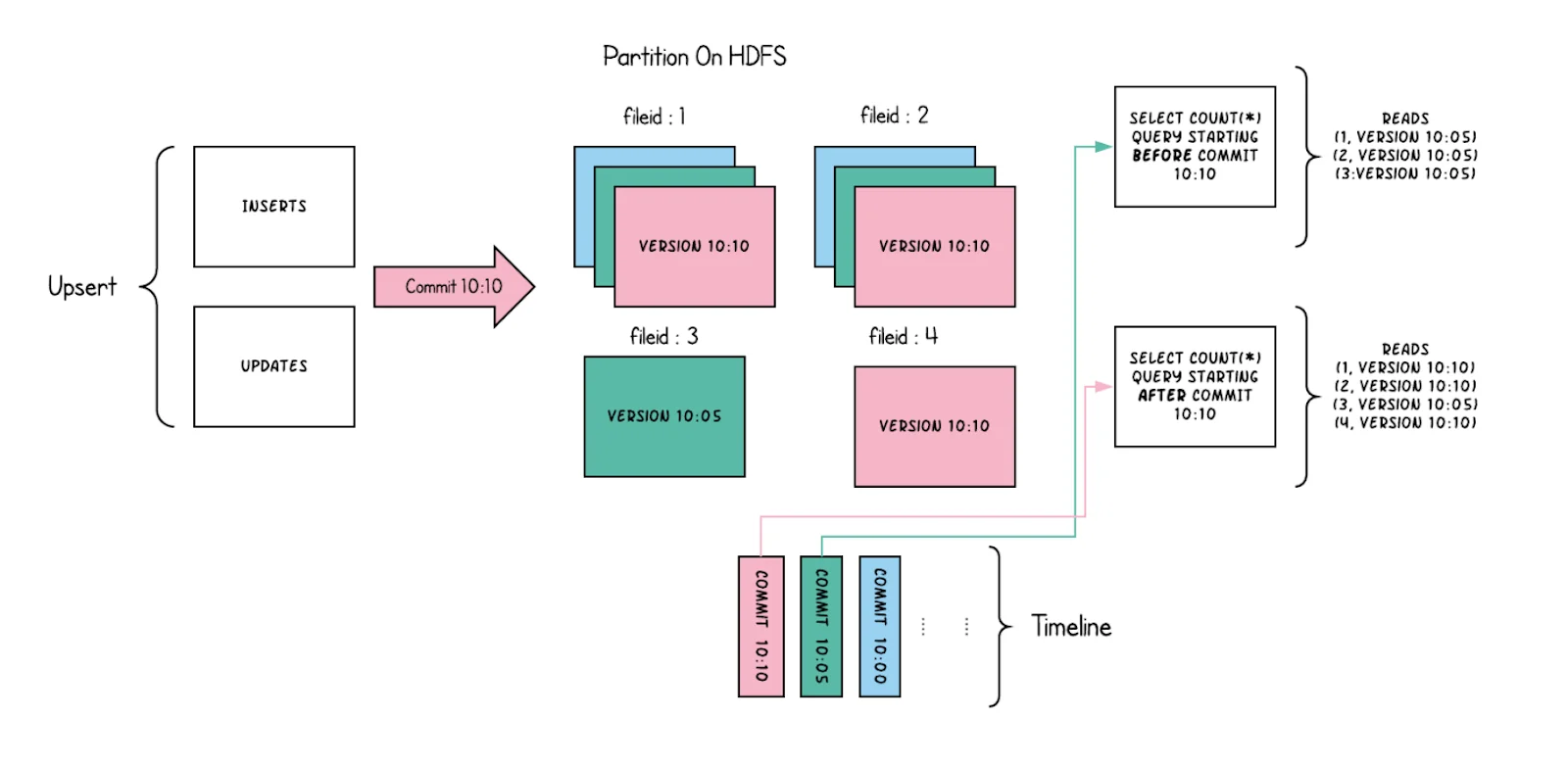

- Copy on Write (COW): Stores data using columnar file formats like Parquet and offers support for snapshot queries and incremental queries

Data being written into a Copy On Write (COW) table - Source: Apache Hudi.

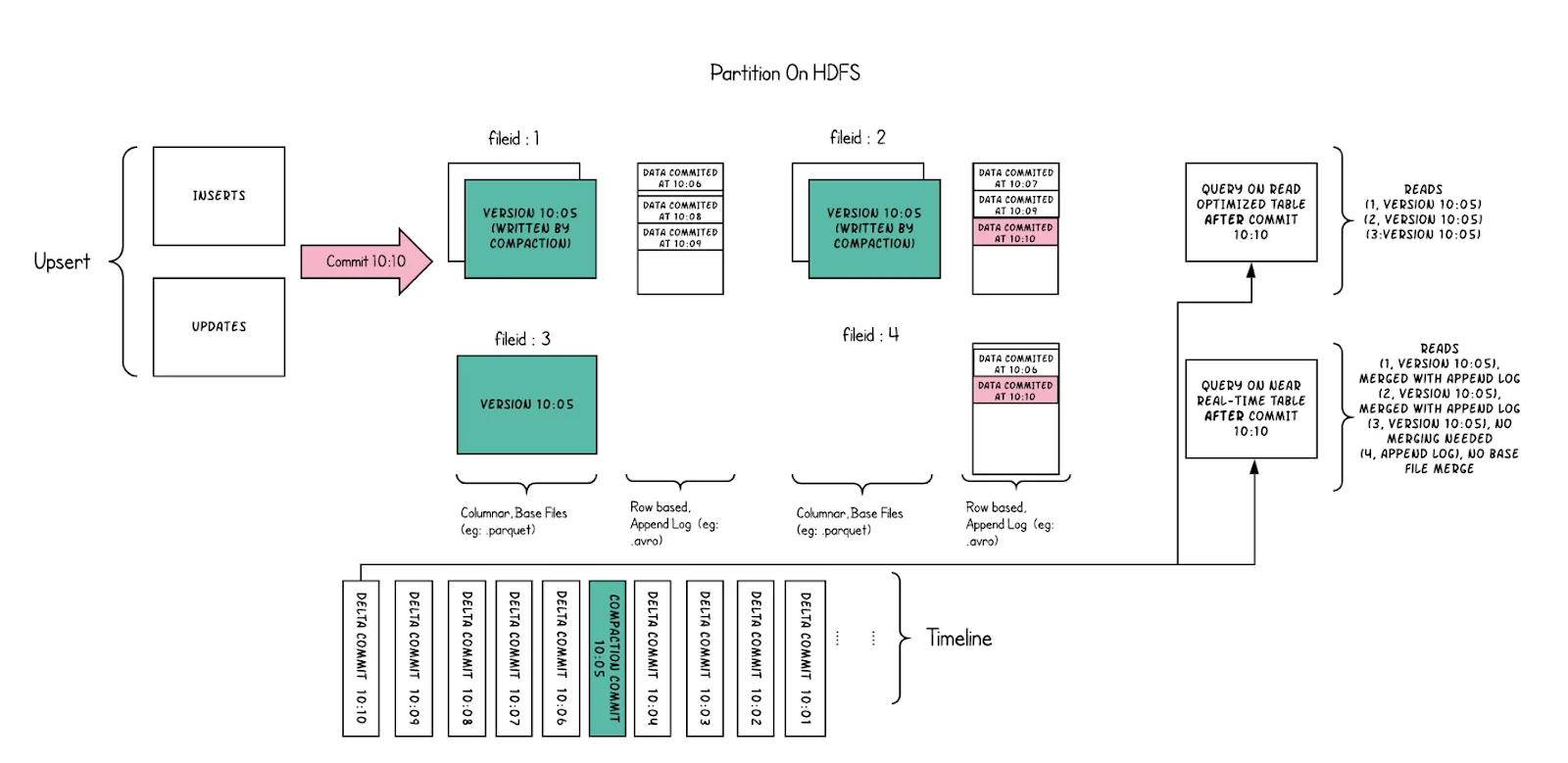

- Merge on Read (MOR): Stores data using a combination of columnar (Parquet) and row-based file formats (Avro); offers support for snapshot queries, incremental queries, and read-optimized queries

Data being written into a Merge On Read (MOR) table - Source: Apache Hudi.
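The trade-off between the two table types can be sketched in a few lines of plain Python. This is a simplified illustration, not Hudi's implementation: `CopyOnWriteFile` and `MergeOnReadFile` are hypothetical classes, a dictionary stands in for a columnar base file, and a list stands in for the row-based log.

```python
class CopyOnWriteFile:
    """Toy COW file group: every update rewrites the whole base file."""

    def __init__(self, records):
        self.base = dict(records)  # stands in for a columnar (Parquet) base file
        self.rewrites = 0

    def upsert(self, key, value):
        new_base = dict(self.base)  # copy the whole file,
        new_base[key] = value       # apply the change,
        self.base = new_base        # and swap in the new version
        self.rewrites += 1

    def read_snapshot(self):
        return dict(self.base)


class MergeOnReadFile:
    """Toy MOR file group: updates are appended to a log and merged at read time."""

    def __init__(self, records):
        self.base = dict(records)  # stands in for a columnar (Parquet) base file
        self.log = []              # stands in for a row-based (Avro) log file

    def upsert(self, key, value):
        self.log.append((key, value))  # cheap write: no base file rewrite

    def read_snapshot(self):
        # Snapshot query: merge the base file with the log, latest write wins.
        merged = dict(self.base)
        for key, value in self.log:
            merged[key] = value
        return merged

    def read_optimized(self):
        # Read-optimized query: base file only, so it can lag until compaction.
        return dict(self.base)

    def compact(self):
        # Compaction folds the log into a new base file.
        self.base = self.read_snapshot()
        self.log = []


mor = MergeOnReadFile({"trip-1": "requested"})
mor.upsert("trip-1", "completed")
print(mor.read_optimized())  # still reflects the base file only
print(mor.read_snapshot())   # includes the logged update
```

In this sketch, COW pays the cost at write time and keeps reads simple, while MOR keeps writes cheap and defers the merge to read time or to a later compaction.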

To provide consistent results for concurrent readers and writers, Hudi enforces snapshot isolation using various concurrency control methodologies.

Overall, the key idea behind the creation of Hudi was to bring the “core database functionality directly to a data lake - tables, transactions, efficient upserts/deletes, advanced indexes, ingestion services, data clustering/compaction optimizations, and concurrency control all while keeping your data in open file formats.”

Because of these features, Hudi became a viable option for data lakehouses that needed to support transactional workloads, especially with high volumes of upserts, deletes, and real-time streaming ingestion workloads.

Apache Iceberg alternative #2: Delta Lake, Databricks-native open table format

In 2017, Databricks created Delta Lake to support transactions and schema enforcement in data lakes and data lakehouses using Apache Parquet. In 2019, the project was open-sourced and donated to the Linux Foundation.

Why Delta Lake became an option for data lakehouse setups in Databricks-based environments

Delta Lake was created specifically to address the lack of some of the key features when using Apache Spark as the compute engine on top of a data lakehouse.

“Delta Lake provides ACID transactions, scalable metadata handling, and unifies streaming and batch data processing. Delta Lake runs on top of your existing data lake and is fully compatible with Apache Spark APIs.” - Delta Lake
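One general way to get atomic commits on top of plain files is an ordered log in which each commit is a new, numbered file, and a writer only succeeds if it is the first to create the next number. The sketch below illustrates that optimistic pattern under simplifying assumptions: it uses a local directory, and `ToyLog`, `try_commit`, and the `add`/`remove` action shape are hypothetical names for illustration. It is not Delta Lake's actual implementation or API.

```python
import json
import os
import tempfile


class ToyLog:
    """Toy ordered commit log on a local directory (illustration only)."""

    def __init__(self, log_dir):
        self.log_dir = log_dir
        os.makedirs(log_dir, exist_ok=True)

    def _commit_files(self):
        return sorted(n for n in os.listdir(self.log_dir) if n.endswith(".json"))

    def latest_version(self):
        names = self._commit_files()
        return int(names[-1].split(".")[0]) if names else -1

    def try_commit(self, read_version, actions):
        # Optimistic concurrency: try to create the next numbered commit file.
        # Mode "x" fails if the file exists, so only one writer wins each version.
        path = os.path.join(self.log_dir, f"{read_version + 1:020d}.json")
        try:
            with open(path, "x") as handle:
                json.dump(actions, handle)
        except FileExistsError:
            return False  # another writer committed first; re-read and retry
        return True

    def live_files(self):
        # Replay the commits in order to reconstruct the current table state.
        files = set()
        for name in self._commit_files():
            with open(os.path.join(self.log_dir, name)) as handle:
                for action in json.load(handle):
                    if action["op"] == "add":
                        files.add(action["path"])
                    else:
                        files.discard(action["path"])
        return files


with tempfile.TemporaryDirectory() as tmp:
    log = ToyLog(os.path.join(tmp, "toy_log"))
    seen = log.latest_version()  # both writers start from the same version
    print(log.try_commit(seen, [{"op": "add", "path": "a.parquet"}]))  # first writer wins
    print(log.try_commit(seen, [{"op": "add", "path": "b.parquet"}]))  # second writer must retry
    print(log.live_files())
```

The losing writer would typically re-read the log, check whether its changes conflict with what was committed in the meantime, and try again against the new version.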

Given Delta Lake’s tight coupling with Apache Spark, Apache Parquet, and the wider Databricks ecosystem, it is usually a good choice when your data stack already runs on Databricks or is otherwise Spark-focused. This is especially true when you need ACID transactions, which Delta Lake supports much as Apache Iceberg does.

In v3.3.0, its latest release at the time of writing, Delta Lake saw significant improvements for backfilling, liquid clustering, and vacuuming, among other things.

Delta Lake compatibility with file formats other than Parquet

It’s important to note that unlike Apache Iceberg and Apache Hudi, Delta Lake only works with one underlying file format, i.e., Apache Parquet. That may be a limitation if you’re trying to architect a data lakehouse based on the principles of openness and interoperability.

Delta Lake’s newer addition, UniForm, is an interoperability feature that allows you to read Delta Lake tables with Iceberg and Hudi clients, as long as your data files are in the Parquet file format.

Apache Iceberg alternative #3: Apache Paimon, Alibaba’s table format for streaming

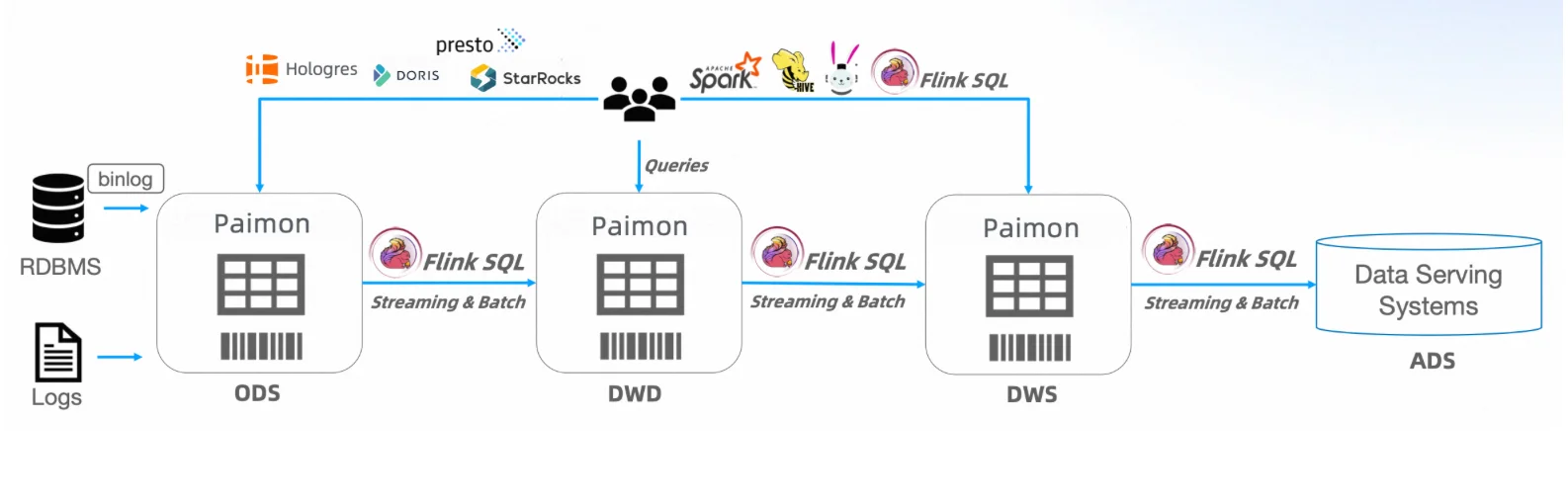

The latest addition to Apache Iceberg’s alternatives is Apache Paimon. In 2022, Alibaba Cloud developed Paimon as Flink Table Store. Its key characteristic was to bring Log Structured Merge (LSM) tree architecture to popular columnar file formats like Parquet and ORC.

However, Paimon is primarily known for optimizing streaming-first workloads for real-time data processing use cases, especially for Apache Flink, while also integrating with Apache Spark.
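For readers unfamiliar with LSM trees, the following is a minimal, in-memory sketch of the general idea: writes land in a small buffer, the buffer is flushed to immutable sorted runs, reads check the newest data first, and compaction merges runs. `ToyLSM` and its methods are hypothetical names for illustration. This is not Paimon's implementation; there, the sorted runs would be files in a columnar format such as Parquet or ORC rather than Python lists.

```python
import bisect


class ToyLSM:
    """Toy LSM tree: buffered writes, immutable sorted runs, merge on compaction."""

    def __init__(self, memtable_limit=2):
        self.memtable = {}  # in-memory write buffer
        self.runs = []      # flushed sorted runs of (key, value), newest last
        self.memtable_limit = memtable_limit

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        # Turn the buffer into an immutable run sorted by key.
        if self.memtable:
            self.runs.append(sorted(self.memtable.items()))
            self.memtable = {}

    def get(self, key):
        # Newest data wins: check the buffer first, then runs from newest to oldest.
        if key in self.memtable:
            return self.memtable[key]
        for run in reversed(self.runs):
            index = bisect.bisect_left(run, (key,))  # binary search within the sorted run
            if index < len(run) and run[index][0] == key:
                return run[index][1]
        return None

    def compact(self):
        # Merge all runs into one, applying them oldest to newest so newer values win.
        merged = {}
        for run in self.runs:
            merged.update(run)
        self.runs = [sorted(merged.items())] if merged else []


store = ToyLSM(memtable_limit=2)
for key, value in [("k1", "v1"), ("k2", "v2"), ("k1", "v1-updated"), ("k3", "v3")]:
    store.put(key, value)
print(len(store.runs))  # number of sorted runs before compaction
store.compact()
print(store.runs)       # a single merged run with the latest value per key
```

The appeal for streaming workloads is that each write is a cheap append to the buffer, while the cost of merging is paid later and in bulk during compaction.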

Why Apache Paimon became an option for Apache Flink use cases

Apache Paimon departs from being a direct alternative to Apache Iceberg because it is designed to solve the problem of building a low-latency real-time lakehouse with Flink and Spark at the center.

On the other hand, Apache Iceberg takes a more batch-based and general-purpose approach to the architecture. Paimon was designed around Flink, so it scores lower than Iceberg in terms of multi-engine compatibility.

To be more interoperable, Apache Paimon supports the generation of metadata compatible with the Iceberg specification. This allows Iceberg readers to use Paimon tables directly.

Several other projects, such as Apache XTable and Delta Lake UniForm, are trying to solve the problem of open table format interoperability, but none of them currently supports Apache Paimon.

Paimon’s key features include handling the following:

- Real-time updates

- Large-scale append-based data processing

- Petabyte-scale streaming data lakes with ACID transactions

- Time travel

- Schema evolution capabilities

Apache Paimon for Apache Flink and Apache Spark use cases - Source: Alibaba Cloud.

You can learn more about Apache Paimon and its roadmap here.

Unlock business value from table formats with a metadata control plane

The evolution of table formats is in its early stages. Most table formats have only seen their first major release, but there is already a move toward open architectures for data lakes and data lakehouses to avoid vendor lock-in.

Apache Iceberg and its alternatives discussed in this article play a key role in such environments – providing efficient data file management, ACID transactions, point-in-time querying, version control, and more.

While all of these Apache Iceberg alternatives provide a core metadata layer, they need a fully-featured metadata platform, a control plane for metadata, for:

- Granular data lineage

- Role-based access control

- Business glossary

- Setting and enforcing data governance policies from a single pane of glass

That’s where Atlan comes into the picture, giving you a control plane for all your metadata. For Iceberg in particular, Atlan integrates with a REST-based implementation of the Iceberg catalog.

Bottom line

The rise of Apache Iceberg and its alternatives reflects the ongoing shift toward open, scalable, and interoperable table formats for data lakes and lakehouses. Apache Hudi, Delta Lake, and Apache Paimon each cater to different architectures and workloads, from transactional updates to real-time streaming.

While these Apache Iceberg alternatives provide a strong metadata foundation, organizations need a comprehensive metadata control plane like Atlan to maximize value from their data ecosystems.

Head to Atlan’s official documentation to learn more.

Apache Iceberg alternatives: Related reads

- Apache Iceberg: All You Need to Know About This Open Table Format in 2025

- Apache Iceberg Data Catalog: What Are Your Options in 2025?

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
