Understanding Luigi: Spotify’s Open-Source Data Orchestration Tool for Batch Processing


Luigi is a lightweight Python workflow scheduler built at Spotify in late 2011 and open-sourced in 2012. Spotify built Luigi mainly for orchestrating data assets in the Hadoop ecosystem.

In this article, we will explore Luigi’s capabilities, architecture, and core features as an open-source data orchestrator.


Table of contents

- What is Luigi?

- An overview of Luigi and its approach to data orchestration

- Core capabilities in data orchestration

- Getting started with Luigi

- Summing up

- Luigi: Related Reads

What is Luigi?

Luigi is a data orchestration tool that helps you build complex pipelines of batch jobs. These jobs could be anything, such as:

- A Hive query

- A Hadoop job in Java

- A Spark job in Scala or Python

- A Python snippet

- A machine learning algorithm

Luigi can handle dependencies, workflows, and failures while offering pipeline visualization and command-line integration.

Luigi shares similarities with GNU Make, Oozie, and Azkaban. However, unlike Oozie and Azkaban, Luigi is not limited to Hadoop-based workloads and is easy to extend with other kinds of tasks.

Luigi: Origins at Spotify

As mentioned earlier, Luigi was originally developed at Spotify by Erik Bernhardsson and Elias Freider in 2011. The goal was to automate the tasks behind Spotify's music streaming service and to build reproducible processes.

Spotify used Luigi to define workflows as recursively dependent tasks. - Source: Engineering at Spotify.

Erik Bernhardsson calls Luigi a tool that does the “plumbing of connecting lots of tasks into complicated pipelines, especially if those tasks run on Hadoop.”

Subsequently, it was adopted by companies such as Asana, Buffer, Foursquare, and Stripe for task orchestration and dependency management.

Spotify used Luigi for big data processing and personalized music recommendations - Source: Twitter.

Luigi predates Airflow, so it has no first-class DAG object of the kind found in Airflow and, in various forms, in later orchestrators such as Dagster and Prefect. Instead, the dependency graph is implicit: it emerges from each task's requires() method and is resolved at run time.

Without an explicit DAG to define and inspect, building highly complex pipelines with many dependencies and branches can be difficult in Luigi. Spotify itself no longer actively maintains Luigi and has shifted to a different orchestration tool, Flyte.

An overview of Luigi and its approach to data orchestration

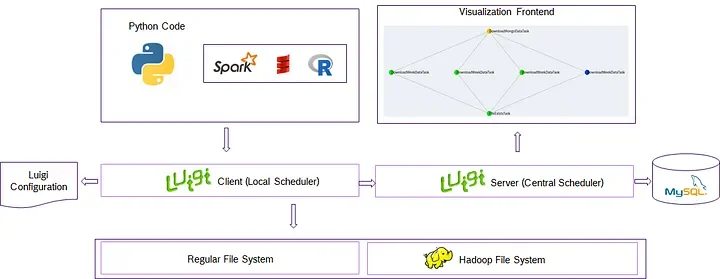

Luigi's architecture is straightforward: the Luigi client runs Python/R tasks, while the server (i.e., the centralized scheduler) monitors them.

The Luigi client helps in executing Python/R tasks and the Luigi server handles monitoring - Source: Medium.

The centralized scheduler serves two purposes:

- Ensure that two instances of the same task are not running simultaneously

- Provide visualization of all the tasks running

This scheduler itself doesn’t execute anything. It merely helps with monitoring your Luigi pipelines.

Using Luigi’s central scheduler - Source: Luigi.

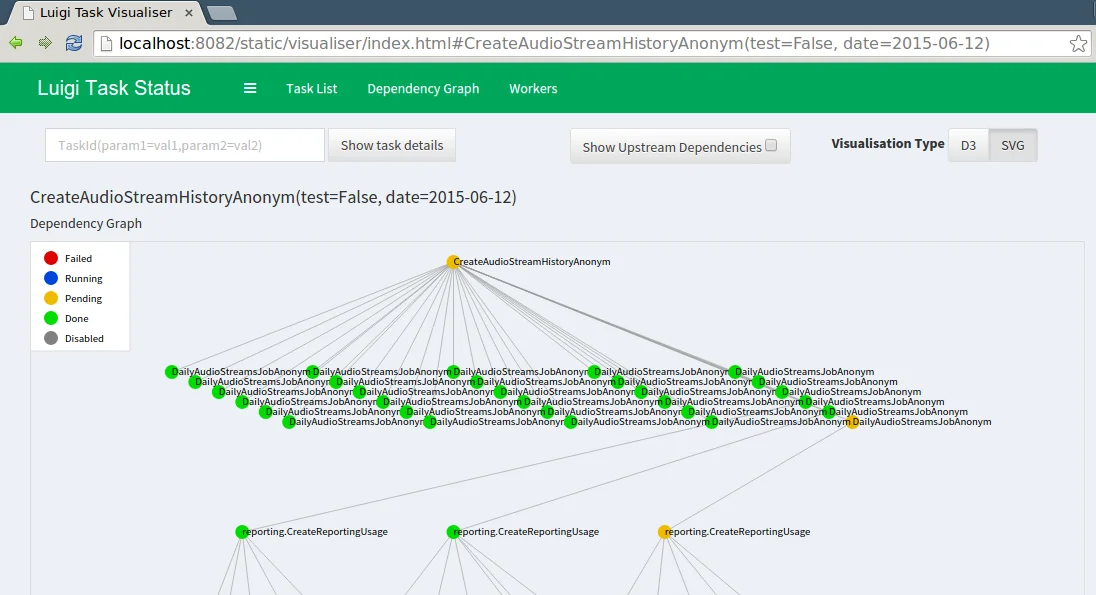

Lastly, a web interface lets you visualize and monitor the data pipeline. You can search and filter through your tasks.

The interface shows the task graph, the dependencies, the execution order, the progress, and the status of each task.

Luigi’s web-based user interface - Source: PyPI.

The two building blocks of a Luigi pipeline are Tasks and Targets. Let’s explore further.

Tasks and targets: The building blocks of pipelines in Luigi

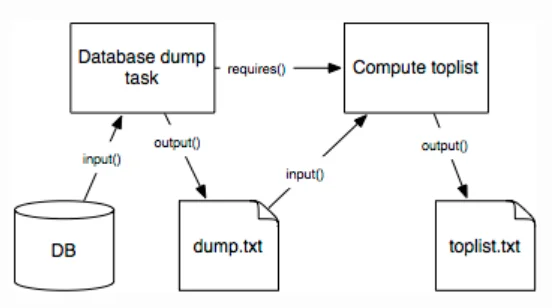

A task performs computations and consumes targets generated by other tasks. It's where the execution takes place.

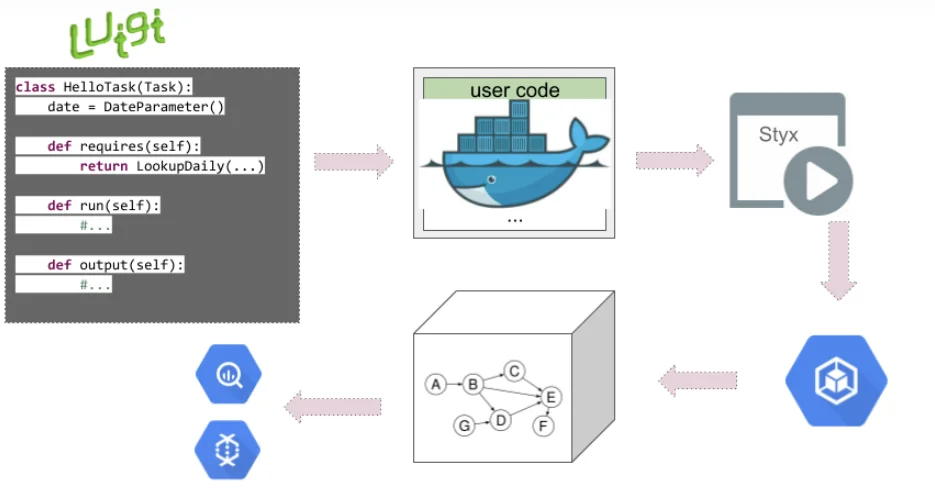

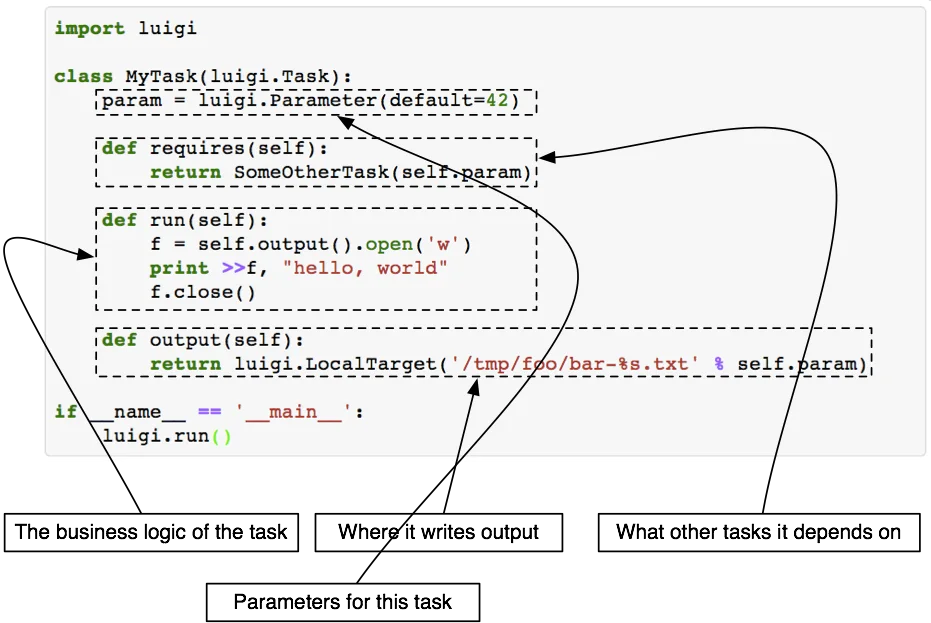

Tasks are defined by subclassing Luigi's Task class and implementing three methods:

- requires(): Specifies dependencies

- run(): Contains the actual code required to run a job, i.e., the business logic

- output(): Returns one or more target objects

An illustration of tasks in Luigi, along with their implementation methods - Source: Luigi.

Generic outline of a task in Luigi - Source: Luigi.

Meanwhile, a target is a resource generated by a task. This could be an HDFS file, assets in a database, etc.

A Task will define one or more Targets as output, and the Task is considered complete if and only if each of its output Targets exists.

Luigi has a built-in toolbox of Targets, such as:

- LocalTarget

- HdfsTarget

- luigi.contrib.s3.S3Target

- luigi.contrib.ssh.RemoteTarget

- luigi.contrib.ftp.RemoteTarget

- luigi.contrib.mysqldb.MySqlTarget

- luigi.contrib.redshift.RedshiftTarget

Besides tasks and targets, another important concept in Luigi is the Parameter class.

Parameters in Luigi

Parameters are Luigi's equivalent of creating a constructor for each Task. For example, if one of your tasks generates a daily sales report, parameterizing the date makes the task reusable.

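```python
import datetime

import luigi


class MyTask(luigi.Task):
    # Defaults to today's date; override with --date YYYY-MM-DD on the command line
    date = luigi.DateParameter(default=datetime.date.today())
```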

Core capabilities in data orchestration

Architecting Data-Intensive Applications highlights the following key features of Luigi:

- Has a built-in mechanism to parallelize workflow steps

- Offers a set of commonly-used task templates that speed up adoption

- Supports Python MapReduce jobs in Hadoop, Hive, and Pig

- Includes filesystem abstractions for HDFS and local files that make file system operations atomic, so a pipeline does not crash in a state containing partial data

Getting started with Luigi

Here are the steps to begin working with Luigi for data orchestration:

- Install the latest version of Luigi from PyPI

- Write your first pipeline

- Define the tasks and dependencies

- Run the Luigi workflow

- Visualize the outcome

Summing up

Luigi, Spotify's original orchestration tool, is an open-source data orchestrator for building complex pipelines of batch jobs. It was created to automate tasks for Spotify's music streaming service, and it has since been adopted by various companies for task orchestration and dependency management.

However, it's important to recognize Luigi's limitations: Spotify itself has moved on to Flyte for better visibility of its workflows, automation, and lower maintenance overhead.

Luigi is ideal when you need a lightweight workflow tool that can handle simple dependencies. Consider your requirements and primary use cases before deciding.

Luigi: Related Reads

- What is data orchestration: Definition, uses, examples, and tools

- Open source ETL tools: 7 popular tools to consider in 2023

- Open-source data observability tools: 7 popular picks in 2023

- ETL vs. ELT: Exploring definitions, origins, strengths, and weaknesses

- 10 popular transformation tools in 2023

- Dagster: Here’s everything you need to know about this open-source data orchestrator
