Top Data Quality Monitoring Tools for 2025

Last Updated on: June 25th, 2025 | 12 min read


Quick Answer: What are data quality monitoring tools?

Data quality monitoring tools are platforms that help data teams track, measure, and maintain the reliability of their data in real time. These tools continuously evaluate datasets against defined rules or thresholds (covering dimensions such as completeness, accuracy, timeliness, and consistency) to surface issues before they impact downstream consumers like analytics, reporting, or machine learning models.

As data pipelines grow more complex and business decisions become increasingly data-driven, these tools are essential for maintaining trust in data. Popular data quality monitoring tools include Soda, Anomalo, Bigeye, Lightup, and Monte Carlo.

Up next, we’ll look at the key features of data quality monitoring tools, along with a brief introduction to some of the popular tools in this space. You will also learn how a metadata activation platform like Atlan can strengthen data quality monitoring.

Table of Contents

- Data quality monitoring tools explained

- What are the key features of data quality monitoring tools?

- What are some of the popular data quality monitoring tools?

- What do you need to successfully implement a data quality monitoring tool?

- Data quality monitoring tools: Summing up

- Data quality monitoring tools: Frequently asked questions (FAQs)

Data quality monitoring tools explained

With more organizations using real-time data to drive their businesses, closely monitoring data quality has become critical. According to the 2024 Vanson Bourne report on AI, poor data quality leads to losses of over $400 million. The report also noted that as organizations begin their AI journey, data quality becomes even more important, and poor data quality can cause even greater losses.

Data quality monitoring tools let you track and monitor data quality metrics for the data you bring into your data platform. They let you write data quality rules, send alerts and notifications when data quality SLAs are breached, and understand the overall state of data quality through a data quality dashboard.

What are the key features of data quality monitoring tools?

Data quality monitoring tools can integrate with data pipelines, orchestration engines, data processing engines, data catalogs, metadata platforms, and BI platforms, among others. Their main value is granular visibility into your organization’s data quality. Data quality monitoring tools have the following salient features:

- Connectors: Connectors let you connect with data platforms as well as ingestion, orchestration, transformation, and visualization tools, so that you can define, embed, and track data quality checks natively. A broad range of connectors also helps you integrate the various tools in your stack and consolidate data quality monitoring for your organization.

- Checks and validations: These lay the foundation for enforcing data quality by defining what should be tested and validated, and what metrics should be tracked to gain confidence in the data. For example, you might have a certain tolerance for null values in a specific column of a table coming in from a specific data source.

- Data quality dashboards: Data quality dashboards give you complete visibility into your organization’s data quality metrics in an easy-to-read, widely consumable format. Using them, you can spot and act on data quality issues affecting specific data assets.

- Alerts and notifications: These keep you on top of potential data quality issues by notifying you as soon as a metric approaches or crosses a predefined threshold. This way, you can resolve the issue before it becomes a bigger problem for downstream consumers of the bad data, such as reporting and analytics use cases.
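To make the checks-and-alerts loop concrete, here is a minimal, tool-agnostic sketch in Python. It assumes rows arrive as a list of dictionaries, and every name in it (`check_null_tolerance`, `alert_on_failure`, the `orders` sample) is hypothetical rather than part of any vendor’s API. Real tools push the alert to Slack, email, or an incident system instead of returning a string.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckResult:
    name: str
    value: float
    threshold: float
    passed: bool


def null_rate(rows: list, column: str) -> float:
    """Fraction of rows where `column` is missing or None."""
    if not rows:
        return 0.0
    nulls = sum(1 for row in rows if row.get(column) is None)
    return nulls / len(rows)


def check_null_tolerance(rows: list, column: str, max_null_rate: float) -> CheckResult:
    """A validation rule: the column's null rate must not exceed the tolerance."""
    rate = null_rate(rows, column)
    return CheckResult(f"null_rate({column})", rate, max_null_rate, rate <= max_null_rate)


def alert_on_failure(result: CheckResult) -> Optional[str]:
    """Build an alert message for a failed check (None when the check passes)."""
    if result.passed:
        return None
    return (f"ALERT: {result.name} = {result.value:.0%} "
            f"exceeds tolerance of {result.threshold:.0%}")


orders = [
    {"order_id": 1, "email": "a@example.com"},
    {"order_id": 2, "email": None},
    {"order_id": 3, "email": "c@example.com"},
    {"order_id": 4, "email": None},
]
result = check_null_tolerance(orders, "email", max_null_rate=0.10)
print(alert_on_failure(result))  # ALERT: null_rate(email) = 50% exceeds tolerance of 10%
```

Separating the check (which produces a result) from the alert (which decides what to do with it) mirrors how these tools let you reuse one rule across dashboards and notifications.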

Now, let’s look at some data quality monitoring tools that implement the features mentioned above.

What are some of the popular data quality monitoring tools?

Many tools and technologies on the market help you get on top of data quality issues in your organization.

Some of the tools fall into the category of application-level observability tools, similar to Datadog and New Relic, but for data platforms. Others are more closely tied to data engineering and data science workflows, including ETL, MLOps lifecycles, and others.

In this article, we’re covering the latter. Let’s look at some of the popular data quality monitoring tools today:

- Soda

- Monte Carlo

- Anomalo

- Bigeye

- Lightup

Soda

Soda is a data quality monitoring tool that helps you monitor data quality, test data as part of CI/CD pipelines, enforce data contracts, and operationalize data quality. It offers a free and open-source version called Soda Core, as well as a managed cloud-based version, Soda Cloud, that you can integrate with your data stack.

Soda Core is a Python library that can be embedded in most data workflows running on systems such as PostgreSQL, Snowflake, BigQuery, and Amazon Athena, among others. It takes a declarative approach to testing through its domain-specific language for data reliability, SodaCL (Soda Checks Language).
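SodaCL itself is written in YAML, so the Python sketch below does not reproduce its syntax. It only illustrates the declarative idea: checks are declared as data (a metric, a comparison, and a bound), and a generic engine evaluates them against a dataset. The check format, `compute_metric`, and `run_checks` are hypothetical.

```python
import operator

# Hypothetical declarative spec: each check names a metric, a comparison operator, and a bound.
# This mimics the idea behind SodaCL; it is not SodaCL syntax.
CHECKS = [
    {"metric": "row_count", "op": ">", "value": 0},
    {"metric": "missing_count", "column": "email", "op": "=", "value": 0},
    {"metric": "duplicate_count", "column": "customer_id", "op": "=", "value": 0},
]

OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le, "=": operator.eq}


def compute_metric(rows, metric, column=None):
    """Compute one named metric over a list of row dictionaries."""
    if metric == "row_count":
        return len(rows)
    values = [row.get(column) for row in rows]
    if metric == "missing_count":
        return sum(v is None for v in values)
    if metric == "duplicate_count":
        # Count surplus occurrences of repeated non-null values.
        present = [v for v in values if v is not None]
        return len(present) - len(set(present))
    raise ValueError(f"unknown metric: {metric}")


def run_checks(rows, checks):
    """Evaluate every declared check and return (metric, column, actual, passed) tuples."""
    results = []
    for check in checks:
        actual = compute_metric(rows, check["metric"], check.get("column"))
        passed = OPS[check["op"]](actual, check["value"])
        results.append((check["metric"], check.get("column"), actual, passed))
    return results


customers = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 2, "email": "b@example.com"},
]
for metric, column, actual, passed in run_checks(customers, CHECKS):
    print(metric, column, actual, "PASS" if passed else "FAIL")
```

Adding a new check means adding a line of data rather than writing new code, which is the main appeal of the declarative style.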

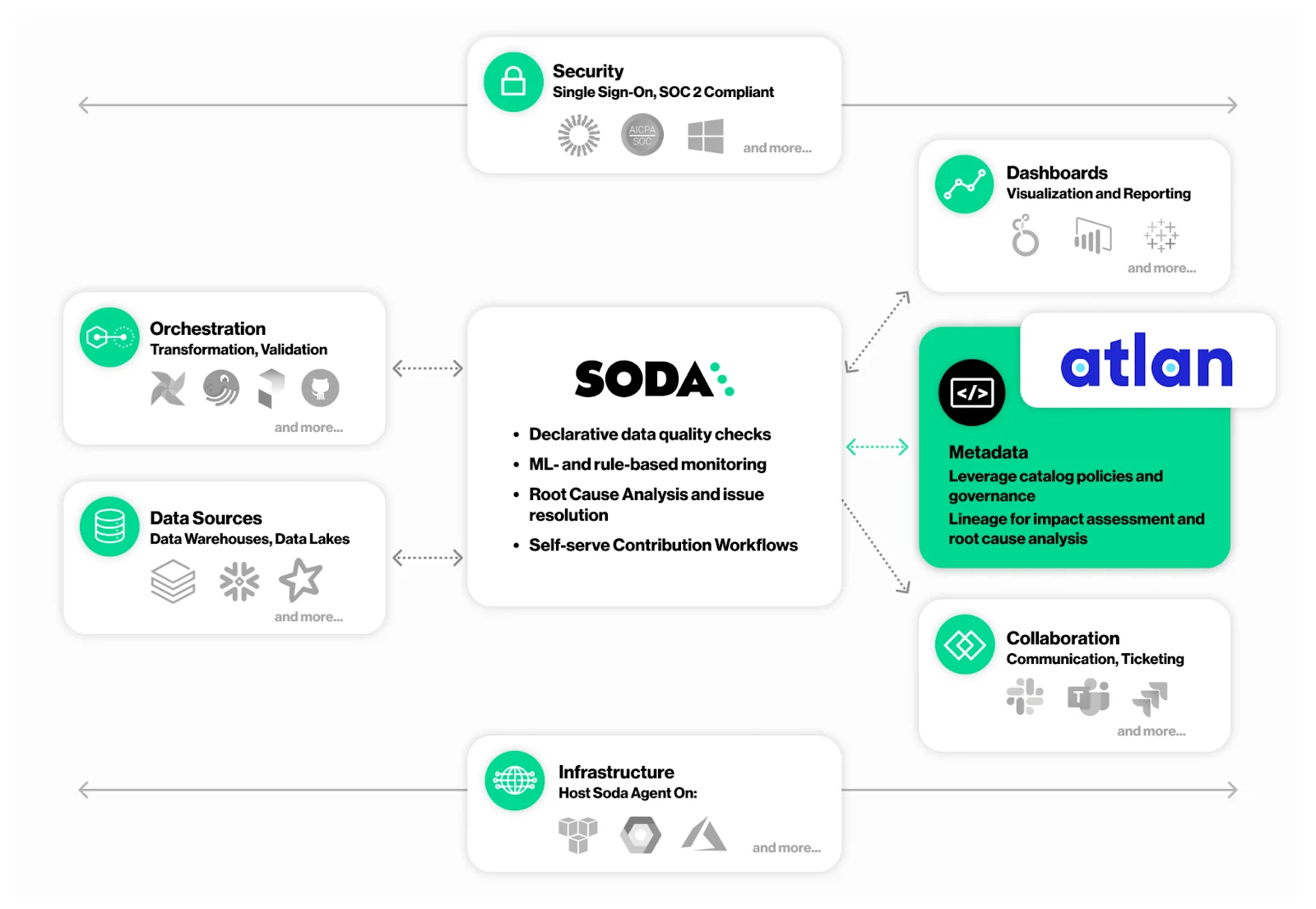

To provide you with an extensive data quality monitoring experience, Soda also integrates with Atlan. Both tools work better together, as Atlan offers users a better way to search, discover, and share data in a trustworthy manner, enabling self-service data access across the organization. At the same time, Soda helps automate data quality checks and rule management.

Soda and Atlan for data quality monitoring - Image by Atlan.

Check out the official documentation from Atlan and Soda to learn more about this integration and how both tools can offer you a complete view of your organization’s data quality.

Monte Carlo

Monte Carlo is a data and AI observability platform that helps with data quality monitoring, testing, and report and dashboard integrity, among other things. The platform uses monitoring and observability agents that leverage AI to recommend data quality rules and assist with root cause analysis when data quality issues arise.

Monte Carlo has various monitors for freshness, volume, schemas, and metrics, among other things. It also provides you with the option to set up alerts and notifications based on breaches in data quality thresholds, as well as agreed-upon service levels within or outside the organization. It also provides several reports and dashboards, including the Table Health Dashboard and the Data Operations Dashboard, enabling you to monitor data quality continuously.
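Freshness and volume monitors boil down to simple comparisons. The sketch below uses fixed, hand-picked thresholds as an assumption for illustration. Monte Carlo configures its monitors in its own platform, so nothing here reflects its actual API, and `check_freshness` and `check_volume` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_updated: datetime, max_age: timedelta, now: datetime) -> bool:
    """Pass if the table was updated no longer than `max_age` before `now`."""
    return now - last_updated <= max_age


def check_volume(row_count: int, expected: int, tolerance: float) -> bool:
    """Pass if `row_count` lies within +/- `tolerance` (a fraction) of `expected`."""
    lower = expected * (1 - tolerance)
    upper = expected * (1 + tolerance)
    return lower <= row_count <= upper


now = datetime(2025, 6, 25, 12, 0, tzinfo=timezone.utc)
last_load = now - timedelta(hours=30)

print(check_freshness(last_load, max_age=timedelta(hours=24), now=now))  # False: data is 30 hours old
print(check_volume(9_200, expected=10_000, tolerance=0.05))  # False: below the 9,500 lower bound
print(check_volume(9_800, expected=10_000, tolerance=0.05))  # True
```

Passing `now` explicitly keeps the freshness check deterministic and easy to test.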

To provide a comprehensive view of data quality across your organization, accessible from a single control plane, Monte Carlo also integrates with Atlan, allowing incidents captured by Monte Carlo to be surfaced in Atlan. This integration enables data engineers, analysts, and business users to examine data quality issues, incidents, and their impact on any downstream data assets through Atlan’s lineage interface.
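Impact analysis through lineage is essentially a graph traversal: starting from the asset with a data quality incident, walk every downstream edge. The sketch below assumes a hypothetical in-memory lineage graph with made-up asset names; Atlan builds and stores lineage itself, so this only illustrates the idea.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets that read from it directly.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.exec_kpis"],
    "marts.customer_ltv": [],
    "dashboard.exec_kpis": [],
}


def downstream_impact(graph, failing_asset):
    """Breadth-first search returning every asset downstream of `failing_asset`."""
    impacted = []
    seen = {failing_asset}
    queue = deque([failing_asset])
    while queue:
        asset = queue.popleft()
        for child in graph.get(asset, []):
            if child not in seen:  # guard against revisiting shared descendants
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


print(downstream_impact(LINEAGE, "staging.orders"))
# ['marts.revenue', 'marts.customer_ltv', 'dashboard.exec_kpis']
```

Breadth-first order lists the directly affected assets first, which is usually the order in which owners want to be notified.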

For more information on how Atlan and Monte Carlo work better together to improve data quality in your organization, head over to the official documentation.

Anomalo

Anomalo is an automated data quality monitoring platform that offers machine-learning-powered data quality checks, automatic identification of anomalous data, and comprehensive monitoring of all your tables, providing a rich view of your organization’s data quality.
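Anomalo’s detection is machine-learning based and is not reproduced here. As a much simpler stand-in for the same idea, this sketch flags a day’s row count as anomalous when it falls more than three standard deviations from recent history (a z-score test). The threshold, the `is_anomalous` name, and the sample history are assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it lies more than `z_threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # perfectly stable history: any change is unusual
    return abs(latest - mu) / sigma > z_threshold


daily_row_counts = [10_120, 9_980, 10_050, 10_010, 9_940, 10_070, 10_030]
print(is_anomalous(daily_row_counts, 4_200))   # True: a sudden drop in volume
print(is_anomalous(daily_row_counts, 10_060))  # False: within normal variation
```

A fixed z-score ignores seasonality and trends, which is exactly the gap that ML-based detectors aim to close.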

Anomalo supports automatic root cause analysis based on the data quality incidents reported. This is also accompanied by incident resolution workflows that suit your organization. Moreover, you can also get a lineage view of the data assets registered within Anomalo, so that you can assess data quality for those assets.

For a more comprehensive view of data quality across your data estate, Anomalo integrates with Atlan. With this integration, you can get real-time updates from Anomalo in Atlan, which is useful in building and improving trust in data within the organization.

Bigeye

Bigeye is a data observability platform that helps organizations build trust in their data so that users can rely on it for analytics and reporting. Bigeye lets you define data quality rules and detect anomalies in data, while also providing a view of data lineage.

You can connect Bigeye to any of the popular data platforms or sources. Once you do that, Bigeye’s Autometrics will begin monitoring data quality for data pipelines that utilize those sources. You can also use Collections to organize monitoring alerts and notifications. The tool also assists with rule-based anomaly detection, enabling you to address threshold violations related to data quality issues and resolve them effectively.

Bigeye also integrates with Atlan, where issue-related tags and certificates are synced with Atlan, so that users can see those as trust signals in Atlan’s lineage interface. Once the data quality issues and incidents are resolved, the tags are removed from Atlan. This helps you build trust in data within your organization.

Lightup

Lightup is a data quality and data observability platform that helps you run continuous data quality checks across all your data assets. It combines extensive data profiling, data quality monitoring, and anomaly detection to support efficient, ongoing data quality management.

Lightup has four types of metrics (column metrics, comparison metrics, table metrics, and SQL metrics) to help you track data quality from the coarsest to the most granular level. On top of these, Lightup also provides several automatically created metrics, which you can enable as Auto metrics, metadata metrics, and deep auto metrics.
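The four metric categories map naturally onto SQL. The sketch below uses Python’s built-in `sqlite3` module and two hypothetical tables (`source_orders`, `target_orders`) to compute one example of each. Lightup defines and configures these metrics in its own product, so the queries only illustrate what each category measures.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE source_orders (order_id INTEGER, amount REAL);
    CREATE TABLE target_orders (order_id INTEGER, amount REAL);
    INSERT INTO source_orders VALUES (1, 20.0), (2, NULL), (3, 35.5), (4, 12.0);
    INSERT INTO target_orders VALUES (1, 20.0), (2, NULL), (3, 35.5);
""")


def scalar(sql):
    """Run a query that returns a single value."""
    return conn.execute(sql).fetchone()[0]


# Table metric: a property of the whole table.
row_count = scalar("SELECT COUNT(*) FROM target_orders")

# Column metric: a property of one column (here, the fraction of NULLs).
null_fraction = scalar("SELECT AVG(amount IS NULL) FROM target_orders")

# Comparison metric: compares two datasets, e.g. a source and its replicated target.
missing_rows = scalar("SELECT COUNT(*) FROM source_orders") - row_count

# SQL metric: any custom query that returns a number.
negative_amounts = scalar("SELECT COUNT(*) FROM target_orders WHERE amount < 0")

print(row_count, round(null_fraction, 2), missing_rows, negative_amounts)  # 3 0.33 1 0
```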

To give you a fuller picture of data quality, Lightup also integrates with Atlan. This integration uses Atlan’s API to log data quality information into Atlan’s catalog. Once configured, it lets you view incident announcements, table monitoring information, overall health, and freshness data within Atlan’s interface. You can also review the data quality information coming from Lightup in Atlan’s data lineage interface to understand the source and impact of any data quality incidents.

What do you need to successfully implement a data quality monitoring tool?

While many of these tools offer excellent data quality features, they often create a separate layer of data quality definitions and metrics that is out of sync with other key contextual and structural information about the data. This is why there’s a need to drive data quality from a single place that lets you catalog, discover, and govern all your data assets: a metadata control plane.

A unified metadata control plane lets you tie together structure, context, test results, data quality metrics, governance, and other elements, and activate all of them through the single metadata layer that the control plane provides.

Atlan is a metadata activation platform that provides the unified metadata control plane described above. In addition to its own native data quality and governance features, it integrates with several of the popular data quality monitoring tools mentioned above, including Soda, Anomalo, and Monte Carlo.

Moreover, as more data platforms, such as Databricks and Snowflake, release their own native data quality functionality, it is important to leverage it too. Atlan integrates with the native data quality metrics and tools of both Databricks and Snowflake and brings them into the Atlan Data Quality Studio, where you can define and track data quality metrics in a central place.

Data quality monitoring tools: Summing up

Data quality is one of the most important pillars of data engineering. Even the most performant pipelines and the most advanced access control and security systems won’t amount to much if the data quality is poor, which is why it is crucial to address data quality centrally and consistently.

This article walked you through the key features of a data quality monitoring tool and how they show up in some of the popular data quality monitoring tools.

You also learned about the most common challenge in improving data quality in an organization: the lack of a metadata foundation.

This is where a tool like Atlan, which provides a unified control plane for metadata, helps you integrate the data quality features from the best monitoring tools on the market, along with the native data quality functionality from data platforms like Databricks and Snowflake.

Data quality monitoring tools: Frequently asked questions (FAQs)

1. What is a data quality monitoring tool?

A data quality monitoring tool continuously checks the state of your data across systems to ensure it meets defined quality standards. It helps teams define rules, run validations, detect anomalies, and track metrics such as completeness, freshness, and consistency across pipelines, tables, and reports.

2. What are some key data quality metrics to track for a data asset?

Some of the key data quality metrics cover completeness, consistency, validity, availability, uniqueness, accuracy, timeliness, precision, and usability. How these metrics are defined and measured varies somewhat depending on your systems.
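Several of these dimensions reduce to simple ratios. This sketch computes completeness, uniqueness, and validity for a hypothetical list of email values; the email pattern is a deliberately loose assumption, not a standards-compliant validator.

```python
import re

# Deliberately loose email pattern, for illustration only.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(values):
    """Share of values that are present (not None)."""
    if not values:
        return 1.0  # treat an empty column as vacuously complete
    return sum(v is not None for v in values) / len(values)


def uniqueness(values):
    """Share of present values that are distinct."""
    present = [v for v in values if v is not None]
    return len(set(present)) / len(present) if present else 1.0


def validity(values, pattern):
    """Share of present values that match the expected format."""
    present = [v for v in values if v is not None]
    if not present:
        return 1.0
    return sum(bool(pattern.match(v)) for v in present) / len(present)


emails = ["a@example.com", "b@example.com", "b@example.com", "not-an-email", None]
print(completeness(emails))             # 0.8
print(uniqueness(emails))               # 0.75
print(validity(emails, EMAIL_PATTERN))  # 0.75
```

Expressing each dimension as a ratio between 0 and 1 makes it easy to set a threshold per dimension and compare assets on one scale.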

3. What are the benefits of having continuous data quality monitoring?

Continuous data quality monitoring helps you get on top of source-related issues, such as those caused by schema drift or bad inputs, as early as possible, minimizing downstream impact on the pipelines and data assets that use the affected data.
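Schema drift, one of the source-related issues mentioned above, can be caught by comparing the schema a pipeline expects with the one it actually receives. A minimal sketch, assuming schemas are available as hypothetical column-to-type dictionaries:

```python
def detect_schema_drift(expected: dict, observed: dict) -> dict:
    """Compare column -> type mappings and report added, removed, and retyped columns."""
    added = sorted(set(observed) - set(expected))
    removed = sorted(set(expected) - set(observed))
    retyped = sorted(col for col in set(expected) & set(observed) if expected[col] != observed[col])
    return {"added": added, "removed": removed, "retyped": retyped}


expected_schema = {"order_id": "INTEGER", "amount": "DECIMAL", "created_at": "TIMESTAMP"}
observed_schema = {"order_id": "INTEGER", "amount": "VARCHAR", "channel": "VARCHAR"}

drift = detect_schema_drift(expected_schema, observed_schema)
print(drift)  # {'added': ['channel'], 'removed': ['created_at'], 'retyped': ['amount']}
```

Running a comparison like this on every load, rather than once, is what turns it into continuous monitoring.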

Moreover, continuously monitoring data quality also fosters a culture of transparency and trust within the organization, as it allows you to identify any data quality issues at any given time.

4. What is the difference between data profiling and data quality monitoring?

Data profiling is the process of examining the data available in a data source and collecting descriptive statistics about it. Profiling usually reveals the data’s most prominent characteristics and gives you insight into potential issues it might have.

Data quality monitoring, on the other hand, is active and continuous: it repeatedly evaluates metrics related to freshness, completeness, and reliability, among other things.
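The distinction can be sketched in a few lines: profiling computes descriptive statistics once, while monitoring evaluates a chosen metric on every new snapshot and flags breaches. The function names, the daily snapshots, and the null-count threshold below are hypothetical.

```python
from statistics import mean, median


def profile_column(values):
    """Data profiling: one-off descriptive statistics for a numeric column.

    Assumes at least one non-null value.
    """
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
        "min": min(present),
        "max": max(present),
        "mean": mean(present),
        "median": median(present),
    }


def flag_breaches(snapshots, metric, max_value):
    """Data quality monitoring: evaluate one metric on every snapshot and return breaching indexes."""
    return [i for i, snapshot in enumerate(snapshots) if metric(snapshot) > max_value]


day_1 = [10, 12, 11, None]
day_2 = [10, None, None, 14]

print(profile_column(day_1))
# Monitor the null count across daily snapshots, tolerating at most one null per day.
print(flag_breaches([day_1, day_2], lambda s: profile_column(s)["nulls"], max_value=1))  # [1]
```

In practice, a profile is often the starting point for choosing which metrics and thresholds to monitor.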

5. How do I choose the right data quality monitoring tool for my organization?

Start by evaluating your data stack, the complexity of your pipelines, and the types of data quality issues you’re facing. Look for tools that offer native integrations with your platforms, support for both rule-based and anomaly detection methods, and visibility across lineage and impact.

6. What is the role of metadata in improving data quality monitoring?

Metadata acts as the connective layer between data assets, quality rules, and governance policies. By tagging data with ownership, classification, and business context, teams can build more targeted and effective quality rules, automate enforcement, and ensure traceability.
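As a sketch of how metadata can drive rules, assume a hypothetical catalog where columns carry tags and owners, and rules are attached to tags rather than to individual columns. Checks and their alert recipients can then be derived automatically. None of these names reflect Atlan’s or any other tool’s actual data model.

```python
# Hypothetical catalog metadata: tags and owners attached to columns.
CATALOG = {
    "customers.email": {"tags": {"pii"}, "owner": "crm-team"},
    "customers.customer_id": {"tags": {"primary_key"}, "owner": "crm-team"},
    "orders.amount": {"tags": {"financial"}, "owner": "finance-data"},
}

# Rules keyed by tag: any column carrying the tag inherits the rule.
RULES_BY_TAG = {
    "pii": ["not_null", "valid_format"],
    "primary_key": ["not_null", "unique"],
    "financial": ["not_null", "non_negative"],
}


def plan_checks(catalog, rules_by_tag):
    """Derive (column, rule, owner) tuples from metadata instead of hand-writing every check."""
    plan = []
    for column, meta in sorted(catalog.items()):
        # Deduplicate rules inherited from multiple tags, then sort for a stable order.
        rules = sorted({rule for tag in meta["tags"] for rule in rules_by_tag.get(tag, [])})
        for rule in rules:
            plan.append((column, rule, meta["owner"]))
    return plan


for column, rule, owner in plan_checks(CATALOG, RULES_BY_TAG):
    print(f"{column}: {rule} (alerts go to {owner})")
```

Tagging a new column as `pii` is then enough to enroll it in the right checks and route its alerts to the right team.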
