DataHub Data Lineage: Native Features, Supported Sources & More


Data lineage is one of the most misunderstood data engineering concepts. Terms like "end-to-end" and "granular" lineage are thrown around with little context, which is ironic, since the whole point of data lineage is to add visibility and context to how data moves and is transformed.

DataHub is one of the most popular open-source data catalogs. It was born out of LinkedIn's effort to solve the problem of searching for and discovering data assets at scale, and it began supporting basic column-level lineage for a limited set of sources in v0.8.28.


Since then, the feature has been steadily developed and refined. DataHub can now capture lineage from more sources, and almost every subsequent release has brought UI changes that make lineage more readable and easier to consume. The latest of these releases is v0.14.1.

DataHub's goal in offering accurate lineage metadata is to help you maintain data integrity, make the relationships between assets easier to understand, and automatically propagate metadata across the lineage graph.
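To make "propagation" concrete, here is a minimal sketch of the idea: metadata such as a tag, applied to one asset, flows to everything downstream of it. It assumes lineage is held as a plain dict mapping each asset to its direct downstream assets. The function and asset names are hypothetical, and this is not how DataHub implements propagation internally.

```python
from collections import deque
from typing import Dict, List


def propagate_downstream(edges: Dict[str, List[str]], start: str) -> List[str]:
    """Return the assets that would inherit metadata set on `start`, in BFS order.

    `edges` maps each asset to its direct downstream assets. Tracking visited
    assets means each one is reached once, even if the graph has a cycle.
    """
    seen = {start}
    queue = deque([start])
    reached: List[str] = []
    while queue:
        node = queue.popleft()
        for child in edges.get(node, []):
            if child not in seen:
                seen.add(child)
                reached.append(child)
                queue.append(child)
    return reached


# Hypothetical lineage graph: raw.users feeds a staging table, two marts, and a dashboard.
lineage = {
    "raw.users": ["stg.users"],
    "stg.users": ["mart.customers", "mart.signups"],
    "mart.customers": ["dash.revenue"],
}

# A "PII" tag on raw.users would propagate to these assets:
affected = propagate_downstream(lineage, "raw.users")
print(affected)  # ['stg.users', 'mart.customers', 'mart.signups', 'dash.revenue']
```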

With that in mind, this article will take you through the features, coverage, and inner workings of column-level lineage in DataHub under the following themes:

- Data lineage features

- Column-level lineage coverage

- Sources with column-level lineage support

Table of contents #

- DataHub data lineage: Native features

- Column-level data lineage in DataHub: How does it work?

- Sources with column-level lineage support in DataHub

- Summary

- DataHub data lineage: Related reads

DataHub data lineage: Native features #

Initially, DataHub supported two types of lineage: Dataset to Dataset (for example, between Snowflake or Databricks tables) and DataJob to Dataset. Today, it supports three more, for a total of five lineage connection types:

- Dataset to Dataset

- DataJob to DataFlow

- DataJob to Dataset

- Chart to Dashboard

- Chart to Dataset

With these five connection types, DataHub can represent a wide range of lineage metadata. If DataHub's connector for your data source extracts lineage for one of these connection types, lineage should be captured and ingested automatically. Otherwise, you can use the DataHub API and SDK to emit it yourself.
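Each of these connections links two entities, and DataHub identifies every entity by a URN. The stdlib-only sketch below imitates the URN strings that DataHub's Python SDK builds (for example, with `make_dataset_urn` in `datahub.emitter.mce_builder`). It then checks whether two entities form one of the five connection types above. The helper names are hypothetical stand-ins that let the example run without the SDK. The URN formats reflect our understanding of the SDK's output, and direction is ignored for simplicity.

```python
from typing import Set, Tuple

# Entity-type pairs for the five lineage connection types listed above.
SUPPORTED_CONNECTIONS: Set[Tuple[str, str]] = {
    ("dataset", "dataset"),
    ("dataJob", "dataFlow"),
    ("dataJob", "dataset"),
    ("chart", "dashboard"),
    ("chart", "dataset"),
}


# Hypothetical stand-ins for the SDK's URN builders (formats as we understand them).
def dataset_urn(platform: str, name: str, env: str = "PROD") -> str:
    return f"urn:li:dataset:(urn:li:dataPlatform:{platform},{name},{env})"


def data_flow_urn(orchestrator: str, flow_id: str, cluster: str = "prod") -> str:
    return f"urn:li:dataFlow:({orchestrator},{flow_id},{cluster})"


def data_job_urn(flow_urn: str, job_id: str) -> str:
    return f"urn:li:dataJob:({flow_urn},{job_id})"


def chart_urn(platform: str, name: str) -> str:
    return f"urn:li:chart:({platform},{name})"


def dashboard_urn(platform: str, name: str) -> str:
    return f"urn:li:dashboard:({platform},{name})"


def entity_type(urn: str) -> str:
    # "urn:li:<entityType>:(...)" -> "<entityType>"
    return urn.split(":")[2]


def is_supported_connection(a: str, b: str) -> bool:
    # Direction is ignored here; only the pair of entity types matters.
    pair = (entity_type(a), entity_type(b))
    return pair in SUPPORTED_CONNECTIONS or pair[::-1] in SUPPORTED_CONNECTIONS


flow = data_flow_urn("airflow", "daily_etl")
job = data_job_urn(flow, "load_users")
table = dataset_urn("snowflake", "analytics.public.users")
chart = chart_urn("looker", "signups_by_day")
dashboard = dashboard_urn("looker", "growth")

print(is_supported_connection(job, table))        # True: DataJob to Dataset
print(is_supported_connection(dashboard, table))  # False: not one of the five
```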

Although column-level lineage is one of DataHub's key features, many sources don't support it yet. These include Athena, ClickHouse, Glue, Kafka, Hive, Google Cloud Storage, MySQL, and PostgreSQL.

While the way lineage works in DataHub hasn't changed significantly, recent releases have improved both its functionality and its user interface.

Here are a few key lineage enhancements from recent releases, especially from v0.13.0 onwards:

- Support for capturing lineage through OpenLineage, using the Spark Lineage Beta Plugin

- Support for incremental column-level lineage

- An improved data propagation view in the UI, which increases lineage visibility

- A query extractor for BigQuery that fetches lineage metadata

- Support for Iceberg tables in Snowflake access history

- An enhanced lineage view that doesn't rely on query-based lineage

- Support for custom dialects in the SQLGlot parser
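Incremental lineage is easiest to understand side by side with a full re-statement. As we understand it, DataHub's `incremental_lineage` ingestion option makes each run add its edges to the lineage already stored, instead of replacing it. The sketch below models only that difference. It holds column lineage as a hypothetical dict mapping each downstream column to its upstream columns, and it is not DataHub code.

```python
from typing import Dict, Set

# Column lineage for one downstream dataset: column -> upstream "table.column" refs.
Lineage = Dict[str, Set[str]]


def restate(_existing: Lineage, new: Lineage) -> Lineage:
    """Full re-statement: the latest run replaces whatever was stored."""
    return {col: set(srcs) for col, srcs in new.items()}


def apply_incremental(existing: Lineage, new: Lineage) -> Lineage:
    """Incremental patch: union the new edges into the stored ones, dropping nothing."""
    merged = {col: set(srcs) for col, srcs in existing.items()}
    for col, srcs in new.items():
        merged.setdefault(col, set()).update(srcs)
    return merged


stored = {"browser": {"fct_users_deleted.browser_id"}}
new_run = {"browser": {"fct_users_created.user_id"}, "user": {"dim_users.id"}}

print(restate(stored, new_run))            # the fct_users_deleted edge is gone
print(apply_incremental(stored, new_run))  # both browser edges are kept
```

The trade-off is that incremental updates preserve edges a single run didn't observe, but they also keep stale edges until something explicitly removes them.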

These features either improve how lineage is captured or improve the experience of consuming and interacting with the lineage DataHub captures.

Several other incremental changes also affect DataHub's lineage feature. You can find the details on the releases page of the DataHub GitHub repository.

Column-level data lineage in DataHub: How does it work? #

Extracting lineage at the column level takes significantly more effort than extracting it at the table level. Column-level lineage is far harder because it requires reading and parsing your queries. SQL parsing is a hard problem, especially across many dialects like Snowflake, Databricks, SparkSQL, MySQL, PostgreSQL, DuckDB, and others.
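To see why a real parser is needed, consider a deliberately naive, regex-based extractor. It is a hypothetical toy, not DataHub or SQLGlot code, and it handles only `SELECT col [AS alias], ... FROM table`. Joins, CTEs, expressions, `SELECT *`, and dialect-specific syntax all make it give up. That is precisely the territory a proper AST-building parser covers.

```python
import re
from typing import Dict, List

# Matches only: SELECT <items> FROM <table> [;]
SELECT_RE = re.compile(
    r"^\s*SELECT\s+(?P<items>.+?)\s+FROM\s+(?P<table>[\w.]+)\s*;?\s*$",
    re.IGNORECASE | re.DOTALL,
)
IDENT_RE = re.compile(r"\w+")


def naive_column_lineage(sql: str) -> Dict[str, List[str]]:
    """Map each output column to its source column, for trivial queries only.

    Raises ValueError for anything beyond `SELECT col [AS alias], ... FROM table`.
    """
    match = SELECT_RE.match(sql)
    if match is None:
        raise ValueError("unsupported query shape (joins, CTEs, subqueries, ...)")
    table = match.group("table")
    lineage: Dict[str, List[str]] = {}
    for item in match.group("items").split(","):
        parts = item.split()
        if len(parts) == 1:
            source = output = parts[0]
        elif len(parts) == 3 and parts[1].upper() == "AS":
            source, output = parts[0], parts[2]
        else:
            raise ValueError(f"unsupported select item: {item.strip()!r}")
        if not IDENT_RE.fullmatch(source):
            raise ValueError(f"expression, not a plain column: {source!r}")
        lineage[output] = [f"{table}.{source}"]
    return lineage


lineage = naive_column_lineage(
    "SELECT browser_id AS browser, user_id FROM fct_users_created"
)
print(lineage)
# {'browser': ['fct_users_created.browser_id'], 'user_id': ['fct_users_created.user_id']}
```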

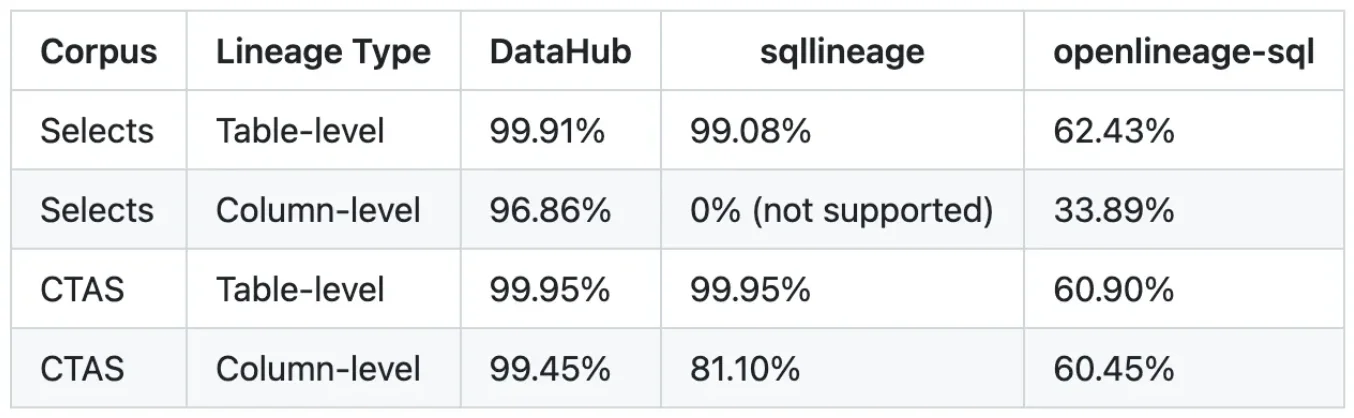

After much deliberation, DataHub adopted SQLGlot, Toby Mao's multi-dialect SQL parser and transpiler. DataHub uses SQLGlot to generate the Abstract Syntax Tree (AST) from which column-level lineage is inferred. It chose SQLGlot over the parsers from OpenLineage (Marquez) and SQLLineage (OpenMetadata) based on a benchmark of 7K BigQuery SELECT and 2K CTAS statements. On that corpus, SQLGlot produced correct table- and column-level lineage for the highest percentage of queries.

Here are the findings from DataHub’s blog post about this benchmarking test comparing different SQL parsers.

DataHub's benchmark results comparing SQL parsers on table- and column-level lineage extraction from BigQuery queries. Image from the DataHub blog.

That covers how DataHub parses queries internally. Before it can parse anything, though, it has to connect to the data source and retrieve the query text, among other things. This step differs from source to source, because each source implements its own information_schema and metadata model.

Here’s an example of how lineage metadata is extracted from Snowflake:

- Table to View lineage via the `snowflake.account_usage.object_dependencies` view.

- AWS S3 to Table lineage via the `show external tables` query.

- View to Table lineage via the `snowflake.account_usage.access_history` view (requires Snowflake Enterprise Edition or above).

- Table to Table lineage via the `snowflake.account_usage.access_history` view (requires Snowflake Enterprise Edition or above).

- AWS S3 to Table lineage via the `snowflake.account_usage.access_history` view (requires Snowflake Enterprise Edition or above).
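For the `access_history` routes, the view's `objects_modified` column records which columns each written column was derived from. The sketch below turns one `objects_modified` value into column-level edges. It is a hypothetical illustration, not DataHub's Snowflake connector. The field names (`objectName`, `columns`, `columnName`, `directSources`) follow Snowflake's documented `ACCESS_HISTORY` structure as we understand it. The sample row is made up, so verify both against your own account.

```python
import json
from typing import List, Tuple

Edge = Tuple[str, str]  # (upstream "table.column", downstream "table.column")


def column_edges(objects_modified_json: str) -> List[Edge]:
    """Turn one ACCESS_HISTORY.objects_modified value (a JSON array) into edges."""
    edges: List[Edge] = []
    for obj in json.loads(objects_modified_json):
        target = obj["objectName"]
        # Objects written without column details (e.g. some stages) are skipped.
        for col in obj.get("columns", []):
            downstream = f"{target}.{col['columnName']}"
            for src in col.get("directSources", []):
                upstream = f"{src['objectName']}.{src['columnName']}"
                edges.append((upstream, downstream))
    return edges


# Hypothetical objects_modified value for a query that wrote LOGGING_EVENTS.BROWSER.
sample_objects_modified = json.dumps([
    {
        "objectDomain": "Table",
        "objectName": "ANALYTICS.PUBLIC.LOGGING_EVENTS",
        "columns": [
            {
                "columnName": "BROWSER",
                "directSources": [
                    {"objectDomain": "Table", "columnName": "BROWSER_ID",
                     "objectName": "ANALYTICS.PUBLIC.FCT_USERS_DELETED"},
                    {"objectDomain": "Table", "columnName": "USER_ID",
                     "objectName": "ANALYTICS.PUBLIC.FCT_USERS_CREATED"},
                ],
            }
        ],
    }
])

edges = column_edges(sample_objects_modified)
for upstream, downstream in edges:
    print(f"{upstream} -> {downstream}")
```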

Other data sources come with similar limitations and caveats, so keep them in mind when configuring lineage for your sources. Now let's look more closely at DataHub's support for column-level lineage.

Sources with column-level lineage support in DataHub #

Before lineage support was broadened, column-level lineage was only partially available, even for data sources like Snowflake and Databricks. As of v0.14.1, DataHub natively supports column-level lineage for Databricks, BigQuery, and Snowflake, as well as for tools like Teradata, Looker, Power BI, and Tableau.

For supported sources, you can also work with lineage programmatically through DataHub's Lineage API. To do that, you'll need to understand the metadata model behind DataHub's internal operations. In it, you'll find the FineGrainedLineage and UpstreamLineage aspects, which record column-level lineage for your workload. Your code will look something like this (adapted from the DataHub documentation):

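```python
import datahub.emitter.mce_builder as builder
from datahub.emitter.mcp import MetadataChangeProposalWrapper
from datahub.emitter.rest_emitter import DatahubRestEmitter
from datahub.metadata.com.linkedin.pegasus2avro.dataset import (
    DatasetLineageType,
    FineGrainedLineage,
    FineGrainedLineageDownstreamType,
    FineGrainedLineageUpstreamType,
    Upstream,
    UpstreamLineage,
)


def datasetUrn(tbl):
    # Platform "hive" is illustrative; use the platform your tables live on.
    return builder.make_dataset_urn("hive", tbl)


def fldUrn(tbl, fld):
    return builder.make_schema_field_urn(datasetUrn(tbl), fld)


# logging_events.browser is derived from two upstream columns.
fineGrainedLineages = [
    FineGrainedLineage(
        upstreamType=FineGrainedLineageUpstreamType.FIELD_SET,
        upstreams=[
            fldUrn("fct_users_deleted", "browser_id"),
            fldUrn("fct_users_created", "user_id"),
        ],
        downstreamType=FineGrainedLineageDownstreamType.FIELD,
        downstreams=[fldUrn("logging_events", "browser")],
    )
]

# Table-level upstreams that match the column-level ones above.
upstreams = [
    Upstream(dataset=datasetUrn(tbl), type=DatasetLineageType.TRANSFORMED)
    for tbl in ("fct_users_deleted", "fct_users_created")
]
fieldLineages = UpstreamLineage(upstreams=upstreams, fineGrainedLineages=fineGrainedLineages)

# Attach the lineage aspect to logging_events and send it to DataHub GMS.
emitter = DatahubRestEmitter("http://localhost:8080")
emitter.emit_mcp(
    MetadataChangeProposalWrapper(entityUrn=datasetUrn("logging_events"), aspect=fieldLineages)
)
```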

If you're working with a data source that DataHub doesn't support yet, you can use the Python SDK to write the lineage-fetching logic for that source yourself, using the `parse_sql_lineage()` method. This means more work on your end. If your requirement isn't specific to your setup, check the public roadmap first, since new sources are added with almost every release.
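Whatever your custom logic extracts, it eventually has to be expressed as `FineGrainedLineage` entries like the ones in the DataHub example above. The stdlib-only sketch below assumes your source already yields a mapping from each downstream column to its upstream `(table, column)` pairs. It converts that mapping into the `FineGrainedLineage` shape, using schema-field URNs of the form produced by the SDK's `make_schema_field_urn`. The helper names are hypothetical, and plain dicts stand in for the SDK classes so the example runs on its own.

```python
from typing import Dict, List, Tuple


# Hypothetical stand-ins for the SDK's make_dataset_urn / make_schema_field_urn.
def dataset_urn(platform: str, name: str, env: str = "PROD") -> str:
    return f"urn:li:dataset:(urn:li:dataPlatform:{platform},{name},{env})"


def field_urn(dataset: str, column: str) -> str:
    return f"urn:li:schemaField:({dataset},{column})"


def to_fine_grained(
    platform: str,
    downstream_table: str,
    column_map: Dict[str, List[Tuple[str, str]]],
) -> List[dict]:
    """Convert {downstream column: [(upstream table, upstream column), ...]}
    into dicts carrying the same fields as the SDK's FineGrainedLineage."""
    downstream_ds = dataset_urn(platform, downstream_table)
    entries = []
    for down_col, sources in column_map.items():
        entries.append(
            {
                "upstreamType": "FIELD_SET",
                "upstreams": [field_urn(dataset_urn(platform, t), c) for t, c in sources],
                "downstreamType": "FIELD",
                "downstreams": [field_urn(downstream_ds, down_col)],
            }
        )
    return entries


# Output your custom extraction logic might produce for a PostgreSQL table.
column_map = {
    "browser": [
        ("public.fct_users_deleted", "browser_id"),
        ("public.fct_users_created", "user_id"),
    ]
}
entries = to_fine_grained("postgres", "public.logging_events", column_map)
print(entries[0]["downstreams"][0])
# urn:li:schemaField:(urn:li:dataset:(urn:li:dataPlatform:postgres,public.logging_events,PROD),browser)
```

In a real integration, you would build `FineGrainedLineage` objects instead of dicts and emit them with the REST emitter, as in the DataHub example above.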

How Atlan Benefits Customers with Data Lineage #

Atlan automatically captures end-to-end, column-level lineage and “activates” metadata through features like automated pipeline health alerting and propagation.

The platform surfaces usage and cost metrics on lineage processes, translates complex lineage transformations into business-user-friendly explanations, and enables proactive collaboration by sending notifications about changes to assets.

Atlan’s automated lineage feature helped Takealot improve their time-to-resolution for root cause analysis by 50%.

Aliaxis leverages Atlan’s pipeline observability and end-to-end lineage features to find pipeline breaks 95% faster, accelerating issue resolution time from 1 day to 1 hour.

Atlan makes lineage transformations easier to understand by translating them into business-user-friendly explanations.

Book your personalized demo today to find out how Atlan can help your organization to capture end-to-end, column-level lineage.

Summary #

DataHub’s latest releases have some promising updates in terms of lineage capture from a variety of sources. However, automatic lineage capture is still very limited, especially at the column level.

If column-level lineage is central to some of your priority use cases, we urge you to check out the best-in-class data lineage in Atlan, whose capabilities include:

- Granular, column-level lineage that is quick to set up with an automated, no-code approach

- Open API architecture that ensures lineage reaches every corner of the data estate, all the way to the source, with out-of-the-box and custom connectors

- Proactive impact analysis in GitHub that helps prevent dashboard disruptions

You can also review how Atlan compares to DataHub in other core features here.

DataHub data lineage: Related reads #

- DataHub: LinkedIn’s Open-Source Tool for Data Discovery, Catalog, and Metadata Management

- DataHub Set Up Tutorial: A Step-by-Step Installation Guide Using Docker

- LinkedIn DataHub Demo: Explore DataHub in a Pre-configured Sandbox Environment

- Amundsen vs. DataHub: Which Data Discovery Tool Should You Choose?

- Atlan vs. DataHub: Which Tool Offers Better Collaboration and Governance Features?

- OpenMetadata vs. DataHub: Compare Architecture, Capabilities, Integrations & More
