Atlan vs. DataHub: Which Tool Offers Better Collaboration and Governance Features?


Atlan and DataHub emerged at around the same time.

Atlan was originally developed as an internal tool at Social Cops. It was battle-tested through more than 100 data projects that improved access to energy, healthcare, and education, and impacted the lives of over 1 billion citizens, before being released into the market.

DataHub was built at LinkedIn as the successor to WhereHows, which was their first internal data cataloging tool.


In this article, we will compare the capabilities, architecture, and cost of deployment between both tools, among other factors, to gain a better understanding of them.

What is Atlan? #

Built by a data team for data teams, Atlan is the active metadata platform for modern data teams. Atlan creates a single source of truth by acting as a collaborative workspace for data teams and bringing context back into the tools where data teams live.

Atlan can be deployed as a single-tenant enterprise data catalog on AWS with a high level of isolation of Kubernetes resources.

Because of its rich features, security, and reliability, many companies like Autodesk, Nasdaq, Unilever, Juniper Networks, Postman, Monster, WeWork, and Ralph Lauren have deployed Atlan to manage data at scale. Atlan deployment in Google Cloud and Azure is on the roadmap.

What is DataHub? #

DataHub is an open-source project maintained by 300+ contributors driven by a public roadmap. You can deploy DataHub on your local machine using Docker or on a cloud platform of your choice. If you want to deploy DataHub to work at scale, deploying it using Kubernetes might be a better option.

Many customers including Coursera, LinkedIn, N26, Typeform, Wikimedia, and Zynga have seen successful deployments of DataHub.

Table of contents #

- What is Atlan?

- What is DataHub?

- Core features

- Things you must consider while evaluating these data catalogs

- Summary

- Atlan vs DataHub: Related reads

Atlan vs. DataHub: Core features #

- Data discovery

- Data lineage

- Data governance

- Collaboration

- Active metadata

Data discovery #

Every data source has an internal implementation of a data catalog, which manages the storage, retrieval, and manipulation of data. A data catalog can simply integrate data from all the different data sources, but that isn’t enough.

Users need a way to interact with data that feels intuitive and useful. A natural-language full-text search engine fulfills that requirement. Both Atlan and DataHub use an Elasticsearch-based search engine to serve search queries.

Building a data catalog involves more than just solving the engineering problem of integrating metadata and exposing it to a search engine. Atlan provides a search experience that is easy for you to use, similar to shopping for products. You can filter, search, and sort through data assets as you would with any product you use. Additionally, Atlan enhances your discovery process by embedding trust signals in the listing of your data assets. These signals might include something as simple as a conversation about the data asset in a tool like Slack.

DataHub, on the other hand, offers advanced filters and advanced queries to parse through your data assets. For programmatic access and third-party integrations, you can use the same GraphQL engine that powers its search and discovery.
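As a rough illustration of that programmatic access, the sketch below builds a GraphQL search request body in Python without sending it. The `searchAcrossEntities` query and its input fields follow DataHub's public GraphQL documentation as we understand it. The endpoint path in the comment and the helper name `build_search_request` are assumptions, so verify them against your DataHub version.

```python
import json

# Assumed query shape, based on DataHub's GraphQL search docs; verify per version.
SEARCH_QUERY = """
query search($input: SearchAcrossEntitiesInput!) {
  searchAcrossEntities(input: $input) {
    start
    count
    total
    searchResults {
      entity {
        urn
        type
      }
    }
  }
}
"""


def build_search_request(text, entity_types=("DATASET",), start=0, count=10):
    """Return a JSON-serialisable GraphQL request body for a full-text search."""
    return {
        "query": SEARCH_QUERY,
        "variables": {
            "input": {
                "types": list(entity_types),
                "query": text,
                "start": start,
                "count": count,
            }
        },
    }


body = build_search_request("customer orders")
# POST this string to <datahub-host>/api/graphql (assumed path) with an access token.
payload = json.dumps(body)
```

Keeping the request builder separate from the HTTP call makes it easy to reuse the same search from a script, a notebook, or a third-party integration.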

Data lineage #

Both Atlan and DataHub offer column-level data lineage, which allows end-users to understand the flow of data in your business and perform impact analysis based on changes in data assets.

DataHub supports a list of data sources to fetch lineage information, such as charts, dashboards, databases, and workflow engines. DataHub also allows you to edit data lineage from the UI and the API. DataHub’s Lineage Impact Analysis feature enables end-users to identify breaking changes related to changing schemas and data quality.
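Conceptually, impact analysis is a downstream traversal of the lineage graph: start at the asset you plan to change and collect everything that depends on it. The sketch below shows that idea in plain Python. It is not DataHub's or Atlan's implementation, and the asset names and the `downstream_impact` function are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> assets that read from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.order_counts"],
    "marts.revenue": ["dashboard.finance"],
    "marts.order_counts": [],
    "dashboard.finance": [],
}


def downstream_impact(lineage, changed_asset):
    """Return every asset downstream of changed_asset, in breadth-first order."""
    seen = {changed_asset}  # guards against cycles and excludes the asset itself
    order = []
    queue = deque(lineage.get(changed_asset, []))
    while queue:
        asset = queue.popleft()
        if asset in seen:
            continue
        seen.add(asset)
        order.append(asset)
        queue.extend(lineage.get(asset, []))
    return order


impacted = downstream_impact(LINEAGE, "staging.orders")
print(impacted)
```

A schema change to `staging.orders` in this toy graph would flag both marts and the finance dashboard for review.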

Atlan’s data lineage powers up the lineage game by providing several automation-focused features, such as automated SQL parsing and automatic propagation of tags, classifications, and column descriptions.

Moreover, with Atlan’s in-line actions, you can add business and technical context to your data assets and data lineage by creating alerts for failed or problematic data assets, creating Jira tickets, asking questions on Slack, and performing impact analysis.

Data governance #

DataHub provides a simple way to automate workflows based on metadata changes using the Actions Framework. DataHub’s governance framework allows an end-user to apply tags, glossary terms, and domains to classify the metadata. You can send notifications on Slack, Teams, or email when a particular tag is added to a dataset. You can also trigger workflows based on that change. All this ties in nicely with DataHub’s goal of governing in real time.
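The pattern described here, reacting to a metadata change with an action, can be sketched in a few lines of plain Python. This is not the DataHub Actions Framework API. The event shape, `on_tag_added`, and the `notify` callback are hypothetical names used only to show the idea.

```python
from dataclasses import dataclass


@dataclass
class MetadataEvent:
    """Hypothetical change event: a tag was added to or removed from a dataset."""
    dataset: str
    tag: str
    operation: str  # "ADD" or "REMOVE"


def on_tag_added(event, watched_tag, notify):
    """Call notify(message) when watched_tag is added; return True if it fired."""
    if event.operation == "ADD" and event.tag == watched_tag:
        notify(f"Tag '{event.tag}' was added to {event.dataset}")
        return True
    return False


sent = []  # stand-in for a Slack, Teams, or email sender
fired = on_tag_added(MetadataEvent("sales.customers", "PII", "ADD"), "PII", sent.append)
print(sent)
```

In a real deployment the `notify` callback would post to a messaging tool or kick off a workflow instead of appending to a list.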

Atlan’s data governance offering is much more sophisticated. On top of the tagging and classification of data, Atlan also allows end-users to run Playbooks to auto-identify PII, HIPAA, and GDPR data. You can also add masking and hashing policies to protect and secure sensitive data. Tags and classifications are automatically propagated to dependent data assets. Atlan allows you to customize governance based on personas, purposes, and compliance requirements.
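To show what propagating tags to dependent assets means in practice, here is a minimal sketch over a toy lineage graph. It assumes a simple rule (every downstream asset inherits all tags of its upstream assets) and does not reflect how Atlan implements propagation. The `propagate_tags` function and the asset names are hypothetical.

```python
def propagate_tags(lineage, tags):
    """Copy each asset's tags to all of its downstream dependents.

    lineage maps an asset to the assets derived from it; tags maps an asset
    to the set of tags applied directly to it. Returns a new mapping and
    leaves both inputs unchanged.
    """
    result = {asset: set(direct) for asset, direct in tags.items()}
    for source, direct in tags.items():
        stack = list(lineage.get(source, []))
        visited = {source}  # guards against cycles in the lineage graph
        while stack:
            asset = stack.pop()
            if asset in visited:
                continue
            visited.add(asset)
            result.setdefault(asset, set()).update(direct)
            stack.extend(lineage.get(asset, []))
    return result


lineage = {"raw.users": ["staging.users"], "staging.users": ["marts.signups"]}
tags = {"raw.users": {"PII"}, "marts.signups": {"Finance"}}
effective = propagate_tags(lineage, tags)
print(effective)
```

Here the `PII` tag set on `raw.users` reaches both downstream tables, while the `Finance` tag stays on `marts.signups` because nothing depends on it.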

Two core ideas are driving the future of data governance at Atlan: shift-left and AI. The shift-left approach in data governance involves creating documentation, running data quality and profiling checks, and setting tags and policies on data assets as close as possible to the point where those assets are created.

With the help of LLMs like ChatGPT, Atlan’s Trident AI will, in the future, help with writing column and business term descriptions, writing detailed documentation, fixing SQL queries, and more. This AI will integrate seamlessly within the tool and act as an assistant, taking care of much of the grunt work that developers and analysts aren’t necessarily excited to do.

Collaboration #

DataHub currently allows you to send notifications to Slack, but it doesn’t have deep integration with any of the tools businesses use to have conversations. Although features around collaboration were in the pipeline back in 2021, they weren’t completed, which is why there’s no direct way to collaborate within the platform.

On the other hand, Atlan has deep integrations with tools like Slack and Jira. For instance, you can tag Slack users or channels in conversations about a data asset on Atlan. You can also create and manage Jira tickets and see activity on a particular data asset with the help of the Jira integration. Moreover, Atlan also integrates with tools like Tableau, Looker, and Power BI via a Chrome plugin that surfaces all the metadata, including ownership information, for the data assets in your BI tool.

Active metadata #

Metadata activation is one of the superpowers of Atlan. The drive towards active metadata pushes for always-on, intelligent, and action-oriented metadata. This means Atlan leverages metadata that is always available and in use, metadata that is connected in a way that allows deduction of meaning or intelligence, and finally, metadata that can be used to create and trigger new workflows to save time and money.

How does active metadata manifest in your data flows? Here are some examples:

One of the most annoying things you see in ill-managed data catalogs is the high frequency of stale and unused data assets. You can use Atlan to purge stale metadata based on custom rules.

Similarly, introducing a data catalog doesn’t add a lot of value if you cannot identify which of these assets comply with data quality rules to be classified as usable. Hence, freshness and accuracy are both critical metrics for data assets.

Your metadata should be able to indicate both of these. Atlan’s automation-based approach allows you to perform all these tasks with ease.
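To make the idea of custom rules concrete, here is a minimal sketch of a staleness rule and a freshness check in plain Python. It does not use Atlan's API. The field names `last_queried` and `last_updated`, the thresholds, and both function names are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta


def find_stale_assets(assets, now, max_idle_days=90):
    """Return names of assets that nobody has queried within max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return [a["name"] for a in assets if a["last_queried"] < cutoff]


def is_fresh(asset, now, max_age_hours=24):
    """An asset counts as fresh if it was updated within max_age_hours."""
    return now - asset["last_updated"] <= timedelta(hours=max_age_hours)


now = datetime(2024, 1, 31, 12, 0)
assets = [
    {
        "name": "marts.revenue",
        "last_queried": datetime(2024, 1, 30),
        "last_updated": datetime(2024, 1, 31, 6, 0),
    },
    {
        "name": "tmp.old_export",
        "last_queried": datetime(2023, 6, 1),
        "last_updated": datetime(2023, 6, 1),
    },
]
stale = find_stale_assets(assets, now)
print(stale)
```

The output of a rule like this could feed a purge job or simply mark assets as deprecated, depending on how cautious you want the automation to be.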

DataHub’s Actions Framework, on the other hand, provides some great functionality to automate but still requires you to write quite a bit of code or perform complex configurations to do that. With users of different technical capabilities using a data catalog, it isn’t easy to expect everyone to write their own automation scripts or configurations.

Atlan vs. DataHub: Things you must consider while evaluating these data catalogs #

- Managed vs. self-hosted tools

- Ease of setting up

- Integration with other data tools

- Risk of failure adopting the tool

- The true cost of deploying an open-source tool

- Architecture

1. Managed vs. self-hosted tools #

Open-source tools are great but also challenging to manage, especially in their nascent stage. Unless your company has expendable engineering capacity and a strict organizational stance to go open-source, it is wise to use a managed, SaaS-based tool. DataHub is an open-source data catalog, while Atlan is an enterprise data catalog.

Managed tools are built using a lot of customer feedback and user research. They are built to work for different workloads and businesses, and experts in the field usually build them. With infrastructure taken care of, managed tools make a lot of sense, especially when looking for a long-term, scalable solution. On the other hand, open-source, self-hosted tools are suitable for running PoCs.

2. Ease of setting up #

Deploying DataHub is easy, but like many other open-source data catalogs, it doesn’t come with all the features you would want in an enterprise setup, such as authentication with OIDC, authorization with Apache Ranger, backups, and so on.

Atlan, on the other hand, has all of these things in place from the word go. For instance, Atlan comes with a pre-configured Apache Ranger to manage access and policies for the metastore.

Atlan offers enterprise-grade high availability with a disaster recovery plan in place. All the persistent data stores, such as Cassandra, Elasticsearch, PostgreSQL, and more, are backed up every day, which makes the RPO (recovery point objective) 24 hours.
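The 24-hour figure follows directly from the backup interval: in the worst case, a failure happens just before the next daily backup, so everything written since the previous backup is lost. A small sketch of that arithmetic, with hypothetical function names and example timestamps:

```python
from datetime import datetime, timedelta


def worst_case_rpo(backup_interval):
    """With periodic backups, the worst-case data loss equals the interval."""
    return backup_interval


def data_loss_window(last_backup, failure_time):
    """Actual loss for a given failure: time elapsed since the last backup."""
    return failure_time - last_backup


rpo = worst_case_rpo(timedelta(days=1))
loss = data_loss_window(datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 18, 30))
print(rpo, loss)
```

Any individual failure loses at most the RPO, and usually less, as the second function shows.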

3. Integration with other data tools #

While DataHub provides integrations with popular databases and data warehouses using their standard APIs, Atlan has gone beyond that and built deep integrations that provide purpose-built features for partners like dbt and Snowflake. This enables Atlan to treat dbt metrics, for instance, as first-class citizens and get column-level lineage information directly from dbt.

Another example of Atlan innovating with best-in-class tools is its dbt + GitHub integration, which uses impact previews to let you detect and act on breaking changes before they are committed into your Git repository.

This integration also allows you to ensure the quality and accuracy of business metrics.

Atlan is also the first data catalog to be certified as a Snowflake Ready Technology Partner.

4. Risk of failure adopting the tool #

With any tool, open-source or proprietary, there is a risk of low or no adoption. The risk depends on several factors, such as UI/UX, feature set, ease of use, and extensibility. Open-source data catalogs often see low adoption because of a lack of clear documentation, absent customer support, and a poor user experience.

The team at Delhivery faced these issues when they decided to scrap Apache Atlas after spending many months on it. They faced similar problems when they tried Amundsen next, which turned out to be a failure because no one wanted to use it. The team then turned towards Atlan, which solved most of the problems they faced deploying open-source tools.

In another instance, while deploying DataHub, a customer quickly realized that deploying and maintaining DataHub would need its own team of two people. As more and more users start using the data catalog, you’ll end up discovering more issues, thereby requiring you to expand the team even further. That is the story of running open-source data catalogs at scale.

5. The true cost of deploying an open-source tool #

Assessing the cost of setting up, deploying, and maintaining the tool is one of the most crucial things you need to consider. Most open-source data catalogs give the impression of being easy to set up. It is true in most cases, but what they are talking about is a barebones setup. So much more work goes into setting up a tool to become usable, such as figuring out infrastructure, configuring authentication, creating backups, and so on.

On top of that, there’s a considerable cost attached to failure. In the previous section, we talked about some of the failed implementations that took months and months of engineering teams’ time and left the business with nothing to work with. And then, there’s the cost of building new features on top of the existing tool. Unless you are a sponsor or have sway over the product roadmap of the open-source project, you’ll need to build the features you want yourself, which is very time-consuming and not ideal.

Instead, it may be better to choose a tool like Atlan that harnesses the power of open-source tooling but, at the same time, provides you with the reliability and assurance of an enterprise-grade product.

6. Architecture #

DataHub Architecture #

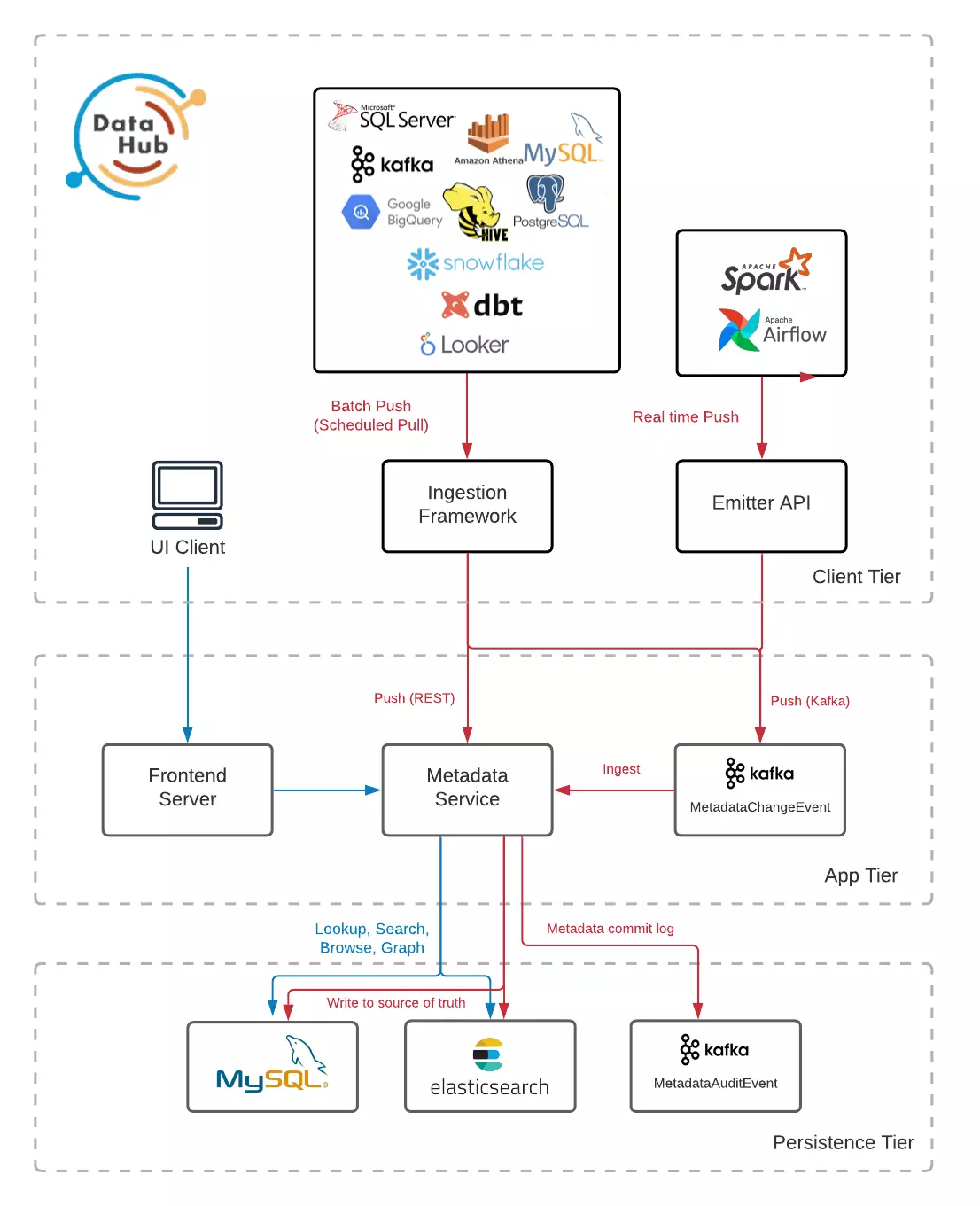

DataHub is built on top of various services utilizing Elasticsearch, MySQL, Neo4j, and Apache Kafka. For metadata ingestion, it has both push-based and pull-based mechanisms.

It extracts the data from databases, data warehouses, and BI tools based on a scheduled pull or batch-based push.

For orchestration and data processing engines like Apache Airflow and Apache Spark, it follows a push-based approach using the Emitter API. All the collected metadata is exposed via the DataHub frontend application. DataHub has a microservices-based architecture. You can deploy these services with Docker containers, optionally orchestrated using Kubernetes.

DataHub architecture diagram. Source: DataHub Docs.
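The difference between the pull-based and push-based ingestion described above can be shown with a toy in-memory model. This is a conceptual sketch only. `ToyCatalog`, `ToyWarehouse`, and their methods are hypothetical and are not DataHub's ingestion or Emitter API.

```python
class ToyCatalog:
    """In-memory stand-in for a metadata store; not DataHub's API."""

    def __init__(self):
        self.metadata = {}

    def pull(self, source):
        """Pull-based: the catalog asks a source for its metadata, e.g. on a schedule."""
        for name, props in source.list_assets().items():
            self.metadata[name] = props

    def emit(self, name, props):
        """Push-based: a pipeline reports metadata as soon as it produces it."""
        self.metadata[name] = props


class ToyWarehouse:
    """Hypothetical source system that can be crawled for metadata."""

    def list_assets(self):
        return {"warehouse.orders": {"columns": ["id", "amount"]}}


catalog = ToyCatalog()
catalog.pull(ToyWarehouse())  # would be triggered by a scheduler
catalog.emit("airflow.daily_orders", {"status": "success"})  # would be called from a task
print(sorted(catalog.metadata))
```

Pull suits systems that can be crawled periodically, while push suits orchestrators and processing engines that know exactly when something changed.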

Atlan architecture #

Atlan is designed on a microservices-based architecture. Atlan runs on Kubernetes with the help of Loft’s Virtual Clusters where containers are orchestrated using Argo Workflows. As Atlan is deployed in AWS, all Atlan resources are isolated with AWS VPCs.

While PostgreSQL is used by various microservices within Atlan, Apache Cassandra stores the actual metadata. The same data is also indexed for full-text search in Elasticsearch. Atlan also uses ArgoCD and GitHub Actions for CI/CD at scale.

Atlan strongly recommends a single-tenant deployment on AWS to ensure the highest data security and privacy standards. Atlan’s single-tenancy is achieved using CloudCover’s ArgoCD and Loft-based implementation.

While DataHub has many useful data catalog features, you’ll still need to do a lot of work on infrastructure, DevOps, GitOps, and other automation areas. Atlan uses a mix of managed open-source and enterprise tools to ensure the high availability and reliability of the data catalog. Tools like Rancher for Kubernetes cluster management, Velero for cluster volume backups, Apache Calcite for parsing SQL, and Apache Atlas as the metadata backend are central to Atlan’s architecture.

Summary #

This article took you through the core features of the open-source data catalog DataHub and the enterprise data catalog Atlan. It also walked you through the factors you need to consider when choosing a data cataloging tool.

While we spoke about how both tools stack up currently, you should also consider their future plans. DataHub’s public roadmap shows us that a few exciting features, like DataHub Actions and Tag/Term propagation, are in progress. Atlan’s focus on AI-led data governance and metadata-based automation distinguishes it from the rest.

And finally, one of the most critical aspects of implementing a new tool is cost. You should consider that seriously before committing to any long-term adoption plans. You can deploy DataHub as a PoC on your own machine and also ask for a proof-of-value from Atlan. Go test them out!

Atlan vs DataHub: Related reads #

- DataHub: LinkedIn’s Open-Source Tool for Data Discovery, Catalog, and Metadata Management

- DataHub Set Up Tutorial: A Step-by-Step Installation Guide Using Docker

- LinkedIn DataHub Demo: Explore and Get a Feel for DataHub with a Pre-configured Sandbox Environment

- Atlan Product Demo

- Atlan vs Amundsen: A Comprehensive Comparison of Features, Integration, Ease of Use, Governance, and Cost for Deployment

- Atlan vs Apache Atlas: What to Consider When Evaluating?

- Amundsen vs DataHub: Which Data Discovery Tool Should You Choose?

- OpenMetadata vs DataHub: Compare Architecture, Capabilities, Integrations & More
