Data Governance for AI: Framework & Best Practices 2025

What are the key aspects of data governance for AI?

AI needs data governance that secures its data, keeps its user interfaces safe, and sets testing standards that maintain trust. The key aspects of that governance are:

- Data security: Prevents sensitive information from infiltrating AI training datasets where it becomes embedded in neural networks and potentially accessible to users without detection. Hidden security risks emerge when terabytes of data contain personal information that gets trained into models.

- Data lineage: Maintains comprehensive tracking of data sources, transformations, and dependencies throughout AI pipelines. Understanding data lineage becomes critical when AI systems use hundreds of data sources, enabling rapid identification of issues and ensuring transparency.

- Data quality: Establishes validation processes for training data accuracy, completeness, and consistency. Poor data quality directly impacts AI model performance and can propagate errors throughout the system, making quality controls essential.

- Compliance: Ensures AI systems meet evolving regulations like GDPR, CCPA, and emerging AI-specific legislation. Regulatory landscapes are shifting rapidly, requiring proactive compliance frameworks rather than reactive approaches.

- Ethical considerations: Implements bias detection and fairness testing to prevent discriminatory outcomes. AI systems can perpetuate historical biases present in training data, making ethical oversight crucial for responsible AI deployment.
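To make the data quality aspect more concrete, here is a minimal Python sketch of a pre-training validation pass. It checks completeness (required fields present and non-empty), consistency (no duplicate IDs), and a simple accuracy proxy (values inside an expected domain). The field names, the allowed label set, and the age range are hypothetical assumptions for illustration, not part of any particular tool.

```python
from dataclasses import dataclass

# Hypothetical schema for a training record; adapt these to your own dataset.
REQUIRED_FIELDS = ("id", "age", "label")
ALLOWED_LABELS = {"approve", "deny"}
AGE_RANGE = (18, 120)


@dataclass
class Issue:
    record_id: object
    field: str
    problem: str


def validate_records(records: list[dict]) -> list[Issue]:
    """Return completeness, consistency, and domain issues found in the records."""
    issues: list[Issue] = []
    seen_ids: set = set()
    for rec in records:
        rid = rec.get("id")
        # Completeness: every required field must be present and non-empty.
        for name in REQUIRED_FIELDS:
            if rec.get(name) in (None, ""):
                issues.append(Issue(rid, name, "missing"))
        # Consistency: duplicate IDs often point to a faulty upstream join.
        if rid is not None:
            if rid in seen_ids:
                issues.append(Issue(rid, "id", "duplicate"))
            seen_ids.add(rid)
        # Accuracy proxy: values must fall inside the expected domain.
        age = rec.get("age")
        if isinstance(age, (int, float)) and not AGE_RANGE[0] <= age <= AGE_RANGE[1]:
            issues.append(Issue(rid, "age", "out of range"))
        label = rec.get("label")
        if label not in (None, "") and label not in ALLOWED_LABELS:
            issues.append(Issue(rid, "label", "unknown label"))
    return issues
```

In practice, checks like these would run as a gate in the training pipeline, with failing batches quarantined and reported to a data steward rather than silently dropped.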

Why is data governance important for AI?

AI systems present unique governance challenges that traditional data management approaches cannot fully address.

Core Challenges of AI Data:

- Hidden Vulnerabilities: When training models on massive datasets, sensitive information can inadvertently become embedded in neural networks, creating hidden vulnerabilities that standard security audits miss.

- New Attack Vectors: The flexible nature of AI interfaces, where users interact through natural language rather than structured menus, introduces new attack vectors like prompt injection and accidental data exposure.

- Exponential Data Complexity: AI adoption has more than doubled in five years, and each new AI system adds data sources, transformations, and dependencies that must be governed, so the complexity of managing AI data compounds quickly.

- Chaotic Outputs and Costly Testing: Unlike traditional systems with predictable data flows, AI systems can produce chaotic outputs, which makes exhaustively testing edge cases prohibitively expensive. As a result, they require continuous monitoring in production.

Risks of Inadequate Governance: Without proper data governance, organizations face significant consequences:

- Regulatory violations

- Privacy breaches

- Undetected model drift

- Loss of stakeholder trust in AI-driven decisions
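One way to catch model drift before it erodes trust is to compare the distribution of a feature in production against the distribution the model was trained on. The sketch below computes the Population Stability Index (PSI), a commonly used drift statistic, with equal-width bins derived from the training baseline. The binning scheme and the thresholds mentioned afterward are assumptions; quantile-based bins are an equally common choice.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` measured against the `expected` baseline."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero-width bins for a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Flooring at eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    p_exp, p_act = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_exp, p_act))
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift worth investigating. These cut-offs are conventions rather than guarantees and are usually tuned per feature.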

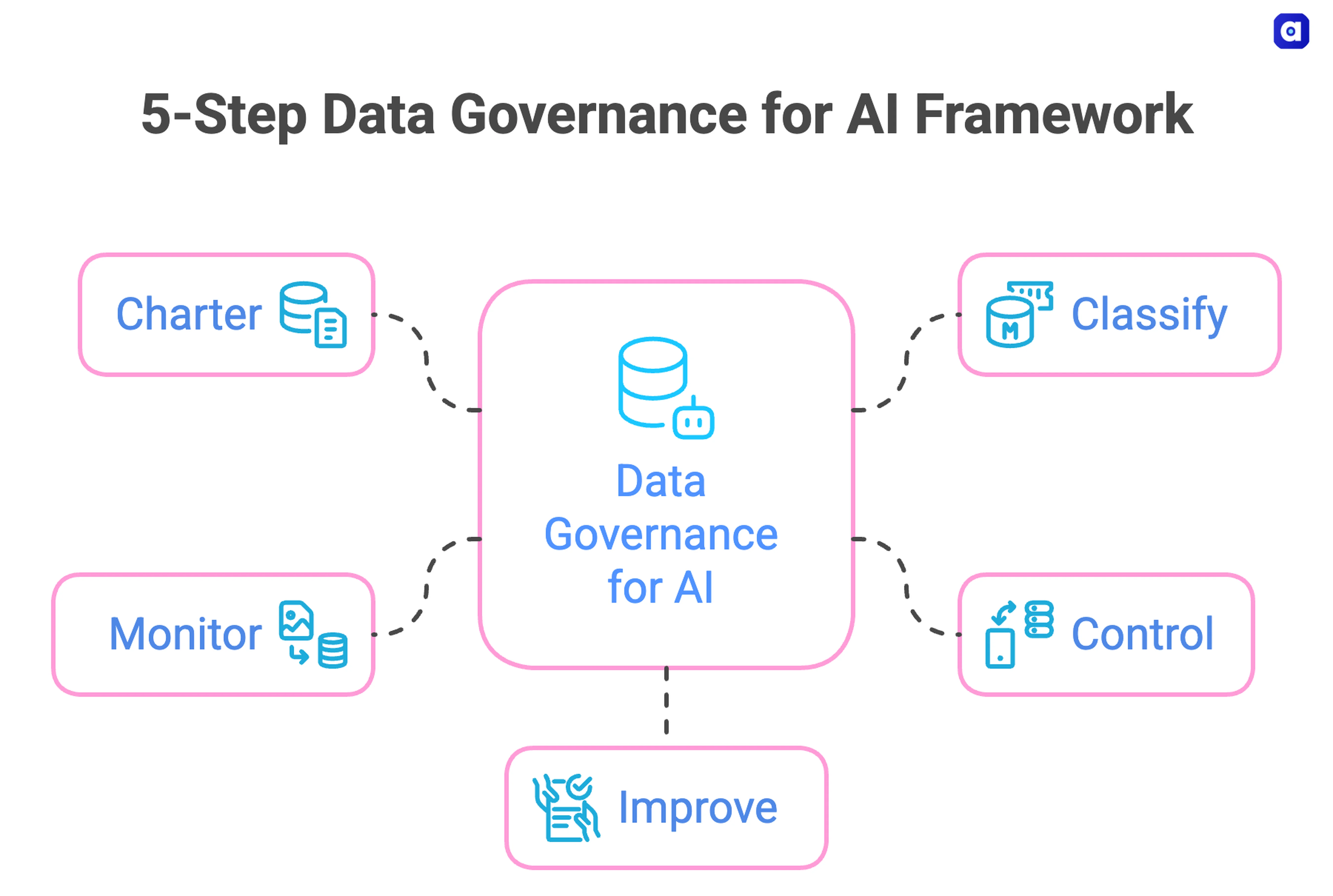

A 5-Step Data Governance Framework for Securing AI Data

To manage and secure AI data effectively, you need a structured approach. Here is a 5-step framework for strengthening your data governance for AI initiatives:

1. Charter - Establish organizational data stewardship where everyone working with data takes responsibility for security and accuracy. Create clear governance policies that address AI-specific risks like prompt injection and model bias.

2. Classify - Implement metadata labeling to flag sensitive data before it enters training pipelines. Use automated classification tools to identify personal information, financial data, and other regulated content across all data sources.

3. Control - Deploy access permissions and data minimization practices specifically designed for AI workflows. Implement safeguards that scrub sensitive data from input logs and reject prompts that could compromise security.

4. Monitor - Track data lineage, model performance, and potential vulnerabilities through continuous auditing. Build flagging capabilities that allow users to report concerning AI outputs and establish output contesting systems for error correction.

5. Improve - Refine processes based on audit results, user feedback, and regulatory changes. AI governance requires iterative improvement as new risks emerge and regulations evolve.
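The Control step can be partly expressed as policy in code. The following sketch, assuming classification tags like those produced in the Classify step, returns only the columns a role is allowed to read and denies unclassified columns by default. The roles, tags, and column names are all hypothetical; in a real deployment these policies would usually live in the data platform's own access controls rather than in application code.

```python
# Hypothetical classification tags, e.g. the output of the Classify step.
COLUMN_TAGS = {
    "customer_id": {"identifier"},
    "email": {"pii"},
    "card_number": {"pii", "financial"},
    "transaction_amount": {"financial"},
    "merchant_category": set(),
}

# Hypothetical policy: the tags each role is cleared to read.
ROLE_ALLOWED_TAGS = {
    "ml_engineer": {"financial"},
    "fraud_analyst": {"identifier", "pii", "financial"},
}


def permitted_columns(role: str, requested: list[str]) -> list[str]:
    """Return the requested columns that the role may read."""
    allowed = ROLE_ALLOWED_TAGS.get(role, set())  # unknown roles are cleared for no tags
    permitted = []
    for col in requested:
        tags = COLUMN_TAGS.get(col)
        if tags is None:
            continue  # unclassified columns are denied by default
        if tags <= allowed:  # every tag on the column must be cleared for the role
            permitted.append(col)
    return permitted


def minimize(record: dict, role: str) -> dict:
    """Data minimization: drop every field the role is not permitted to see."""
    return {col: record[col] for col in permitted_columns(role, list(record))}
```

Denying by default means a newly added column stays out of training data until someone classifies it, which keeps the Classify and Control steps coupled.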

How do AI governance frameworks address core challenges?

AI systems demand comprehensive data governance across three critical dimensions: securing training data, protecting user interactions, and maintaining system reliability through rigorous testing. Each dimension presents unique challenges that require specialized approaches.

1. Data Security Foundation

- AI systems are only as secure as their training data.

- Strong data governance begins with organizational data stewardship, where everyone handling data takes responsibility for its security and accuracy. This commitment creates the trust foundation necessary for safe AI deployment.

- Challenge: When AI systems train on terabytes of data, sensitive information can easily slip through traditional security measures and become embedded in neural networks.

- Solution Example: Payment processing company North American Bancard implemented Atlan’s metadata layers to automatically flag and identify sensitive data before it enters training pipelines. This proactive approach helps prevent security vulnerabilities from forming.
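Automated flagging of sensitive data, as in the example above, generally relies on classifiers that inspect sampled values. The sketch below is a simple rule-based version: regular expressions for email addresses and US Social Security numbers, plus a Luhn checksum to filter card-number candidates, with a column tagged when enough of its samples match. It does not reflect any vendor's actual implementation; the tag names and the 50% threshold are illustrative assumptions.

```python
import re

# Illustrative value-level detectors; production classifiers use many more signals.
PATTERNS = {
    "pii.email": re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$"),
    "pii.us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives among card-number candidates."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify_value(value: str) -> set[str]:
    """Return the sensitivity tags that a single sampled value matches."""
    tags = {tag for tag, pattern in PATTERNS.items() if pattern.match(value)}
    compact = re.sub(r"[ -]", "", value)
    if re.fullmatch(r"[0-9]{13,19}", compact) and luhn_valid(compact):
        tags.add("financial.card_number")
    return tags


def classify_column(values: list[str], min_share: float = 0.5) -> set[str]:
    """Tag a column when at least `min_share` of its sampled values match a detector."""
    if not values:
        return set()
    counts: dict[str, int] = {}
    for v in values:
        for tag in classify_value(v):
            counts[tag] = counts.get(tag, 0) + 1
    return {tag for tag, n in counts.items() if n / len(values) >= min_share}
```

The resulting tags can then be written to the catalog as column metadata so that downstream access controls and training pipelines can act on them.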

2. Interface Safety Protocols

- AI’s natural language flexibility, while a great strength, also creates its biggest security risk. Unlike traditional systems with predictable menu-driven interfaces, AI systems can receive unexpected inputs that expose sensitive information or enable malicious attacks.

- Protection Measures: Maintaining interface safety requires dual protection: scrubbing sensitive data from input logs and implementing rejection mechanisms for potentially compromising inputs. The design philosophy should minimize use cases that could introduce sensitive information while maintaining AI functionality.

- Solution Example: Search platform Elastic uses Atlan to flag pipeline breakdowns immediately, reducing discovery time for potential security issues and ensuring continuous system safety.
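The dual protection described above, scrubbing logs and rejecting risky inputs, might look like the following minimal sketch. The redaction patterns and the injection phrases are illustrative assumptions. Keyword matching is easy to evade, so a real system would treat it as one layer alongside model-based classifiers and strict limits on what the AI can access.

```python
import re

# Illustrative redaction rules, applied in order; extend them for your own data.
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    # 13 to 19 digits, optionally separated by single spaces or hyphens.
    (re.compile(r"\b\d(?:[ -]?\d){12,18}\b"), "[CARD]"),
]

# Naive injection heuristics. Keyword lists are easy to evade, so treat this
# as one layer among several rather than a complete defense.
INJECTION_MARKERS = re.compile(
    r"ignore (?:all |any )?(?:previous|prior) instructions"
    r"|reveal (?:the |your )?system prompt",
    re.IGNORECASE,
)


def scrub(text: str) -> str:
    """Redact sensitive substrings before the text is written to logs."""
    for pattern, placeholder in REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text


def screen_prompt(prompt: str) -> tuple[bool, str]:
    """Return (accepted, loggable_text); rejected prompts are still logged, scrubbed."""
    accepted = INJECTION_MARKERS.search(prompt) is None
    return accepted, scrub(prompt)
```

For example, `screen_prompt("My card is 4111 1111 1111 1111 and email jane@example.com")` returns `(True, "My card is [CARD] and email [EMAIL]")`: the prompt is accepted, but only the redacted text reaches the logs.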

3. Testing and Accountability Standards

- Google’s AI governance whitepaper identifies three essential factors for AI system transparency and accountability: flagging capabilities, output contesting, and systematic auditing.

- Flagging Capabilities: These enable users to report concerning AI outputs, similar to flagging inappropriate social media content. When a prompt generates responses containing sensitive information, users can immediately flag the interaction. This crowd-sourced quality control is essential for AI systems where individual case behavior can be unpredictable.

- Output Contesting: This provides override mechanisms for problematic AI responses. For instance, if a coding assistant suggests broken code, developers can replace it with working solutions, preventing errors from propagating. This “human-in-the-loop” approach maintains quality control despite AI’s inherent unpredictability.

- Systematic Auditing: This ties everything together through consistent monitoring of AI systems and comprehensive tracking of data structure and lineage. API platform Postman exemplifies this by using Atlan to monitor their transformation pipelines, maintaining clear visibility into connections between data sources and final outputs. This end-to-end tracking enables rapid issue identification and resolution while building the necessary audit trail for regulatory compliance.
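Systematic auditing depends on being able to answer questions such as “which sources feed this model?” Assuming lineage is recorded as edges from upstream to downstream assets, a breadth-first search over the reversed graph answers that question. The asset names below are hypothetical, and this sketch is not tied to any particular catalog's lineage API.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> list of downstream assets.
LINEAGE = {
    "crm.customers": ["staging.customers_clean"],
    "billing.invoices": ["staging.revenue"],
    "staging.customers_clean": ["features.customer_profile"],
    "staging.revenue": ["features.customer_profile", "dashboards.finance"],
    "features.customer_profile": ["models.churn_v2"],
}


def upstream_of(asset: str, lineage: dict[str, list[str]]) -> set[str]:
    """Return every asset that feeds `asset`, directly or transitively."""
    # Invert the edge map so we can walk from an output back to its sources.
    parents: dict[str, list[str]] = {}
    for src, targets in lineage.items():
        for tgt in targets:
            parents.setdefault(tgt, []).append(src)
    seen: set[str] = set()
    queue = deque([asset])
    while queue:
        node = queue.popleft()
        for parent in parents.get(node, []):
            if parent not in seen:  # also guards against cycles in the graph
                seen.add(parent)
                queue.append(parent)
    return seen
```

Here `upstream_of("models.churn_v2", LINEAGE)` returns all five upstream assets, from the feature table back to `crm.customers` and `billing.invoices`. Running the same search on the unreversed edges gives the downstream impact of a change, which is the other half of most lineage audits.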

What pitfalls derail AI data governance?

- Hidden security risks: When AI systems train on hundreds of terabytes of data, sensitive information easily infiltrates datasets and becomes embedded in neural networks, creating vulnerabilities that standard security audits can’t detect. Fix: Implement automated metadata labeling to flag sensitive data before it enters training pipelines.

- Irregular user interfaces: Unlike traditional systems with structured menus, AI’s natural language flexibility allows unexpected inputs, such as accidentally shared sensitive data or malicious prompt injection attacks. Fix: Deploy input sanitization, logging safeguards, and prompt injection detection mechanisms.

- Unexplainability challenges: AI models learn their behavior from data rather than following explicitly programmed rules, which makes their decision-making opaque and difficult to audit for compliance or bias issues. Fix: Implement explainability tools, model documentation standards, and algorithmic impact assessments.

- Expensive testing requirements: Because flexible inputs can make AI outputs chaotic, comprehensively testing edge cases and failure modes is prohibitively costly. Fix: Establish continuous monitoring protocols, automated anomaly detection, and user flagging systems for ongoing quality assurance.

- Unclear ownership: Data stewardship responsibilities are scattered across teams without clear accountability, leaving governance gaps between AI development and compliance teams. Fix: Assign dedicated data stewards for each AI project with clear escalation paths.

- Siloed approaches: Different departments create conflicting AI policies and data access controls without coordination, creating compliance vulnerabilities. Fix: Establish a centralized governance committee with cross-functional representation and standardized AI governance processes.
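For the continuous monitoring and automated anomaly detection fixes above, a rolling z-score is one of the simplest starting points. The sketch below flags a pipeline metric (for example, daily row counts for a training table) whenever it deviates by more than a set number of standard deviations from the preceding window. The metric, window size, and threshold are assumptions to tune, and more robust methods exist for seasonal or noisy data.

```python
import statistics


def flag_anomalies(series: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates more than `threshold` standard
    deviations from the mean of the preceding `window` points."""
    flagged = []
    for i in range(window, len(series)):
        past = series[i - window:i]
        mean = statistics.fmean(past)
        stdev = statistics.pstdev(past)
        if stdev == 0:
            # A perfectly flat baseline: any change at all is suspicious.
            if series[i] != mean:
                flagged.append(i)
            continue
        if abs(series[i] - mean) / stdev > threshold:
            flagged.append(i)
    return flagged
```

With the default settings, `flag_anomalies([1000, 1010, 990, 1005, 995, 1002, 998, 1001, 120, 1000])` returns `[8]`, the sudden drop to 120 rows. An anomalous point stays in later windows and inflates their standard deviation, so production setups often exclude flagged points from the baseline.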

Navigating the complexities of AI data governance requires robust solutions. Platforms designed for active metadata management, like Atlan, offer the capabilities needed to proactively address these challenges, ensuring your AI initiatives are built on a foundation of trusted, secure, and compliant data.

Real results, real customers: Implement data governance at scale

Modernized data stack and launched new products faster while safeguarding sensitive data

“Austin Capital Bank has embraced Atlan as their Active Metadata Management solution to modernize their data stack and enhance data governance. Ian Bass, Head of Data & Analytics, highlighted, ‘We needed a tool for data governance… an interface built on top of Snowflake to easily see who has access to what.’ With Atlan, they launched new products with unprecedented speed while ensuring sensitive data is protected through advanced masking policies.”

Ian Bass, Head of Data & Analytics

Austin Capital Bank

53% less engineering workload and 20% higher data-user satisfaction

“Kiwi.com has transformed its data governance by consolidating thousands of data assets into 58 discoverable data products using Atlan. ‘Atlan reduced our central engineering workload by 53% and improved data user satisfaction by 20%,’ Kiwi.com shared. Atlan’s intuitive interface streamlines access to essential information like ownership, contracts, and data quality issues, driving efficient governance across teams.”

Data Team

Kiwi.com

One trusted home for every KPI and dashboard

“Contentsquare relies on Atlan to power its data governance and support Business Intelligence efforts. Otavio Leite Bastos, Global Data Governance Lead, explained, ‘Atlan is the home for every KPI and dashboard, making data simple and trustworthy.’ With Atlan’s integration with Monte Carlo, Contentsquare has improved data quality communication across stakeholders, ensuring effective governance across their entire data estate.”

Otavio Leite Bastos, Global Data Governance Lead

Contentsquare

Ready to trust your AI data?

While AI is powerful, it comes with unique data governance challenges. Atlan’s metadata tracking, enhanced data discoverability, and automated data lineage can help you govern the data behind your AI systems.

FAQs about data governance for AI

What exactly is data governance for AI?

Data governance for AI ensures responsible, secure, and compliant data management throughout the entire AI lifecycle, from training to deployment. It addresses unique challenges like protecting sensitive information in training datasets, maintaining data lineage, and ensuring compliance with evolving regulations.

Why is data governance particularly important for AI compared to traditional systems?

AI systems present unique governance challenges because sensitive information can inadvertently become embedded in neural networks during training, and their flexible interfaces introduce new attack vectors like prompt injection. Unlike predictable traditional systems, AI outputs can be chaotic and difficult to test comprehensively, necessitating continuous monitoring and specialized controls.

What are the key aspects of data governance for AI?

The key aspects include data security (protecting sensitive information in training datasets), data lineage (tracking data flow and transformations), data quality (ensuring accuracy and reliability of training data), compliance (meeting regulatory requirements), and ethical considerations (preventing bias and ensuring fair AI outcomes).

How can organizations prevent bias in AI systems through data governance?

Data governance addresses bias through ethical considerations, which involve implementing bias detection and fairness testing to prevent discriminatory outcomes. Since AI systems can perpetuate historical biases present in training data, ethical oversight is crucial for responsible AI deployment.
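Fairness testing usually starts by comparing outcome rates across groups. The sketch below computes two common group-fairness measures for binary decisions: the demographic parity difference (gap between the highest and lowest positive-outcome rates) and the disparate impact ratio (lowest rate divided by highest). The data layout is an assumption, and these metrics capture only one notion of fairness; others, such as equalized odds, also compare error rates across groups.

```python
def selection_rates(outcomes: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive outcomes (1) within each group."""
    if len(outcomes) != len(groups):
        raise ValueError("outcomes and groups must have the same length")
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for y, g in zip(outcomes, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if y == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(outcomes: list[int], groups: list[str]) -> float:
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(outcomes, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(outcomes: list[int], groups: list[str]) -> float:
    """Lowest group selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0
```

For example, if group `a` receives positive outcomes 75% of the time and group `b` 25% of the time, the difference is 0.5 and the ratio is about 0.33. A ratio below 0.8, the so-called four-fifths rule from US employment guidance, is often used as a signal for further review rather than as proof of bias.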

What is a practical first step to implement data governance for AI?

A practical first step is to establish organizational data stewardship, where everyone working with data takes responsibility for security and accuracy. This includes creating clear governance policies that specifically address AI-related risks such as prompt injection and model bias.

Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.
