Why Data Quality Dashboards Matter & How They Improve Data Trust & Reliability in 2025

Last Updated on: June 30th, 2025 | 12 min read

Quick Answer: What are data quality dashboards?

Data quality dashboards provide a visual summary of the health and reliability of your data assets. They display key metrics like accuracy, completeness, and timeliness, often allowing users to drill down to the schema, table, or column level. These dashboards help teams monitor trends, identify issues quickly, and prioritize fixes.

In this article, you will learn why data quality dashboards are needed and which metrics a typical data quality dashboard showcases. You will also learn why good data quality controls and dashboards depend on a metadata foundation.

Table of contents

- Data quality dashboards explained

- Why do you need data quality dashboards?

- What are the benefits of using data quality dashboards?

- What metrics does a data quality dashboard track?

- Why is metadata essential to building effective dashboards?

- How does Atlan help with tracking and improving data quality?

- Final thoughts on data quality dashboards

- Data quality dashboards: Frequently asked questions (FAQs)

Data quality dashboards explained

Data quality dashboards help organizations keep tabs on the state of their data by visualizing quality indicators in real time. These dashboards give data teams a centralized view of data quality indicators across the environment. They typically include filters and drill-downs by dataset or column, making it easier to detect issues early and maintain trust in data used for reporting or analytics.

What do data quality dashboards actually do?

Data quality dashboards provide a centralized view of quality across your organization’s data assets. They aggregate insights into a visual, interactive format that allows for filtering and drill-downs by source, schema, table, or column.

Modern data teams use dashboards to:

- Track metrics like nulls, duplicates, and schema drift in near real time

- Identify root causes using lineage or query history

- Set service-level indicators and receive alerts when thresholds are breached

- Build internal trust in data across technical and business teams

This is a step change from the legacy approach, where issues were often discovered downstream after the damage was already done. Dashboards bring visibility upstream and enable faster action.
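As a rough illustration of the tracking and alerting items in the list above, the sketch below computes a null rate and a duplicate rate for a small in-memory table and flags any metric that crosses a threshold. The row layout, the `profile` and `breaches` helpers, and the threshold values are all hypothetical; a real dashboard would read these numbers from your warehouse or data quality tool.

```python
from collections import Counter

def profile(rows, column, key):
    """Return the null rate of `column` and the duplicate rate of `key`."""
    total = len(rows)
    if total == 0:
        return {"null_rate": 0.0, "duplicate_rate": 0.0}
    nulls = sum(1 for r in rows if r.get(column) is None)
    key_counts = Counter(r.get(key) for r in rows)
    # Every row beyond the first occurrence of a key counts as a duplicate.
    duplicates = sum(count - 1 for count in key_counts.values())
    return {"null_rate": nulls / total, "duplicate_rate": duplicates / total}

def breaches(metrics, thresholds):
    """Return the names of metrics whose value exceeds its threshold."""
    return sorted(
        name for name, value in metrics.items()
        if value > thresholds.get(name, float("inf"))
    )

# Hypothetical sample data: one missing email, one repeated order_id.
rows = [
    {"order_id": 1, "email": "a@example.com"},
    {"order_id": 2, "email": None},
    {"order_id": 2, "email": "b@example.com"},
    {"order_id": 3, "email": "c@example.com"},
]
metrics = profile(rows, column="email", key="order_id")
alerts = breaches(metrics, {"null_rate": 0.10, "duplicate_rate": 0.50})
print(metrics, alerts)
```

Here the null rate (1 of 4 rows) exceeds its 10% threshold, so only `null_rate` is reported as a breach.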

Why do you need data quality dashboards?

Over the last few years, organizations have been trying to adopt a shift-left approach to data governance, with the help of the various tools they use. A shift-left approach means that you bring the governance as close to the action as possible.

While there are many aspects of governance that need to be addressed, data quality is arguably one of the most challenging and impactful. This was reflected in dbt Labs’ 2025 State of Analytics Engineering report, where “poor data quality” was the most frequently reported obstacle preventing data teams from doing their work well, cited by over 56% of organizations.

Traditionally, the approach to data quality was that quality checks happened downstream, after data was extracted, transformed, and loaded into a data warehouse or a data lake. This approach meant that you’d have to waste a lot of compute resources to find out whether there was a data quality issue in the first place. This was a rather passive approach to data quality.

More recently, tracking data quality has become much more active and now looks more like application or database monitoring. Just as observability platforms like Grafana let you create dashboards to observe the key performance and usage metrics of apps and databases, data platforms now have a growing need for data quality dashboards, where data quality metrics are actively tracked and reported.

What are the benefits of using data quality dashboards?

As highlighted earlier, you need data quality dashboards to track and observe key data quality metrics, so that you can detect abnormalities early and deploy fixes if and when required.

Having data quality dashboards also helps you prevent unnecessary compute and processing costs for data quality tests and checks after the fact.

This ties back to the shift-left approach to data quality, which is similar to shift-left in governance: you try to measure all the key data quality metrics as close to the source of data as possible. That includes checking the structural compliance of data against the data contracts and the service levels for various data assets and fields, among other things.

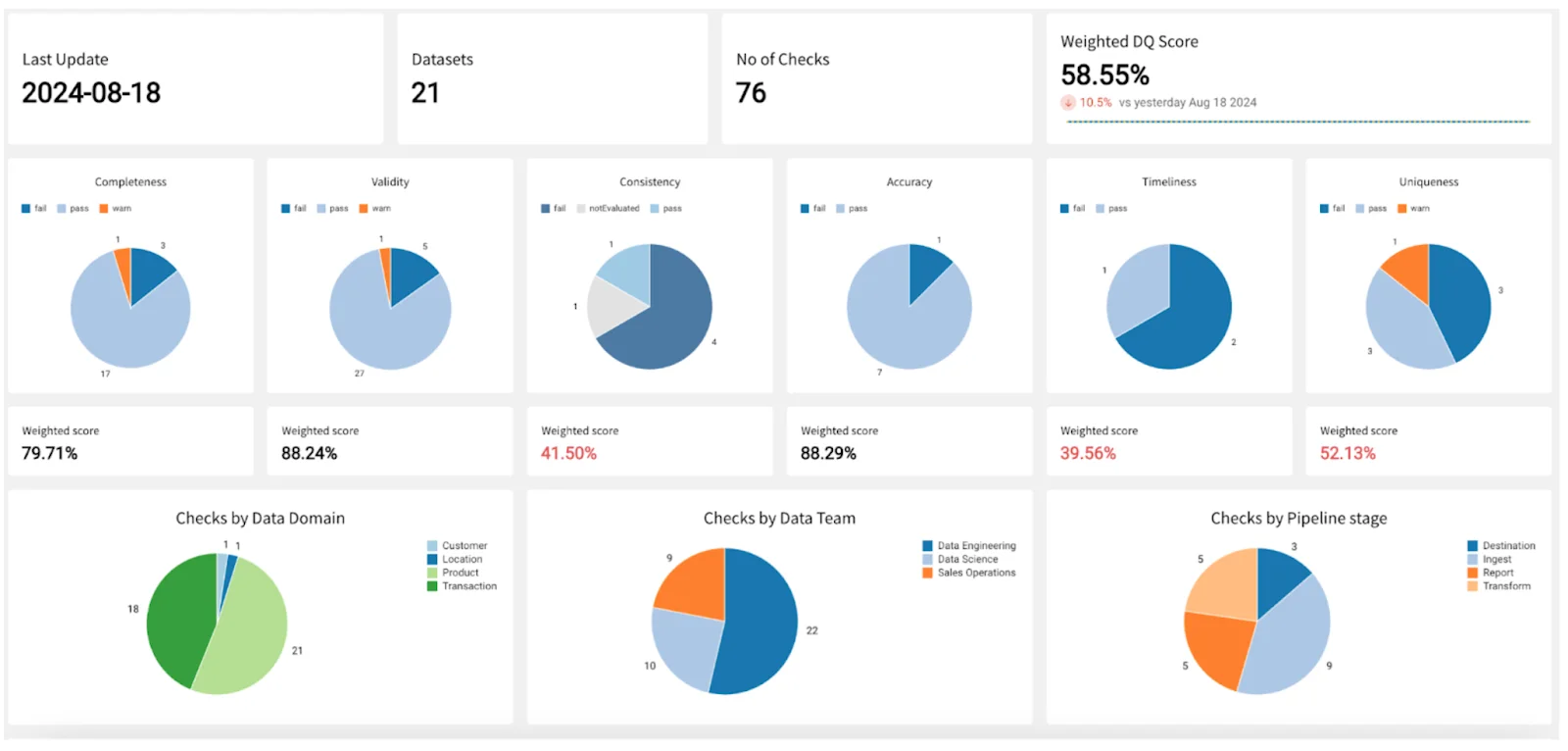

For reference, here is what a data quality dashboard looks like in Soda, which is a data quality monitoring tool.

Data quality dashboard in Soda - Source: Soda.

Aside from the efficiency gains, data quality dashboards help enable transparency within an organization and build trust in data with an easy-to-use visual interface. As a result, stakeholders have higher confidence in using data for key decisions, and the data analysts and engineers who develop and maintain the data assets on a day-to-day basis gain more trust in them too.

With that in mind, let’s look at some of the metrics that help you build this trust in data within your organization.

What metrics does a data quality dashboard track?

Before setting up a holistic view into your organization’s data quality, you must ensure that all of your data assets are cataloged and discoverable from a single place. You need one place where all the metadata is stored, because that metadata becomes the foundation for building and executing continuous data quality tests on the data.

After that, you can track data quality using metrics that roughly fall into one of the following categories:

- Structure: Involves continuously monitoring whether your data pipeline complies with its data contracts, both in the overall structure of the data assets and in the individual properties of the fields. This is the basis for completeness, accuracy, validity, integrity, and consistency tests on your data.

- Lineage: Involves running continuous data quality tests to detect breaks and inconsistencies in your organization’s data lineage, i.e., to answer questions like:

  - Where is the data coming from?

  - How has it been transformed?

  - What explicit actions have been taken on the data?

  - How has it been enriched?

  Data owners can also get alerts and notifications based on any data quality issues, so that they can analyze the impact and prevent issues cascading downstream.

- Aggregate: Helps you detect anomalies and track patterns for specific data assets and fields, where there might not be a strict data contract but there might be a service level agreement. This helps you proactively raise alarms about potential issues that might break things if left unhandled. An example would be calculating the count and percentage of unique values and null values in your data asset.

- Frequency: Involves continuously monitoring the freshness of data, which is one of the key things that most organizations seek nowadays. That’s because of a move towards streaming-first or more real-time data requirements for quicker and better decision-making.
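To make the Structure and Frequency categories above a little more concrete, here is a minimal sketch of a contract check and a freshness check. The `CONTRACT` mapping, the column names, and the one-hour freshness window are hypothetical stand-ins for whatever your own data contracts and service levels specify.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical data contract: column name -> (expected type, nullable?)
CONTRACT = {
    "order_id": (int, False),
    "amount": (float, False),
    "coupon": (str, True),
}

def contract_violations(row, contract=CONTRACT):
    """Structure check: list the ways a row breaks the contract."""
    problems = []
    for column, (expected_type, nullable) in contract.items():
        if column not in row:
            problems.append(f"{column}: missing")
        elif row[column] is None:
            if not nullable:
                problems.append(f"{column}: null not allowed")
        elif not isinstance(row[column], expected_type):
            problems.append(f"{column}: expected {expected_type.__name__}")
    return problems

def is_fresh(last_loaded_at, now, max_age):
    """Frequency check: was the asset refreshed within its allowed age?"""
    return now - last_loaded_at <= max_age

now = datetime(2025, 6, 30, 12, 0, tzinfo=timezone.utc)
good = contract_violations({"order_id": 1, "amount": 9.5, "coupon": None})
bad = contract_violations({"order_id": "1", "amount": None})
fresh = is_fresh(now - timedelta(minutes=45), now, max_age=timedelta(hours=1))
print(good, bad, fresh)
```

The second row fails three ways: a wrongly typed `order_id`, a null `amount`, and a missing `coupon` column. A dashboard would typically show the count or share of rows with such violations rather than the raw list.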

Examples of data quality metrics that a data quality dashboard would track

Common metrics to include on a data quality dashboard are completeness, uniqueness, freshness, accuracy, and timeliness, among others.

Moreover, you can include additional data quality metrics based on the data that the asset contains. For instance, you can have a test that checks whether a column only includes data from a predefined list of values.
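A predefined-list test like this can be sketched in a few lines. The `invalid_values` helper and the allowed statuses are hypothetical, and the check is deliberately case-sensitive, which is an assumption you may want to relax.

```python
def invalid_values(values, allowed):
    """Return the distinct non-null values that fall outside the allowed set."""
    return sorted({v for v in values if v is not None and v not in allowed})

ALLOWED_STATUSES = {"pending", "shipped", "delivered"}  # hypothetical list
statuses = ["pending", "shipped", "SHIPPED", None, "returned", "delivered"]
unexpected = invalid_values(statuses, ALLOWED_STATUSES)
print(unexpected)
```

Nulls are skipped here on purpose so that missing values are counted by a completeness metric and not double-counted as invalid.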

For this and other metrics, you’ll need to set a baseline depending on service level or handshake agreements. Using that baseline, you can track metrics on the data quality dashboard. This way, you’ll be able to identify when the data quality breaches the threshold, triggering an alert or notification so that the data quality doesn’t have cascading side effects downstream.
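The baseline-and-threshold idea can be sketched as follows. The `Baseline` class, the 98% completeness target, and the one-percentage-point tolerance are hypothetical values standing in for whatever your service level or handshake agreement specifies.

```python
from dataclasses import dataclass

@dataclass
class Baseline:
    metric: str
    expected: float   # agreed value, for example from a service level agreement
    tolerance: float  # allowed absolute deviation from the expected value

def evaluate(baseline, observed):
    """Return an alert message if `observed` is outside tolerance, else None."""
    deviation = abs(observed - baseline.expected)
    if deviation > baseline.tolerance:
        return (
            f"{baseline.metric}: observed {observed:.2%}, "
            f"expected {baseline.expected:.2%} within {baseline.tolerance:.2%}"
        )
    return None

completeness = Baseline(metric="email completeness", expected=0.98, tolerance=0.01)
daily_observations = [0.985, 0.979, 0.94]  # hypothetical daily readings
results = [evaluate(completeness, value) for value in daily_observations]
alerts = [message for message in results if message is not None]
print(alerts)
```

Only the third reading breaches the tolerance, so a single alert is produced. In practice that message would be routed to a notification channel instead of being printed.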

Why is metadata essential to building effective dashboards?

To have a data quality dashboard that tracks metrics under these categories, you need a wealth of metadata from all your data sources and the right set of connectors that can push down data quality metric calculations to the source systems.

A collated data dictionary isn’t enough, and neither is a technical data catalog by itself. What you need is a metadata control plane that lets you activate your metadata by letting you use it for automation in all aspects of data, especially in governance and quality.

Without a unified metadata layer, data quality metrics remain siloed. Metadata bridges the gap between observability and actionability.

A metadata control plane aggregates technical and business metadata from across your data stack. This includes schema definitions, ownership, lineage, access patterns, and classifications.

That’s where a tool like Atlan comes into the picture.

How does Atlan help with tracking and improving data quality?

As a unified metadata control plane, Atlan lets you bring all your organization’s metadata into one place and apply governance, policies, quality, and security to it. The idea is to build trust and derive the maximum business value from data.

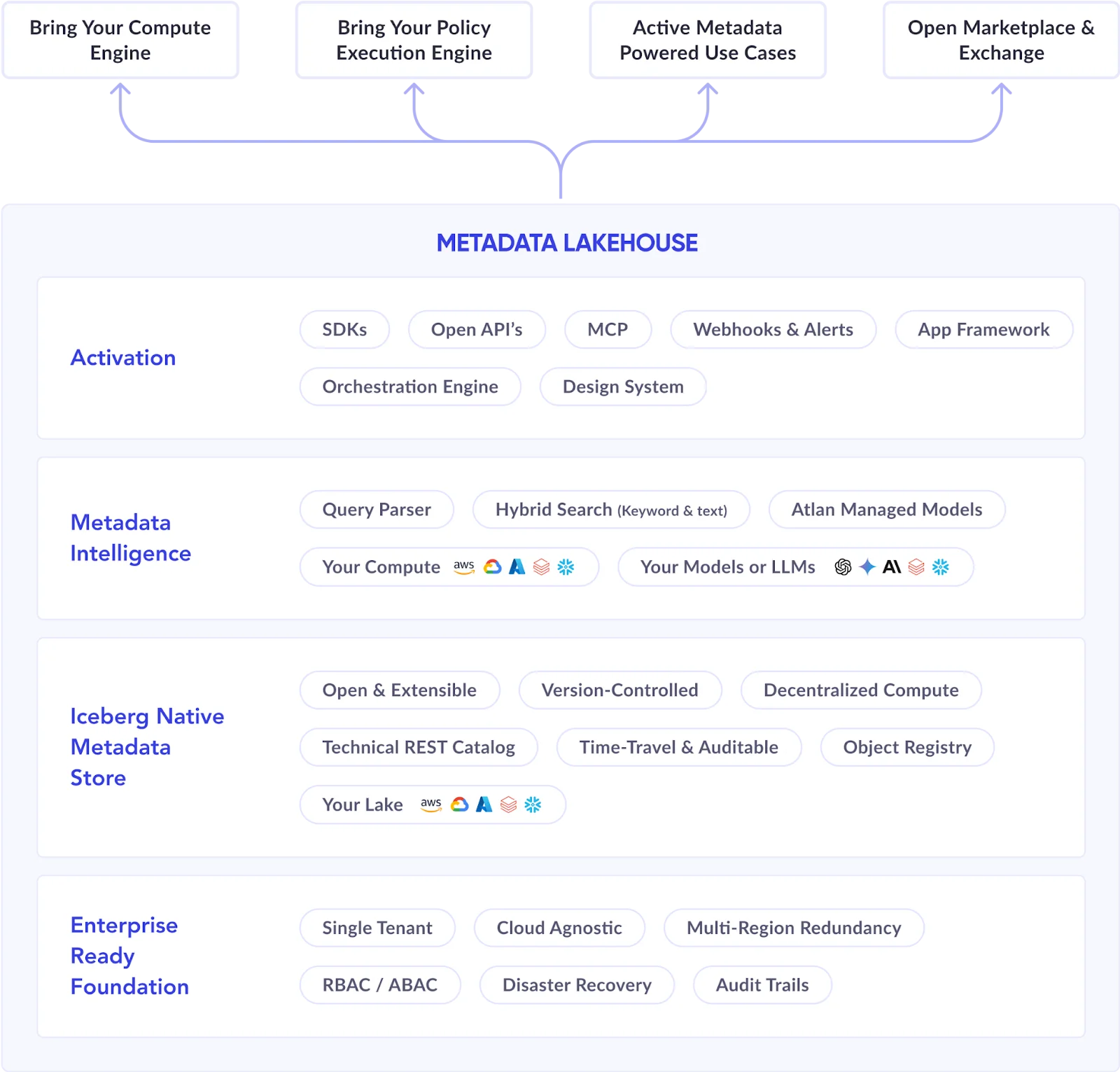

This control plane is powered by Atlan’s Metadata Lakehouse pattern, which serves as the unified context engine for your organization’s data use cases.

Atlan’s metadata-lakehouse-powered control plane for data - Image by Atlan.

Data governance and quality are at the core of metadata activation in Atlan. Whether you want to use external data quality tools like Anomalo, Soda, and Monte Carlo, or data-source-native functionality such as Snowflake’s data metric functions (DMFs), Atlan lets you do that by natively integrating with all these tools.

Take, for example, Snowflake, where Atlan’s Data Quality Studio connects with Snowflake and lets you:

- Define data quality metrics from the Data Quality Studio interface

- Define a schedule to run data quality metrics in Snowflake

- Push down the metric calculation queries to Snowflake using DMFs

- View the results of the metric calculations in a user-friendly data quality dashboard

- Assess the impact of data quality issues downstream using lineage impact analysis
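For context on what pushing a metric down to Snowflake can look like, the sketch below builds the two SQL statements that schedule and attach Snowflake’s built-in `SNOWFLAKE.CORE.NULL_COUNT` data metric function to a column. This is not a description of how Atlan’s Data Quality Studio issues its queries, which this article does not cover. The `dmf_statements` helper and the table and column names are hypothetical, and the statements are only assembled as strings, not executed.

```python
def dmf_statements(table, column, schedule="60 MINUTE"):
    """Build Snowflake SQL that sets a DMF schedule and attaches NULL_COUNT.

    Identifiers are interpolated directly for illustration only; validate or
    quote them properly before running anything like this against a database.
    """
    return [
        f"ALTER TABLE {table} SET DATA_METRIC_SCHEDULE = '{schedule}';",
        f"ALTER TABLE {table} ADD DATA METRIC FUNCTION "
        f"SNOWFLAKE.CORE.NULL_COUNT ON ({column});",
    ]

# Hypothetical table and column.
statements = dmf_statements("analytics.public.orders", "email")
for statement in statements:
    print(statement)
```

Once a schedule is set, Snowflake runs the attached function on the table at that interval, and a dashboard can chart the resulting values over time.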

All in all, you get to define, automate, and monitor business-aligned data quality rules. These rules produce metrics that you can monitor using Atlan’s Data Quality Studio, which is currently in Private Preview.

You can learn more about how Atlan’s Data Quality Studio can support your data quality workloads in both Snowflake and Databricks. Atlan announced the data quality integration for Snowflake at Snowflake Summit 2025 and the data quality integration for Databricks at the Databricks Data + AI Summit 2025.

For more information, head over to Atlan’s official documentation and product updates.

Final thoughts on data quality dashboards

This article introduced you to data quality dashboards, for which there’s a growing need as an organization’s data landscape grows with more data sources and assets.

Various studies from Gartner, Forrester, and other research firms have found that organizations usually end up paying a very high cost for bad data quality and that data quality is still one of the biggest pain points for data teams.

Better features in data platforms help solve the problem to an extent. However, without a metadata foundation, the problem isn’t solved for good, and without visibility into data quality, an organization cannot build trust in data.

That’s why data quality dashboards powered by something like Atlan’s Data Quality Studio are needed. Learn more about Atlan’s Data Quality Studio to keep bad data out of your organization.

Data quality dashboards: Frequently asked questions (FAQs)

1. What is a data quality dashboard?

A data quality dashboard is a concise, easy-to-understand visual representation of the data quality metrics for your data estate.

Such a dashboard might have various drill-down features that let you explore the metrics at a more granular level. For instance, you can have schema, table, and column-level data quality metrics.

2. What are some examples of metrics that a data quality dashboard should display?

Some commonly used data quality metrics are the percentage of null values, the percentage of unique values, and deviation from standard or expected values. While these relate to the profile of the data, there are other data quality metrics, which are more operational, such as data freshness.

3. Why are data quality dashboards important in 2025?

With more real-time pipelines and AI models relying on reliable data, visibility into quality is no longer optional. Dashboards provide the transparency needed to catch issues early, maintain stakeholder trust, and avoid costly downstream errors.

4. How do data quality dashboards help reduce incident response time?

Dashboards surface quality issues in near real time and often integrate with alerts and lineage. This allows teams to quickly trace problems to their origin, assess downstream impact, and assign fixes, reducing downtime and effort.
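Assessing downstream impact amounts to walking the lineage graph from the affected asset. The sketch below does this with a breadth-first traversal over a small, hypothetical lineage map; the asset names and the `downstream_assets` helper are illustrative and not tied to any particular tool.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets that read from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.finance"],
    "marts.customer_ltv": [],
    "dashboard.finance": [],
}

def downstream_assets(lineage, start):
    """Breadth-first walk returning every asset downstream of `start`."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

impacted = downstream_assets(LINEAGE, "staging.orders")
print(impacted)
```

An issue detected in `staging.orders` would therefore put both marts and the finance dashboard on the list of assets to review.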

5. What tools are commonly used to create data quality dashboards?

Data quality dashboards can be built using platforms like Soda, Monte Carlo, Anomalo, Bigeye, and Lightup. Many organizations integrate these tools into a metadata platform like Atlan to get a unified view that ties quality with governance.

6. Which integrations does Atlan’s Data Quality Studio support?

Atlan’s Data Quality Studio currently supports data quality metrics natively for Snowflake and Databricks, but it also integrates with third-party data quality and observability tools, such as Anomalo, Monte Carlo, and Soda.

7. What are some of the key features of the Data Quality Studio?

Atlan’s Data Quality Studio integrates with data platforms like Snowflake and Databricks, leveraging their native data quality functionality while letting users work with it directly within Atlan’s user interface.

The Data Quality Studio provides a 360° view of data quality. It lets you define data contracts near the source and integrates with lineage for an in-line view of data quality, which makes it easier to define and manage data quality for your data assets.

8. Does Atlan work with Snowflake’s native data quality functions?

Yes, Atlan leverages Snowflake’s data metric functions (DMFs) for monitoring data quality. You can define these functions as quality rules and define a schedule for them to run. Atlan’s Data Quality Studio dashboard will automatically update based on the scheduled data quality checks.

Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.

Data quality dashboards: Related reads

- Data Quality Explained: Causes, Detection, and Fixes

- Data Quality Framework: 9 Key Components & Best Practices for 2025

- Data Quality Measures: Best Practices to Implement

- Data Quality Dimensions: Do They Matter?

- Resolving Data Quality Issues in the Biggest Markets

- Data Quality Problems? 5 Ways to Fix Them

- Data Quality Metrics: Understand How to Monitor the Health of Your Data Estate

- 9 Components to Build the Best Data Quality Framework

- How To Improve Data Quality In 12 Actionable Steps

- Data Integrity vs Data Quality: Nah, They Aren’t Same!

- Gartner Magic Quadrant for Data Quality: Overview, Capabilities, Criteria

- Data Management 101: Four Things Every Human of Data Should Know

- Data Quality Testing: Examples, Techniques & Best Practices in 2025

- Atlan Launches Data Quality Studio for Snowflake, Becoming the Unified Trust Engine for AI

- Atlan Launches Data Quality Studio for Databricks, Activating Trust for the AI-Native Era