Databricks Data Access Control: A Complete Guide for 2024


The Databricks data access control model enables comprehensive oversight of all securable data objects within Databricks. It provides multiple lines of defense and fine-grained controls, including SCIM integration, identity federation, and persona-based access, to protect and manage data assets effectively.


This article explains how Databricks’ data access control works and how integrating it with Atlan’s control plane can improve organization-wide data governance.

Table of contents

- Databricks data access control: Native features

- Data access control with Databricks and Atlan: Setting up a unified control plane for data

- Summing up

- Databricks data access control: Related reads

Databricks data access control: Native features

Databricks access control centers on identity management, role-based access control (RBAC), access types, securable features, and privileges. Here’s a closer look at how these features combine to create a cohesive data access control framework in Databricks.

Databricks identity, role, and access types

Access control lists (ACLs) let you configure permissions and restrict access to workspace-level objects such as clusters, jobs, notebooks, files, models, queries, and dashboards.

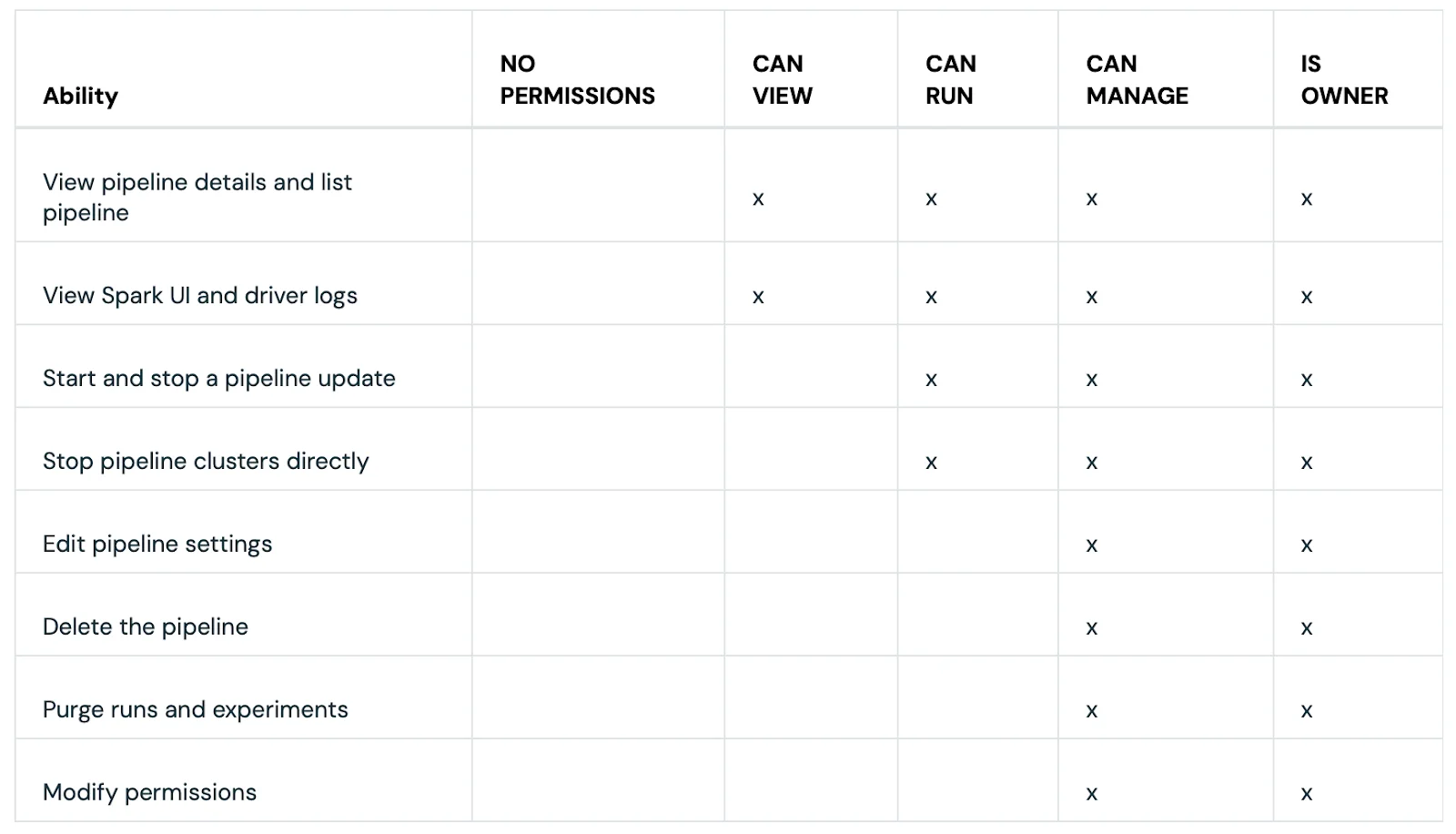

Each workspace-level object has its own set of permission levels. For example, Delta Live Tables (DLT) pipeline ACLs have these options:

- CAN VIEW

- CAN RUN

- CAN MANAGE

- IS OWNER

Delta Live Tables (DLT) pipeline ACLs - Source: Databricks Documentation.

Meanwhile, SQL warehouse ACLs have the following options:

- CAN USE

- CAN MONITOR

- IS OWNER

- CAN MANAGE

SQL warehouse ACLs - Source: Databricks Documentation.
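As a rough illustration of how these per-object permission levels could be enforced before sending an ACL change, here is a minimal Python sketch. It assumes the `access_control_list` / `permission_level` request shape used by the Databricks Permissions API and the underscore spelling of the levels (for example `CAN_RUN`); the object-type keys `pipeline` and `sql_warehouse`, the function name, and the group name are hypothetical labels for this example. Check the current API reference before relying on it.

```python
# Permission levels per object type, as listed in this article.
# Assumption: the API spells levels with underscores (CAN_RUN, not "CAN RUN").
ALLOWED_LEVELS = {
    "pipeline": {"CAN_VIEW", "CAN_RUN", "CAN_MANAGE", "IS_OWNER"},
    "sql_warehouse": {"CAN_USE", "CAN_MONITOR", "CAN_MANAGE", "IS_OWNER"},
}


def build_acl_payload(object_type, group_name, level):
    """Return a request body granting `level` to a group, or raise ValueError."""
    allowed = ALLOWED_LEVELS.get(object_type)
    if allowed is None:
        raise ValueError(f"unknown object type: {object_type}")
    if level not in allowed:
        raise ValueError(f"{level} is not a valid level for {object_type}")
    # Assumed body shape: a list of entries, each naming a principal and a level.
    return {
        "access_control_list": [
            {"group_name": group_name, "permission_level": level}
        ]
    }
```

Validating the level locally catches mistakes such as asking for `CAN_USE` on a pipeline, which only applies to SQL warehouses in the lists above.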

Databricks implements role-based access control (RBAC) for all account-level objects, including all three identity types: users, service principals, and groups.

These identities can be managed only by someone holding one of four built-in roles: account admin, workspace admin, group manager, or service principal manager.

Databricks offers several other built-in roles with much narrower permissions for specific purposes, such as:

- Metastore admin: Manage the privileges and ownership of all securable data objects within the Unity Catalog metastore.

- Billing admin: View budgets and manage budget policies across the Databricks account.

Databricks Unity Catalog securable objects and privileges

Unity Catalog privileges work slightly differently from permissions on other workspace- and account-level securable objects, because Unity Catalog's purview covers only the securable objects stored in the metastore.

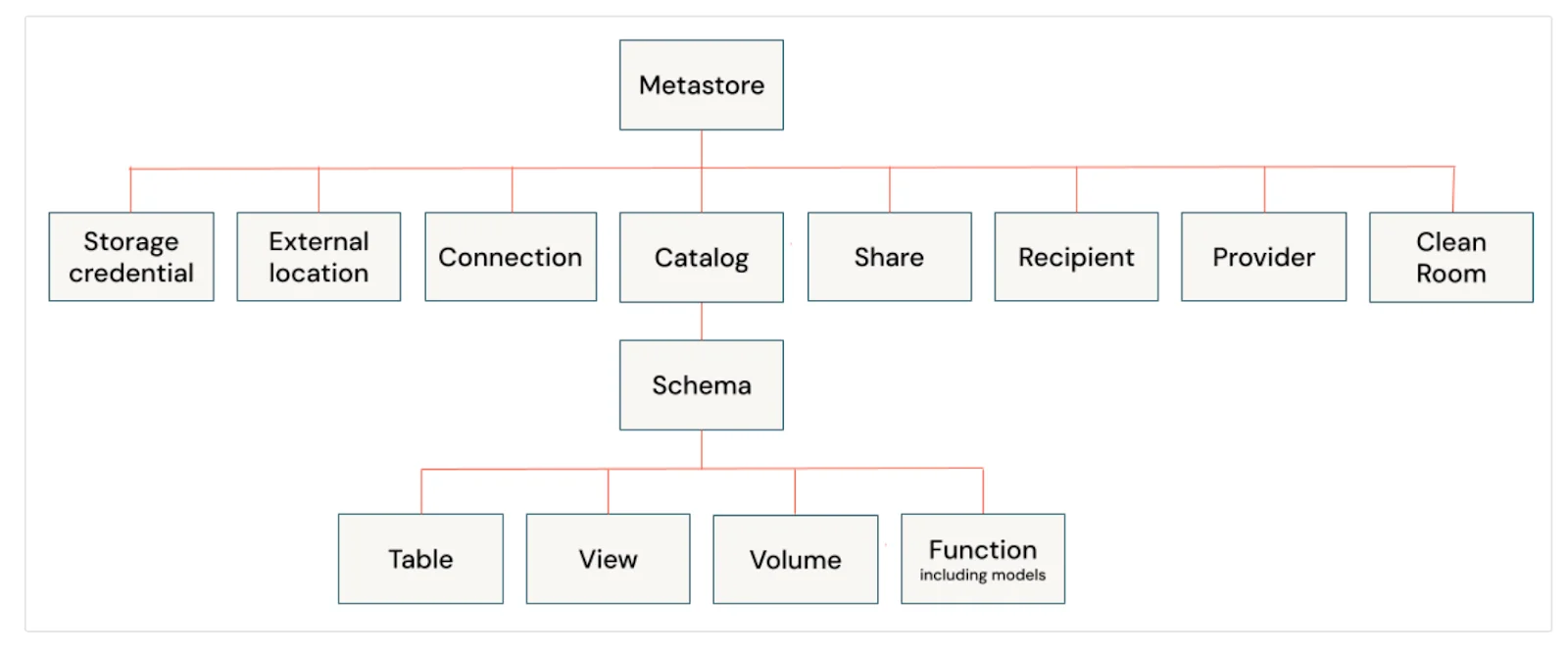

Unity Catalog manages securable objects (e.g., schemas, tables, catalogs) with a hierarchical model, governed by privileges based on an ownership system.

Securable objects in Unity Catalog - Source: Databricks Documentation.
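To make the hierarchical model concrete, the following Python sketch treats a dotted name such as `primary.sales.orders` as a path through catalog, schema, and table, and collects the privileges a principal holds on an object or on any of its parents. This is a simplified illustration only: the `grants` dictionary, the function names, and the `orders` table are hypothetical, and the sketch ignores ownership and other rules that Unity Catalog applies.

```python
def ancestors(path):
    """Yield the object itself, then each parent: 'a.b.c' -> 'a.b.c', 'a.b', 'a'."""
    parts = path.split(".")
    for end in range(len(parts), 0, -1):
        yield ".".join(parts[:end])


def effective_privileges(grants, principal, path):
    """Collect privileges granted to `principal` on `path` or on any ancestor."""
    result = set()
    for obj in ancestors(path):
        result |= grants.get((principal, obj), set())
    return result


# Hypothetical grants: one on the catalog, one on the schema.
grants = {
    ("sales_analyst", "primary"): {"USE CATALOG"},
    ("sales_analyst", "primary.sales"): {"USE SCHEMA", "SELECT"},
}

# A table inside the schema picks up the grants made on its parents.
table_privileges = effective_privileges(grants, "sales_analyst", "primary.sales.orders")
```

The point of the sketch is the lookup direction: a grant made higher in the hierarchy is visible from every object beneath it, while a grant on one schema says nothing about its siblings.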

By default, only a user with one of three roles (account admin, workspace admin, or metastore admin) can manage privileges in Unity Catalog.

Privileges on an object can be granted by the object's owner, by the owner of the catalog or schema that contains it, or by someone with the account admin or metastore admin role. When granting or revoking privileges, you need to know four things:

- Securable object type: The type of the securable object, such as `SCHEMA`, `TABLE`, `CATALOG`, etc.

- Privilege type: The type of privilege you want to apply on the securable object. For instance, for the `SCHEMA` object, you can apply privileges like `ALL PRIVILEGES`, `CREATE TABLE`, `CREATE MATERIALIZED VIEW`, etc.

- Securable object name: The name of the securable object, such as the `marketing` schema

- Principal: The identity type, i.e., user, service principal, or group for which you want to `GRANT` or `REVOKE` privilege(s)

For instance, to grant specific privileges to the sales_analyst group, you’ll need to use the following set of statements:

GRANT CREATE TABLE ON SCHEMA `primary`.`sales` TO `sales_analyst`;

GRANT USE SCHEMA ON SCHEMA `primary`.`sales` TO `sales_analyst`;

GRANT USE CATALOG ON CATALOG `primary` TO `sales_analyst`;
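If you generate such statements programmatically, the four inputs listed above map directly onto a small template. The Python sketch below is one way to do that; the function names are hypothetical, and it assumes each part of a multi-level object name is backtick-quoted separately while the principal is quoted as a single identifier. The privilege and securable type are inserted as given, so they should come from a trusted, fixed list rather than from user input.

```python
def quote_object_name(name):
    """Backtick-quote each dot-separated part: primary.sales -> `primary`.`sales`."""
    return ".".join("`" + part.replace("`", "``") + "`" for part in name.split("."))


def quote_principal(principal):
    """Quote the principal as one identifier (it may contain dots, e.g. an email)."""
    return "`" + principal.replace("`", "``") + "`"


def build_statement(action, privilege, securable_type, securable_name, principal):
    """Build a GRANT or REVOKE statement from the four inputs described above."""
    if action not in ("GRANT", "REVOKE"):
        raise ValueError("action must be GRANT or REVOKE")
    # GRANT ... TO principal, REVOKE ... FROM principal.
    preposition = "TO" if action == "GRANT" else "FROM"
    return (
        f"{action} {privilege} ON {securable_type} "
        f"{quote_object_name(securable_name)} {preposition} {quote_principal(principal)};"
    )


statements = [
    build_statement("GRANT", "USE CATALOG", "CATALOG", "primary", "sales_analyst"),
    build_statement("GRANT", "USE SCHEMA", "SCHEMA", "primary.sales", "sales_analyst"),
    build_statement("GRANT", "CREATE TABLE", "SCHEMA", "primary.sales", "sales_analyst"),
]
```

Passing `"REVOKE"` as the action produces the matching statement to withdraw a privilege from the same principal.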

Combining all these aspects of identity, privileges, and securable objects, Databricks offers a solid RBAC framework.

Also, read → Databricks data catalog 101

However, while Databricks excels at managing data assets within its environment, organizations often need a neutral control plane to apply consistent policies across their entire data ecosystem.

Such a control plane sits across every tool that holds data assets, so you can apply the same policies to all of them. Let’s see how.

Data access control with Databricks and Atlan: Setting up a unified control plane for data

A control plane for data is a governance and management layer that lets you manage every component of your data ecosystem: transformation tools, job orchestrators, and, most importantly, the core data platform, which for the scope of this article is Databricks.

Atlan serves as this control plane, integrating data asset, usage, and governance metadata from Databricks and other tools to create a centralized “lakehouse for metadata.” This setup provides a unified interface to search, discover, and govern data across your organization.

Key concepts enabling Atlan’s integration with Databricks include:

- User roles: Every Atlan user needs to map to one of these predefined roles: Admin, Member, or Guest. You can also use user groups to organize all of your Atlan users better.

- Personas: Personas help you manage access by letting you curate data assets and attach various types of access policies to them, which gives you fine-grained access control.

- Purposes: Purposes allow you to group data assets by team, project and domain, among other things. They also help you implement granular access control, especially when dealing with sensitive data.

In addition to this, Atlan takes a simple but robust approach to the principle of least privilege by following these three rules:

- Access is denied by default

- Explicit grants or allows are combined, i.e., they form a superset of grants or allows

- Explicit restrictions or denies take priority over existing grants or allows
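The three rules above can be sketched as a small evaluation function. This is a conceptual Python illustration, not Atlan's API or implementation: the `Policy` class, its fields, and the action names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    effect: str          # "allow" or "deny"
    actions: frozenset   # actions this policy covers, e.g. frozenset({"read"})


def allowed_actions(policies):
    """Apply the three rules: default deny, union of allows, deny takes priority."""
    allows, denies = set(), set()
    for policy in policies:
        if policy.effect == "allow":
            allows |= policy.actions      # rule 2: allows accumulate
        elif policy.effect == "deny":
            denies |= policy.actions      # rule 3: denies are tracked separately
    return allows - denies                # anything never allowed stays denied (rule 1)


def is_allowed(policies, action):
    """Return True only if some policy allows the action and no policy denies it."""
    return action in allowed_actions(policies)


# Two allow policies combine, then one deny removes "update".
policies = [
    Policy("allow", frozenset({"read"})),
    Policy("allow", frozenset({"read", "update"})),
    Policy("deny", frozenset({"update"})),
]
```

With these policies, `read` is permitted, `update` is blocked by the explicit deny, and any action that no policy mentions is refused by default.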

Atlan’s integration with Databricks is effective because Atlan draws on the metadata that Databricks captures in its system tables. Setting up and working with an Atlan + Databricks connection is also straightforward and well documented.

Also, read → Databricks connectivity 101

Summing up

Databricks provides a solid framework for data access control, enabling RBAC to secure data assets within its environment.

However, a comprehensive data governance strategy often requires a unified control plane to manage assets across various tools in the data ecosystem. Atlan fulfills this role, acting as a centralized control plane that enhances Databricks access control and provides consistent governance for all data assets organization-wide.

For more details on how Atlan integrates with Databricks, explore the official documentation on the Atlan + Databricks integration.

Databricks data access control: Related reads

- Databricks Data Security: A Complete Guide for 2024

- Data Governance and Compliance: An Act of Checks and Balances

- Data Compliance Management: Concept, Components, Steps (2024)

- Databricks Unity Catalog: A Comprehensive Guide to Features, Capabilities, Architecture

- Data Catalog for Databricks: How To Setup Guide

- Databricks Lineage: Why is it Important & How to Set it Up?

- Databricks Governance: What To Expect, Setup Guide, Tools

- Databricks Metadata Management: FAQs, Tools, Getting Started

- Databricks Cost Optimization: Top Challenges & Strategies

- Databricks Data Mesh: Native Capabilities, Benefits of Integration with Atlan, and More

- Data Catalog: What It Is & How It Drives Business Value
