Working with Apache Iceberg in Google BigQuery: A Complete Guide for 2025


Google Cloud first ventured into Apache Iceberg support in late 2022, when it announced the Google BigLake + Apache Iceberg integration. The BigLake integration has matured since then and now supports creating Iceberg tables using BigLake’s custom Iceberg catalog, AWS Glue Data Catalog, or plain Iceberg metadata JSON files that store table metadata.


In this article, we’ll explain how to work with Apache Iceberg specifically when using Google BigQuery. We’ll also explore the need for a unified control plane for data to support your organization’s wider data ecosystem and not just Apache Iceberg-based data tools.

Table of Contents

- Apache Iceberg in Google BigQuery: How does it work?

- Apache Iceberg + BigQuery: Using BigQuery metastore for Iceberg tables

- Apache Iceberg in Google BigQuery: Why you need a metadata control plane for this integration

- Apache Iceberg in Google BigQuery: Summing up

- FAQs about using Apache Iceberg with Google BigQuery

- Working with Apache Iceberg in Google BigQuery: Related reads

Apache Iceberg in Google BigQuery: How does it work?

Previously called BigQuery tables for Apache Iceberg, Iceberg tables for Google BigQuery lay the foundation for an open-format data lakehouse architecture on Google Cloud.

In the past, the Google BigLake + Iceberg integration was limited: it didn’t let you create Iceberg tables in BigQuery using external query engines such as Apache Spark, Trino, and Presto.

BigLake external tables also allow only read-only access to Iceberg tables. Because BigLake had no engine of its own for writing to Iceberg tables, you had to write those tables using another Iceberg client.

More recently, Google Cloud has substantially addressed these limitations by offering three different ways to work with Apache Iceberg in Google BigQuery and Google BigLake:

- BigLake external tables for Apache Iceberg: To create external tables on top of Iceberg data already stored in Google Cloud Storage

- Iceberg tables for BigQuery: To allow direct reads and writes on Iceberg tables hosted in Google Cloud Storage

- BigQuery metastore for Iceberg tables: To create an Iceberg catalog to act as a metastore for Iceberg tables, available to external query engines
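As a rough illustration of the first option, the Python sketch below only assembles the `CREATE EXTERNAL TABLE` statement for a BigLake external table over Iceberg data that already sits in Cloud Storage; it does not call any Google Cloud API. The table, connection, and bucket names are hypothetical placeholders, and the DDL shape (`format = 'ICEBERG'` plus a `uris` list pointing at a single `*.metadata.json` file) reflects our reading of the BigQuery documentation, so check it against the current DDL reference before running it.

```python
def biglake_iceberg_external_table_ddl(
    table_id: str, connection_id: str, metadata_uri: str
) -> str:
    """Build CREATE EXTERNAL TABLE DDL for a read-only BigLake Iceberg table.

    All three arguments are hypothetical placeholders supplied by the caller:
      table_id:      "project.dataset.table"
      connection_id: "project.region.connection_id" (a Cloud resource connection)
      metadata_uri:  gs:// URI of an existing Iceberg *.metadata.json file
    """
    # Guard against pointing the table at something other than a metadata file.
    if not (metadata_uri.startswith("gs://") and metadata_uri.endswith(".metadata.json")):
        raise ValueError("metadata_uri must be a gs:// URI ending in .metadata.json")
    return (
        f"CREATE EXTERNAL TABLE `{table_id}`\n"
        f"WITH CONNECTION `{connection_id}`\n"
        "OPTIONS (\n"
        "  format = 'ICEBERG',\n"
        f"  uris = ['{metadata_uri}']\n"
        ");"
    )


if __name__ == "__main__":
    print(
        biglake_iceberg_external_table_ddl(
            "my-project.lakehouse.orders",
            "my-project.us.lakehouse-conn",
            "gs://my-bucket/orders/metadata/v3.metadata.json",
        )
    )
```

You could run the printed statement in the BigQuery console or with `bq query --use_legacy_sql=false`. Because the definition points at one specific metadata file, the table stays read-only from BigQuery’s side, and, as we understand it, it has to be repointed at a newer metadata file to pick up later snapshots written by other clients.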

Today, Iceberg tables can be read and written directly from Google BigQuery. They support key features such as schema evolution, automatic storage optimization, column-level security, and both batch and streaming use cases.
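For the second option, here is a minimal sketch, again only building SQL text in Python, of the DDL for an Iceberg table that BigQuery reads and writes itself. The columns, connection, and `storage_uri` are hypothetical, and the option names (`file_format`, `table_format`, `storage_uri`) follow the BigQuery DDL for Iceberg tables as we understand it from the preview documentation. The sketch clusters rather than partitions, since partitioning appears in the limitations listed below.

```python
from typing import Sequence, Tuple


def bigquery_iceberg_table_ddl(
    table_id: str,
    columns: Sequence[Tuple[str, str]],
    connection_id: str,
    storage_uri: str,
    cluster_by: Sequence[str] = (),
) -> str:
    """Build CREATE TABLE DDL for a BigQuery-managed Iceberg table.

    table_id, connection_id, and storage_uri are hypothetical placeholders;
    columns is a sequence of (column name, BigQuery type) pairs.
    """
    column_sql = ",\n".join(f"  {name} {sql_type}" for name, sql_type in columns)
    lines = [f"CREATE TABLE `{table_id}` (", column_sql, ")"]
    if cluster_by:
        # Clustering is used instead of partitioning, which the article lists as unsupported.
        lines.append("CLUSTER BY " + ", ".join(cluster_by))
    lines += [
        f"WITH CONNECTION `{connection_id}`",
        "OPTIONS (",
        "  file_format = 'PARQUET',",
        "  table_format = 'ICEBERG',",
        f"  storage_uri = '{storage_uri}'",
        ");",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(
        bigquery_iceberg_table_ddl(
            "my-project.lakehouse.events",
            [("event_id", "STRING"), ("user_id", "INT64"), ("event_ts", "TIMESTAMP")],
            "my-project.us.lakehouse-conn",
            "gs://my-bucket/events",
            cluster_by=["user_id"],
        )
    )
```

Once such a table exists, the idea is that you load and modify it with ordinary BigQuery statements (for example `INSERT INTO`), while the data files land as Parquet under `storage_uri`.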

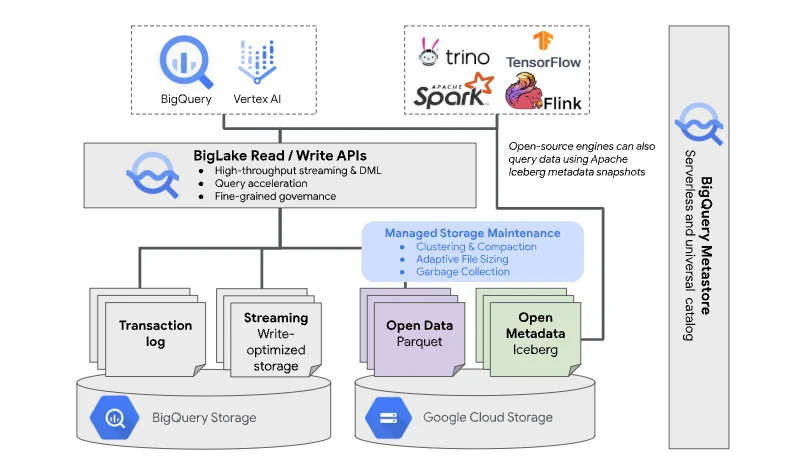

Managed table architecture in BigQuery for open file and table formats - Source: Google Cloud blog

Note that this feature is currently in preview and has a fair number of limitations, which are listed in the official documentation. Key limitations include:

- You cannot copy, clone, snapshot, or rename Iceberg tables.

- You cannot set up materialized views, partitioning, row-level security, time travel, CDC updates, etc.

- There’s no support for linked datasets.

Iceberg tables for BigQuery make sense when BigQuery is the engine you use to query and write your Iceberg data, relying on the Iceberg metadata that BigQuery manages.

Now, let’s look at the use case where you want to read and write from engines other than BigQuery but store the Iceberg metadata in BigQuery.

Apache Iceberg + BigQuery: Using BigQuery metastore for Iceberg tables

The BigQuery metastore acts as an Iceberg catalog and lets you store table data on any storage platform, including Google Cloud Storage and HDFS. The Iceberg metadata is stored in the BigQuery metastore, where BigQuery and a wide variety of Iceberg clients, such as Spark, Trino, Flink, and Presto, can access it.

This fully managed, serverless metastore acts as the single source of truth for all data sources within and outside Google Cloud. Its key feature is engine interoperability, which is central to building a data platform on open table formats and multiple query engines. For example, you can create a table with Spark or Trino and query it in BigQuery.
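To make the Spark side of this concrete, the sketch below builds the Spark properties that would register an Iceberg catalog backed by the BigQuery metastore. The catalog name, project, location, and warehouse bucket are hypothetical, and the `catalog-impl` class name and `gcp_project` / `gcp_location` keys are assumptions based on Google’s preview documentation for the BigQuery metastore catalog plugin; because the feature is pre-GA, verify them against the plugin version you deploy.

```python
from typing import Dict, List


def bigquery_metastore_spark_conf(
    catalog_name: str, project_id: str, location: str, warehouse_uri: str
) -> Dict[str, str]:
    """Spark properties for an Iceberg catalog backed by BigQuery metastore.

    The catalog-impl class and the gcp_project / gcp_location keys are
    assumptions taken from preview documentation, not a verified contract.
    """
    prefix = f"spark.sql.catalog.{catalog_name}"
    return {
        # Standard Iceberg-on-Spark catalog entry point.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        # Assumed class that delegates catalog operations to BigQuery metastore.
        f"{prefix}.catalog-impl": "org.apache.iceberg.gcp.bigquery.BigQueryMetastoreCatalog",
        f"{prefix}.gcp_project": project_id,
        f"{prefix}.gcp_location": location,
        # Where Spark writes new table data and Iceberg metadata files.
        f"{prefix}.warehouse": warehouse_uri,
    }


def spark_submit_conf_args(conf: Dict[str, str]) -> List[str]:
    """Flatten properties into repeated `--conf key=value` arguments for spark-submit."""
    args: List[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    conf = bigquery_metastore_spark_conf(
        "bqms", "my-project", "us-central1", "gs://my-bucket/iceberg-warehouse"
    )
    print(" ".join(spark_submit_conf_args(conf)))
```

With these properties set, and the Iceberg Spark runtime plus the BigQuery metastore catalog JAR on the classpath, a Spark SQL statement such as `CREATE TABLE bqms.my_dataset.orders (...) USING iceberg` should register the table in the metastore, where BigQuery can then query it. We deliberately leave JAR coordinates out because they have changed between preview releases.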

BigQuery metastore interoperability - Source: Google Cloud blog

Because this feature was only released in January 2025, it is still in preview (pre-GA) and has several limitations, which are listed in the official documentation.

The BigQuery metastore is a promising new feature that enables interoperability and reduces unnecessary data copying, movement, and format conversions. However, it does not meet organization-wide needs for cataloging, governance, lineage, and data quality. That’s where a metadata control plane comes into the picture.

Apache Iceberg in Google BigQuery: Why you need a metadata control plane for this integration

A metadata control plane sits horizontally across your organization’s data ecosystem and brings together metadata from all your data sources, including those that use Iceberg as a table format, to support governance, cataloging, and collaboration use cases.

Atlan is a metadata control plane that brings the metadata from all your data assets under a single roof, not just for cataloging but also for governing, profiling, analyzing, and making full use of them.

Atlan, with its partner and integration ecosystem, connects with all major cloud providers, data platforms, and query engines, among other data tools.

Atlan integrates with Apache Iceberg via an open-source Iceberg catalog called Apache Polaris. It also integrates all the metadata that resides within BigQuery.

Apache Iceberg in Google BigQuery: Summing up

This article gave you an overview of Apache Iceberg support in Google Cloud, with a focus on BigQuery. We covered the native integration as well as the alternatives for working with multiple query engines and table formats.

While Apache Iceberg is a very popular table format, organizations are using other table formats in conjunction with Iceberg, such as Apache Hudi, Apache Paimon, and Delta Lake.

There’s an ever-greater need to bring all these table formats, file formats, query engines, data platforms, orchestrators, and other data tools together.

The only sustainable way to do it is through metadata, and that’s what Atlan’s control plane does so well. To learn more, check out Atlan’s documentation.

FAQs about using Apache Iceberg with Google BigQuery

How can I access Apache Iceberg tables from external systems like Apache Spark or Trino when using BigQuery?

You can access BigQuery-managed Apache Iceberg tables from external systems in two main ways:

- BigQuery Storage API: This allows external engines to read and write data with strong consistency and enforces row and column-level permissions. BigQuery offers open-source connectors for engines like Spark, Trino, and Flink.

- Direct Access from Cloud Storage: External engines can read Iceberg table data stored in Google Cloud Storage directly. However, this method is read-only and provides eventually consistent access, as metadata updates happen asynchronously.
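An engine that reads straight from Cloud Storage first has to find the current Iceberg metadata file, and since metadata is updated asynchronously, the newest file it finds may lag behind the table; that is where the eventual consistency comes from. Iceberg normally tracks the current metadata file in a catalog (or in `version-hint.text` for Hadoop-style tables), so the sketch below is only a fallback heuristic under a stated assumption: metadata files are named either `vN.metadata.json` or `NNNNN-<uuid>.metadata.json`. Check what your writer actually produces.

```python
import re
from typing import Iterable, Optional

# Assumed naming patterns: "v12.metadata.json" or "00012-<uuid>.metadata.json".
_METADATA_NAME = re.compile(r"(?:^|/)(?:v(\d+)|(\d+)-[0-9a-fA-F-]+)\.metadata\.json$")


def latest_metadata_file(object_names: Iterable[str]) -> Optional[str]:
    """Return the object name with the highest metadata version, or None if none match."""
    best_version, best_name = -1, None
    for name in object_names:
        match = _METADATA_NAME.search(name)
        if match is None:
            continue  # data files, manifests, version hints, etc.
        # Compare numerically so that v10 sorts after v9.
        version = int(match.group(1) or match.group(2))
        if version > best_version:
            best_version, best_name = version, name
    return best_name


if __name__ == "__main__":
    listing = [
        "gs://my-bucket/events/metadata/v9.metadata.json",
        "gs://my-bucket/events/metadata/v10.metadata.json",
        "gs://my-bucket/events/data/part-00000.parquet",
    ]
    print(latest_metadata_file(listing))
```

In practice, the object listing might come from the `google-cloud-storage` client (for example `Client().list_blobs(bucket_name, prefix="events/metadata/")`), and the bucket and prefix here are hypothetical.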

Is BigQuery’s support for Apache Iceberg the only method for modifying data lake files within Google Cloud?

No, BigQuery’s support for Apache Iceberg is just one method. You can also modify data using Data Manipulation Language (DML) statements, and external engines can append data through the Storage Write API. However, it’s recommended to use BigQuery as the primary writer to avoid data consistency issues.

How does BigLake integrate with BigQuery and Apache Iceberg, and what are the use cases for each?

BigLake enhances security and performance for external tables in BigQuery, including those using Apache Iceberg. It allows read-only external tables on Iceberg data stored in Google Cloud Storage, while BigQuery’s native Iceberg tables support both reading and writing. Choosing between BigLake and BigQuery depends on your use case: use BigLake for secure, external table reads, and BigQuery for full data management capabilities.
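The choice described in this answer, extended to the BigQuery metastore option covered earlier, can be condensed into a rule of thumb. The helper below is hypothetical: the enum and function names are our own, it is not an official Google Cloud decision tree, and real choices also depend on the preview limitations this article mentions.

```python
from enum import Enum


class IcebergOption(Enum):
    BIGLAKE_EXTERNAL_TABLE = "BigLake external table for Apache Iceberg"
    BIGQUERY_ICEBERG_TABLE = "Iceberg table for BigQuery"
    BIGQUERY_METASTORE = "BigQuery metastore for Iceberg tables"


def choose_iceberg_option(
    bigquery_is_primary_writer: bool, other_engines_share_catalog: bool
) -> IcebergOption:
    """Hypothetical rule of thumb distilled from this article."""
    if bigquery_is_primary_writer:
        # BigQuery reads and writes the table and manages its storage.
        return IcebergOption.BIGQUERY_ICEBERG_TABLE
    if other_engines_share_catalog:
        # Spark, Trino, Flink, etc. create and write tables; BigQuery queries them via the shared catalog.
        return IcebergOption.BIGQUERY_METASTORE
    # Another Iceberg client already wrote the data; BigQuery only needs read-only access.
    return IcebergOption.BIGLAKE_EXTERNAL_TABLE


if __name__ == "__main__":
    for flags in [(True, False), (False, True), (False, False)]:
        print(flags, "->", choose_iceberg_option(*flags).value)
```

When BigQuery writes and other engines also need the data, the helper still returns the BigQuery-managed table, in line with the recommendation above to keep BigQuery as the primary writer.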

What are the limitations of using Apache Iceberg tables with BigQuery?

Some limitations include:

- No support for operations like copying, cloning, snapshotting, or renaming Iceberg tables.

- Features like materialized views, partitioning, row-level security, time travel, and Change Data Capture (CDC) updates are not available.

- Linked datasets are not supported.

These limitations should be considered when planning your data architecture and workflows.

Why is implementing a metadata control plane important when integrating Apache Iceberg with BigQuery?

A metadata control plane centralizes metadata management across different data sources, offering several benefits:

- Unified Governance: Ensures consistent data governance across platforms.

- Enhanced Collaboration: Centralizes metadata, improving team collaboration.

- Improved Data Quality: Helps monitor and maintain data quality.

A metadata control plane is crucial for managing complex data ecosystems effectively.

Working with Apache Iceberg in Google BigQuery: Related reads

- Apache Iceberg: All You Need to Know About This Open Table Format in 2025

- Apache Iceberg Data Catalog: What Are Your Options in 2025?

- Apache Iceberg Tables Data Governance: Here Are Your Options in 2025

- Apache Iceberg Alternatives: What Are Your Options for Lakehouse Architectures?

- Apache Parquet vs. Apache Iceberg: Understand Key Differences & Explore How They Work Together

- Apache Hudi vs. Apache Iceberg: 2025 Evaluation Guide on These Two Popular Open Table Formats

- Apache Iceberg vs. Delta Lake: A Practical Guide to Data Lakehouse Architecture

- Working with Apache Iceberg on Databricks: A Complete Guide for 2025

- Working with Apache Iceberg on AWS: A Complete Guide [2025]

- Working with Apache Iceberg and AWS Glue: A Complete Guide [2025]

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
