Working With Apache Iceberg on AWS: A Complete Guide [2025]

AWS first forayed into Iceberg-land a couple of years ago with the announcement of Apache Iceberg support in Athena. This integration followed several others, including Amazon EMR, AWS Glue, Amazon RDS, and Amazon DynamoDB.

Iceberg is now considered an important building block for data lakes and lakehouses on AWS, which is why its use is also assessed in the AWS Well-Architected Data Analytics Lens.

This article will briefly introduce how to use Apache Iceberg with AWS native services. It will also specifically discuss the various AWS services you can use as Iceberg catalogs. Toward the end, we’ll explore the need for a broader and horizontal metadata control plane across your data ecosystem.

Table of Contents #

- Apache Iceberg on AWS: An overview

- Apache Iceberg workloads with Amazon EMR and Athena

- Cataloging Apache Iceberg on AWS: What are your options?

- Apache Iceberg on AWS: Why you need a metadata control plane

- Apache Iceberg on AWS: Summing up

- Working with Apache Iceberg on AWS: Related reads

Apache Iceberg on AWS: An overview #

Apache Iceberg helps you create a data lakehouse (which AWS calls a modern data lake). Its key goal is to bring database and data warehouse features, such as ACID transactions, isolation, write concurrency, and advanced filtering, to data lakes.

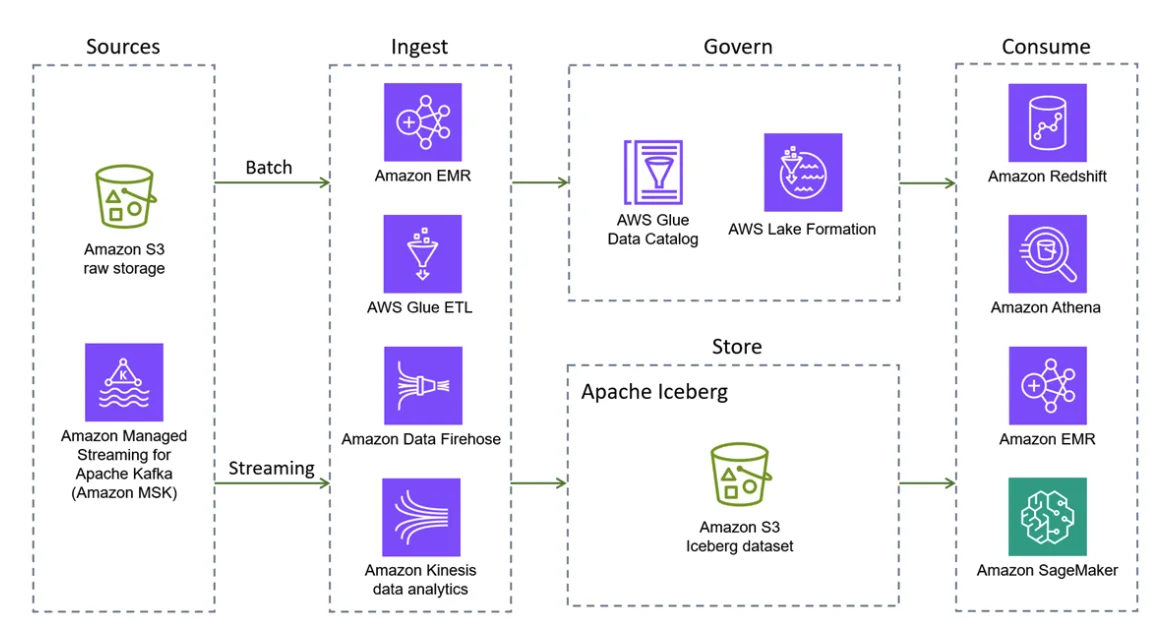

This goal is realized by using several AWS services:

- Amazon S3 serves as the backend object store for physical files in various Iceberg-supported file formats (such as Apache Parquet and Apache Avro)

- AWS Glue Data Catalog, DynamoDB, or Amazon RDS fulfil the technical cataloging needs

- Amazon EMR and Redshift act as the consumption layers

Apache Iceberg and AWS in action - Source: Amazon.

Currently, AWS provides native integrations with Apache Iceberg for 10 services – Amazon S3, EMR, Athena, Redshift, SageMaker AI, AWS Glue, AWS Glue Data Catalog, AWS Glue crawler, Amazon Data Firehose, and AWS Lake Formation.

For more on building a data lakehouse or a modern data lake on AWS using Apache Iceberg, you can use the AWS Well-Architected Framework along with the AWS Prescriptive Guidance documentation.

Apache Iceberg workloads with Amazon EMR and Athena #

There are various ways to store, catalog, and consume Iceberg tables in AWS. Amazon EMR is a managed big data platform (its name originally stood for Elastic MapReduce) that lets you interact with Iceberg tables through its Apache Hive, Apache Flink, and Apache Spark integrations. Native Iceberg support has been available since Amazon EMR release 6.5.0, and you can read and write Iceberg tables directly with Spark code in EMR notebooks.
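On EMR, Iceberg is typically switched on through a cluster configuration classification. The following is a minimal sketch of that configuration as a Python structure, assuming it is passed as the `Configurations` argument when the cluster is created (for example, through the EMR API or CLI). The helper name `iceberg_emr_configurations` is hypothetical and only used for illustration.

```python
import json
from typing import Dict, List


def iceberg_emr_configurations(enabled: bool = True) -> List[Dict[str, object]]:
    """Build the EMR configuration that turns on the bundled Iceberg libraries.

    Hypothetical helper. "iceberg-defaults" and "iceberg.enabled" are the
    classification and property used by EMR releases with Iceberg support.
    """
    return [
        {
            "Classification": "iceberg-defaults",
            # EMR expects property values as strings, so the flag is "true"/"false".
            "Properties": {"iceberg.enabled": str(enabled).lower()},
        }
    ]


if __name__ == "__main__":
    # Print JSON that could be saved to a file and supplied at cluster creation.
    print(json.dumps(iceberg_emr_configurations(), indent=2))
```

Keeping the configuration as plain data makes it easy to reuse the same structure across cluster definitions, whatever tool ends up creating the cluster.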

However, Amazon EMR doesn’t have an internal catalog; for that purpose, you can use the AWS Glue Data Catalog. If you don’t want to go the Hive, Flink, or Spark route, you can use Amazon Athena to read from and write to Iceberg tables with SQL.
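To make the Athena route concrete, here is a small sketch that assembles an Athena `CREATE TABLE` statement for an Iceberg table. The helper `athena_iceberg_ddl`, the database, table, and column names, and the S3 location are all illustrative assumptions. The part that marks the table as Iceberg is the `'table_type' = 'ICEBERG'` table property.

```python
from typing import Iterable, Optional, Sequence, Tuple


def athena_iceberg_ddl(
    database: str,
    table: str,
    columns: Iterable[Tuple[str, str]],
    location: str,
    partition_by: Optional[Sequence[str]] = None,
) -> str:
    """Return an Athena CREATE TABLE statement for an Iceberg table.

    Hypothetical helper: it only builds the SQL text and does not run it.
    """
    cols = ",\n  ".join(f"{name} {dtype}" for name, dtype in columns)
    ddl = f"CREATE TABLE {database}.{table} (\n  {cols}\n)"
    if partition_by:
        ddl += f"\nPARTITIONED BY ({', '.join(partition_by)})"
    ddl += f"\nLOCATION '{location}'"
    # This table property is what tells Athena to create an Iceberg table.
    ddl += "\nTBLPROPERTIES ('table_type' = 'ICEBERG')"
    return ddl


if __name__ == "__main__":
    print(
        athena_iceberg_ddl(
            database="my_data_lake",            # assumed database name
            table="orders",                     # assumed table name
            columns=[("order_id", "bigint"), ("order_date", "date")],
            location="s3://example-bucket/orders/",  # placeholder bucket
            partition_by=["order_date"],
        )
    )
```

The resulting statement can be run from the Athena query editor or submitted programmatically. After that, regular `INSERT INTO` and `SELECT` statements operate on the Iceberg table.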

Cataloging Apache Iceberg on AWS: What are your options? #

AWS has three different categories of services that can be used as technical catalogs for Iceberg:

- AWS Glue Data Catalog, a managed Hive-compatible catalog

- Amazon RDS, which works via JDBC

- Amazon DynamoDB, through Iceberg’s DynamoDB catalog implementation

Let’s explore each alternative further.

Apache Iceberg workloads on AWS Glue #

Among these three, AWS Glue Data Catalog is the most widely used because of its compatibility with other AWS services and general ease-of-use.

Iceberg support was introduced in AWS Glue 3.0, and AWS Glue 5.0 currently supports Iceberg 1.6.1. Using Iceberg in Glue is simple: enable the Iceberg framework by specifying Iceberg as the data lake format (the --datalake-formats job parameter set to iceberg). You can then write a table to S3 that gets registered in the Glue Data Catalog using the following command:

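```python
# Assumes `dataFrame` is an existing Spark DataFrame and `glue_catalog`
# is a Spark catalog backed by the AWS Glue Data Catalog.
dataFrame.writeTo("glue_catalog.my_data_lake.my_table") \
    .tableProperty("format-version", "2") \
    .create()
```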

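Here, glue_catalog is the name of the Spark catalog that points to the AWS Glue Data Catalog, and the same DataFrame API is available in Scala as well as Python. Together with the AWS CLI tools, this makes it quite easy to work with Apache Iceberg on AWS Glue.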
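The `glue_catalog` prefix used in the write call has to be defined in the Spark configuration before the job runs. Below is a minimal sketch of the settings that could define it, using class names from the open-source Iceberg project. The helper names, the catalog name default, and the warehouse path are assumptions for illustration, and `build_session` additionally assumes that pyspark and the Iceberg runtime jars are available.

```python
from typing import Dict


def glue_catalog_conf(warehouse: str, catalog_name: str = "glue_catalog") -> Dict[str, str]:
    """Return Spark settings that register an Iceberg catalog backed by Glue.

    Hypothetical helper: `warehouse` is the S3 prefix where table files go.
    """
    prefix = f"spark.sql.catalog.{catalog_name}"
    return {
        # Enables Iceberg's SQL extensions (MERGE INTO, CALL procedures, etc.).
        "spark.sql.extensions": "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        # Registers the catalog name with Spark.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        # Stores table pointers in the AWS Glue Data Catalog.
        f"{prefix}.catalog-impl": "org.apache.iceberg.aws.glue.GlueCatalog",
        # Reads and writes data files through Iceberg's S3 file IO.
        f"{prefix}.io-impl": "org.apache.iceberg.aws.s3.S3FileIO",
        f"{prefix}.warehouse": warehouse,
    }


def build_session(conf: Dict[str, str]):
    """Apply the settings to a SparkSession (needs pyspark and the Iceberg jars)."""
    # Imported lazily so that glue_catalog_conf works without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName("iceberg-glue-sketch")
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


if __name__ == "__main__":
    for key, value in glue_catalog_conf("s3://example-bucket/warehouse/").items():
        print(f"{key}={value}")
```

Because the catalog name is just a configuration key, you can register several catalogs side by side (for example, one per environment) and address each by its prefix in table identifiers.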

Amazon RDS as the JDBC catalog for Apache Iceberg #

Using a JDBC database as the catalog for Iceberg usually makes sense when you already run a relational database in RDS that holds many important tables. In that case, it is worth keeping all the metadata in one place: the Amazon RDS database.

The JDBC catalog manages Iceberg tables using a single table in a relational database. It requires a database and a JDBC connection to support serializable isolation. The catalog table behaves like a key-value store: the key identifies an Iceberg table (catalog name, namespace, and table name), and the value points to that table’s current metadata file, a JSON document with nested objects for the various metadata fields that Iceberg needs to maintain for a table.
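The key-value behavior can be illustrated with a small, self-contained sketch. It uses SQLite from the Python standard library as a stand-in for an RDS database, and the table and column names are modeled on the open-source Iceberg JDBC catalog but simplified. It is an illustration of the idea (an atomic pointer swap per table), not the catalog's actual implementation, and all function names are hypothetical.

```python
import sqlite3


def create_catalog(conn: sqlite3.Connection) -> None:
    # Simplified stand-in for the catalog table: one row per Iceberg table.
    conn.execute(
        """
        CREATE TABLE iceberg_tables (
            catalog_name      TEXT NOT NULL,
            table_namespace   TEXT NOT NULL,
            table_name        TEXT NOT NULL,
            metadata_location TEXT,
            PRIMARY KEY (catalog_name, table_namespace, table_name)
        )
        """
    )


def register_table(conn, catalog, namespace, name, metadata_location) -> None:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO iceberg_tables VALUES (?, ?, ?, ?)",
            (catalog, namespace, name, metadata_location),
        )


def commit(conn, catalog, namespace, name, expected, new) -> bool:
    """Point the table at `new` only if it still points at `expected`."""
    with conn:
        cur = conn.execute(
            "UPDATE iceberg_tables SET metadata_location = ? "
            "WHERE catalog_name = ? AND table_namespace = ? AND table_name = ? "
            "AND metadata_location = ?",
            (new, catalog, namespace, name, expected),
        )
    # Zero updated rows means another writer committed first.
    return cur.rowcount == 1


def current_location(conn, catalog, namespace, name) -> str:
    row = conn.execute(
        "SELECT metadata_location FROM iceberg_tables "
        "WHERE catalog_name = ? AND table_namespace = ? AND table_name = ?",
        (catalog, namespace, name),
    ).fetchone()
    return row[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_catalog(conn)
    key = ("rds_catalog", "my_data_lake", "my_table")  # placeholder names
    register_table(conn, *key, "s3://example-bucket/t/metadata/v1.metadata.json")
    print(commit(conn, *key, "s3://example-bucket/t/metadata/v1.metadata.json",
                 "s3://example-bucket/t/metadata/v2.metadata.json"))
    print(current_location(conn, *key))
```

The conditional `UPDATE` is what lets two concurrent writers be resolved safely: the second writer sees that its expected pointer is stale and has to retry against the newer metadata.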

DynamoDB as the catalog and lock manager for Apache Iceberg #

Another AWS backend that you can use as an Iceberg catalog is DynamoDB. This is suggested especially when your write workloads can result in hot partitions. The DynamoDB catalog design is simple. It uses a single table to store all the Iceberg metadata, as shown below.

| Column name | Key type | Data type | Description |
|---|---|---|---|
| identifier | Partition key | String | Table name |
| namespace | Sort key | String | Namespace name, which is also used as a GSI (Global Secondary Index) partition key |
| v | NA | String | Row version, which is for optimistic locking |
| updated_at | NA | Number | Last updated timestamp (in ms) |
| created_at | NA | Number | Table creation timestamp (in ms) |
| p.property_key | NA | String | |
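To show how the `v` column supports optimistic locking, here is a small in-memory sketch of the single-table layout described above. It does not call DynamoDB. The class `CatalogTable`, the `ConditionFailed` exception, and the `metadata_location` property are illustrative assumptions that mimic the effect of a conditional write.

```python
import time
import uuid
from typing import Dict


class ConditionFailed(Exception):
    """Raised when the stored row version differs from the expected one."""


class CatalogTable:
    """In-memory stand-in for the single catalog table (not the AWS API)."""

    def __init__(self) -> None:
        self._rows: Dict[tuple, dict] = {}  # (identifier, namespace) -> row

    def create(self, identifier: str, namespace: str, properties: Dict[str, str]) -> dict:
        key = (identifier, namespace)
        if key in self._rows:
            raise ConditionFailed(f"{key} already exists")
        now = int(time.time() * 1000)  # timestamps are stored in milliseconds
        row = {
            "identifier": identifier,
            "namespace": namespace,
            "v": str(uuid.uuid4()),
            "created_at": now,
            "updated_at": now,
        }
        # Properties are stored as extra columns with a "p." prefix.
        row.update({f"p.{k}": v for k, v in properties.items()})
        self._rows[key] = row
        return dict(row)

    def get(self, identifier: str, namespace: str) -> dict:
        return dict(self._rows[(identifier, namespace)])

    def update(self, identifier: str, namespace: str, expected_v: str,
               properties: Dict[str, str]) -> dict:
        row = self._rows[(identifier, namespace)]
        # Optimistic lock: only write if nobody changed the row since we read it.
        if row["v"] != expected_v:
            raise ConditionFailed("row was modified by another writer")
        row.update({f"p.{k}": v for k, v in properties.items()})
        row["v"] = str(uuid.uuid4())
        row["updated_at"] = int(time.time() * 1000)
        return dict(row)


if __name__ == "__main__":
    table = CatalogTable()
    first = table.create("my_table", "my_data_lake", {"metadata_location": "v1.json"})
    table.update("my_table", "my_data_lake", first["v"], {"metadata_location": "v2.json"})
    try:
        # Reusing the old version simulates a writer holding stale state.
        table.update("my_table", "my_data_lake", first["v"], {"metadata_location": "v3.json"})
    except ConditionFailed as err:
        print(f"stale write rejected: {err}")
```

A writer that hits the rejected path would normally re-read the row, rebase its changes on the newer metadata, and try again with the fresh `v` value.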

DynamoDB can also act in another capacity with Iceberg, i.e., as a lock manager. DynamoDB is used widely as a lock manager for other use cases, most popularly with tools like Terraform for managing locks on Terraform state files. It can perform a similar function with Iceberg.
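As a rough sketch of how the lock manager could be wired in, the snippet below builds the catalog properties that select Iceberg's DynamoDB lock manager and renders them as `spark-submit` flags. The property keys and class name come from the open-source Iceberg AWS module. The helper names, the catalog name, and the lock table name are assumptions for illustration.

```python
from typing import Dict, List


def dynamodb_lock_conf(catalog_name: str, lock_table: str) -> Dict[str, str]:
    """Return Spark settings that enable DynamoDB-based locking for a catalog.

    Hypothetical helper: `lock_table` is the DynamoDB table that holds locks.
    """
    prefix = f"spark.sql.catalog.{catalog_name}"
    return {
        f"{prefix}.lock-impl": "org.apache.iceberg.aws.dynamodb.DynamoDbLockManager",
        f"{prefix}.lock.table": lock_table,
    }


def to_spark_submit_args(conf: Dict[str, str]) -> List[str]:
    """Render settings as repeated `--conf key=value` arguments."""
    args: List[str] = []
    for key, value in conf.items():
        args.extend(["--conf", f"{key}={value}"])
    return args


if __name__ == "__main__":
    conf = dynamodb_lock_conf("glue_catalog", "my_iceberg_locks")  # placeholder names
    print(" ".join(to_spark_submit_args(conf)))
```

These settings are added on top of an existing catalog definition, so the lock table is independent of whichever service stores the table metadata itself.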

Whether it is data storage, processing, transformation, or cataloging, AWS provides various integration points with Apache Iceberg, making it easier to build data lakehouses on AWS. However, despite offering many Iceberg catalog options, AWS doesn’t provide a single place to manage all your metadata, whether it lives in AWS or elsewhere, in the Iceberg table format or not. That’s where the need for a metadata control plane arises.

Apache Iceberg on AWS: Why you need a metadata control plane #

While the flexibility to choose your catalogs for Iceberg is helpful for data teams, it doesn’t give you the flexibility of bringing any data assets into the same catalog – at least not without putting in a lot of custom development.

In reality, one data source, file format, or table format is often insufficient to serve an enterprise’s broad use cases. This is why a control plane for your whole data stack is needed, where you can plug in all your data systems, including Iceberg, and manage your complete data estate from one place.

Atlan is a metadata control plane for your data, metadata, and AI assets. This makes it easier for you to bring in features like data governance, lineage, business glossary, data policies, and contracts to your stack.

Apache Iceberg on AWS: Summing up #

AWS has a wide landscape of data services for various use cases. Meanwhile, Apache Iceberg has been a relatively recent entrant into the data ecosystem. Since the adoption of open-source tools and open standards across industries is growing, AWS has integrated with Apache Iceberg – giving you the ability to create a modern data lake or a data lakehouse.

This article explains the various ways you can work with Apache Iceberg in AWS using some of the native services and capabilities, including, but not limited to, AWS Glue, Amazon EMR, Amazon RDS, and Amazon Athena.

The article examines the AWS-native cataloging options – AWS Glue Data Catalog, Amazon RDS, and Amazon DynamoDB. It also establishes why a native Iceberg catalog isn’t enough and why a metadata control plane is needed for the business to get the most out of its data.

For more information on the Atlan + AWS integration or the Atlan + Iceberg integration, please refer to the official documentation.

Working with Apache Iceberg on AWS: Related reads #

- Apache Iceberg: All You Need to Know About This Open Table Format in 2025

- Apache Iceberg Data Catalog: What Are Your Options in 2025?

- Apache Iceberg Tables Data Governance: Here Are Your Options in 2025

- Apache Iceberg Alternatives: What Are Your Options for Lakehouse Architectures?

- Apache Parquet vs. Apache Iceberg: Understand Key Differences & Explore How They Work Together

- Apache Hudi vs. Apache Iceberg: 2025 Evaluation Guide on These Two Popular Open Table Formats

- Apache Iceberg vs. Delta Lake: A Practical Guide to Data Lakehouse Architecture

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
