Apache Paimon vs. Apache Iceberg: 2025 Evaluation Guide on These Two Popular Open Table Formats


Apache Paimon and Apache Iceberg are two of the four main table formats that data engineers consider when architecting a data lakehouse. The other two are Apache Hudi and Delta Lake.

Apache Iceberg is a widely used table format that lets organizations build large-scale data lakehouses, whereas Apache Paimon is a more recent entrant. Both formats address the limitations of the legacy Apache Hive table format, each in its own way.


In this article, we’ll compare Apache Paimon vs. Apache Iceberg on the core technical specifications, capabilities, and interoperability. We’ll also explore the need for a metadata control plane for your data estate, irrespective of the file or table formats used to store and manage them.

Table of Contents

- Apache Paimon vs. Apache Iceberg: An overview

- Apache Paimon vs. Apache Iceberg: How do their features compare?

- Apache Paimon and Apache Iceberg ecosystems: The need for a metadata control plane

- Apache Paimon vs. Apache Iceberg: Wrapping up

- FAQs about Apache Paimon and Apache Iceberg

- Apache Paimon vs. Apache Iceberg: Related reads

Apache Paimon vs. Apache Iceberg: An overview

Apache Paimon and Apache Iceberg address Apache Hive’s shortcomings but cater to distinct workloads. While Iceberg was built more for large-scale batch-based read and write workloads, Paimon was designed with streaming data lakehouses in mind.

To conduct an in-depth comparison of Apache Paimon vs. Apache Iceberg, let’s begin by exploring the nuances of each tool, starting with Iceberg.

Large scale data lakehouses with Apache Iceberg

Before Iceberg, the dominant metadata organization engine and metastore was Apache Hive, which suffered from slow query planning and expensive directory listing operations. To address these issues, Netflix developed Iceberg and later donated it to the Apache Software Foundation.

Because of its intensive read and write workloads, Netflix needed to support transactions, making the data platform reliable and trustworthy. Apache Hive couldn’t provide write consistency, atomicity, and transaction isolation, which paved the way for Iceberg. Netflix created Iceberg to handle transactions with atomic commits and snapshot isolation.
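To make atomic commits and snapshot isolation more concrete, here is a minimal Python sketch of the general idea: writers prepare a new immutable snapshot and publish it with a compare-and-swap on a single "current snapshot" pointer, while readers keep using the snapshot they started with. The names `ToyCatalog`, `Snapshot`, and `CommitConflict` are hypothetical and exist only for illustration; this is not Iceberg's actual implementation or API.

```python
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Immutable view of a table: an id plus the data files visible in it."""
    snapshot_id: int
    files: frozenset


class CommitConflict(Exception):
    """Raised when another writer committed first."""


class ToyCatalog:
    """Hypothetical catalog that holds one pointer to the current snapshot."""

    def __init__(self):
        self._lock = threading.Lock()
        self._current = Snapshot(0, frozenset())

    def current(self):
        return self._current

    def commit(self, base, added_files):
        # Compare-and-swap: succeed only if nobody committed since `base`.
        with self._lock:
            if self._current.snapshot_id != base.snapshot_id:
                raise CommitConflict(
                    "table changed since snapshot %d" % base.snapshot_id
                )
            self._current = Snapshot(
                base.snapshot_id + 1, base.files | frozenset(added_files)
            )
            return self._current


def demo():
    catalog = ToyCatalog()
    reader_view = catalog.current()    # a reader pins snapshot 0
    writer_a_base = catalog.current()  # two writers start from the same base
    writer_b_base = catalog.current()

    catalog.commit(writer_a_base, {"a.parquet"})
    try:
        catalog.commit(writer_b_base, {"b.parquet"})
        conflict = False
    except CommitConflict:
        conflict = True
        # The losing writer retries on top of the latest snapshot.
        catalog.commit(catalog.current(), {"b.parquet"})

    return reader_view, catalog.current(), conflict
```

In this sketch the reader's pinned snapshot never changes, and the second writer either sees the whole of the first writer's commit or none of it, which is the property the paragraph above describes.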

Iceberg was also designed to avoid full rewrites of partitions when data changes. In addition, it uses partition-based efficiencies, such as automatic partition pruning and hidden partitioning, to speed up both read and write workloads.
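As a rough illustration of hidden partitioning and partition pruning, the Python sketch below derives a partition value from a timestamp column with a transform, so writers and readers never handle a separate partition column, and skips partitions that cannot match a time-range filter. `ToyTable`, `day_transform`, and the `event_ts` column are hypothetical names chosen for this example; the sketch only mirrors the concept and is not how Iceberg stores or plans data.

```python
from collections import defaultdict
from datetime import datetime


def day_transform(ts):
    """Partition transform: derive the partition value from a timestamp."""
    return ts.date()


class ToyTable:
    """Toy table that partitions rows by day(event_ts) behind the scenes."""

    def __init__(self):
        self.partitions = defaultdict(list)

    def append(self, row):
        # The writer supplies only event_ts; the partition is derived.
        self.partitions[day_transform(row["event_ts"])].append(row)

    def scan(self, start, end):
        """Return rows with start <= event_ts < end and partitions read."""
        first_day, last_day = day_transform(start), day_transform(end)
        rows, partitions_read = [], 0
        for day, part_rows in self.partitions.items():
            if day < first_day or day > last_day:
                continue  # pruned: this partition cannot contain matches
            partitions_read += 1
            rows.extend(r for r in part_rows if start <= r["event_ts"] < end)
        return rows, partitions_read


def demo():
    table = ToyTable()
    for day in (1, 2, 3):
        table.append({"id": day, "event_ts": datetime(2025, 1, day, 12, 0)})
    # The query filters on the timestamp column, not on a partition column.
    return table.scan(datetime(2025, 1, 2, 0, 0), datetime(2025, 1, 2, 23, 59))
```

Here the query touches one of the three daily partitions even though it never mentions a partition column, which is the efficiency the paragraph above refers to.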

Iceberg also supports other key query engines, such as Apache Spark, Apache Flink, Trino, and Presto, making it a very compelling alternative to Apache Hive.

Iceberg was designed with Netflix’s workloads in mind, which were primarily related to large-scale data ingestion, processing, and ETL. While Iceberg could handle streaming workloads, it wasn’t built to solve that problem. This led to more innovation at Alibaba Cloud and the creation of Paimon.

Real-time streaming data lakehouses with Paimon

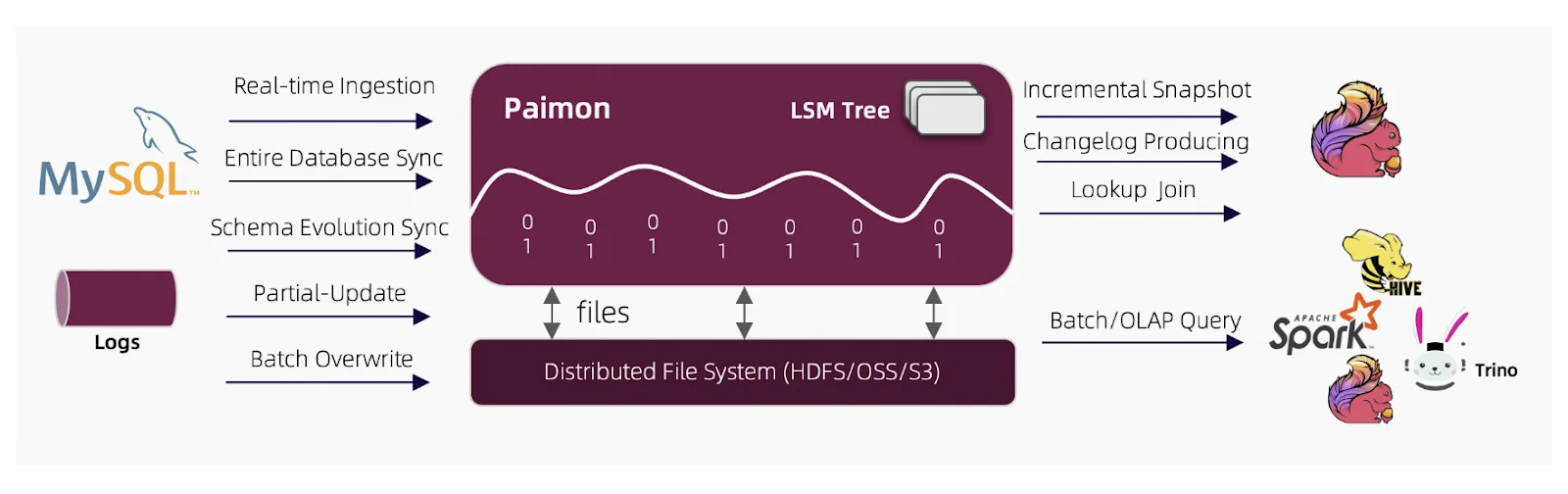

Permalink to “Real-time streaming data lakehouses with Paimon”Paimon was first released as Flink Table Store. Its main purpose is to augment Parquet and ORC file formats with LSM (Log Structured Merge) architecture. This architecture helps deal with streaming data more efficiently.

Whether or not you partition your tables, this architecture brings efficiency to real-time data workloads.

Apache Paimon architecture - Source: Apache Paimon documentation.
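The LSM idea mentioned in this section can be sketched in a few lines of Python. Writes land in an in-memory buffer, the buffer is flushed as an immutable sorted run, reads merge all runs so that the newest version of each key wins, and compaction folds the runs into one. `ToyLsmTable` and its parameters are hypothetical and simplified; Paimon's real implementation (file layout, levels, merge engines) is considerably more involved.

```python
import heapq


class ToyLsmTable:
    """Toy primary-key table built on the LSM pattern."""

    def __init__(self, memtable_limit=2):
        self.memtable_limit = memtable_limit
        self.memtable = {}     # key -> (sequence number, value)
        self.sorted_runs = []  # each run: list of (key, seq, value), sorted
        self._seq = 0

    def upsert(self, key, value):
        # Writes are cheap: update the in-memory buffer only.
        self._seq += 1
        self.memtable[key] = (self._seq, value)
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        """Persist the buffer as one immutable sorted run."""
        if self.memtable:
            self.sorted_runs.append(self._as_run(self.memtable))
            self.memtable = {}

    def read(self):
        """Merge-on-read: combine all runs plus the buffer."""
        runs = list(self.sorted_runs)
        if self.memtable:
            runs.append(self._as_run(self.memtable))
        return {key: value for key, _, value in self._merge(runs)}

    def compact(self):
        """Fold all sorted runs into a single run."""
        if self.sorted_runs:
            self.sorted_runs = [self._merge(self.sorted_runs)]

    @staticmethod
    def _as_run(memtable):
        return sorted((k, s, v) for k, (s, v) in memtable.items())

    @staticmethod
    def _merge(runs):
        latest = {}
        # heapq.merge yields entries ordered by (key, seq), so for each key
        # the entry with the highest sequence number is seen last and wins.
        for key, seq, value in heapq.merge(*runs):
            latest[key] = (seq, value)
        return [(k, s, v) for k, (s, v) in latest.items()]


def demo():
    table = ToyLsmTable(memtable_limit=2)
    table.upsert("u1", "a")
    table.upsert("u2", "b")   # buffer full: flushed as the first run
    table.upsert("u1", "c")   # newer version of u1, still in the buffer
    before_compaction = table.read()
    table.upsert("u3", "d")   # buffer full again: flushed as a second run
    table.compact()
    return before_compaction, table.read(), len(table.sorted_runs)
```

The design trade-off is that updates never rewrite existing files at write time; the cost of reconciling versions is deferred to reads and to background compaction.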

Because many organizations already use Iceberg, Paimon also supports the Iceberg specification and can generate Iceberg-compatible metadata. This avoids unnecessary copying of data: Iceberg readers can work with Paimon tables through automatic metadata conversion under the hood.

Other projects, like Apache XTable and Delta Lake UniForm, also attempt to solve the problem of table format interoperability.

Paimon’s key features include real-time updates, large-scale append-based data processing, petabyte-scale streaming data lakes with ACID transactions, time travel, and schema evolution.

Apache Paimon vs. Apache Iceberg: How do their features compare?

Apache Paimon and Iceberg were designed to be foundational elements of a data lakehouse. However, they solve slightly different problems.

Iceberg is a more general-purpose data lakehouse table format that works with multiple file formats, while Paimon is aimed more at streaming and real-time data lakehouses.

The following table compares both tools regarding some key aspects of table formats.

| Comparing on | Apache Paimon | Apache Iceberg |
|---|---|---|
| Function in a data lakehouse | Designed for supporting real-time and streaming read and write queries on a data lakehouse | Designed for more general purpose workloads with high write concurrency, ACID transactions, and snapshot isolation |
| Support for transactions | Employs snapshot isolation for streaming data architecture | Employs snapshot isolation to enable time travel, rollback, and write concurrency |
| Query planning and optimization | Supports primary keys, indexes, and partition pruning | Uses partition pruning and hidden partitioning to improve query performance |
| Partition evolution | Has some partition evolution features; works with primary key based partitioning | Supports all partition evolution features |
| Schema evolution | Supports schema changes such as adding or removing columns, although to a limited extent | Supports most schema evolution features, which include adding, removing, renaming, and reordering columns |
| Point-in-time querying with time travel | Offers point-in-time queries but is optimized for real-time reads and writes | Designed for giving you a full view of the state of data at any given time with snapshot-based time travel |
| Write performance | Designed for enabling real-time ingestion, updates and merges at scale | Supports batch-based workloads to write large amounts of data in a single go |
| When is it best to use? | When you want to build a high-volume real-time streaming data lakehouse with both analytical and transactional workloads | When you want to support very large analytical workloads with transactional guarantees, concurrent writers, and time travel |

Overall, Apache Paimon is slightly better suited for the real-time and streaming data lakehouse use case. Iceberg works more generally, especially catering to batch-based analytical workloads at scale.

Both table formats are designed to support high write concurrency at scale with ACID guarantees.

Paimon also natively supports Iceberg metadata, eliminating the need for a third-party table format metadata conversion tool like Apache XTable. Iceberg, however, doesn’t natively support Paimon metadata yet.

While both Paimon and Iceberg maintain their metadata in their catalogs, it is important to understand that those internal catalogs can’t help you with business use cases involving data governance, lineage, quality, observability, etc.

For that, there’s a real need for a metadata control plane, and that’s where a tool like Atlan makes a difference.

Apache Paimon and Apache Iceberg ecosystems: The need for a metadata control plane

Individual catalogs of various databases, storage engines, query engines, and table formats work well for their internal purposes but don’t provide real business value unless they are available via a tool like Atlan, which acts as your organization’s control plane for metadata.

A control plane for metadata (like Atlan) sits horizontally across all your data tools and technologies, effectively stitching them together via cataloged metadata. As a result, data and business teams can find, trust, and govern AI-ready data.


Atlan integrates directly with one of Iceberg’s catalog implementations: Apache Polaris. This lets you bring all of your Iceberg assets into Atlan and leverage features, such as business glossary, data lineage, centralized data policies, and active data governance.

As Paimon supports the generation of Iceberg compatible metadata, Paimon tables can be directly consumed by Iceberg readers. So, you can enable a read-only integration for Paimon with Atlan via Apache Polaris or any other Iceberg catalog that integrates with Atlan, such as AWS Glue Data Catalog.

Apache Paimon vs. Apache Iceberg: Wrapping up

Apache Paimon and Iceberg were created to support open data lakehouse architecture. Paimon caters more to real-time and streaming lakehouses, while Iceberg is built for general-purpose large-scale data lakehouses.

Both table formats provide a solid foundation for managing the data in the cloud object store, but that still leaves the need for a centralized tool to manage all your data assets in one place for better overall governance – a control plane of metadata.

To fill that gap, you can use Atlan’s various integrations with storage engines supporting both Paimon and Iceberg. For more information on these integrations, check out the Atlan + Polaris integration or head over to Atlan’s official documentation for connectors.

FAQs about Apache Paimon and Apache Iceberg

What are the primary differences between Apache Paimon and Apache Iceberg?

Apache Paimon and Apache Iceberg are both open-source table formats designed for data lakehouses, but they cater to different use cases. Iceberg is optimized for large-scale, batch-oriented analytical workloads, supporting features like schema evolution and time travel. In contrast, Paimon is tailored for real-time streaming data, offering low-latency ingestion and processing, and integrates seamlessly with Apache Flink and Spark.

Which data processing engines are compatible with Apache Paimon and Apache Iceberg?

Apache Iceberg is compatible with multiple query engines, including Apache Spark, Apache Flink, Trino, and Presto. This broad compatibility allows organizations to leverage Iceberg across various data processing platforms. On the other hand, Apache Paimon was initially developed with a focus on real-time streaming and has native integrations with Apache Flink and Spark, making it particularly suitable for streaming data workloads.

How do Apache Paimon and Apache Iceberg handle schema evolution?

Schema evolution is a critical feature for managing changing data structures over time. Apache Iceberg supports comprehensive schema evolution, including adding, removing, renaming, and reordering columns. This flexibility is essential for adapting to evolving data requirements. In contrast, Apache Paimon offers limited schema evolution capabilities, primarily supporting the addition or removal of columns, which may be a consideration depending on your project’s needs.
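One way to see why renames and re-added columns can be safe is to track columns by a stable numeric ID rather than by name, which is the approach the Iceberg specification takes. The Python sketch below is a simplified illustration under that assumption; `ToySchema` and its methods are hypothetical names, not part of any Iceberg or Paimon API.

```python
class ToySchema:
    """Toy schema that tracks columns by stable ID instead of by name."""

    def __init__(self):
        self._next_id = 1
        self.columns = {}  # current column name -> stable field ID

    def add_column(self, name):
        # Every new column gets a fresh ID, even if the name was used before.
        self.columns[name] = self._next_id
        self._next_id += 1

    def rename_column(self, old, new):
        # Only the name changes; the ID (and so the stored data) is untouched.
        self.columns[new] = self.columns.pop(old)

    def drop_column(self, name):
        del self.columns[name]

    def project(self, stored_row):
        """Read a stored row (field ID -> value) under the current schema."""
        return {name: stored_row.get(fid) for name, fid in self.columns.items()}


def demo():
    schema = ToySchema()
    schema.add_column("id")      # field ID 1
    schema.add_column("email")   # field ID 2
    old_row = {1: 7, 2: "a@example.com"}  # written under the first schema

    schema.rename_column("email", "contact_email")
    schema.drop_column("id")
    schema.add_column("id")      # same name, new field ID 3

    return schema.project(old_row)
```

In this sketch, data written before the rename is still readable under the new name, and a dropped-then-re-added column does not resurrect old values because it carries a new ID.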

When should I choose Apache Paimon over Apache Iceberg for my data lakehouse?

The choice between Paimon and Iceberg depends on your specific workload requirements:

- Choose Apache Paimon if you require real-time streaming data processing with low-latency ingestion and updates. Paimon is designed to efficiently handle streaming workloads and integrates closely with Flink and Spark.

- Choose Apache Iceberg if your focus is on large-scale analytical workloads that benefit from features like schema evolution, time travel, and compatibility with multiple query engines. Iceberg is well-suited for batch processing and analytical queries.

How do Apache Paimon and Apache Iceberg support partition evolution and time travel?

Partition evolution and time travel are important for managing data versions and historical queries. Apache Iceberg offers robust support for partition evolution, allowing changes in partitioning strategies without data rewrites, and provides time travel capabilities through snapshot-based querying. Apache Paimon has some partition evolution features and supports time travel, but its capabilities in these areas are not as extensive as those of Iceberg.
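Snapshot-based time travel can be pictured as a lookup in an ordered log of commits: a query "as of" a timestamp resolves to the latest snapshot committed at or before that time. The following Python sketch shows that lookup with a binary search; `SnapshotLog` and the millisecond timestamps are assumptions made for illustration and do not reflect either format's metadata layout.

```python
import bisect


class SnapshotLog:
    """Toy append-only log of table snapshots, ordered by commit time."""

    def __init__(self):
        self._timestamps = []  # commit times in milliseconds, ascending
        self._snapshots = []   # set of visible data files per commit

    def commit(self, ts_ms, files):
        if self._timestamps and ts_ms <= self._timestamps[-1]:
            raise ValueError("commits must have increasing timestamps")
        self._timestamps.append(ts_ms)
        self._snapshots.append(frozenset(files))

    def as_of(self, ts_ms):
        """Return the files visible in the latest snapshot at or before ts_ms."""
        idx = bisect.bisect_right(self._timestamps, ts_ms) - 1
        if idx < 0:
            raise LookupError("no snapshot at or before %d" % ts_ms)
        return self._snapshots[idx]


def demo():
    log = SnapshotLog()
    log.commit(1000, {"f1"})
    log.commit(2000, {"f1", "f2"})
    log.commit(3000, {"f2", "f3"})  # f1 was replaced by f3
    return log
```

Because older snapshots are kept rather than overwritten, a query at timestamp 2500 in this sketch still sees `f1` and `f2`, even though the current table no longer references `f1`.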

Apache Paimon vs. Apache Iceberg: Related reads

- Apache Iceberg: All You Need to Know About This Open Table Format in 2025

- Apache Iceberg Data Catalog: What Are Your Options in 2025?

- Apache Iceberg Tables Data Governance: Here Are Your Options in 2025

- Apache Iceberg Alternatives: What Are Your Options for Lakehouse Architectures?

- Apache Parquet vs. Apache Iceberg: Understand Key Differences & Explore How They Work Together

- Apache Hudi vs. Apache Iceberg: 2025 Evaluation Guide on These Two Popular Open Table Formats

- Apache Iceberg vs. Delta Lake: A Practical Guide to Data Lakehouse Architecture

- Working with Apache Iceberg on Databricks: A Complete Guide for 2025

- Working with Apache Iceberg on AWS: A Complete Guide [2025]

- Working with Apache Iceberg and AWS Glue: A Complete Guide [2025]

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
