Apache Parquet vs. Apache Iceberg: Understand Key Differences & Explore How They Work Together


Apache Parquet and Apache Iceberg are important components of modern data lake and data lakehouse architectures. They're often compared and contrasted; however, it's crucial to note that they solve very different problems and work at very different levels.

Apache Parquet is a file format optimized for efficient data storage and retrieval, while Apache Iceberg is a table format that can organize and manage Parquet files with schema evolution, ACID transactions, and multi-engine support in data lakehouses.


However, the two overlap enough to confuse someone new to the lakehouse ecosystem, prompting questions like:

- Which of the two is more suitable for a data lakehouse?

- Can Iceberg replace an existing Parquet-based setup?

- Are there scenarios where Parquet is better than Iceberg?

- Can Parquet and Iceberg both work together?

These questions arise from the complexity of data lakehouse architectures and file and table formats. This article first provides a very quick refresher on lakehouse architecture and clarifies the difference between file and table formats. After that, it compares Apache Parquet vs. Apache Iceberg in detail before finally discussing how Parquet and Iceberg work together.

Understanding the Parquet vs. Iceberg distinction is just the start — Atlan’s Apache Iceberg guide explains what the table layer adds, and how Iceberg compares to Delta Lake, Hudi, and Paimon.

Table of Contents

- Apache Parquet vs. Apache Iceberg: An overview

- Apache Parquet vs. Apache Iceberg: Key differences

- Apache Parquet and Apache Iceberg: How do they work together?

- Apache Parquet + Apache Iceberg environments: The need for a metadata control plane

- Apache Parquet vs. Apache Iceberg: Wrapping up

- Apache Parquet vs. Apache Iceberg: Related reads

Apache Parquet vs. Apache Iceberg: An overview

Apache Parquet and Apache Iceberg both help modern lakehouses move past the limitations of Apache Hive-era data lakes, but they cater to distinct needs. Parquet is a columnar file format optimized for efficient storage and read-heavy analytical queries, while Iceberg is a table format designed for large-scale analytics, adding advanced schema evolution, ACID compliance, and multi-engine compatibility on top of data files such as Parquet.

To compare Apache Parquet and Apache Iceberg in depth, it helps to explore the history of file and table formats. That evolution was driven by the need for scalable, efficient data storage and file management, and it led to the emergence of Parquet and Iceberg.

A brief history of file and table formats in lakehouses

After traditional data warehouses, data lakes looked promising for a few years. However, organizations soon ran into scalability and data hygiene issues as lakes became dumping grounds for data and, eventually, swamps.

The data lakehouse pattern was conceived to bring a data warehouse’s structure, reliability, and governance to data lakes. This meant bringing features like transactions, schema evolution, data dictionary, indexing, and partitioning. Doing so meant that you didn’t have to build a separate data warehouse on top of your data lake to serve reporting and BI workloads.

This move towards data lakehouses drove the development of newer formats that departed from the Apache Hive and Hadoop ecosystems, which struggled with transactions and were slowed down by directory listing, among other limitations.

File formats like Parquet and ORC were developed for columnar data consumption. The organization and management of Parquet, ORC, and other files were mostly left to a query engine such as Apache Spark or to a separate metadata catalog such as the Apache Hive Metastore.
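To make "columnar data consumption" concrete, here is a toy Python sketch. It is not Parquet's real on-disk layout (Parquet adds row groups, pages, encodings, and compression); it only shows that an aggregate over one column can read that column alone. The sample data and the `to_columnar` helper are hypothetical.

```python
# Toy illustration (not Parquet's actual encoding) of row vs. columnar layout.

rows = [
    {"order_id": 1, "country": "IN", "amount": 120.0},
    {"order_id": 2, "country": "US", "amount": 75.5},
    {"order_id": 3, "country": "IN", "amount": 42.0},
]

def to_columnar(records):
    """Pivot a list of row dicts into a dict of per-column value lists."""
    columns = {name: [] for name in records[0]}
    for record in records:
        for name, value in record.items():
            columns[name].append(value)
    return columns

columns = to_columnar(rows)

# Row layout: summing "amount" visits every full record.
row_total = sum(record["amount"] for record in rows)

# Columnar layout: the same aggregate reads one list and ignores the rest.
column_total = sum(columns["amount"])

print(columns["country"])         # ['IN', 'US', 'IN']
print(row_total == column_total)  # True
```

Storing each column's values together is also what makes per-column compression effective, since similar values end up next to each other.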

That’s where table formats like Apache Iceberg came into the picture. They solved the inefficiencies of file management and organization and provided a range of features that included transaction support, point-in-time querying, and version control.

Read more → Apache Iceberg at a glance

In essence, file formats control only how data is physically stored in data files. Table formats, in contrast, control how those data files are managed and organized, which enables efficient file scanning (avoiding slow directory listing), file versioning, and the other features mentioned earlier.
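Here is a minimal sketch of that difference, assuming two hypothetical lists: one standing in for an object-store directory listing and one for a table format's record of committed files. A listing returns whatever happens to be in storage, including debris from a failed write, while a table format plans reads from exactly the files its metadata lists.

```python
# Hypothetical illustration: these lists stand in for an object-store
# listing and for table-format metadata; no real storage API is used.

# What a directory listing returns: every object under the table's path,
# including a leftover from a write that failed before committing.
storage_listing = [
    "sales/part-0.parquet",
    "sales/part-1.parquet",
    "sales/tmp-failed-write.parquet",
]

# What a table format's metadata records as committed, live data files.
committed_files = {"sales/part-0.parquet", "sales/part-1.parquet"}

def files_by_listing(listing):
    """File-format-only approach: read every .parquet file that is present."""
    return [path for path in listing if path.endswith(".parquet")]

def files_by_metadata(committed):
    """Table-format approach: read exactly the files the metadata lists."""
    return sorted(committed)

print(files_by_listing(storage_listing))   # includes the failed write's file
print(files_by_metadata(committed_files))  # only the two committed files
```

Besides correctness, reading a file list from metadata avoids repeatedly listing large directories, which is slow on object stores.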

After learning about the context in which file and table formats like Parquet and Iceberg were developed, let’s compare their features.

Apache Parquet vs. Apache Iceberg: Key differences

Apache Parquet was created by Twitter and Cloudera in 2013 to serve analytical workloads on data lakes with a columnar file format, in contrast to row-based formats like CSV and Avro.

By the time Netflix created Iceberg in 2017, the data architecture patterns had shifted greatly with the introduction of Apache Arrow and Apache Hudi.

While Parquet was created primarily to serve concurrent, read-heavy workloads, Iceberg was created to bring transactions, version control, schema evolution, and other warehouse-style features to the lakehouse.

Let's now look at some of the key technical and capability differences between Apache Parquet and Apache Iceberg to clarify their purpose and suitability for specific use cases.

Here's a comparison table summarizing these differences.

| Comparing on | Apache Parquet | Apache Iceberg |
|---|---|---|
| Function in a data lakehouse | Works at the storage layer, storing data efficiently using columnar organization and compression | Works at the table format layer, managing and organizing data files to enable ACID transactions, schema evolution, and time travel |
| Transaction support | Doesn't handle or support transactions by itself | Uses snapshot isolation for consistent, concurrent reads and writes |
| Concurrency control | Isn't designed for multiple concurrent writers | Uses optimistic concurrency control so that multiple writers can safely commit to the same table |
| Query planning and optimization | Stores column statistics (such as min/max values) in file metadata, which query engines use for predicate pushdown | Uses manifest files with partition values and column-level statistics to prune data files before scanning, improving query performance |
| Partition management | Has no partitioning of its own; partitioning (typically Hive-style directories) is left to data engineers and query engines | Uses hidden partitioning and supports partition evolution without rewriting existing data |
| Schema evolution | Supports schema merging and evolution, but some changes may require rewriting whole partitions or tables | Supports adding, dropping, renaming, and reordering columns, as well as widening column types, without rewriting data |
| Point-in-time querying with time travel | Doesn't support point-in-time querying | Uses snapshots in the metadata layer to maintain historical versions of the data |
| When is it best to use? | Storing data for analytical consumption with read-heavy workloads | Designing data lakehouses for write-heavy workloads that need transactions, point-in-time querying, and schema evolution |
| Who uses it? | | Airbnb, Apple, Adobe, and Salesforce use Iceberg |
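The "Query planning and optimization" row rests on one simple idea: if a chunk of data records the minimum and maximum of a column, a reader can skip that chunk whenever the query's range cannot overlap it. Parquet keeps such statistics per row group in its file metadata, and Iceberg manifests keep per-file column bounds, so engines can skip whole files before opening them. The sketch below is a hypothetical toy model of that check; the chunk names and the `may_contain` helper are illustrative only.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ColumnStats:
    """Min/max bounds for one column in one chunk of data (think: a Parquet
    row group, or a data file tracked in an Iceberg manifest)."""
    name: str
    min_value: int
    max_value: int

def may_contain(stats: ColumnStats, lo: int, hi: int) -> bool:
    """False only when the chunk's [min, max] range cannot overlap [lo, hi]."""
    return not (stats.max_value < lo or stats.min_value > hi)

chunks = {
    "chunk-a": ColumnStats("amount", 0, 99),
    "chunk-b": ColumnStats("amount", 100, 499),
    "chunk-c": ColumnStats("amount", 500, 999),
}

# Predicate: amount BETWEEN 150 AND 300
to_scan = [name for name, stats in chunks.items() if may_contain(stats, 150, 300)]
print(to_scan)  # ['chunk-b']
```

The check is conservative: a chunk that passes may still contain no matching rows, but a chunk that fails is guaranteed to be irrelevant, so skipping it is always safe.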

The table above makes it clear that these technologies are meant to solve different problems. Despite some overlap, Parquet and Iceberg work well together. Let's find out how in the next section.

Apache Parquet and Apache Iceberg: How do they work together?

Before describing how Parquet and Iceberg work together, note that every data architecture needs a file format, but it doesn't necessarily need a table format, because some of that functionality is often offloaded to the catalog or the query engine.
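Partitioning is a good example of what gets offloaded. The sketch below contrasts Hive-style partition discovery, where an engine parses `key=value` directory names, with a simplified version of the hidden partitioning listed in the comparison table, where the table format derives the partition value from a column through a transform. The paths and helper names are hypothetical; `day_transform` mirrors the idea behind Iceberg's `day` transform (days since 1970-01-01) but is not Iceberg's implementation.

```python
from datetime import date, datetime

def hive_partition_values(path: str) -> dict:
    """Without a table format: infer partition values from Hive-style
    'key=value' directory names in a file path."""
    return dict(seg.split("=", 1) for seg in path.split("/") if "=" in seg)

def day_transform(ts: datetime) -> int:
    """Simplified day(ts) partition transform: whole days since 1970-01-01,
    derived from the timestamp column itself."""
    return (ts.date() - date(1970, 1, 1)).days

print(hive_partition_values("sales/event_date=2024-05-01/part-0.parquet"))
# {'event_date': '2024-05-01'}

# With hidden partitioning, a filter on the timestamp column can be mapped
# to a partition value, so users never reference a separate date column.
print(day_transform(datetime(2024, 5, 1, 13, 45)))  # 19844
```

In the Hive-style approach, users must know about and filter on `event_date` explicitly; with a transform recorded in table metadata, a filter on the timestamp alone is enough for the engine to prune partitions.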

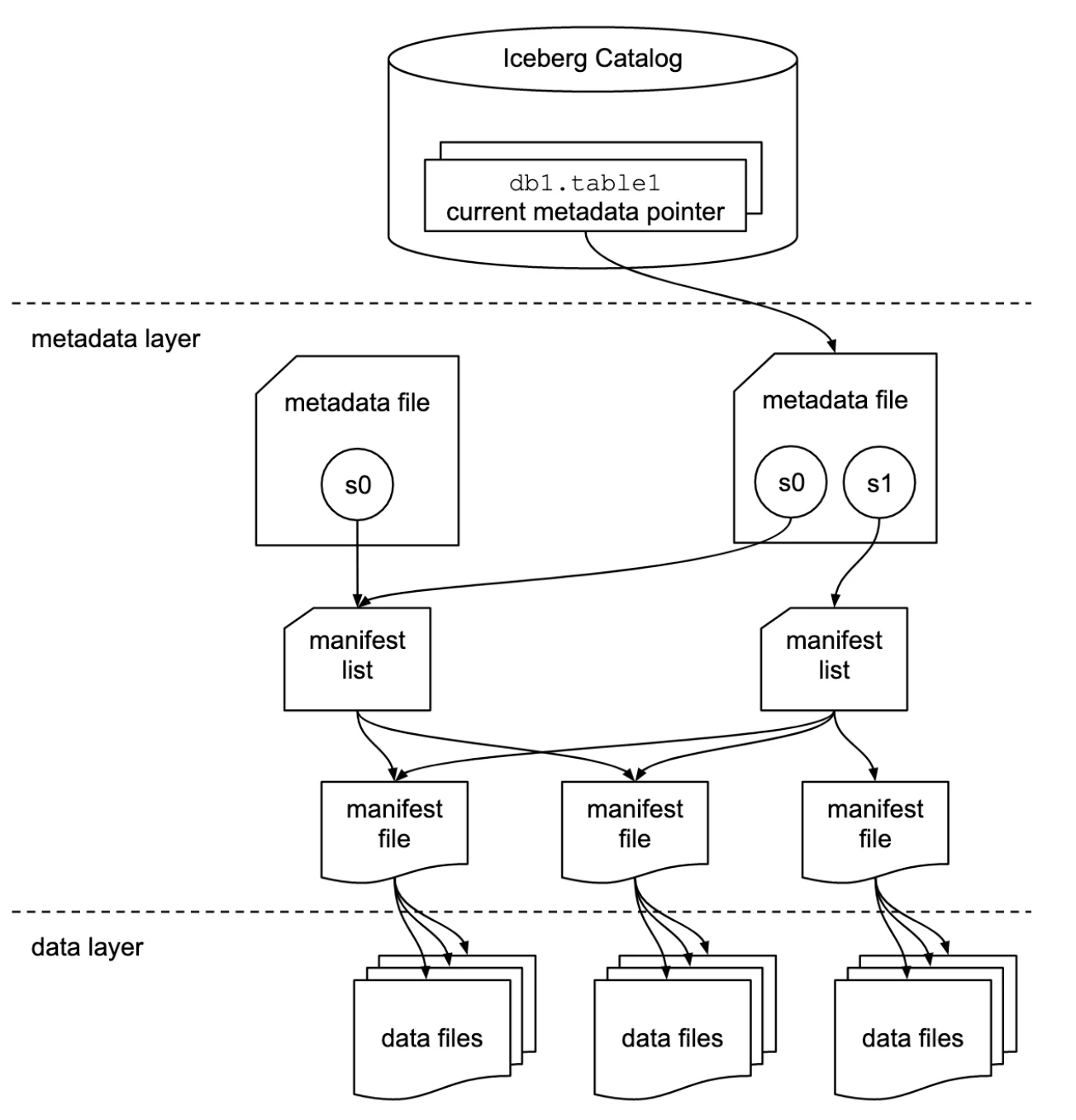

The Parquet + Iceberg pairing blossoms when implementing data lakehouse architectures because they complement each other quite well. For instance, Parquet can efficiently store data on disk, while Iceberg efficiently manages and organizes those Parquet data files on disk using its own internal mechanisms of snapshots and manifests.

Apache Parquet can form the data layer - Source: Apache Iceberg documentation.
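A minimal sketch of how snapshots enable time travel, assuming a heavily simplified model: real Iceberg metadata points to snapshots, each snapshot to a manifest list, and each manifest to data files, while this hypothetical `ToyIcebergTable` collapses all of that into one tuple of file paths per snapshot.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    data_files: Tuple[str, ...]  # stands in for the snapshot's manifests

class ToyIcebergTable:
    """Hypothetical model: every change appends a new immutable snapshot."""

    def __init__(self) -> None:
        self.snapshots: List[Snapshot] = []

    def _current_files(self) -> Tuple[str, ...]:
        return self.snapshots[-1].data_files if self.snapshots else ()

    def _commit(self, files: Tuple[str, ...]) -> None:
        # Older snapshots are kept, so earlier versions stay readable.
        self.snapshots.append(Snapshot(len(self.snapshots) + 1, files))

    def append(self, new_files: List[str]) -> None:
        self._commit(self._current_files() + tuple(new_files))

    def delete(self, removed: set) -> None:
        # Parquet files are never modified; a whole-file delete just drops
        # them from the new snapshot's file list.
        self._commit(tuple(f for f in self._current_files() if f not in removed))

    def scan(self, snapshot_id: Optional[int] = None) -> List[str]:
        """Files to read now, or as of an older snapshot (time travel)."""
        if snapshot_id is None:
            return list(self._current_files())
        for snap in self.snapshots:
            if snap.snapshot_id == snapshot_id:
                return list(snap.data_files)
        raise KeyError(f"unknown snapshot {snapshot_id}")

table = ToyIcebergTable()
table.append(["data/a.parquet"])  # snapshot 1
table.append(["data/b.parquet"])  # snapshot 2
table.delete({"data/a.parquet"})  # snapshot 3

print(table.scan())               # ['data/b.parquet']
print(table.scan(snapshot_id=2))  # ['data/a.parquet', 'data/b.parquet']
```

Because each snapshot is only a list of immutable files, a reader pinned to one snapshot sees a consistent view even while writers create newer ones.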

Parquet files are immutable, so Iceberg implements a separate metadata layer to capture schema changes. Parquet is one of the three file formats Iceberg supports (alongside ORC and Avro), and it is also the key file format used by two major Iceberg alternatives: Apache Hudi and Delta Lake.
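Iceberg can evolve a schema without rewriting immutable Parquet files because it tracks each column by a unique field ID (which it also writes into the Parquet files' schemas) rather than by name. The sketch below models that idea with plain dictionaries; the data and the `read_with_current_schema` helper are hypothetical, and real readers also handle nested fields and type promotion.

```python
# Hypothetical sketch: stored values are keyed by field ID, not column name.
# These rows were written when field 1 was still called "cust_name".
old_file_rows = [{1: "Asha", 2: 10.0}, {1: "Ben", 2: 4.5}]

# Current table schema (field ID -> name) after renaming field 1 to
# "customer_name" and adding a new optional field 3, "region".
table_schema = {1: "customer_name", 2: "amount", 3: "region"}

def read_with_current_schema(rows, schema):
    """Project stored rows onto the current schema by field ID; fields the
    old file never had (like "region") read as None."""
    return [{name: row.get(field_id) for field_id, name in schema.items()}
            for row in rows]

for record in read_with_current_schema(old_file_rows, table_schema):
    print(record)
# {'customer_name': 'Asha', 'amount': 10.0, 'region': None}
# {'customer_name': 'Ben', 'amount': 4.5, 'region': None}
```

The rename and the added column change only the table's metadata; the old data files are read as-is.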

Let's look at what else you need to get the most out of the Parquet + Iceberg combination.

Apache Parquet + Apache Iceberg environments: The need for a metadata control plane

Iceberg, while using Parquet as the underlying file format, lets you choose your catalog, with options such as AWS Glue Data Catalog, Project Nessie, a JDBC catalog, or a REST-based catalog.
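Whichever catalog you pick, its core job in Iceberg is to track where each table's current metadata file lives and to swap that pointer atomically on commit; this is what makes the optimistic concurrency control in the comparison table work. The in-memory `ToyCatalog` below is a hypothetical sketch of that compare-and-swap idea, not the API of the Glue, Nessie, JDBC, or REST catalogs.

```python
import threading

class CommitConflict(Exception):
    """Raised when another writer committed first."""

class ToyCatalog:
    """Hypothetical in-memory catalog: maps a table name to the location of
    its current metadata file and swaps that pointer atomically."""

    def __init__(self):
        self._pointers = {}
        self._lock = threading.Lock()

    def load(self, table):
        return self._pointers.get(table)

    def commit(self, table, expected, new_location):
        # Compare-and-swap: succeed only if nobody changed the pointer
        # since this writer loaded it (optimistic concurrency).
        with self._lock:
            if self._pointers.get(table) != expected:
                raise CommitConflict(table)
            self._pointers[table] = new_location

catalog = ToyCatalog()
catalog.commit("sales", None, "metadata/v1.json")

# Two writers start from the same base version.
base_a = catalog.load("sales")
base_b = catalog.load("sales")

catalog.commit("sales", base_a, "metadata/v2-a.json")  # writer A wins
try:
    catalog.commit("sales", base_b, "metadata/v2-b.json")  # writer B is stale
except CommitConflict:
    # Writer B reloads the latest metadata, reapplies its change, and retries.
    latest = catalog.load("sales")
    catalog.commit("sales", latest, "metadata/v3-b.json")

print(catalog.load("sales"))  # metadata/v3-b.json
```

Writers never lock the table while preparing their changes; they only risk a cheap retry if someone else committed in the meantime, which suits workloads where conflicts are rare.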

Snowflake created Polaris, a fully featured implementation of the Iceberg REST catalog API, and added features like access control and governance. However, organizations still need a metadata control plane that sits across their various technologies, stacks, and architectures, independent of the cloud platform.

Together, Parquet and Iceberg generate and maintain a wealth of metadata. To put that metadata to work, though, they need a fully featured platform, a control plane for metadata, that enables features like granular data lineage, role-based access control, a business glossary, and centralized data policies.

That’s where Atlan comes into the picture. A metadata control plane like Atlan sits on top of your disparate data infrastructure, effectively stitching it together via cataloged metadata – including that from Iceberg’s catalog.

Read more → What is a unified control plane for data?

Apache Parquet vs. Apache Iceberg: Wrapping up

Parquet and Iceberg solve different problems and work at different levels of the architecture. Even so, together they form a strong combination that provides some of the key features of a data lakehouse.

The Iceberg catalog helps by maintaining an internal catalog of all Iceberg assets. Still, it is usually not enough for an organization with a broad data stack, especially with diverse teams using different tools and technologies. That’s where the need for a control plane for metadata arises, i.e., something that brings all the data assets in one place for better visibility, exploration, governance, quality, and overall consumption.

To meet that need, you can use Atlan's integration with the Polaris catalog for Iceberg to bring all your Iceberg assets into Atlan, where many of the features above are already available and have been battle-tested by numerous organizations.

For more information on these integrations, check out the Atlan + Polaris integration or head over to Atlan’s official documentation for connectors.

Apache Parquet vs. Apache Iceberg: Related reads

- Apache Iceberg: All You Need to Know About This Open Table Format in 2025

- Apache Iceberg Data Catalog: What Are Your Options in 2025?

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
