Snowflake + Fivetran: Data movement for the modern data platform

Share this article

Snowflake is a data cloud platform that works on the core idea of separating storage and compute, allowing you to scale these two independently. Earlier, you would have had to buy more disk storage, even if you just needed more memory and compute power. Snowflake is also one of the few SaaS platforms that takes a lot of load off data engineers by managing your infrastructure, processing, etc., and allows you to manage everything with plain old SQL.

Scaling AI on Snowflake? Here’s the playbook - Watch Now

Fivetran is an automated data movement platform that provides you with a no-code solution for data ingestion and basic transformation. It also offers an integration with dbt Core to allow you to reshape data into different data models. Fivetran’s power lies in its built-in connectors’ ease of use. These connectors let you integrate with over 300 connectors having pre-defined schemas, so you don’t have to do a lot of work apart from setting the initial connection with the data source.

Modern data problems require modern solutions - Try Atlan, the data catalog of choice for forward-looking data teams! 👉 Book your demo today

Snowflake and Fivetran work well with each other and let you have a lean team to manage your data engineering workloads. This article will take you through how these platforms integrate and enable you to focus on reshaping data and making it consumable for analytics and visualization. We’ll go through the article under the following themes:

- Using Fivetran’s built-in connectors with Snowflake

- Setting up Fivetran for Snowflake

- Applying custom transformations in Snowflake with dbt Core

- Working with Fivetran logs for Snowflake

- Using Local Data Processing for CDC in Snowflake

- Activating metadata in Snowflake using Atlan

Let’s get to it!

Table of contents #

- Using Fivetran built-in connectors with Snowflake

- Setting up Fivetran for Snowflake

- Transforming data with Fivetran and dbt Core

- Working with Fivetran logs for Snowflake

- Using Local Data Processing for CDC in Snowflake

- Activating metadata in Snowflake using Atlan

- Snowflake + Fivetran: Related reads

Using Fivetran built-in connectors with Snowflake #

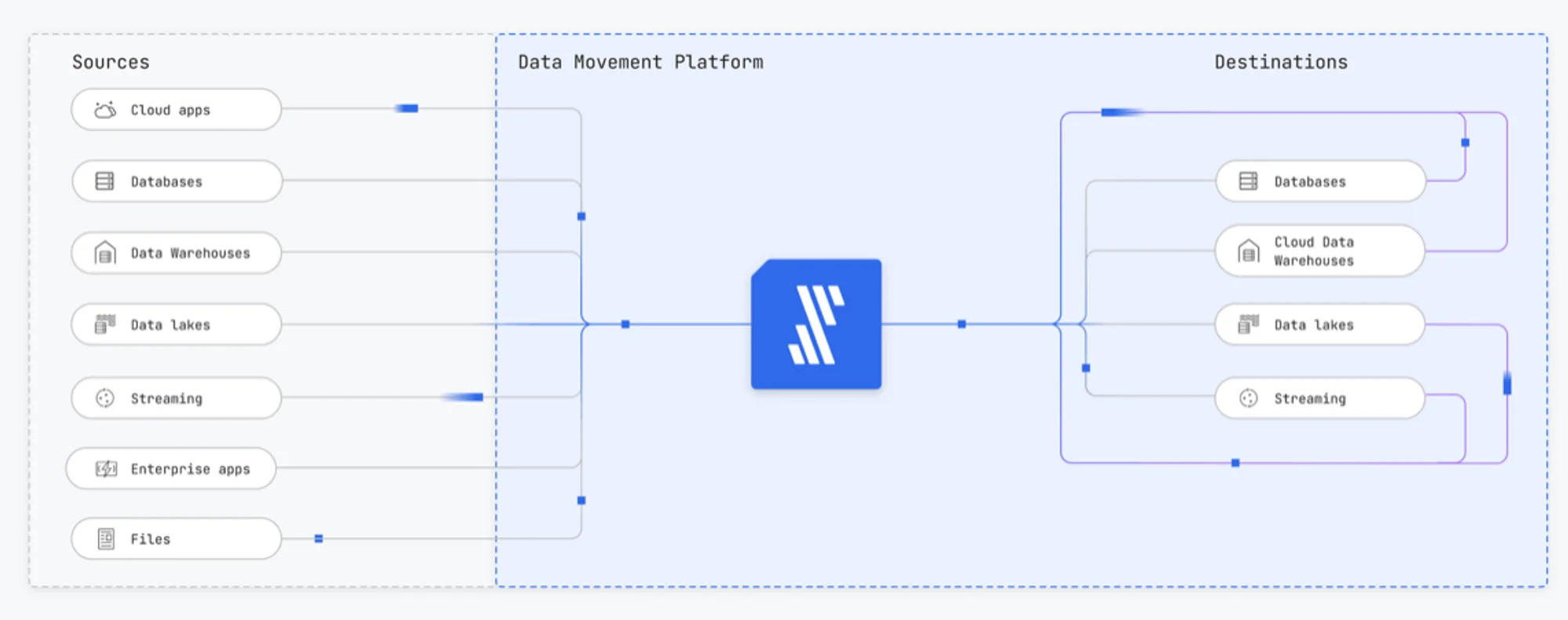

Fivetran follows a source-and-sink, connector-based approach to data movement. Examples of supported sources like various types of databases, third-party applications, events, file stores, and more. You can ingest data from any source into Snowflake and other destinations. This enables you to move and consolidate all your data from various sources to Snowflake using Fivetran, as shown in the image below:

Fivetran facilitating data movement from sources to destinations - Source: Fivetran documentation.

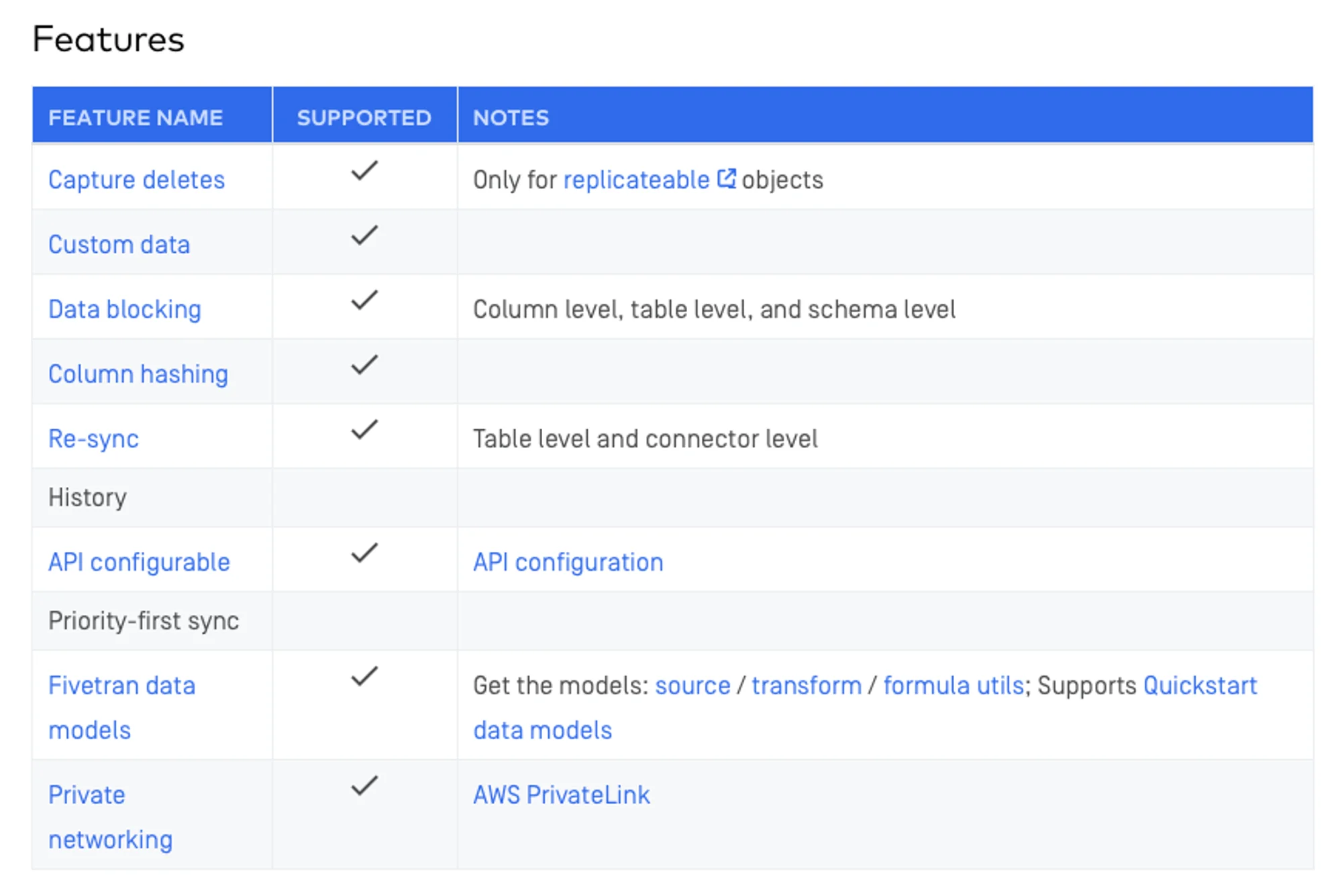

It’s important to note that all connectors (sources and destinations) don’t have the same level of support. All the supported features are mentioned in the connector documentation. Here’s what the Salesforce connector support looks like:

Salesforce connector supported features - Source: Fivetran documentation.

Fivetran creates one of the three types of schemas based on the support for the source connector - fully customizable, semi-customizable, and non-customizable. This schema is visible from your Fivetran dashboard once you connect to the data source. Now, let’s set up Fivetran to ingest data into Snowflake.

Setting up Fivetran for Snowflake #

Assuming you have access to a Fivetran role that allows you to create and manage destination permissions, you can create a Snowflake user for Fivetran. Here’s how you can do it:

- Assume the

SECURITYADMINrole in Snowflake - Create a role and a user for your Fivetran connection

- Create a database for Fivetran to use

- Create a warehouse that you’ll use for ingesting data from Fivetran

- Grant the role to the user

- Switch to

ACCOUNTADMINrole in Snowflake - Grant

USAGEto the Fivetran role on the warehouse - Grant

USAGEto the Fivetran role on the database - Grant

USAGEto sync to the Fivetran role on all schemas you want - Grant

SELECTto the Fivetran role on all current and future tables and views in the schemas you want to sync

Businesses with good security practices will likely not use purely username-password authentication to connect Fivetran and Snowflake. You can alternatively use a key-pair-based method to authenticate.

When you are dealing with highly sensitive PII and PHI data, you might opt for Snowflake’s Business Critical Edition, which allows you to use private networking services in your cloud platform, such as AWS Private Link, Azure Private Link, or Google Cloud Private Service Connect.

Transforming data with Fivetran and dbt Core #

When ingesting data into Snowflake, Fivetran provides two levels of transformations: Quickstart Data Models and Transformations with dbt Core. Quickstart Data Models are built into 20+ connectors like Salesforce, Adobe Analytics, Mixpanel, Shopify, and Stripe.

These data models don’t need a custom dbt project. Once you set up a Quickstart data model, Fivetran creates one for you. After that, you can customize the transformations based on your data reshaping and modeling requirements.

When using dbt Core to transform your data in Snowflake, you can run the transformations based on a schedule specified in Fivetran or your dbt project. Using the Fivetran-based schedule, you can achieve fully integrated, partially integrated, or independent scheduling. These differ in their support and regard for upstream models while transforming data.

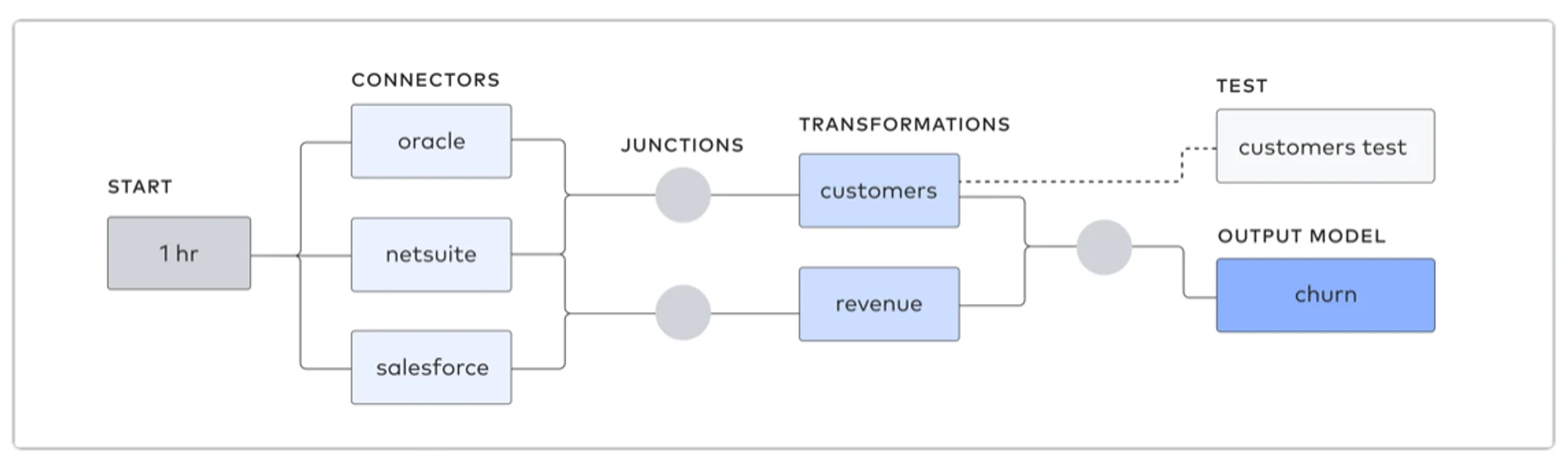

Connectors, junctions, and transformations in Fivetran - Source: Fivetran documentation.

The image above shows the constructs - start, connector, junction, transformation, output, and test - Fivetran uses to represent a data pipeline. You can manage all these natively from the Fivetran Dashboard.

Working with Fivetran logs for Snowflake #

While facilitating data movement, Fivetran logs activity on two levels: connector and account. Using the connector-level logs, you can view, analyze, and troubleshoot what’s happening with your source and destination connectors. Account-level logs, on the other hand, provide events from user actions, API calls, etc.

You can monitor the connector-level logs from the Fivetran GUI. Connector-level logs are kept for a week. The retention of account-level logs depends on your retention policy. You can use the Fivetran Platform Connector or external log services, such as AWS CloudWatch, Datadog, or Splunk, to monitor Fivetran logs.

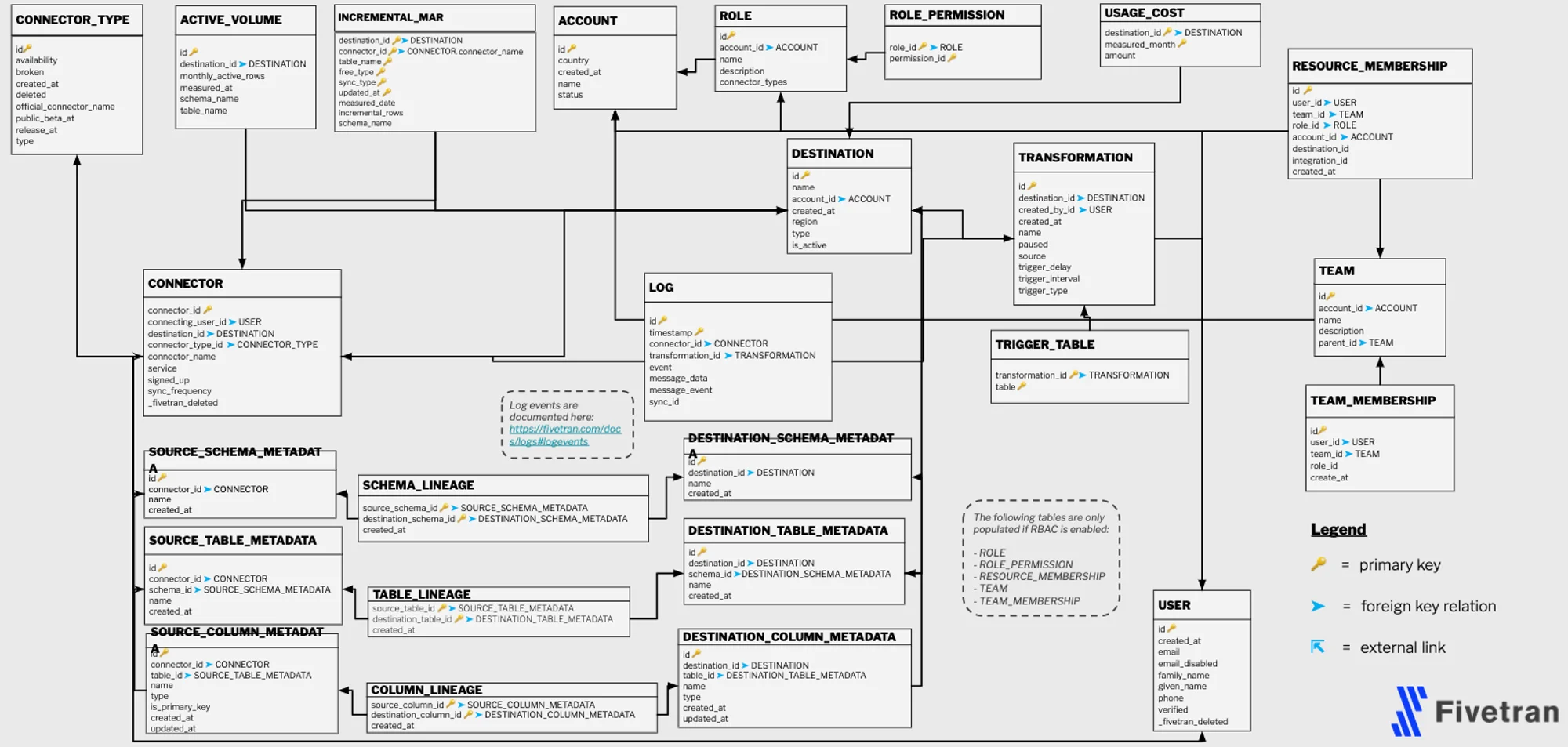

Although all activity is logged in these log events, the Fivetran Platform Connector logs provide a method of checking things like MAR (Monthly Active Rows) and records modified since the last sync using SQL queries, which is especially helpful with managing Fivetran cost. Fivetran creates the following schema in your target database:

Fivetran Platform Connector schema to be made in Snowflake - Source: Fivetran documentation.

Using this schema, you can write queries that allow you to calculate MAR grouped by connector, destination, and month, for instance:

SELECT schema_name,

destination_id,

DATE_TRUNC('MONTH', measured_date) AS measured_month,

SUM(incremental_rows) AS MAR

FROM incremental_mar

WHERE free_type = 'PAID'

GROUP BY schema_name, destination_id, measured_month

ORDER BY measured_month, schema_name;

More Snowflake-specific queries are available on the sample queries page on the Fivetran documentation.

Using Local Data Processing for CDC in Snowflake #

In late 2021, Fivetran acquired a CDC and data replication product company called HVR, which was known for real-time data replication between both homogeneous and heterogeneous locations. The product was later integrated into Fivetran as Local Data Processing. This is an excellent method for low-latency real-time data replication that allows you to preserve transactional integrity in a non-blocking manner as it queries the database log instead of the database directly.

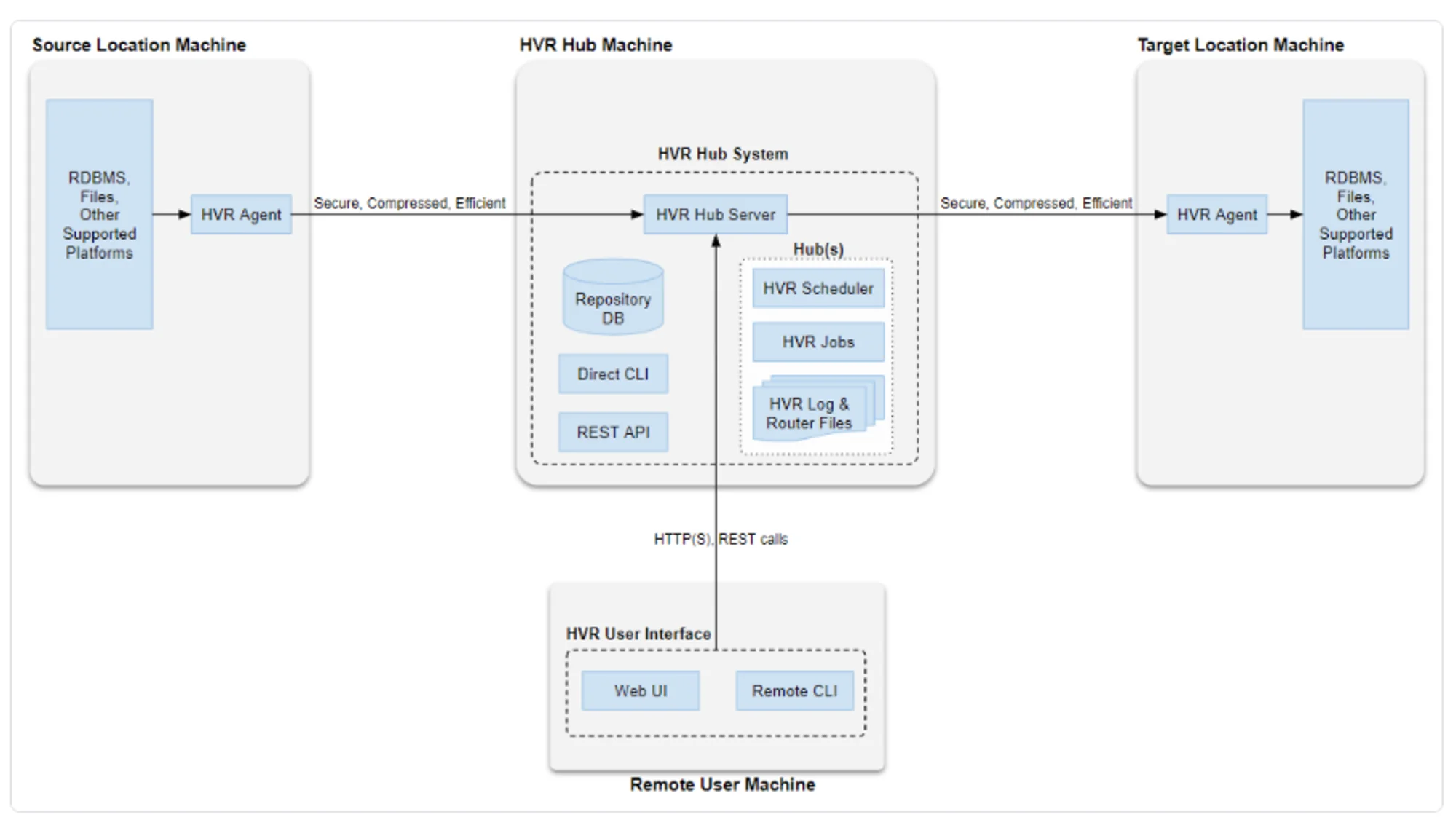

Your source location can be on-premises or in the cloud. To extract data, you must install an HVA (High Volume Agent) on a Linux, Windows, or Mac instance to facilitate traffic between your source location and HVR Hub. The HVR Hub Server then moves the data in a secure and compressed manner to the target location, which, in this case, would be Snowflake. The ingestion of this data into Snowflake will also be facilitated by an HVA agent, as shown in the representation of the Local Data Processing architecture below:

Local Data Processing (HVR6) Architecture - Source: Fivetran documentation.

In addition to database-to-database movement, Local Data Processing supports various other CDC and data movement patterns, such as file-to-file, file-to-database, and database-to-file. This enables you to use Local Data Processing for different data architectures, including data warehouses, data lakes, data archives, data lakehouses, and more.

Activating metadata in Snowflake using Atlan #

This article showed how you can supercharge your data platform using fully managed no-code and SaaS platforms like Snowflake and Fivetran. Platforms like these do a great job of bringing all your siloed data together in one place. Bringing data together, however, doesn’t automatically solve the problem of data visibility and observability. Technical metadata is present in Fivetran and Snowflake, but it is hard to use it to any meaningful effect without much effort.

Atlan is a metadata activation platform that takes source and destination metadata to provide clear visibility of your whole data platform to enable data discovery, lineage, and governance use cases. Atlan partners with all three platforms discussed throughout this article - Snowflake, Fivetran, and dbt.

Snowflake + Fivetran: Related reads #

- Snowflake Summit 2025: How to Make the Most of This Year’s Event

- Snowflake Data Catalog: Importance, Benefits, Native Capabilities & Evaluation Guide

- Snowflake Data Governance: Features, Frameworks & Best practices

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Snowflake Data Lineage: A Step-by-Step How to Guide

- Snowflake Data Dictionary: Documentation for Your Database

- Snowflake Metadata Management: Importance, Challenges, and Identifying The Right Platform

Share this article