Apache Iceberg Architecture: Learn About the Core Components & Their Functions

Last Updated on: April 10th, 2025 | 9 min read


Apache Iceberg is a table format for large-scale analytics on data lakes. It emerged to address limitations in Apache Hive’s architecture, such as slow data evolution, inefficient reads/writes, and lack of ACID transaction support.

This article will take you through Apache Iceberg’s core architectural components and explore their functionality, enabling features, and synergies. We’ll also cover key aspects of data cataloging with Iceberg catalogs and the need for a metadata control plane.

Table of Contents

- Apache Iceberg architecture: Three core components

- Apache Iceberg: Catalog options to consider

- Why do you need a metadata control plane for your Iceberg ecosystem?

- Summing up

- Frequently Asked Questions about Apache Iceberg Architecture

Apache Iceberg architecture: Three core components

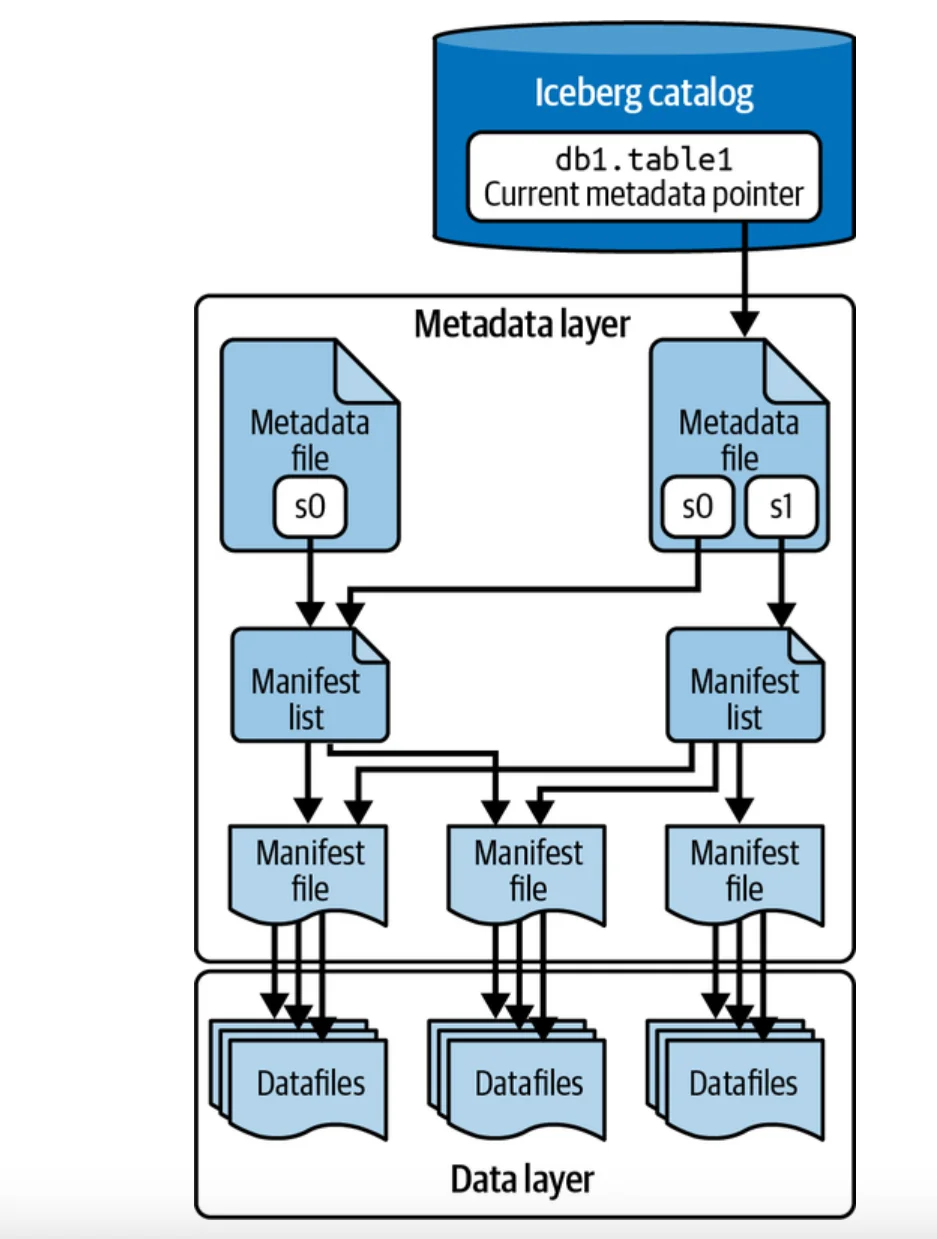

The Apache Iceberg architecture has three core components:

- The catalog layer

- The metadata layer

- The storage backend (i.e., the data layer)

Apache Iceberg architecture - Source: O’Reilly Media.

Each of the three components listed above is tied to a layer of abstraction in Iceberg. Let’s go through the specifics of each of these layers briefly.


1. Apache Iceberg architecture: The catalog layer

Many of Iceberg’s core features, such as partition evolution, schema evolution, time travel, and rollback, are based on its ability to manage versions of table metadata.

In Iceberg, the metadata layer maintains the versions themselves, and the catalog layer tracks and maintains pointers to the latest versions of table metadata. Having pointers to the latest table metadata is extremely important as it allows all Iceberg readers and writers to get a consistent view of the data in real time, partly enabling Iceberg to support ACID transactions.
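To make the pointer idea concrete, here is a minimal Python sketch of a catalog that maps a table name to its current metadata file and accepts a commit only when the writer's expected pointer still matches. The `ToyCatalog` class, its methods, and the file paths are hypothetical illustrations, not Iceberg's actual API.

```python
import threading


class CommitConflict(Exception):
    """Raised when another writer moved the pointer first."""


class ToyCatalog:
    """Hypothetical in-memory catalog: table name -> current metadata file path."""

    def __init__(self):
        self._pointers = {}
        self._lock = threading.Lock()

    def current_metadata(self, table):
        # Readers always start from whatever the pointer says right now.
        with self._lock:
            return self._pointers.get(table)

    def commit(self, table, expected, new):
        # Compare-and-swap: succeed only if nobody committed since `expected` was read.
        with self._lock:
            if self._pointers.get(table) != expected:
                raise CommitConflict(f"{table}: pointer is no longer {expected!r}")
            self._pointers[table] = new


catalog = ToyCatalog()
catalog.commit("db.events", expected=None, new="metadata/v1.metadata.json")

# Two writers both read v1 as their base version.
base = catalog.current_metadata("db.events")
catalog.commit("db.events", expected=base, new="metadata/v2.metadata.json")  # first writer wins

try:
    catalog.commit("db.events", expected=base, new="metadata/v2-other.metadata.json")
    second_writer_committed = True
except CommitConflict:
    # The losing writer would re-read the pointer, rebase its changes, and retry.
    second_writer_committed = False
```

Because the swap is all-or-nothing, readers see either the old metadata file or the new one, never a half-applied change.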

One of the key distinguishing factors of Iceberg is that it lets you choose from a range of catalogs to track these metadata pointers. We’ll explore the catalog options later in the article.


Next, let’s discuss how the metadata layer works.

2. Apache Iceberg architecture: The metadata layer

Iceberg’s metadata layer maintains all the granular details about tables. As seen in the Apache Iceberg architecture diagram provided earlier, the metadata layer organizes this information in three separate constructs that work together:

- Metadata files: Metadata files are JSON files containing information about the schema and partitions, along with the history of snapshots and a reference to the current snapshot.

The catalog layer uses these files to point to the latest version (or an older one).

- Manifest lists: Manifest lists are files stored in Avro format. They contain a list of manifest files with useful statistics, such as size, path, snapshot references, and partition bounds.

- Manifest files: The Iceberg documentation describes a manifest file as a “metadata file that lists a subset of data files that make up a snapshot.”

Like manifest lists, manifest files are also stored in Avro format. These files keep track of data files and delete files, which form the basis for enabling features like partition pruning and overall query optimizations.

With the wealth of metadata collected in the metadata layer, Iceberg can optimize query planning and enable partitioning evolution and time travel, among other things.
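As a rough illustration of how these constructs fit together during query planning, the sketch below models the metadata tree with plain Python dictionaries. It walks from a snapshot down to data files and skips manifests whose partition bounds cannot match the filter. All field names (`snapshots`, `partition_min`, and so on), paths, and values are simplified assumptions rather than the real Iceberg file schemas.

```python
# Simplified in-memory stand-ins for the JSON metadata file and Avro manifests.
metadata_file = {
    "current_snapshot_id": 2,
    "snapshots": {
        1: {"manifest_list": "snap-1.avro"},
        2: {"manifest_list": "snap-2.avro"},
    },
}

# Manifest list: one entry per manifest file, with partition bounds for pruning.
manifest_lists = {
    "snap-1.avro": [
        {"path": "m1.avro", "partition_min": "2025-01-01", "partition_max": "2025-01-31"},
    ],
    "snap-2.avro": [
        {"path": "m1.avro", "partition_min": "2025-01-01", "partition_max": "2025-01-31"},
        {"path": "m2.avro", "partition_min": "2025-02-01", "partition_max": "2025-02-28"},
    ],
}

# Manifest files: the data files each manifest tracks.
manifests = {
    "m1.avro": ["data/jan-0.parquet", "data/jan-1.parquet"],
    "m2.avro": ["data/feb-0.parquet"],
}


def plan_files(day, snapshot_id=None):
    """Return the data files that may hold rows for `day` (ISO date string).

    Passing an older `snapshot_id` mimics a time-travel read.
    """
    if snapshot_id is None:
        snapshot_id = metadata_file["current_snapshot_id"]
    snapshot = metadata_file["snapshots"][snapshot_id]

    files = []
    for entry in manifest_lists[snapshot["manifest_list"]]:
        # Prune: skip manifests whose partition range cannot contain `day`.
        if entry["partition_min"] <= day <= entry["partition_max"]:
            files.extend(manifests[entry["path"]])
    return files


february_now = plan_files("2025-02-14")
february_as_of_snapshot_1 = plan_files("2025-02-14", snapshot_id=1)
january_now = plan_files("2025-01-05")
```

In this toy setup, the February query never opens `m1.avro`, and the same query against snapshot 1 finds no files because the February data had not been committed yet.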

All the files in the metadata layer are stored with the actual data in the storage backend, which brings us to the data layer.

3. Apache Iceberg architecture: The data layer

The data layer is where the data lives. This layer is tied to a storage backend like Amazon S3, Azure Data Lake Storage, Google Cloud Storage, or HDFS (Hadoop Distributed File System).

Iceberg supports three popular file formats for its data files: Avro, Parquet, and ORC.

Iceberg also has the concept of delete files, which record logical (soft) deletions so that existing data files stay immutable. This immutability underpins several key features in Iceberg, including point-in-time queries and partition evolution. It also avoids rewriting a whole data file when only a few of its records change.

There are three types of delete files based on how records are identified:

- Positional delete files: These identify deleted records by the path of the data file and the position of the row within that file. They were introduced in the v2 spec of Iceberg.

- Equality delete files: These identify which records to delete by matching one or more column values.

- Deletion vectors: Created based on Renjie Liu’s proposal, deletion vectors have significant optimizations over positional deletes; they’re available from the v3 spec.
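The following sketch shows, under simplifying assumptions, how a reader might merge positional and equality deletes with an immutable data file at read time. The in-memory structures and the `read_with_deletes` function are hypothetical; real delete files carry more fields and sequence-number rules that are omitted here.

```python
# One immutable data file, modelled as a list of rows (position = list index).
data_files = {
    "data/part-0.parquet": [
        {"id": 1, "country": "US"},
        {"id": 2, "country": "DE"},
        {"id": 3, "country": "US"},
        {"id": 4, "country": "IN"},
    ],
}

# Positional deletes: (file path, row position) pairs.
positional_deletes = {("data/part-0.parquet", 1)}

# Equality deletes: any row matching all listed column values is treated as deleted.
equality_deletes = [{"country": "IN"}]


def read_with_deletes(path):
    """Return live rows of `path` without modifying the data file itself."""
    live = []
    for position, row in enumerate(data_files[path]):
        if (path, position) in positional_deletes:
            continue  # deleted by file path + row position
        if any(all(row.get(col) == val for col, val in cond.items())
               for cond in equality_deletes):
            continue  # deleted by column-value match
        live.append(row)
    return live


live_rows = read_with_deletes("data/part-0.parquet")
```

The data file still holds all four rows after the read. Only the reader's view changes, which is what keeps older snapshots queryable.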

Now that we’ve talked about the core components of the Apache Iceberg architecture, let’s look at Iceberg’s catalogs, which form the foundation for metadata storage and management in Iceberg.

Apache Iceberg: Catalog options to consider

Iceberg allows you to use a pluggable catalog that suits your organization’s needs. It also allows you to build and implement your own Iceberg catalog with various methods. Here are the most common catalogs available for Iceberg:

- Hive Metastore: Hive metastore is most often associated with legacy Hadoop deployments. It can also be used as the backend for storing Iceberg metadata.

- Hadoop Catalog: Hadoop Catalog is a file-system-based option used by legacy Hadoop deployments. It is also the catalog used for BigQuery tables for Apache Iceberg.

- AWS Glue Data Catalog: AWS Glue Data Catalog is most frequently used with AWS-native data platform deployments due to seamless integration with AWS data services and Hive compatibility of AWS Glue Data Catalog.

- JDBC Catalog: JDBC Catalog is an option used when you want to implement your catalog backend in a database like MySQL, PostgreSQL, SQL Server, etc.

- REST Catalog: REST Catalog is the most advanced option, allowing you to manage metadata using REST APIs. Implementations of the REST catalog include Project Nessie and Apache Polaris.

A key point to understand is that all these catalog options support core features such as ACID transactions, snapshots, and time travel. Some of them also support advanced features such as Git-like version control.

However, these catalogs are only meant for Iceberg’s internal function, which brings us to the need for a metadata control plane.


Why do you need a metadata control plane for your Iceberg ecosystem?

Iceberg catalogs should not be confused with an organization-wide data catalog, which serves as the foundation for unlocking use cases like governance, collaboration, and lineage. A control plane for metadata is needed to fulfill those needs.

Such a metadata control plane sits horizontally across your organization’s data ecosystem. It brings metadata from all your data assets together in one place, not just for cataloging but also for governing, profiling, analyzing, and making full use of those assets.

This is precisely what Atlan does – Atlan examines your data discovery, cataloging, lineage, collaboration, governance, and documentation needs and brings them all under a single roof, which then acts as the metadata control plane. Atlan integrates with Iceberg using the Apache Polaris catalog.

Summing up

We’ve covered the various aspects of Iceberg architecture and the purpose they serve. We also saw how the Iceberg catalog is only meant for internal operations and metadata management.

To reiterate, the Iceberg catalog is a great source of metadata. However, it doesn’t address data cataloging, discovery, governance, lineage, and quality use cases the way a metadata control plane such as Atlan does. If you’re working with Iceberg tables and want to implement such a control plane for your organization, please check out Atlan’s integration with Iceberg via Apache Polaris.

FAQs about Apache Iceberg architecture

What are the core components of Apache Iceberg’s architecture?

Apache Iceberg’s architecture comprises three main components:

- Catalog Layer: Manages table metadata and provides a consistent view of data across different engines.

- Metadata Layer: Stores detailed information about table schemas, partitions, snapshots, and data files.

- Data Layer: Contains the actual data files stored in formats like Parquet, Avro, or ORC, typically on distributed storage systems.

These components work together to enable features like ACID transactions, schema evolution, and time travel.

What are the catalog options available for Apache Iceberg, and how do they differ?

Apache Iceberg supports various catalog implementations to manage table metadata:

- Hive Metastore: Integrates with existing Hive deployments.

- Hadoop Catalog: Stores metadata in a Hadoop-compatible filesystem.

- AWS Glue Data Catalog: Integrates with AWS services for metadata management.

- JDBC Catalog: Uses relational databases like MySQL or PostgreSQL for metadata storage.

- REST Catalog: Provides a RESTful interface for metadata operations, facilitating integration with various services.

The choice of catalog depends on the specific infrastructure and integration requirements of the organization.

How does Apache Iceberg handle schema evolution?

Apache Iceberg supports schema evolution by allowing changes to table schemas without requiring a full rewrite of the data. This is achieved through its metadata layer, which tracks schema changes so that data files written under older schemas can still be read through the current schema. This makes it possible to add, rename, or drop columns as needed.
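One way to picture this: Iceberg's table spec identifies columns by unique field IDs rather than by name, so a rename or an added column is a metadata-only change. The sketch below is a simplified illustration of that idea; the dictionaries and the `project` helper are hypothetical and not part of any Iceberg library.

```python
# Schema the old data file was written with: field ID -> column name.
old_schema = {1: "id", 2: "ts", 3: "legacy_flag"}

# Current table schema after: rename ts -> event_time, drop legacy_flag, add country.
current_schema = {1: "id", 2: "event_time", 4: "country"}

# A row from an old data file, keyed by field ID (the file is never rewritten).
old_row = {1: 42, 2: "2025-04-10T12:00:00", 3: True}


def project(row_by_id, schema):
    """Read a stored row through `schema`, matching columns by field ID."""
    # Missing IDs (columns added later) read as None; dropped IDs are ignored.
    return {name: row_by_id.get(field_id) for field_id, name in schema.items()}


as_written = project(old_row, old_schema)
as_read_today = project(old_row, current_schema)
```

The same stored row appears with `ts` under the old schema and `event_time` under the current one, with no data file touched.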

How does Apache Iceberg implement ACID guarantees?

Apache Iceberg supports ACID guarantees through its metadata and snapshot-based design:

- Atomic writes are achieved by writing new metadata trees and atomically updating the catalog pointer to the latest metadata.

- Consistency is maintained because all readers access the same snapshot of data via the catalog.

- Isolation is ensured through snapshot isolation, allowing readers and writers to work without blocking each other.

- Durability comes from writing to durable storage backends like S3, GCS, or HDFS.

This architecture makes Iceberg safe for concurrent workloads in production-grade lakehouses.
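Here is a minimal sketch of the snapshot-isolation idea described above, assuming a toy table whose snapshots are immutable tuples of file names. The `ToyTable` class and its methods are hypothetical and only illustrate why a reader that has pinned a snapshot is unaffected by a later commit.

```python
class ToyTable:
    """Hypothetical table: an append-only list of immutable snapshots."""

    def __init__(self):
        self._snapshots = [()]  # snapshot 0 is the empty table
        self._current = 0       # stands in for the catalog pointer

    def current_snapshot_id(self):
        return self._current

    def files(self, snapshot_id):
        return self._snapshots[snapshot_id]

    def append(self, new_files):
        # Writers never mutate an existing snapshot; they add a new one
        # and then move the pointer in a single step.
        snapshot = self._snapshots[self._current] + tuple(new_files)
        self._snapshots.append(snapshot)
        self._current = len(self._snapshots) - 1
        return self._current


table = ToyTable()
table.append(["data/a.parquet"])

# A reader pins the snapshot that is current when its query starts.
reader_snapshot = table.current_snapshot_id()

# A writer commits while the reader is still running.
table.append(["data/b.parquet"])

reader_view = table.files(reader_snapshot)
latest_view = table.files(table.current_snapshot_id())
```

The reader keeps seeing only `data/a.parquet`, while any query that starts after the commit sees both files.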

How does Apache Iceberg handle table creation and deletion?

Apache Iceberg ensures atomic table creation by first writing a new metadata tree and then committing it to the catalog. If a failure occurs during this process, the catalog doesn’t register the table, preventing partial or corrupted tables.

For table deletion, Iceberg uses a two-phase process. First, it removes the table from the catalog. Then, background jobs handle the cleanup of metadata and data files. This ensures that deletion is safe, avoids race conditions, and doesn’t impact active queries or processes.

How does Apache Iceberg ensure ACID transactions in a data lake environment?

Apache Iceberg ensures ACID (Atomicity, Consistency, Isolation, Durability) transactions through its architecture:

- Atomicity and Consistency: Each commit is applied as a single atomic change, and snapshots give every reader a consistent view of the data.

- Isolation: Readers and writers operate on isolated snapshots, preventing interference between concurrent operations.

- Durability: Once a transaction is committed, it is durable and will not be lost, as changes are tracked in metadata files.

This design allows multiple engines to safely read and write data concurrently.
