Working with Apache Iceberg in Azure: A Complete Guide for 2025


Apache Iceberg was a late addition to the Azure stack. In 2022, Azure Synapse Analytics started supporting Apache Iceberg.

Since then, other tools in the Azure stack, including Azure Data Factory, Microsoft OneLake, and Microsoft Fabric, have also started supporting Apache Iceberg in various capacities. In this article, we’ll look at the various ways the Azure ecosystem supports Apache Iceberg.


As Apache Iceberg is driven by its underlying metadata management system, this article will also discuss its pros and cons and the need for a metadata control plane to bring all your data assets together in one place.

Running Iceberg on Azure is one part of the multi-cloud story — Atlan’s Apache Iceberg guide covers the full landscape: architecture, cross-cloud integrations, format comparisons, and governance.

Table of Contents

- Apache Iceberg in Azure: How does it work?

- Apache Iceberg in Azure: The need for a metadata control plane

- Summing up

- FAQs about working with Apache Iceberg in Azure

- Working with Apache Iceberg in Azure: Related reads

Apache Iceberg in Azure: How does it work?

As mentioned earlier, Apache Iceberg support in the Azure stack is relatively recent. Until 2022, Apache Hive and Delta Lake were the predominant table formats, both because of Azure’s history with the Hadoop ecosystem and because Databricks is available as a first-class service within Azure (Azure Databricks).

Let’s look at the various ways in which the Azure tech stack supports Apache Iceberg, starting with Azure Synapse Analytics and Data Factory.

Apache Iceberg with Azure Synapse Analytics and Data Factory

Azure Synapse supports Spark Pools for in-memory processing to boost performance when dealing with large-scale data. Spark Pools are serverless Apache Spark environments that can help you process data quickly.

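Azure Synapse supports Iceberg with Spark Pools by allowing you to register an Iceberg SparkCatalog whose catalog type is Hadoop, which means Iceberg tracks table metadata directly in the storage location rather than in an external metastore. Here’s an example of how you can configure Iceberg in Azure Synapse.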

spark.conf.set("spark.sql.catalog.iceberg", "org.apache.iceberg.spark.SparkCatalog")

spark.conf.set("spark.sql.catalog.iceberg.type", "hadoop")

spark.conf.set("spark.sql.catalog.iceberg.warehouse", "abfss://<container-name>@<storage-account-name>.dfs.core.windows.net/iceberg/")

Synapse’s storage integration allows you to store all the Iceberg data in Azure Data Lake Storage (ADLS).

You can process that data using Spark Pools. You can also read and write from/to these Iceberg tables directly from Synapse.
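As a minimal sketch of how the three catalog settings fit together, the helper below builds them from a container and storage account name and applies them to a Spark session. The function names (`abfss_uri`, `iceberg_catalog_conf`, `apply_conf`) and the table name in the closing comment are hypothetical. The sketch assumes the fully qualified ADLS Gen 2 URI form `abfss://<container>@<account>.dfs.core.windows.net/<path>` and that the Iceberg Spark runtime is already available on the Spark Pool.

```python
def abfss_uri(container: str, account: str, path: str = "") -> str:
    """Build an ADLS Gen 2 URI of the form abfss://<container>@<account>.dfs.core.windows.net/<path>/."""
    base = f"abfss://{container}@{account}.dfs.core.windows.net"
    clean = path.strip("/")
    return f"{base}/{clean}/" if clean else f"{base}/"


def iceberg_catalog_conf(catalog: str, container: str, account: str, path: str = "iceberg") -> dict:
    """Return the Spark properties that register a Hadoop-type Iceberg catalog."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "hadoop",
        f"{prefix}.warehouse": abfss_uri(container, account, path),
    }


def apply_conf(spark, conf: dict) -> None:
    """Apply each property to a SparkSession-like object that exposes conf.set(key, value)."""
    for key, value in conf.items():
        spark.conf.set(key, value)


# In a Synapse notebook, where `spark` is predefined (the table name is hypothetical):
# apply_conf(spark, iceberg_catalog_conf("iceberg", "<container-name>", "<storage-account-name>"))
# spark.sql("CREATE TABLE IF NOT EXISTS iceberg.db.events (id BIGINT) USING iceberg")
```

Keeping the properties in a plain dictionary makes it easy to review or log the exact warehouse location before any table is created.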

Now, let’s look at moving Iceberg data during the ETL process using Azure Data Factory.

Azure Data Factory (ADF) is a popular tool for moving, copying, transforming, and orchestrating data between sources, intermediate layers, and target systems.

ADF allows you to use the Copy activity to copy data in various file formats between on-premises and cloud locations. Iceberg is one of those formats. However, the Copy activity’s support for Apache Iceberg is limited to Azure Data Lake Storage Gen 2.

Note: Azure calls Iceberg a file format instead of a table format.
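To make the Copy activity setup more concrete, here is a rough sketch that assembles the JSON for an Iceberg dataset on ADLS Gen 2 and a matching Copy activity sink. The helper names and the example dataset, linked service, and folder values are hypothetical. The property names (`Iceberg`, `AzureBlobFSLocation`, `IcebergSink`, `IcebergWriteSettings`, `AzureBlobFSWriteSettings`) follow the pattern ADF uses for its format datasets and should be treated as assumptions to verify against the current ADF documentation.

```python
import json


def iceberg_dataset(name: str, linked_service: str, file_system: str, folder_path: str) -> dict:
    """Assemble a dataset definition that points at an Iceberg folder in ADLS Gen 2 (assumed schema)."""
    return {
        "name": name,
        "properties": {
            "type": "Iceberg",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobFSLocation",
                    "fileSystem": file_system,
                    "folderPath": folder_path,
                }
            },
        },
    }


def iceberg_sink() -> dict:
    """Assemble the sink section of a Copy activity that writes Iceberg (assumed schema)."""
    return {
        "type": "IcebergSink",
        "formatSettings": {"type": "IcebergWriteSettings"},
        "storeSettings": {"type": "AzureBlobFSWriteSettings"},
    }


if __name__ == "__main__":
    # Example values are placeholders, not names from a real factory.
    dataset = iceberg_dataset("IcebergDataset", "AdlsGen2LinkedService", "lake", "iceberg/events")
    print(json.dumps(dataset, indent=2))
    print(json.dumps(iceberg_sink(), indent=2))
```

The dataset type is declared the same way as a file format such as Parquet, which matches the note above about Azure treating Iceberg as a file format.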

Delta Lake + Apache Iceberg interoperability in OneLake

Microsoft OneLake is a unified data lake platform that integrates object stores from multiple cloud platforms, such as ADLS Gen 2, Amazon S3, and Google Cloud Storage. As such, you can work with data regardless of storage location without copying or moving it around.

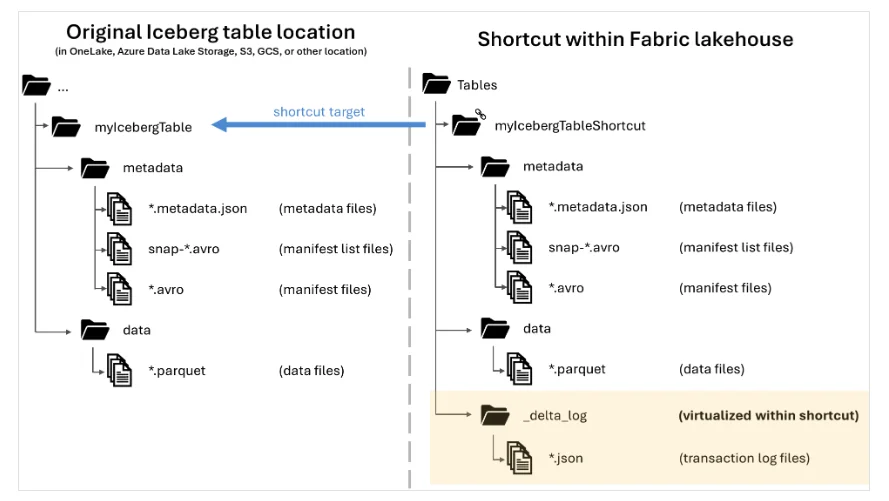

OneLake enables the creation of virtual links between various storage locations when the data is managed using one of the open table formats like Delta Lake or Apache Iceberg. It lets you create these virtual links or shortcuts to Iceberg tables so that they become directly available within Fabric.

Creating shortcuts to Iceberg tables in Microsoft Fabric - Source: Microsoft documentation.

As OneLake’s default table format is Delta Lake, creating a shortcut for an Iceberg table also creates a Delta Log entry under the folder containing the Iceberg table metadata in Delta Lake format.

Although this feature is currently in preview and only works when you are writing Iceberg tables from Snowflake to OneLake, it is designed to be engine-independent. In principle, that design leaves room for writing Iceberg tables into OneLake from other platforms and query engines, such as Databricks, Spark, Trino, and Presto.
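The side-by-side layout described in this section, with Iceberg metadata and a Delta log living under the same table folder, can be illustrated with a small check. This is a sketch that inspects a local or mounted copy of a table folder; the function name `detect_table_formats` is hypothetical. It relies on two general conventions: Iceberg keeps `*.metadata.json` files in a `metadata/` folder, and Delta Lake keeps JSON commit files in a `_delta_log/` folder.

```python
from pathlib import Path


def detect_table_formats(table_dir) -> set:
    """Report which table-format metadata is present under a table folder."""
    root = Path(table_dir)
    formats = set()

    # Iceberg keeps table metadata files (*.metadata.json) in a metadata/ folder.
    iceberg_dir = root / "metadata"
    if iceberg_dir.is_dir() and any(iceberg_dir.glob("*.metadata.json")):
        formats.add("iceberg")

    # Delta Lake keeps its transaction log as JSON commit files in a _delta_log/ folder.
    delta_dir = root / "_delta_log"
    if delta_dir.is_dir() and any(delta_dir.glob("*.json")):
        formats.add("delta")

    return formats
```

A folder that reports both formats is one that Iceberg readers and Delta readers can each interpret through their own metadata, without the data files being copied.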

Microsoft Purview data quality for Iceberg tables

In early 2025, Microsoft Purview started supporting Apache Iceberg for data discovery, profiling, and quality. While Purview supports Iceberg for data quality, which allows you to access the data quality metrics from the Data Quality schema page, the support for Iceberg assets in Purview Data Map is still lacking. So, you can’t find Iceberg tables in the data asset view.

You can still configure Iceberg assets in Microsoft Purview Unified Catalog and associate them with a data product. Then, you can define and apply data quality rules to the associated data assets. This results in data quality scans at the table and column levels, which generate a table and column profile.

It’s important to note that the current support for Apache Iceberg data assets is limited to the Hadoop Iceberg catalog, and not other catalogs. If you’re using this feature while it’s in preview, make sure to consider Microsoft’s official recommendations on using Purview with Apache Iceberg.

While Microsoft Purview attempts to combine metadata from various cloud and data platforms into a single view, it’s still in the early stages of supporting open table formats, especially Apache Iceberg. A control plane for metadata solves this need. Let’s see how.

Apache Iceberg in Azure: The need for a metadata control plane

A control plane for metadata sits horizontally across your organization’s data ecosystem, integrating with all data assets, agnostic of their storage location and the engines accessing those assets for reading and writing purposes. This control plane serves as the solution for data discovery, governance, quality, lineage, and collaboration, among other things.

This is where Atlan comes into the picture. Atlan brings all the metadata in one place, creating a metadata lake that powers the metadata platform. Whether making your organization’s data AI-ready, democratizing data consumption across the business, or enforcing privacy and governance, Atlan’s metadata control plane supports diverse end-users, use cases, and applications.

Summing up

In this article, we explored how Azure data services integrate with Apache Iceberg and saw that most of these integrations are still in their initial stages. Azure integrated with Delta Lake first and made it the default table format for data lakehouse implementations.

We also discussed the need for a metadata control plane and how Microsoft Purview manages this need but falls short, especially for open data architectures. A metadata control plane like Atlan can bring all the data enablement features, such as discovery, governance, quality, lineage, and collaboration, under a single control plane for your organization to manage and leverage.

To learn more, check out how Atlan integrates Azure data services and Apache Iceberg.

FAQs about working with Apache Iceberg in Azure

What is Apache Iceberg, and why is it important for Azure?

Apache Iceberg is an open table format designed for handling large-scale, complex data lakes. It ensures better performance and scalability by managing tables with high levels of granularity and version control. For Azure, integrating Apache Iceberg enables advanced data lakehouse architectures that benefit from flexible data governance and seamless data processing across multiple tools in the Azure ecosystem, such as Synapse Analytics and Data Factory.

How does Apache Iceberg work with Azure Synapse Analytics?

Azure Synapse supports Apache Iceberg by integrating with Spark Pools, allowing high-performance, in-memory processing of data. Iceberg tables can be stored in Azure Data Lake Storage (ADLS), and Synapse allows for direct reading and writing of these tables. By configuring Iceberg with Synapse Spark Pools, organizations can process data more efficiently using Spark’s capabilities.

What role does Azure Data Factory play in managing Apache Iceberg data?

Azure Data Factory (ADF) helps manage the movement, transformation, and orchestration of data. While ADF supports Apache Iceberg for data stored in Azure Data Lake Storage Gen 2, it refers to Iceberg as a file format, and the support is limited to copy activities between storage layers. This means that organizations can efficiently move and copy Iceberg-based data as part of their ETL processes.

Can you use Delta Lake and Apache Iceberg together in Azure?

Yes, Microsoft OneLake supports the interoperability between Delta Lake and Apache Iceberg, enabling the creation of virtual links or shortcuts to Iceberg tables. Although OneLake’s default format is Delta Lake, it can accommodate Iceberg tables, allowing flexible access to data across different storage systems without requiring data copies.

What is the role of Microsoft Purview in managing Iceberg tables?

Microsoft Purview supports Apache Iceberg for tasks like data discovery, profiling, and quality assessment. However, support for Iceberg assets in the Purview Data Map is still lacking, so Iceberg tables don’t appear in the data asset view and full visibility of Iceberg assets is not yet available. You can still configure Iceberg assets in the Unified Catalog and run data quality rules and scans at the table and column level, but the need for a comprehensive metadata control plane is evident.

Why is a metadata control plane necessary for Apache Iceberg in Azure?

Apache Iceberg, as a table format, relies heavily on metadata management. While tools like Microsoft Purview provide some degree of support, a unified metadata control plane, such as Atlan, is essential for comprehensive data management. Atlan brings all metadata—whether from Azure, Iceberg, or other sources—into one platform. This enables seamless data governance, discovery, quality control, and collaboration across an organization.

How does Atlan help manage Apache Iceberg in Azure?

Atlan acts as a metadata control plane, integrating with Azure’s data ecosystem, including Apache Iceberg. It helps consolidate metadata from different tools and platforms, ensuring data governance, discovery, and collaboration across the organization. By democratizing data through its unified platform, Atlan enables businesses to unlock the full potential of their data assets while ensuring proper compliance and quality.

Working with Apache Iceberg in Azure: Related reads

- Apache Iceberg: All You Need to Know About This Open Table Format in 2025

- Apache Iceberg Data Catalog: What Are Your Options in 2025?

- Apache Iceberg Tables Data Governance: Here Are Your Options in 2025

- Apache Iceberg Alternatives: What Are Your Options for Lakehouse Architectures?

- Apache Parquet vs. Apache Iceberg: Understand Key Differences & Explore How They Work Together

- Apache Hudi vs. Apache Iceberg: 2025 Evaluation Guide on These Two Popular Open Table Formats

- Apache Iceberg vs. Delta Lake: A Practical Guide to Data Lakehouse Architecture

- Working with Apache Iceberg on Databricks: A Complete Guide for 2025

- Working with Apache Iceberg on AWS: A Complete Guide [2025]

- Working with Apache Iceberg and AWS Glue: A Complete Guide [2025]

- Polaris Catalog from Snowflake: Everything We Know So Far

- Polaris Catalog + Atlan: Better Together

- Snowflake Horizon for Data Governance

- What does Atlan crawl from Snowflake?

- Snowflake Cortex for AI & ML Analytics: Here’s Everything We Know So Far

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- Snowflake Data Catalog: What, Why & How to Evaluate

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- What Is a Data Catalog? & Do You Need One?
