Modern Data Stack Explained: Past, Present & the Future


A modern data stack is a cloud-native ecosystem of tools designed to streamline data integration, storage, transformation, and analysis. It leverages scalable technologies such as data warehouses, pipelines, and BI platforms to enable real-time insights and decision-making.


Unlike traditional data systems, modern stacks prioritize automation, flexibility, and self-service analytics, allowing businesses to efficiently handle diverse data types and volumes.

This architecture is designed to support secure, compliant, and cost-effective data management, as well as advanced analytics and machine learning applications.

The modern data stack refers to a collection of cloud-based tools and technologies that work together to enable data processing, analysis, and insight generation in a scalable and efficient manner. The stack typically consists of several layers, each addressing a specific aspect of data management, from ingestion and storage to transformation and visualization.

The modern data stack has attracted a great deal of innovation and attention in recent years, and it can be challenging to sift through the avalanche of information surrounding it. The accelerated pace of innovation and heightened enthusiasm make it even harder to objectively evaluate the benefits of individual tools and technologies. The goal of this article is to help you with that.

Here, you will find the definition of the modern data stack, the features and common capabilities of modern data stack tools, and actionable advice on how to approach tooling to meet the requirements of your data team.

Table of contents

- What is modern data stack?

- Modern data stack and modern data platform

- Key characteristics

- What led to the conception of the modern data stack?

- Components of the modern data platform

- How to get started with modern data stack?

- Future of Modern Data Stack

- How organizations are making the most of their data using Atlan

- FAQs about Modern Data Stack

- Modern Data Stack: Related reads

What is modern data stack?

The birth of cloud data warehouses with massively parallel processing (MPP) capabilities and first-class SQL support made processing large volumes of data faster and cheaper. This led to the development of many cloud-native data tools that are low-code, easy to integrate, scalable, and economical. These tools and technologies are collectively referred to as the Modern Data Stack (MDS).

Modern data stack and modern data platform: Which is what?

Even though the terms data platform and data stack are sometimes used interchangeably, a data platform is the set of components through which data flows, while a data stack is the set of tools that serve these components.

Modern data stack tools share a few distinct characteristics. The next section describes their common characteristics in brief.

What are the key characteristics of modern data stack?

- Cloud-first

- Built around cloud data warehouse/lake

- Focus on solving one problem

- Offered as SaaS or open-core

- Low entry barrier

- Actively supported by communities

1. Cloud-first

Modern public cloud vendors have enabled MDS tools to become highly elastic and scalable. This makes it easy for organizations to integrate them into their existing cloud infrastructure.

2. Built around cloud data warehouse/lake

Modern data stack tools recognize that a central cloud data warehouse/lake is what fuels data analytics. So they are designed to integrate seamlessly with the prominent cloud data warehouses and lakehouses (like Redshift, BigQuery, Snowflake, Databricks, and so on) and take full advantage of their features.

3. Focus on solving one specific problem

The modern data stack is a patchwork quilt of tools connected by the different stages of the data pipeline. Each tool focuses on one specific aspect of data processing/management. This enables modern data stack tools to fit into a variety of architectures and plug into an existing stack with few or no changes.

4. Offered as SaaS or open-core

Modern data stack tools are mostly offered as SaaS (Software as a Service). In some cases, the core components are open-source and come with paid add-on features like end-to-end hosting and professional support.

5. Low entry barrier

Modern data stack tools are typically offered with pay-as-you-go or usage-based pricing. Data practitioners can explore new tools, their features, and their utility before making big commitments, which saves both money and time.

Also, MDS tools are designed to be low-code or even no-code. Setup can often be completed in a few hours and does not require deep technical expertise or large time investments.

6. Actively supported by communities

Modern data stack solution providers invest considerable time and effort in community building. There are Slack groups, meetups, and conferences that actively support tool users and data practitioners, fostering supportive and creative ecosystems around these tools.

According to the article “Five Key Trends in AI and Data Science for 2024” by MIT Sloan Management Review, a 2023 AWS survey found that although 93% of organizations acknowledged the critical role of data strategy in deriving value from generative AI, 57% had not made changes to their data strategies.

What led to the conception of the modern data stack?

- The emergence of Hadoop and the public cloud

- The launch of Amazon Redshift

- A growing need for better tooling

The emergence of Hadoop and the public cloud

Before Hadoop, scaling data infrastructure mostly meant scaling vertically (buying bigger machines), so data processing demanded a large upfront investment. Hadoop made it possible to scale storage and compute horizontally on cheap commodity hardware. But even then, the user experience was clunky (MapReduce), and only large organizations could invest in the specialized skills required to make it work well. When public clouds became inexpensive and accessible, even smaller companies could afford storage and compute on the cloud.

The launch of Amazon Redshift

Meanwhile, the microservices architecture had popularized NoSQL (non-relational) databases. When loaded into a Hadoop cluster for analytics, this non-relational data was hard to process using SQL, which forced data teams to use programming languages like Java, Scala, and Python instead. Organizations came to depend on expensive engineering resources and highly specialized skills, and data democracy took a hit.

Amazon Redshift changed all that. Introduced in 2012, Redshift is widely regarded as the first cloud data warehouse. Not only did it allow large volumes of data to be stored on horizontally scalable infrastructure, but it also made it possible to query that data using plain SQL.

Growing need for better tooling

In the following years, data warehouse solution providers further improved the architecture, separating storage from compute and offering better price points and scalability. But transforming, modeling, cleaning, and converting data into actionable insights remained cumbersome and error-prone.

Fast-growing businesses became unhappy with what they were getting in return for their large infrastructure investments. Their data had grown in volume, variety, and complexity, but the ecosystem still did not have the tools that could manage it well.

Privacy had also become a serious concern, and governments across the globe moved to protect their citizens’ personal data in increasingly digitized information systems. This led to stringent regulatory frameworks such as the EU’s GDPR and California’s CCPA.

As the basic building blocks of the analytical data platform matured and stabilized, better data management and observability became critically important. The ground was fertile for the development of a better set of tools that could address these challenges. Investors and entrepreneurs became interested, and the modern data stack became the focus of attention and innovation.

What are the fundamental components of the modern data platform?

To understand the benefits of specific MDS tools and make good tooling choices, it is useful to first understand the individual components of the data platform and the common capabilities of the tools that serve each of them.

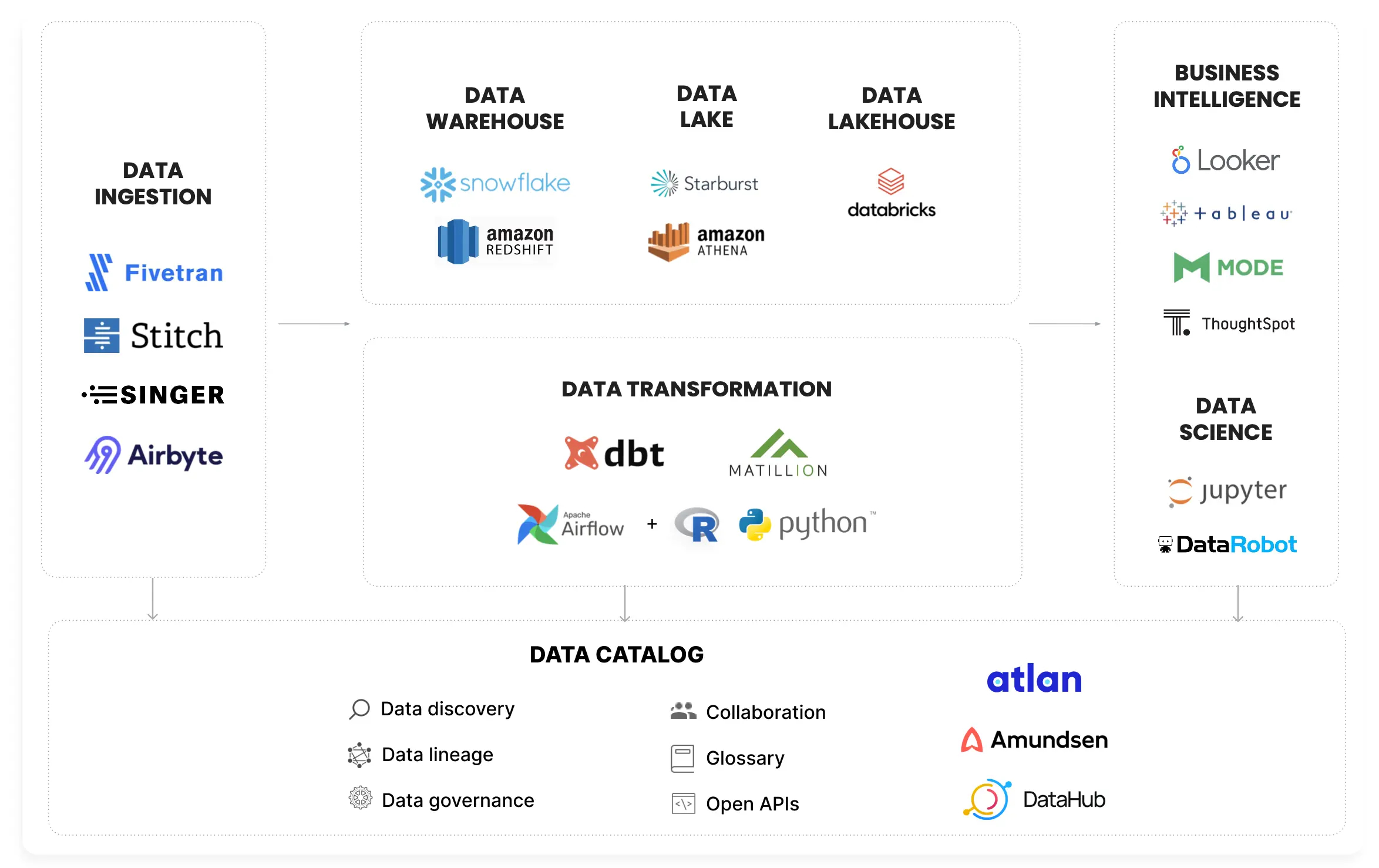

The basic components of a data platform (in the direction of data flow) are:

- Data Collection and Tracking

- Data Ingestion

- Data Transformation

- Data Storage (Data warehouse/lake)

- Metrics layer (Headless BI)

- BI Tools

- Reverse ETL

- Orchestration (Workflow engine)

- Data Management, Quality, and Governance

Tools in the modern data stack (image by Atlan).

Data Collection and Tracking

This includes the process of collecting behavioral data from client applications (mobile, web, IoT devices) and transactional data from backend services.

The MDS tools in this area focus on reducing quality issues that arise due to poorly designed, incorrectly implemented, missed, or delayed tracking of data.

Common capabilities of MDS data collection and tracking tools

- Interface for event schema design

- Workflow for collaboration and peer review

- Integration of event schema with the rest of the stack

- Auto-generation of tracking SDKs from event schemas

- Validation of events against schemas
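The schema-validation capability above can be illustrated with a minimal Python sketch. It assumes a hypothetical tracking plan expressed as a plain dict of field names to Python types (real tools typically use richer formats such as JSON Schema), and checks an incoming event for missing, mistyped, and undeclared fields. `CHECKOUT_COMPLETED_SCHEMA` and `validate_event` are illustrative names, not any product's API.

```python
from typing import Any

# Hypothetical tracking-plan entry: field name -> expected Python type.
CHECKOUT_COMPLETED_SCHEMA: dict[str, type] = {
    "event": str,
    "user_id": str,
    "order_id": str,
    "revenue": float,
}


def validate_event(event: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return every schema violation found in `event` (empty list if valid)."""
    errors = []
    for field, expected in schema.items():
        if field not in event:
            errors.append(f"missing field: {field}")
        elif not isinstance(event[field], expected):
            errors.append(f"{field}: expected {expected.__name__}, "
                          f"got {type(event[field]).__name__}")
    # Undeclared fields often signal ad-hoc tracking that bypassed review.
    for field in sorted(event.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors


good = {"event": "checkout_completed", "user_id": "u1", "order_id": "o9", "revenue": 42.0}
bad = {"event": "checkout_completed", "user_id": "u1", "revenue": "42", "coupon": "X"}
print(validate_event(good, CHECKOUT_COMPLETED_SCHEMA))  # → []
print(validate_event(bad, CHECKOUT_COMPLETED_SCHEMA))
# → ['missing field: order_id', 'revenue: expected float, got str', 'unexpected field: coupon']
```

Collecting all errors, rather than stopping at the first, makes it easier to report every problem with a malformed event in one place.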

Data Ingestion

Ingestion is the mechanism for extracting and loading raw data from its source of truth to a central data warehouse/lake.

A modern data ecosystem has pipelines bringing raw data from hundreds of first- and third-party sources into the warehouse, and new ingestion pipelines must constantly be built to meet growing business demands.

MDS data ingestion tools aim to reduce boilerplate, improve productivity and ensure data quality.

Common capabilities of MDS data ingestion tools

- Configurable framework

- Plug and play connectors for well-known data formats and sources

- Plug and play integrations for popular storage destinations

- Quality checks against ingested data

- Monitoring and alerting of ingestion pipelines
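A minimal sketch of a configurable ingestion step with a built-in quality check, using Python's standard-library `sqlite3` as a stand-in for a cloud warehouse. `IngestionConfig`, `ingest`, and `extract_orders` are hypothetical names, not any vendor's API; a production tool would add incremental extraction, schema evolution, and real alerting.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class IngestionConfig:
    """Hypothetical declarative config for one source-to-warehouse pipeline."""
    source_name: str
    destination_table: str
    columns: list[str]
    not_null: list[str] = field(default_factory=list)  # required columns


def ingest(conn: sqlite3.Connection, config: IngestionConfig,
           extract: Callable[[], Iterable[dict]]) -> int:
    """Load extracted records, then run quality checks; return rows loaded."""
    # Identifiers come from trusted config, not user input, so f-strings are
    # acceptable here; row values still go through "?" placeholders.
    table, cols = config.destination_table, ", ".join(config.columns)
    conn.execute(f"CREATE TABLE IF NOT EXISTS {table} ({cols})")
    rows = [tuple(record.get(c) for c in config.columns) for record in extract()]
    placeholders = ", ".join("?" for _ in config.columns)
    conn.executemany(f"INSERT INTO {table} ({cols}) VALUES ({placeholders})", rows)
    # Quality check: required columns must not contain NULLs.
    for col in config.not_null:
        (nulls,) = conn.execute(
            f"SELECT COUNT(*) FROM {table} WHERE {col} IS NULL").fetchone()
        if nulls:
            conn.rollback()  # discard this batch so bad data never lands
            # A real pipeline would also alert (chat, pager) at this point.
            raise ValueError(f"{config.source_name}: {nulls} NULL value(s) in {col}")
    conn.commit()
    return len(rows)


def extract_orders() -> list[dict]:
    # Stand-in for a real connector (REST API, database replica, file drop).
    return [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 25.5}]


conn = sqlite3.connect(":memory:")
config = IngestionConfig("orders_api", "raw_orders", ["id", "amount"], not_null=["id"])
print(ingest(conn, config, extract_orders))  # → 2
```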

Additional reads: Data ingestion 101

Data Transformation

Transformation is the process of cleaning, normalizing, filtering, joining, modeling, and summarizing raw data to make it easier to understand and query. In the ELT (extract, load, transform) architecture, transformation happens after the raw data has been loaded into the warehouse.

MDS data transformation tools focus on reducing boilerplate, providing frameworks that enable consistent data model design, and promoting code reuse and testability.

Common capabilities of MDS data transformation tools

- Strong support for software engineering best practices like version control, testing, CI/CD, and code reusability

- Support for common transformation patterns such as idempotency, snapshots, and incrementality

- Self-documentation

- Integration with other tools in the data stack
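Incrementality and idempotency can be shown together in a small sketch, again using `sqlite3` as a stand-in warehouse. The hypothetical `daily_revenue` model recomputes only the window starting at `since_date`, and deletes that window before re-inserting it in one transaction, so running the job twice produces the same table. Table names, column names, and the `status = 'completed'` rule are assumptions for illustration.

```python
import sqlite3


def transform_daily_revenue(conn: sqlite3.Connection, since_date: str) -> None:
    """Rebuild the daily_revenue model for every order_date >= since_date.

    Incremental: only the recent window is recomputed, not full history.
    Idempotent: the window is deleted and re-inserted in one transaction, so
    re-running with the same since_date yields the same table, never duplicates.
    """
    conn.execute(
        """CREATE TABLE IF NOT EXISTS daily_revenue (
               order_date TEXT PRIMARY KEY,
               revenue REAL NOT NULL,
               orders INTEGER NOT NULL)"""
    )
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute("DELETE FROM daily_revenue WHERE order_date >= ?", (since_date,))
        conn.execute(
            """INSERT INTO daily_revenue (order_date, revenue, orders)
               SELECT order_date, SUM(amount), COUNT(*)
               FROM raw_orders
               WHERE order_date >= ? AND status = 'completed'
               GROUP BY order_date""",
            (since_date,),
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_orders (id INTEGER, order_date TEXT, amount REAL, status TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?, ?)",
    [(1, "2024-01-01", 10.0, "completed"),
     (2, "2024-01-01", 5.0, "cancelled"),
     (3, "2024-01-02", 7.5, "completed")],
)
transform_daily_revenue(conn, "2024-01-01")
transform_daily_revenue(conn, "2024-01-01")  # re-run: same result, no duplicates
print(conn.execute("SELECT * FROM daily_revenue ORDER BY order_date").fetchall())
# → [('2024-01-01', 10.0, 1), ('2024-01-02', 7.5, 1)]
```

Delete-and-insert over a window is one common way to get idempotent incremental loads; warehouses that support `MERGE` offer another.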

Additional reads: Data transformation 101 | Data transformations can be enabled by cloud

Data storage (Data Warehouse/lake)

The data warehouse/lake is at the heart of modern data platforms. It acts as the historical record of truth for all behavioral and transactional data in the organization.

MDS data storage systems focus on providing serverless auto-scaling, fast query performance, economies of scale, better data governance, and high developer productivity.

Common capabilities of MDS data warehouses/lakes

- Auto-scaling during heavy loads

- Support for open data formats such as Parquet, ORC, and Avro

- Strong security and access control

- Data governance features such as managing personally identifiable information

- Support for both batch and real-time data ingestion

- Rich information schema
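Warehouses expose table and column metadata through an information schema (for example, `INFORMATION_SCHEMA.COLUMNS` in many SQL warehouses), and governance features such as PII management often start from that metadata. The sketch below uses SQLite's equivalents, `sqlite_master` and `PRAGMA table_info`, to flag likely PII columns. The `PII_RULES` name-matching patterns are hypothetical and deliberately simple; production classifiers usually also sample column values.

```python
import re
import sqlite3

# Hypothetical name-based rules (an assumption; real tools are more thorough).
PII_RULES = {
    "email": re.compile(r"e[-_]?mail", re.IGNORECASE),
    "phone": re.compile(r"phone|mobile", re.IGNORECASE),
    "name": re.compile(r"(first|last|full)_?name", re.IGNORECASE),
}


def find_pii_columns(conn: sqlite3.Connection) -> dict[str, list[tuple[str, str]]]:
    """Scan table metadata and return {table: [(column, pii_tag), ...]}."""
    findings: dict[str, list[tuple[str, str]]] = {}
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    for table in tables:
        # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
        for row in conn.execute(f"PRAGMA table_info({table})"):
            column = row[1]
            for tag, pattern in PII_RULES.items():
                if pattern.search(column):
                    findings.setdefault(table, []).append((column, tag))
                    break  # one tag per column is enough for this sketch
    return findings


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, full_name TEXT, email TEXT, plan TEXT)")
conn.execute("CREATE TABLE orders (id INTEGER, customer_id INTEGER, amount REAL)")
print(find_pii_columns(conn))
# → {'customers': [('full_name', 'name'), ('email', 'email')]}
```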

Additional reads: Data warehouse 101 | Data lake 101 | Data warehouse vs data lake vs data lakehouse

Metrics Layer (Headless BI)

The metrics layer (headless BI) sits between data models and BI tools, allowing data teams to declaratively define metrics across different dimensions. It provides an API that converts metric computation requests into SQL queries and runs them against the data warehouse.

The metrics layer helps to achieve consistent reporting, especially in large organizations where metrics definitions and computation logic tend to diverge across different departments.

Common capabilities of MDS Metrics (Headless BI) tools

- Declarative definitions of metrics

- Version control of metrics definitions

- API for querying metrics

- Integration with popular BI tools

- Performance optimizations for low latency
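To illustrate how a metrics layer turns a metric request into SQL, here is a minimal Python sketch. The `Metric` definition format and the `compile_metric` function are hypothetical and do not reflect the syntax of any particular headless BI tool; the point is that the aggregation and filters live in one declarative definition, and every consumer gets SQL generated from it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """Hypothetical declarative metric definition."""
    name: str
    table: str
    expression: str                  # aggregate SQL, e.g. "SUM(amount)"
    filters: tuple[str, ...] = ()    # predicates that always apply to this metric


def compile_metric(metric: Metric, dimensions: list[str]) -> str:
    """Translate a request for `metric` grouped by `dimensions` into SQL."""
    columns = [*dimensions, f"{metric.expression} AS {metric.name}"]
    sql = f"SELECT {', '.join(columns)} FROM {metric.table}"
    if metric.filters:
        sql += " WHERE " + " AND ".join(metric.filters)
    if dimensions:
        sql += " GROUP BY " + ", ".join(dimensions)
    return sql


REVENUE = Metric("revenue", "orders", "SUM(amount)", ("status = 'completed'",))
print(compile_metric(REVENUE, ["region"]))
# → SELECT region, SUM(amount) AS revenue FROM orders WHERE status = 'completed' GROUP BY region
```

Because the `status = 'completed'` filter is part of the definition, a finance dashboard and a marketing report asking for `revenue` by different dimensions cannot silently disagree on what counts as revenue.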

BI tools

BI tools are analytical, reporting, and dashboarding tools used by data consumers to understand data and support business decisions in an organization.

MDS BI tools focus on enabling data democracy by making it easy for anyone in the organization to quickly analyze data and build feature-rich reports.

Common capabilities of MDS BI tools

- Low or no code

- Data visualizations for specific use cases such as geospatial data

- Built-in metrics definition layer

- Integration with other tools in the data stack

- Embedded collaboration and documentation features

Reverse ETL

Reverse ETL is the process of moving transformed data from the data warehouse to downstream systems like operations, finance, marketing, CRM, sales, and even back into the product, to facilitate operational decision making.

Reverse ETL tools are similar to MDS data ingestion tools except that the direction of data flow is reversed (from the data warehouse to downstream systems).

Common capabilities of Reverse ETL tools

- Configurable framework

- Plug and play connectors for well-known data formats and destinations

- Plug and play integrations for popular data sources

- Quality checks against egressed data

- Monitoring and alerting of data pipelines
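A reverse ETL sync can be sketched as: read a modeled table from the warehouse, compare it with what was sent last time, and push only the differences. In this minimal sketch `sqlite3` stands in for the warehouse, and `push` is a hypothetical callable standing in for a destination API such as a CRM; the `customer_scores` table and its columns are assumptions.

```python
import sqlite3
from typing import Callable

Row = tuple[float, str]  # (lifetime_value, segment)


def sync_customer_scores(conn: sqlite3.Connection,
                         last_synced: dict[int, Row],
                         push: Callable[[dict], None]) -> dict[int, Row]:
    """Push new or changed rows of a warehouse model to a downstream system.

    Returns the current snapshot, to be passed in as `last_synced` next time.
    """
    current: dict[int, Row] = {
        customer_id: (ltv, segment)
        for customer_id, ltv, segment in conn.execute(
            "SELECT customer_id, lifetime_value, segment FROM customer_scores")
    }
    for customer_id in sorted(current):
        if last_synced.get(customer_id) != current[customer_id]:
            ltv, segment = current[customer_id]
            push({"customer_id": customer_id, "lifetime_value": ltv, "segment": segment})
    return current


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer_scores "
             "(customer_id INTEGER PRIMARY KEY, lifetime_value REAL, segment TEXT)")
conn.executemany("INSERT INTO customer_scores VALUES (?, ?, ?)",
                 [(1, 120.0, "gold"), (2, 40.0, "silver")])
sent: list[dict] = []  # stands in for the destination system
state = sync_customer_scores(conn, {}, sent.append)     # first run pushes both rows
conn.execute("UPDATE customer_scores SET segment = 'gold' WHERE customer_id = 2")
state = sync_customer_scores(conn, state, sent.append)  # only customer 2 changed
print(len(sent))  # → 3
```

This sketch neither handles rows deleted from the model nor persists its state between processes; real reverse ETL tools track sync state durably and can also propagate deletes.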

Orchestration (Workflow engine)

Orchestration systems are required to run data pipelines on schedule, request and relinquish infrastructure resources on demand, react to failures, and manage dependencies across data pipelines from a common interface.

MDS orchestration tools focus on providing end-to-end management of workflow schedules, extensive support for complex workflow dependencies, and seamless integration with modern infrastructure components like Kubernetes.

Common capabilities of MDS orchestration tools

- Declarative definition of workflows

- Complex scheduling

- Backfills, reruns, and ad-hoc runs

- Integration with other tools in the data stack

- Modular and extensible design

- Plugins for popular cloud and infrastructure services
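The core of a declarative workflow engine is a dependency graph executed in topological order. The minimal sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to run hypothetical tasks in dependency order with simple retries. Task names and the `run_workflow` helper are illustrative; real orchestrators also run independent tasks in parallel, persist state, schedule runs, and support backfills, none of which is shown here.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical declarative workflow: each task lists the tasks it depends on.
WORKFLOW: dict[str, set[str]] = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "transform_daily_revenue": {"ingest_orders"},
    "transform_customer_scores": {"ingest_orders", "ingest_customers"},
    "sync_crm": {"transform_customer_scores"},
}


def run_workflow(dag: dict[str, set[str]],
                 tasks: dict[str, Callable[[], None]],
                 max_retries: int = 2) -> list[str]:
    """Run tasks one at a time in dependency order, retrying failed tasks."""
    completed = []
    for name in TopologicalSorter(dag).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # stop here: downstream tasks must not run on bad inputs
        completed.append(name)
    return completed


attempts = {"ingest_orders": 0}


def flaky_ingest_orders() -> None:
    # Simulates a transient failure on the first attempt only.
    attempts["ingest_orders"] += 1
    if attempts["ingest_orders"] == 1:
        raise ConnectionError("source temporarily unavailable")


tasks = {name: (lambda: None) for name in WORKFLOW}
tasks["ingest_orders"] = flaky_ingest_orders
order = run_workflow(WORKFLOW, tasks)
print(order)
```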

Additional reads: Data orchestration 101 | Open source data orchestration tools

Data Management, Quality, and Governance

Data governance is an umbrella term that covers managing data quality, lineage, discovery, cataloging, information security, and data privacy by effectively collecting and utilizing metadata.

MDS data governance tools focus on enabling a high level of transparency, collaboration, and data democracy.

Common capabilities of MDS Data governance tools

- Integration with other tools in the data stack

- Search and discovery of data assets across the organization

- Observation of data in motion and at rest to ensure data quality

- Visualization of data lineage

- Crowdsourcing of data documentation

- Collaboration and sharing

- Monitoring and alerting of data security and privacy non-compliance
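Lineage is usually stored as a graph of assets, and a common use is impact analysis: before changing a table, find everything downstream of it. The minimal breadth-first sketch below assumes a hypothetical `LINEAGE` map from each asset to the assets that read directly from it; asset names are made up for illustration.

```python
from collections import deque

# Hypothetical lineage map: asset -> assets that read directly from it.
LINEAGE: dict[str, list[str]] = {
    "raw.orders": ["analytics.daily_revenue", "analytics.customer_scores"],
    "raw.customers": ["analytics.customer_scores"],
    "analytics.daily_revenue": ["dashboard.exec_kpis"],
    "analytics.customer_scores": ["crm.customer_sync"],
}


def downstream(asset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Return all assets directly or indirectly fed by `asset`, in BFS order."""
    seen, queue, impacted = {asset}, deque([asset]), []
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:  # guards against diamonds and cycles
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


print(downstream("raw.orders", LINEAGE))
# → ['analytics.daily_revenue', 'analytics.customer_scores', 'dashboard.exec_kpis', 'crm.customer_sync']
```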

Additional reads: Data governance for the MDS | Data catalog for the MDS | Metadata management 101 | Modernize Data Management

Data catalog and governance across the Modern Data Stack

How to get started with modern data stack?

Picking the right MDS tools for your business requirements can be a daunting task, given the large number of tooling choices available in the ecosystem.

This section highlights a few important considerations to help you make the right choice for your data team’s needs.

How to evaluate a tool in the modern data stack

- Do you really need this tool?

- Does the tool seamlessly integrate with your existing infrastructure?

- Do you have the necessary tech skills?

- How much does it cost?

- What is the effort required for setup/onboarding?

- What are the hosting options?

- Is the tool scalable?

- How will the tool affect your current cloud infrastructure?

- Do you have easy access to raw data?

- What kind of data privacy and access controls will be necessary?

- Are there open APIs for extensibility?

- Is the tool interface easy-to-use and intuitive?

- Does the tool work well with your project management and collaboration platforms?

- Is the source code open or proprietary?

- Is the tool well supported by the provider and the community?

- Is the solution provider company adequately funded?

- What is the tool footprint?

Now, let’s look at each question in a bit more detail.

Do you really need this tool?

Even though most MDS tools are economical and easy to set up, it is still worth asking yourself whether you really need the tool. If your data footprint is negligible and your team is small, you may be better off without the additional tooling overhead.

Does the tool seamlessly integrate with your existing infrastructure?

Ideally, you should pick a tool that sits well with your existing infrastructure and data architecture. If your organization already has a mature setup, the cost of tool migration is likely to be significant. Buying a tool that fits your existing infrastructure or at least being mindful of the tradeoffs will save your data team considerable time and effort.

Do you have the necessary tech skills?

Does your team have the skills to manage, maintain, or extend the tool in the long run? Even with no-code MDS tools, some coding skills and effort may be necessary for configuration and fine-tuning.

How much does it cost?

Explore the pricing tiers before making a choice. It makes good sense to start at the lowest tier that includes the most essential features and scale up as required.

Factor in infrastructure/cloud costs, as they may not always be included in the pricing.

Look for hidden costs. Always involve your legal and financial teams in all your pricing negotiations.
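Comparing tiers on list price alone can be misleading when infrastructure costs are billed separately. The sketch below picks the cheapest tier that covers every essential feature, adding an estimated infrastructure cost for tiers that do not bundle it. The `Tier` type, `pick_tier` helper, and all tier names, features, and prices are made up for illustration and do not describe any real vendor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    monthly_price: float
    features: frozenset[str]
    includes_infra: bool  # True if warehouse/compute costs are bundled in the price


def pick_tier(tiers: list[Tier], essential: set[str],
              est_monthly_infra: float) -> Optional[tuple[str, float]]:
    """Return (tier name, total monthly cost) of the cheapest tier covering all
    essential features, counting infrastructure cost when it is not bundled."""
    def total_cost(tier: Tier) -> float:
        return tier.monthly_price + (0.0 if tier.includes_infra else est_monthly_infra)

    fitting = [t for t in tiers if essential <= t.features]
    if not fitting:
        return None
    best = min(fitting, key=total_cost)
    return best.name, total_cost(best)


# Illustrative prices only; not taken from any real vendor.
TIERS = [
    Tier("starter", 100.0, frozenset({"connectors"}), includes_infra=False),
    Tier("team", 500.0, frozenset({"connectors", "sso"}), includes_infra=False),
    Tier("business", 800.0, frozenset({"connectors", "sso", "audit_logs"}), includes_infra=True),
]
print(pick_tier(TIERS, {"connectors", "sso"}, est_monthly_infra=400.0))  # → ('business', 800.0)
print(pick_tier(TIERS, {"connectors", "sso"}, est_monthly_infra=200.0))  # → ('team', 700.0)
```

With an estimated $400 per month of infrastructure, the pricier bundled tier is actually cheaper overall; at $200 the unbundled tier wins.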

What is the effort required for setup and onboarding?

Evaluate the effort required for installation and onboarding. Factor in migration effort, user training, and dependencies on other teams, if any.

What are the hosting options?

Your infrastructure and security teams may mandate that the tool be hosted on-prem or within your own cloud. Check whether this is supported and whether it requires additional cost or effort.

Is the tool scalable?

Verify whether the tool can scale to meet your growth needs (number of users, storage, compute, and so on) over the next 3 to 5 years.

How will the tool affect your current cloud infrastructure?

If the tool is set up to use your existing cloud resources, it may impact their performance and availability. You will need to take this into account when planning your infrastructure requirements.

Do you have easy access to the raw data?

Where does your raw data live? Does the tool keep it in your own cloud or in its own internal storage? If the latter, will you have easy access to it? Also, will you be able to easily import the raw data into your own warehouse in standard file formats or via an API? These are important considerations, especially if your organization handles sensitive data or needs to comply with stringent data laws.

What kind of data privacy and access controls will be necessary?

Does the tool support your SSO provider or require separate credentials?

Is data encrypted in motion and at rest?

What access control policies does the tool support and how does it comply with regulatory frameworks such as GDPR?

Are there open APIs for extensibility?

It’s worth checking if the tool has an API to help you extend its capabilities as and when required.

Is the tool interface easy to use and intuitive?

A tool with a poor user experience will not be easily adopted. Be sure to identify the UX expectations of the intended audience and whether the tool can meet them. Also, pay attention to the developer experience to minimize resistance to adoption and avoid lost productivity.

Does the tool work well with your project management and collaboration platforms?

The tool you choose should integrate well with project management and collaboration platforms like Jira, Confluence, Slack, and email to support globally distributed teams in their day-to-day tasks.

Is the source code open or proprietary?

In both cases, the software must be actively maintained with regular releases of new versions, upgrades, and bug fixes.

But if the tool is open-source, it must have sufficiently permissive licensing and be written in a language that your team can support with their current expertise.

Is the tool well supported by the provider and the community?

Make sure that there is extensive documentation and reliable community support for the tool of your choice. Also, make sure that the tool provider can offer quality technical support when necessary.

Is the solution provider company adequately funded?

New tools emerge every day in the modern data ecosystem, so it is important to know whether the company behind the tool you choose has strong leadership and adequate funding. This is especially important when you are interested in a tool that meets your requirements but is not yet widely adopted in the community.

What is the tool footprint?

If you pick a tool with a large footprint, you will need to do thorough research to make sure that you do not have to replace it too soon. Tools with larger footprints are harder to replace because of their bigger scope in the data platform.

Future of Modern Data Stack

Modern data stack tools have significantly improved the productivity of data practitioners. As a result, teams are ready and willing to tackle more complex problems in data. New practices like Data Mesh, Headless BI, Stream Processing, and Data Operationalization have become fertile ground for further innovation.

At the same time, emerging MDS tools are constantly pushing the boundaries of how data is stored, processed, analyzed, and managed. It will be interesting to see how the modern data stack will evolve further to solve the next level of complexity in data.

How organizations are making the most of their data using Atlan

The recently published Forrester Wave report compared all the major enterprise data catalogs and positioned Atlan as the market leader ahead of all others. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Enabling an AI- and automation-first data ecosystem

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as their core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time spent by data engineers and analysts on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data—provided it is discoverable and trustworthy, or in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve their compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information in order to propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.

Book your personalized demo today to find out how Atlan can help your organization in establishing and scaling data governance programs.

FAQs about Modern Data Stack

1. What is a modern data stack?

A modern data stack refers to a collection of cloud-based tools and technologies designed to streamline data processing, analysis, and insight generation. It integrates various components like data warehouses, pipelines, and BI tools, offering scalability, efficiency, and real-time processing capabilities.

2. How does a modern data stack work?

The modern data stack works by connecting disparate data sources through cloud-native pipelines. Data is ingested, transformed, and stored in data warehouses or lakes, making it accessible for analytics and visualization via BI tools. This setup enables timely insights and scalable performance.

3. What are the key components of a modern data stack?

Key components include data ingestion tools, data warehouses, data lakes, transformation tools (ETL/ELT), and business intelligence (BI) tools. These components work together to manage data efficiently, providing real-time analytics and insights.

4. How does a modern data stack improve analytics?

By automating data integration and processing, the modern data stack accelerates analytics workflows. It provides real-time insights, enhances data accuracy, and ensures that business decisions are based on up-to-date, actionable information.

5. What are the advantages of using a cloud-based data stack?

Cloud-based data stacks offer scalability, cost-efficiency, and flexibility. They enable real-time data processing, reduce infrastructure management overhead, and provide pay-as-you-go pricing models, making them ideal for businesses of all sizes.

6. How do modern data stacks handle data security and compliance?

Modern data stacks incorporate robust security measures, including encryption, access controls, and support for compliance with regulations like GDPR and HIPAA. These features help ensure data privacy and protect sensitive information throughout the data lifecycle.

Modern data stack: Related reads

- Modern data culture: The open secret to great data teams

- Data Catalog: Does Your Business Really Need One?

- What is modern data stack: History, components, platforms, and the future

- What is a modern data platform: Components, capabilities, and tools

- Modern data team 101: A roster of diverse talent

- Modern data catalogs: 5 essential features & evaluation guide

- Data ingestion 101: Using Big Data starts here

- What is data transformation: Definition, processes, and use cases

- What Is the Difference between Data Warehouse, Data Lake and a Data Lakehouse

- Data Governance Tools: Importance, Key Capabilities, Trends, and Deployment Options

Photo by Francesco Ungaro on Pexels
