Snowflake on AWS: How to Deploy in 2025 [Practical Guide]


Snowflake is a Data Cloud platform that supports modern data architectures like warehouses, lakes, and lakehouses across your preferred cloud provider.

AWS, one of the earliest public cloud platforms, launched services such as Amazon SQS and Amazon S3 in the mid-2000s and now offers a wide range of services that pair well with Snowflake.


This article explores how to deploy and run Snowflake on AWS, covering:

- Region availability and pricing

- Deploying Snowflake on AWS

- Loading and unloading data in Amazon S3

- Using external AWS Lambda functions

- Using the Snowflake Business Critical Edition on AWS

- Common FAQs about deploying Snowflake on AWS


Table of contents #

- Is Snowflake available on AWS?

- How can you deploy Snowflake on AWS?

- Can you use external Lambda functions with Snowflake?

- How can you use the Snowflake Business Critical Edition on AWS?

- Summary

- Snowflake on AWS: Frequently asked questions (FAQs)

- Snowflake on AWS: Related reads

Is Snowflake available on AWS? #

Snowflake started as an AWS customer and competitor. Today, it also runs on Microsoft Azure and Google Cloud Platform. As of May 2025, Snowflake is available in 24 AWS regions, including a few SnowGov regions in the US.

If you already use AWS and store data in S3 buckets, you can bulk load that data directly into Snowflake using your existing buckets and folder paths.

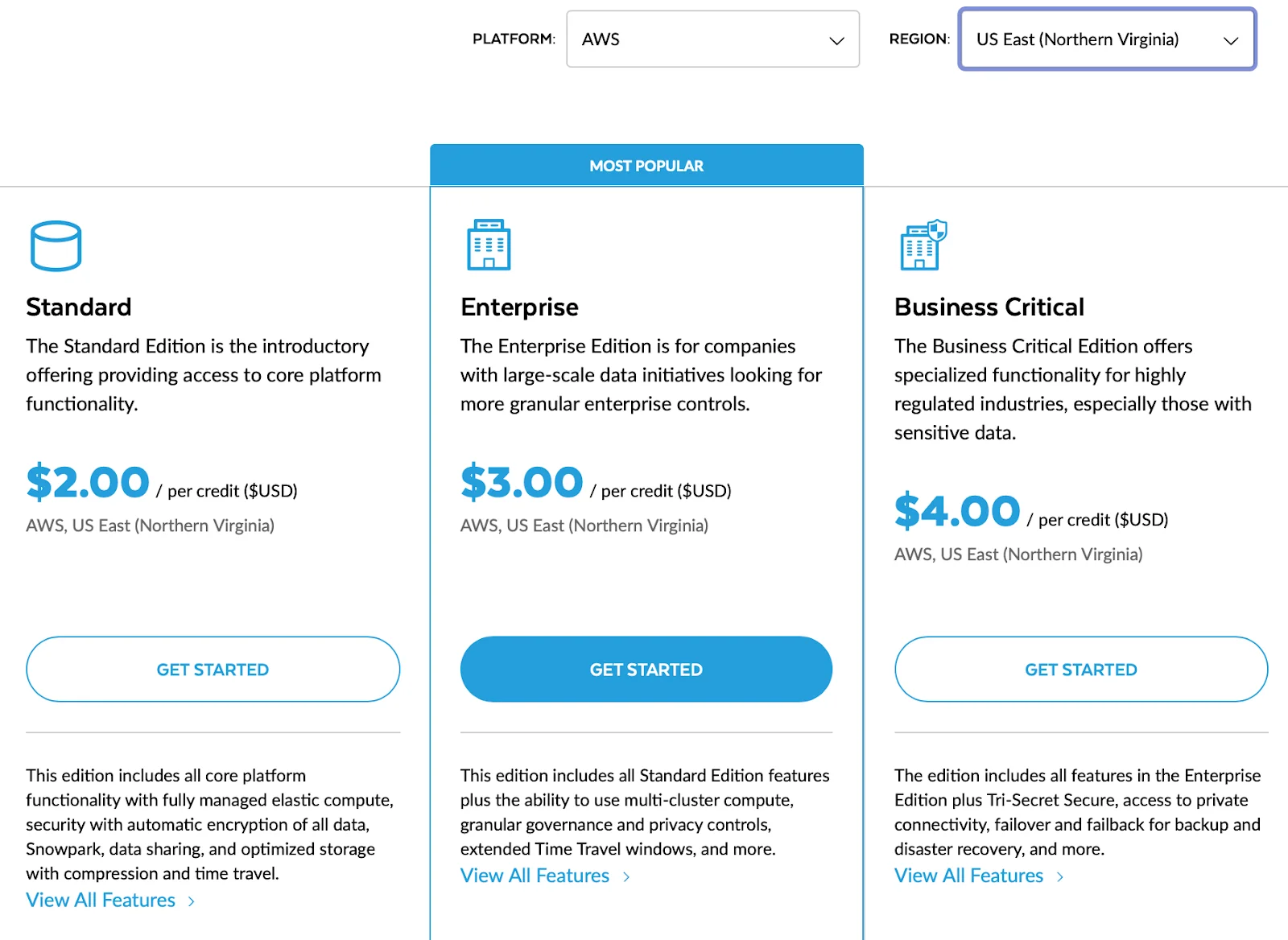

You can pay for Snowflake on demand or sign up for a longer-term capacity contract to get discounted rates. You also need to choose a Snowflake Edition (Standard, Enterprise, Business Critical, or Virtual Private Snowflake) based on the features your use cases require. Here’s what the Snowflake pricing page looks like for the AWS US East (Northern Virginia) region.

Snowflake credit pricing for AWS’s Northern Virginia region - Source: Snowflake.

When deciding on the region, you need to consider the data residency, security, compliance, and usage requirements, too.

Although most mature features are available across cloud platforms and regions, some newly released features may not yet be available in every region. Pricing for compute, storage, and data transfer also differs across regions, so the same workload can cost noticeably more in one region than in another.
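Because compute is billed in credits, a rough monthly estimate is the credits a warehouse consumes multiplied by the per-credit price for your region and edition. The sketch below assumes a standard virtual warehouse, per-second billing with a 60-second minimum each time the warehouse runs, and hypothetical per-credit prices; check the pricing page for the real figures.

```python
# Rough compute-cost estimate for a standard Snowflake virtual warehouse.
# Credits per hour by size follow Snowflake's standard-warehouse rates;
# the per-credit prices in the demo are hypothetical, not real regional prices.

CREDITS_PER_HOUR = {
    "XSMALL": 1,
    "SMALL": 2,
    "MEDIUM": 4,
    "LARGE": 8,
    "XLARGE": 16,
    "2XLARGE": 32,
    "3XLARGE": 64,
    "4XLARGE": 128,  # larger sizes exist but are omitted here
}


def credits_for_run(size: str, seconds: float) -> float:
    """Credits for one continuous run: billed per second, 60-second minimum."""
    billed_seconds = max(seconds, 60.0)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600.0


def monthly_cost(size: str, hours_per_day: float, days: int, price_per_credit: float) -> float:
    """Cost of one continuous run of hours_per_day on each of `days` days."""
    daily_credits = credits_for_run(size, hours_per_day * 3600.0)
    return daily_credits * days * price_per_credit


if __name__ == "__main__":
    # Hypothetical per-credit prices in two regions for the same edition.
    for region, price in [("region_a", 3.00), ("region_b", 3.90)]:
        cost = monthly_cost("MEDIUM", hours_per_day=2, days=30, price_per_credit=price)
        print(f"{region}: ${cost:,.2f}")
```

With these made-up prices, a Medium warehouse running two hours a day for 30 days uses 240 credits, which comes to $720.00 in region_a and $936.00 in region_b: the same workload, a 30% difference driven only by the per-credit price.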

How can you deploy Snowflake on AWS? #

You can deploy Snowflake on AWS by:

- Choosing a supported AWS region and Snowflake Edition

- Setting up Snowflake warehouses to run compute workloads

Note: Most processing and consumption workloads need a running warehouse, though some Snowflake features use separate serverless compute.

- Using Amazon S3 as external stages for loading/unloading data

- Creating storage integrations and external tables for direct access

- Using AWS services like Lambda and API Gateway for serverless extensions

Let’s explore the specifics.

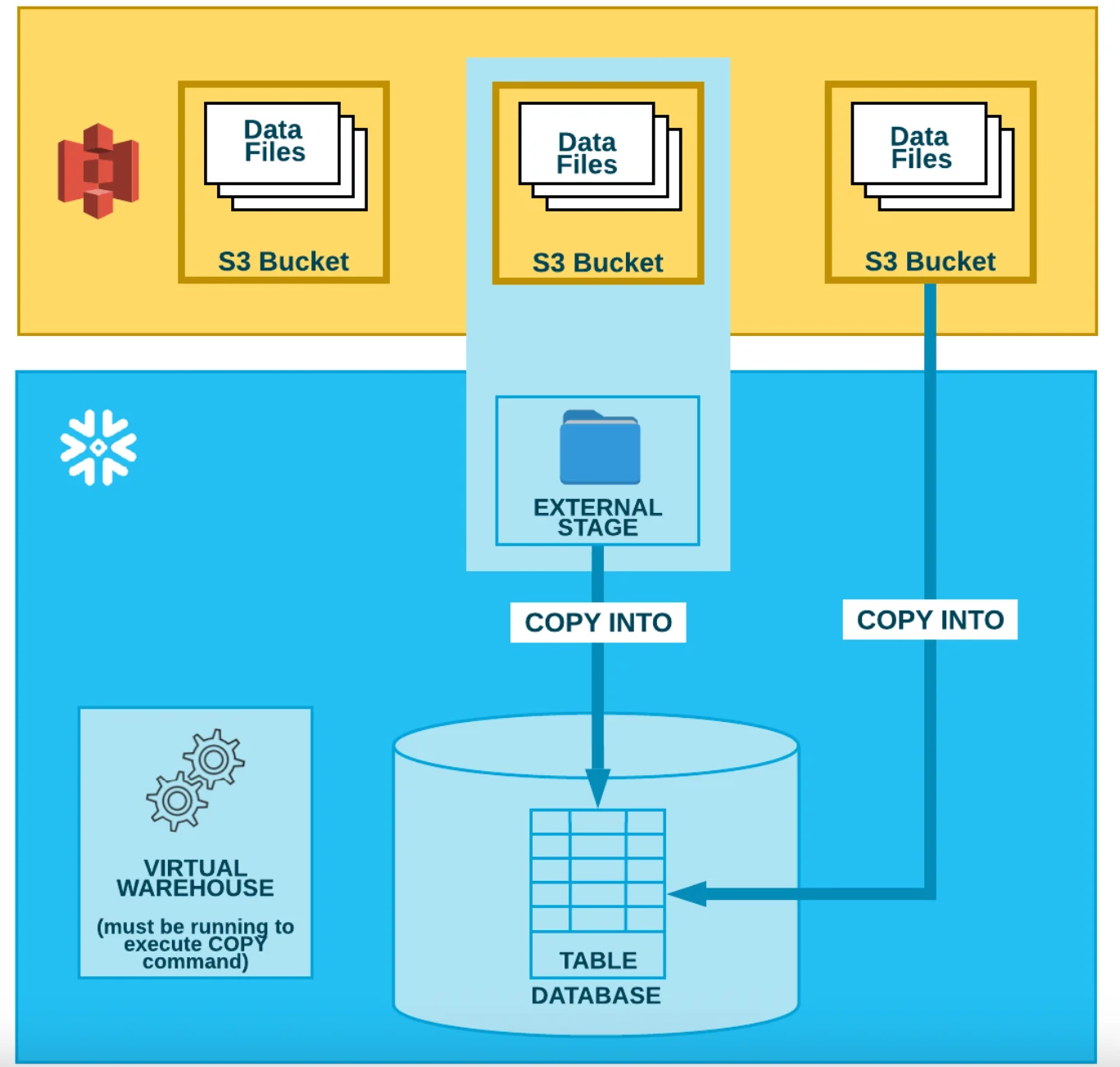

Staging data in Amazon S3 #

Snowflake uses the concept of stages to load and unload data from and to other data systems. You can choose one of the following options:

- Use a Snowflake-managed internal stage to load data into a Snowflake table from a local file system

- Use an external stage to load data from object-based storage

The unloading process also involves the same steps but in reverse.

As mentioned earlier, if your data is in Amazon S3 buckets, you can create an external stage that points to S3 and load your data into Snowflake from it. First, you need a storage integration: a Snowflake object that holds a Snowflake-generated AWS IAM identity and the S3 locations it may access, so you don’t have to embed AWS access keys in the stage definition.

You can create a storage integration to enable external stages in S3 using the following SQL statement:

```sql
CREATE STORAGE INTEGRATION <storage_integration_name>
  TYPE = EXTERNAL_STAGE
  STORAGE_PROVIDER = 'S3'
  ENABLED = TRUE
  STORAGE_AWS_ROLE_ARN = '<aws_iam_role_arn>'
  STORAGE_ALLOWED_LOCATIONS = ('s3://<bucket>/<path>/', 's3://<bucket>/<path>/')
  -- Optional: block specific paths inside the allowed locations
  -- STORAGE_BLOCKED_LOCATIONS = ('s3://<bucket>/<path>/', 's3://<bucket>/<path>/')
;
```
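After you create the integration, running DESC INTEGRATION <storage_integration_name> shows the AWS IAM user Snowflake created for your account (STORAGE_AWS_IAM_USER_ARN) and an external ID (STORAGE_AWS_EXTERNAL_ID). Both go into the trust policy of the IAM role named in STORAGE_AWS_ROLE_ARN, and the role also needs a permissions policy for the bucket. The Python sketch below builds both policy documents; it follows the general shape of Snowflake’s S3 setup guide, and the bucket, prefix, ARN, and external ID values are hypothetical.

```python
# Build the two IAM policy documents a Snowflake storage integration relies on.
# The ARN and external ID come from DESC INTEGRATION; demo values are made up.
import json


def trust_policy(snowflake_iam_user_arn: str, external_id: str) -> dict:
    """Trust policy that lets Snowflake's IAM user assume your role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": snowflake_iam_user_arn},
                "Action": "sts:AssumeRole",
                # The external ID ties the role to this specific integration.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


def s3_access_policy(bucket: str, prefix: str) -> dict:
    """Permissions to read, write, and list objects under one S3 prefix."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:GetObjectVersion",
                    "s3:DeleteObject",
                    "s3:DeleteObjectVersion",
                ],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(json.dumps(trust_policy("arn:aws:iam::123456789012:user/example", "EXAMPLE_EXTERNAL_ID"), indent=2))
    print(json.dumps(s3_access_policy("my-bucket", "data/"), indent=2))
```

Generating the documents from the DESC INTEGRATION output, rather than hand-editing JSON, makes it harder to paste the wrong external ID; keeping the prefix narrow limits what the integration can touch.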

With the storage integration in place, you can create a stage that specifies the S3 location and the format of the files you want to load into Snowflake, as shown in the SQL below:

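```sql
-- The stage references a named file format, so create it first (CSV assumed)
CREATE FILE FORMAT <csv_format_name> TYPE = CSV;

CREATE STAGE <stage_name>
  STORAGE_INTEGRATION = <storage_integration_name>
  URL = 's3://<bucket>/<path>/'
  FILE_FORMAT = (FORMAT_NAME = '<csv_format_name>');
```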
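The stage URL has to fall within the integration’s STORAGE_ALLOWED_LOCATIONS and outside any STORAGE_BLOCKED_LOCATIONS. The hypothetical stage_url_permitted function below is a rough approximation of that rule, assuming locations behave as plain S3 URL prefixes and that '*' in the allowed list permits any location; it illustrates the idea and is not Snowflake’s actual validation logic.

```python
# Rough illustration of how allowed and blocked locations constrain a stage URL.
# Assumption: locations act as plain S3 URL prefixes and '*' allows everything;
# Snowflake's real validation may differ in details.
from typing import Sequence


def _with_slash(url: str) -> str:
    # Compare folder-style so "s3://b/data/" does not also cover "s3://b/database/".
    return url if url.endswith("/") else url + "/"


def stage_url_permitted(url: str, allowed: Sequence[str], blocked: Sequence[str] = ()) -> bool:
    """True if url sits under an allowed location and under no blocked one."""
    target = _with_slash(url)
    if any(target.startswith(_with_slash(b)) for b in blocked):
        return False
    return any(a == "*" or target.startswith(_with_slash(a)) for a in allowed)


if __name__ == "__main__":
    allowed = ["s3://my-bucket/data/"]  # hypothetical bucket and paths
    blocked = ["s3://my-bucket/data/private/"]
    for url in ["s3://my-bucket/data/orders/", "s3://my-bucket/data/private/pii/", "s3://my-bucket/database/"]:
        print(url, stage_url_permitted(url, allowed, blocked))
```

Blocked locations are checked first here so that a narrower blocked path always wins over a broader allowed one.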

Using the storage integration and the external stages, you can load data from and unload data into S3. Let’s explore the specifics.

Loading and unloading data in Amazon S3 #

Once the stage is created, you can use the COPY INTO command for bulk-loading the data into a table, as shown in the SQL statement below:

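```sql
COPY INTO <table_name>
  FROM @<stage_name>
  PATTERN = '.*address.*[.]csv';
```

The pattern selects staged files whose paths contain address and end in .csv, such as address.part001.csv, address.part002.csv, and so on, and Snowflake loads them in parallel into the specified table. Writing the extension as [.]csv keeps the dot literal; an unescaped . would match any character.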
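Before running COPY INTO, it can help to check locally which file paths a PATTERN selects. This sketch assumes, as Snowflake’s COPY INTO documentation describes for bulk loads, that the regular expression must match the whole staged path; Python’s re.fullmatch stands in for Snowflake’s regex engine (the dialects are not identical), and the file paths are hypothetical.

```python
# Preview which staged paths a COPY INTO PATTERN would select.
# Assumption: for bulk loads the regex must match the whole path, so
# re.fullmatch stands in for Snowflake's matching; dialects can differ.
import re

LOOSE = r".*address.*.csv"     # unescaped "." before csv matches any character
STRICT = r".*address.*[.]csv"  # "[.]" requires a literal dot before csv

# Hypothetical staged file paths.
PATHS = [
    "landing/address.part001.csv",
    "landing/address.part002.csv",
    "landing/address_backup_csv",
    "landing/address.part003.csv.gz",
    "landing/orders.part001.csv",
]


def selected(pattern: str, paths: list) -> list:
    """Return the paths the pattern matches in full, in their original order."""
    regex = re.compile(pattern)
    return [p for p in paths if regex.fullmatch(p)]


if __name__ == "__main__":
    print("loose: ", selected(LOOSE, PATHS))
    print("strict:", selected(STRICT, PATHS))
```

The loose pattern also picks up landing/address_backup_csv, which has no .csv extension at all, while the strict one does not. Neither matches address.part003.csv.gz, so compressed files need a pattern that allows the extra suffix.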

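You don’t need a separate stage for unloading data, but it’s good practice to write unloaded files to a dedicated path, such as unload/, as shown in the SQL statement below:

```sql
COPY INTO @<stage_name>/unload/ FROM address;
```

Snowflake writes the table’s rows as one or more compressed files under that path. If <stage_name> is an internal stage, you can then download them to your local file system by running GET from a client such as SnowSQL:

```sql
GET @<stage_name>/unload/ file:///tmp/unload/;
```

GET does not work with external stages, so if <stage_name> points to S3, download the unloaded files with AWS tools such as the AWS CLI instead.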

Loading the contents of Amazon S3 buckets into Snowflake - Source: Snowflake.

The Snowflake + AWS integration also lets you query data in an external stage without loading it into Snowflake. To do this, you create an external table, which stores file-level metadata (such as file names and versions) about the staged files in Snowflake.

It’s common practice to organize files in object stores like Amazon S3 into year, month, and day partitions. When you create the external table, you can derive a partition column from the METADATA$FILENAME pseudocolumn, which contains each file’s path, as shown in the SQL statement below:

```sql
-- Assumes file paths like <path>/orders/YYYY/MM/DD/<file>.parquet
CREATE EXTERNAL TABLE orders (
  date_part date AS TO_DATE(SPLIT_PART(METADATA$FILENAME, '/', 3)
    || '/' || SPLIT_PART(METADATA$FILENAME, '/', 4)
    || '/' || SPLIT_PART(METADATA$FILENAME, '/', 5), 'YYYY/MM/DD'),
  order_time bigint AS (value:order_time::bigint),
  order_quantity int AS (value:order_quantity::int),
  reference_number varchar AS (value:reference_number::varchar)
)
PARTITION BY (date_part)
LOCATION = @<stage_name>/orders/
AUTO_REFRESH = TRUE
FILE_FORMAT = (TYPE = PARQUET)
AWS_SNS_TOPIC = 'arn:aws:sns:<region>:<aws_account_id>:<sns_topic_name>';
```

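With AUTO_REFRESH = TRUE, Snowflake refreshes the external table’s metadata automatically when Amazon S3 event notifications report new or changed files, which keeps the table up to date. The AWS_SNS_TOPIC parameter is needed only when those notifications are routed through an Amazon SNS topic.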
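To see what the date_part expression computes, the sketch below mirrors SPLIT_PART (which counts parts from 1) and TO_DATE in Python. It assumes METADATA$FILENAME values shaped like raw/orders/2025/05/14/part-0001.parquet, where raw stands in for the stage’s <path>; the file names are hypothetical.

```python
# Mirror the date_part expression of the orders external table in Python.
# Assumption: METADATA$FILENAME looks like "raw/orders/YYYY/MM/DD/<file>",
# where "raw" stands in for the stage's <path>.
from datetime import date, datetime


def split_part(value: str, delimiter: str, part: int) -> str:
    """1-based like Snowflake's SPLIT_PART (positive part numbers only).

    Returns "" when the part number is out of range.
    """
    pieces = value.split(delimiter)
    return pieces[part - 1] if 1 <= part <= len(pieces) else ""


def date_part_from_filename(filename: str) -> date:
    """Join parts 3, 4, 5 as 'YYYY/MM/DD' and parse it, like TO_DATE(..., 'YYYY/MM/DD')."""
    ymd = "/".join(split_part(filename, "/", n) for n in (3, 4, 5))
    return datetime.strptime(ymd, "%Y/%m/%d").date()


if __name__ == "__main__":
    print(date_part_from_filename("raw/orders/2025/05/14/part-0001.parquet"))
```

If the stage URL path has more than one segment, the year, month, and day move to later positions, so the part numbers in the CREATE EXTERNAL TABLE statement have to change to match.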

Can you use external Lambda functions with Snowflake? #

Just as external tables let you query data without moving it into Snowflake, external functions let you run business logic without moving it into Snowflake.

An external function calls code that runs in a remote service outside Snowflake, which lets you offload parts of your data platform to AWS. The most prominent option is AWS Lambda, the serverless compute service from AWS.

Connecting to AWS Lambda follows the same pattern as connecting to Amazon S3: you first create an integration, in this case an API integration, as shown in the SQL statement below:

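```sql
CREATE OR REPLACE API INTEGRATION <api_integration_name>
  API_PROVIDER = aws_api_gateway
  API_AWS_ROLE_ARN = '<aws_iam_role_arn>'
  -- Restrict calls to your own API Gateway endpoint(s)
  API_ALLOWED_PREFIXES = ('https://<api_id>.execute-api.<region>.amazonaws.com/<api_stage>/')
  ENABLED = TRUE;
```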

Snowflake uses Amazon API Gateway as a proxy to invoke the Lambda function, which is why API_PROVIDER is set to aws_api_gateway.

External functions can enrich, clean, protect, and mask data. The Lambda function behind an external function can call third-party APIs or run your own code. A common example is External Tokenization, which combines masking policies with external functions so that a service such as AWS Lambda can act as the tokenizer.
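On the AWS side, Snowflake sends the function a batch of rows as JSON of the form {"data": [[row_number, value, ...], ...]} and expects a response that returns the same row numbers, {"data": [[row_number, result], ...]}. The handler below is a minimal masking sketch that assumes Lambda proxy integration in API Gateway (so the payload arrives as a string in event["body"]) and a single VARCHAR argument; mask_value and its keep-the-last-four rule are hypothetical. You would then expose it in Snowflake with CREATE EXTERNAL FUNCTION ... API_INTEGRATION = <api_integration_name> AS '<API Gateway endpoint URL>'.

```python
# Minimal AWS Lambda handler for a Snowflake external function (masking sketch).
# Assumes API Gateway Lambda proxy integration: the request JSON is a string
# in event["body"], with rows shaped [row_number, value].
import json


def mask_value(value):
    """Hypothetical rule: mask all but the last four characters; keep NULL as None."""
    if value is None:
        return None
    text = str(value)
    if len(text) <= 4:
        return "*" * len(text)  # too short to reveal anything safely
    return "*" * (len(text) - 4) + text[-4:]


def lambda_handler(event, context):
    try:
        rows = json.loads(event["body"])["data"]
        # Return exactly one output row per input row, keyed by row number.
        results = [[row[0], mask_value(row[1])] for row in rows]
        return {"statusCode": 200, "body": json.dumps({"data": results})}
    except (KeyError, IndexError, TypeError, ValueError) as err:
        # Malformed input: a non-200 status surfaces as an error in Snowflake.
        return {"statusCode": 400, "body": json.dumps({"error": str(err)})}
```

Keeping the row numbers from the request is what lets Snowflake line each result back up with its input row, even though rows arrive in batches.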

How can you use the Snowflake Business Critical Edition on AWS? #

The Business Critical Edition of Snowflake builds on the Enterprise Edition with stronger security, availability, and support. On AWS, Business Critical Edition supports AWS PrivateLink, which connects your VPCs to Snowflake over the private AWS network instead of the public internet.

PrivateLink helps you meet regulatory requirements such as PCI DSS and HIPAA and reduces the risk of data exposure. It also lets you access Snowflake’s internal stages and call external functions through private endpoints.

Some businesses, especially those handling financial and medical data, have strict data storage and residency requirements and keep critical data in their own on-premises data centers. In such cases, you can use AWS Direct Connect, a dedicated network connection between your data center and AWS, to bring all your infrastructure onto a single private network.

Summary #

Snowflake runs seamlessly on AWS, offering integration with key AWS services like Amazon S3, API Gateway, Lambda, PrivateLink, and Direct Connect. These services enhance Snowflake’s native capabilities for data loading, processing, and secure connectivity. Teams can also take advantage of the Business Critical Edition on AWS to meet advanced privacy, compliance, and integration needs across their cloud infrastructure.

By combining Snowflake’s governance capabilities with AWS Glue Data Catalog, you can build a metadata-rich environment on AWS. This metadata can be integrated into Atlan—a control plane for data, metadata, and AI—to improve discovery, governance, and collaboration across teams. With Atlan’s two-way tag sync for Snowflake, you can also implement shift-left governance and manage tags directly from Atlan.

You can learn more about the Snowflake + AWS partnership from Snowflake’s official website and the Snowflake + Atlan partnership here.

Snowflake on AWS: Frequently asked questions (FAQs) #

1. Is Snowflake available on AWS? #

Yes, Snowflake has been available on AWS since its early days. As of May 2025, it’s deployed across 24 AWS regions, including SnowGov regions in the US.

2. How do I deploy Snowflake on AWS? #

You can deploy Snowflake by selecting an AWS region, choosing a Snowflake Edition based on your requirements, and creating virtual warehouses to run your workloads. Because Snowflake is fully managed, you don’t provision or maintain the underlying AWS infrastructure yourself.

3. Can I load and unload data between Snowflake and Amazon S3? #

Yes. Snowflake supports external stages that let you load data from and unload data to Amazon S3 buckets using simple SQL commands like COPY INTO. It also supports partitioning and auto-refresh for keeping external tables up-to-date.

4. How does Snowflake integrate with AWS Lambda? #

You can create external functions that connect to AWS Lambda via AWS API Gateway. This allows you to offload logic such as data enrichment or masking to serverless functions outside of Snowflake.

5. Can I use Snowflake’s Business Critical Edition on AWS? #

Yes. Snowflake’s Business Critical Edition provides enhanced security and compliance support (for example, for HIPAA and PCI DSS workloads). On AWS, it supports AWS PrivateLink, which you can combine with AWS Direct Connect to establish private, secure networking between Snowflake and your infrastructure.

6. Can I use AWS services like Glue or EventBridge with Snowflake? #

Yes. Snowflake integrates with AWS services like Glue for metadata management, EventBridge for automation, and Direct Connect for hybrid cloud infrastructure.

7. How does Atlan enhance metadata governance on AWS + Snowflake? #

Atlan acts as a metadata control plane, integrating with Snowflake and AWS Glue to improve discovery and governance. With features like two-way tag sync, you can manage metadata and policies directly within Atlan.

Snowflake on AWS: Related reads #

- Snowflake Summit 2025: How to Make the Most of This Year’s Event

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Snowflake Data Catalog: Importance, Benefits, Native Capabilities & Evaluation Guide

- Snowflake Data Governance: Features, Frameworks & Best practices

- Snowflake Metadata Management: Importance, Challenges, and Identifying The Right Platform

- Snowflake Data Lineage: A Step-by-Step How to Guide

- Snowflake Data Dictionary: Documentation for Your Database

- Snowflake on Azure: How to Deploy in 2025 [A Practical Guide]

- Snowflake on GCP: A Practical Guide For Deployment

- Snowflake Conferences 2025: Top Events to Watch Out For

- Snowflake Horizon for Data Governance: Your Complete 2025 Guide

- Snowflake Cortex: Top Capabilities and Use Cases to Know in 2025

- Snowflake Copilot: Everything We Know About This AI-Powered Assistant
