Snowflake on GCP: How to Deploy in 2025 [Practical Guide]

Snowflake is a Data Cloud platform that supports modern data architectures like warehouses, lakes, and lakehouses across your preferred cloud provider.

Google Cloud Platform (GCP), part of the broader Google Cloud ecosystem, offers over 100 services to build and scale cloud applications—from infrastructure to AI and analytics.

This article explores how to deploy and run Snowflake on GCP, covering:

- Region availability and pricing

- Deploying Snowflake on GCP

- Loading and unloading data in Google Cloud Storage

- Using Snowpipe with GCS events

- Leveraging Google Cloud Functions

- Using the Snowflake Business Critical Edition on GCP

- Common FAQs about deploying Snowflake on GCP

Table of contents #

- Is Snowflake available on Google Cloud Platform (GCP)?

- How can you deploy Snowflake on GCP?

- Can you use Snowpipe with GCS events?

- How can you use external cloud functions in GCP?

- How can you use the Snowflake Business Critical Edition on GCP?

- Summary

- Snowflake on GCP: Frequently asked questions (FAQs)

- Snowflake on GCP: Related reads

Is Snowflake available on Google Cloud Platform (GCP)? #

Snowflake is a SaaS platform that handles infrastructure provisioning, database maintenance, and data storage for you. Since early 2020, Snowflake has been generally available on Google Cloud Platform.

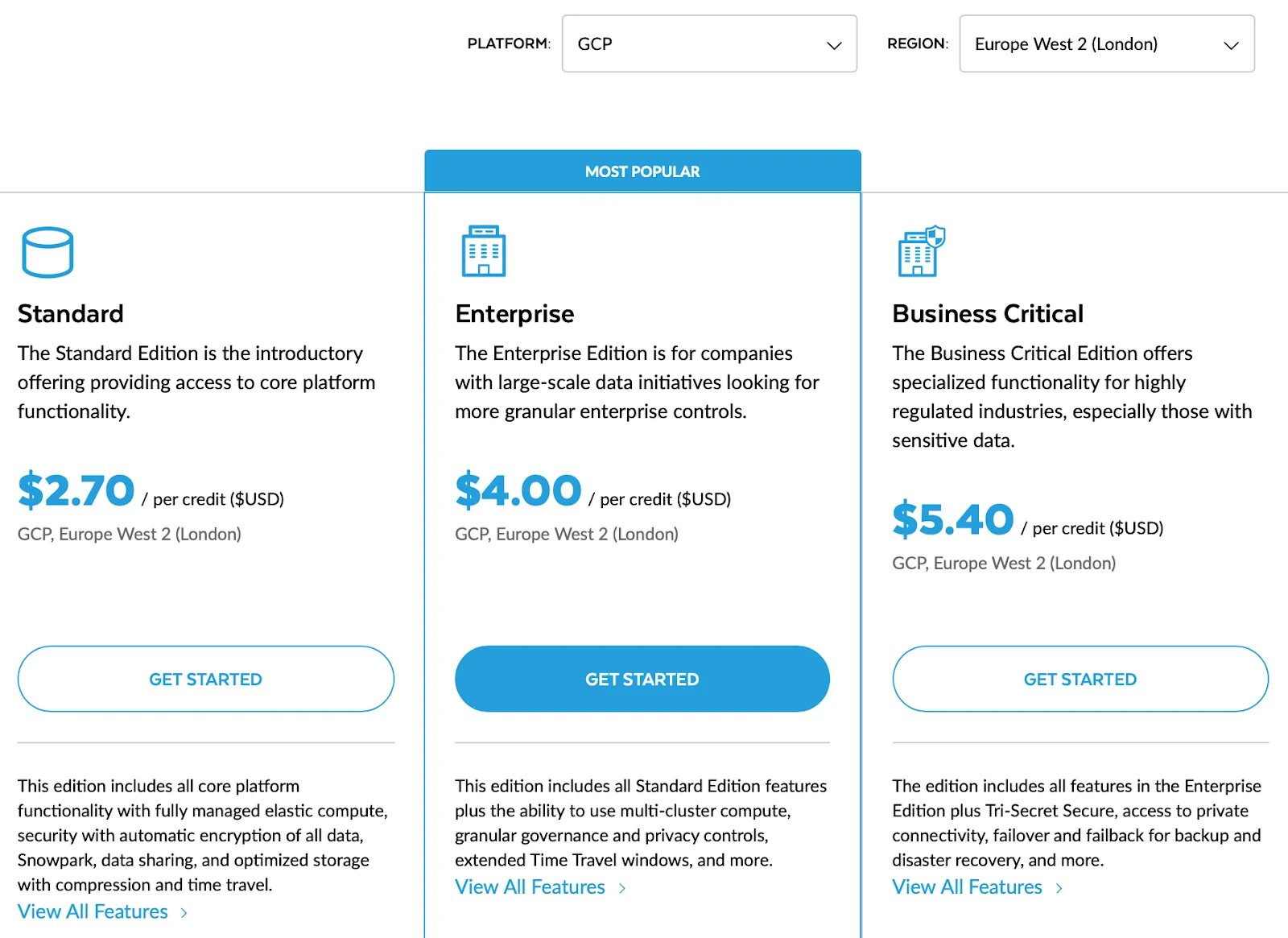

As of May 2025, Snowflake is available in only six GCP regions: Iowa, Northern Virginia, London, the Netherlands, Frankfurt, and Dammam. Here's what the Snowflake pricing page looks like for the Google Cloud London region.

Snowflake credit pricing for GCP’s London region - Source: Snowflake.

Not all regions support the same features, compliance needs, or pricing. Security options, newly released features, and costs for compute, storage, and data movement can vary. Evaluate these factors carefully before choosing a region for your Snowflake deployment.

How can you deploy Snowflake on GCP? #

You can deploy Snowflake on GCP by:

- Choosing a supported GCP region and Snowflake Edition

- Setting up Snowflake warehouses to run compute workloads

- Using Google Cloud Storage (GCS) as external stages

- Creating storage integrations and external tables

- Using Snowpipe with Google Cloud Pub/Sub

- Integrating with Cloud Functions via Google API Gateway

Let’s explore the specifics further.

Staging data in GCP #

Snowflake uses the concept of stages to load and unload data from and to other data systems. You can choose one of the following options:

- A Snowflake-managed internal stage to load data into a Snowflake table from a local file system

- An external stage to load data from object-based storage

The unloading process also involves the same steps but in reverse.

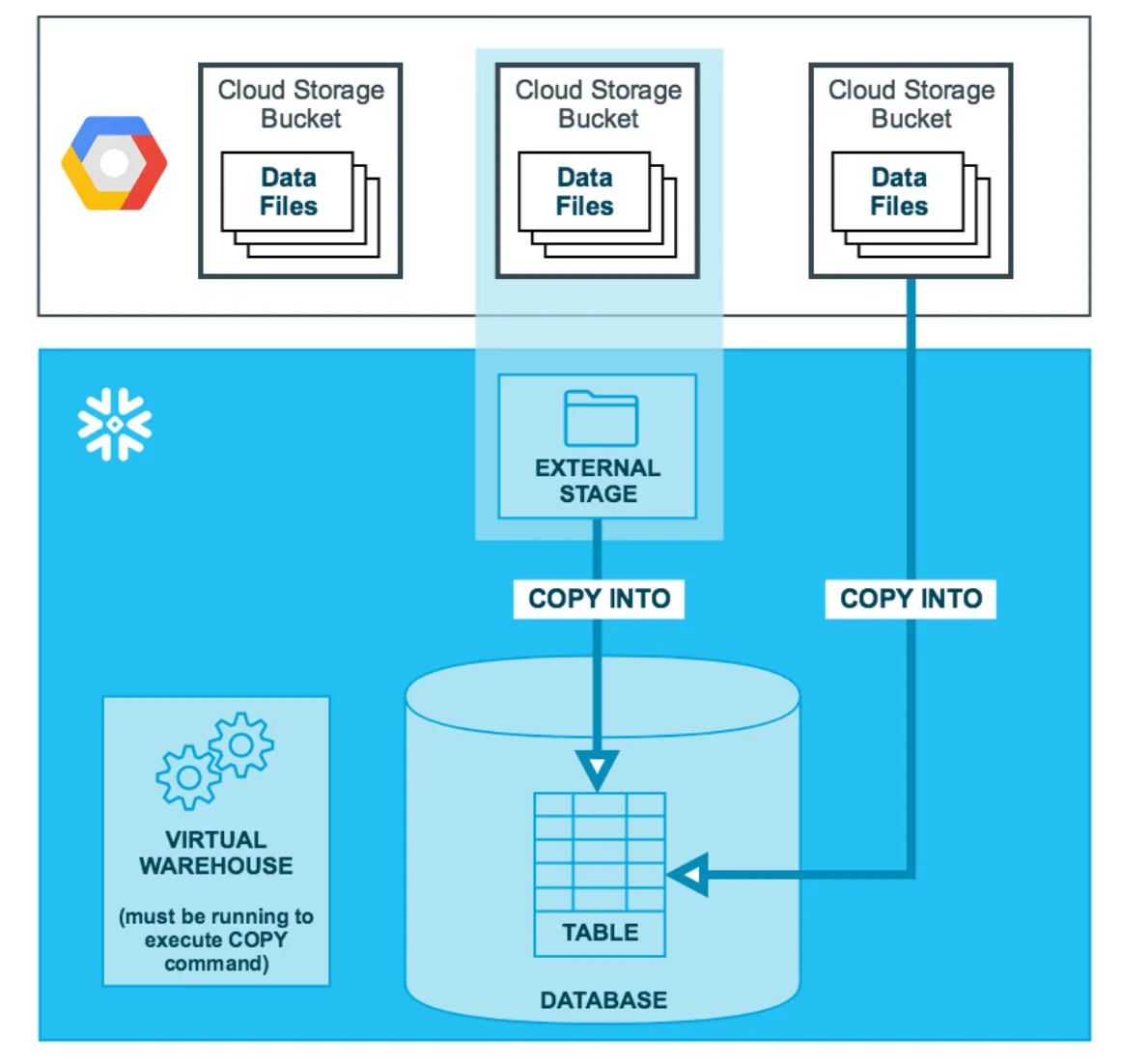

If you use Google Cloud Platform, you probably store some data, temporarily or permanently, in Google Cloud Storage (GCS) buckets. You might even have an existing data lake there from which you want to move data into Snowflake for more structured consumption.

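If your data is in GCS buckets, you can create an external stage for your bucket to load data into Snowflake. First, create a storage integration. Instead of storing your Google Cloud credentials in Snowflake, the integration is backed by a Cloud Storage service account that Snowflake creates for it, and you grant that service account access to your bucket. You can create a storage integration using the following SQL statement in Snowflake: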

```sql
-- Square brackets mark an optional clause; replace the <...> placeholders with your values.
CREATE STORAGE INTEGRATION <integration_name>
  TYPE = EXTERNAL_STAGE
  STORAGE_PROVIDER = 'GCS'
  ENABLED = TRUE
  STORAGE_ALLOWED_LOCATIONS = ('gcs://<bucket>/<path>/', 'gcs://<bucket>/<path>/')
  [ STORAGE_BLOCKED_LOCATIONS = ('gcs://<bucket>/<path>/', 'gcs://<bucket>/<path>/') ];
```

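Next, run DESC STORAGE INTEGRATION <integration_name> to find the service account Snowflake created (the STORAGE_GCP_SERVICE_ACCOUNT property), and grant it access to your bucket in Google Cloud: read access for loading, plus write and delete access if you also unload data. Then, using the storage integration, create a stage by specifying the Google Cloud Storage bucket path and the format of the files you want to stage into Snowflake: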

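```sql
-- gcs_int stands in for the storage integration created above, and my_csv_format
-- is a named file format that must already exist (see CREATE FILE FORMAT).
CREATE STAGE my_gcs_stage
  URL = 'gcs://mybucket1/path1'
  STORAGE_INTEGRATION = gcs_int
  FILE_FORMAT = my_csv_format;
```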
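A stage URL only works if the integration's locations permit it. The Python sketch below approximates that rule as folder-prefix checks, to make STORAGE_ALLOWED_LOCATIONS and STORAGE_BLOCKED_LOCATIONS concrete. It is an illustration, not Snowflake's validation code: the function names and bucket paths are hypothetical, and it assumes a matching blocked location overrides the allowed list and that '*' in the allowed list permits any location.

```python
from typing import Optional


def _as_prefix(location: str) -> str:
    # Treat every location as a folder prefix so that "gcs://b/path1" and
    # "gcs://b/path1/" compare equal, while "gcs://b/path10/" does not count
    # as a child of "gcs://b/path1/".
    return location if location.endswith("/") else location + "/"


def stage_url_permitted(
    stage_url: str,
    allowed: list[str],
    blocked: Optional[list[str]] = None,
) -> bool:
    """Approximate whether an integration's locations permit a stage URL."""
    if not stage_url.startswith("gcs://"):
        return False  # A GCS storage integration only covers gcs:// URLs.
    url = _as_prefix(stage_url)
    # Assumption: a matching blocked location overrides the allowed list.
    if any(url.startswith(_as_prefix(b)) for b in blocked or []):
        return False
    # "*" in the allowed list permits any location.
    return any(a == "*" or url.startswith(_as_prefix(a)) for a in allowed)


# Hypothetical locations for illustration.
allowed = ["gcs://mybucket1/path1/"]
blocked = ["gcs://mybucket1/path1/sensitive/"]
print(stage_url_permitted("gcs://mybucket1/path1", allowed, blocked))  # True
print(stage_url_permitted("gcs://mybucket1/path1/sensitive/", allowed, blocked))  # False
print(stage_url_permitted("gcs://mybucket1/path10/", allowed, blocked))  # False
```

Treating locations as folder prefixes, rather than raw string prefixes, is what keeps path10/ from slipping in under an allowance for path1/.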

Once the stage exists, you can load data from and unload data into Google Cloud Storage buckets.

Loading and unloading data in Google Cloud Storage (GCS) #

Once the Google Cloud Storage (GCS) external stage is created, you can use the COPY INTO command for bulk-loading the data into a table, as shown in the SQL statement below:

```sql
-- [.] matches a literal dot; an unescaped "." would match any character.
COPY INTO <table_name>
  FROM @<stage_name>
  PATTERN = '.*address.*[.]csv';
```

The regular expression selects every file whose path contains address and ends in .csv, such as address.part001.csv and address.part002.csv, and Snowflake loads the matching files into the specified table in parallel.
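Because PATTERN is a regular expression rather than a file glob, the dot before csv matters. This Python sketch compares a loose pattern such as '.*address.*.csv' with a stricter '.*address.*[.]csv' over a few hypothetical file paths. It assumes, as the leading .* suggests, that the expression must match the whole path, and uses re.fullmatch to model that.

```python
import re

# Assumption: as with PATTERN in a bulk COPY INTO, the regex has to match the
# whole file path, which is why both patterns start with ".*".
LOOSE_PATTERN = r".*address.*.csv"    # the "." before csv matches any character
STRICT_PATTERN = r".*address.*[.]csv"  # "[.]" matches only a literal dot


def matching_files(pattern: str, paths: list[str]) -> list[str]:
    """Return the paths that the pattern selects when matched against the full path."""
    regex = re.compile(pattern)
    return [p for p in paths if regex.fullmatch(p)]


# Hypothetical object paths under the stage location.
paths = [
    "path1/address.part001.csv",
    "path1/address.part002.csv",
    "path1/address.part003.csv.gz",
    "path1/address_backup_csv",
    "path1/orders.part001.csv",
]
print(matching_files(LOOSE_PATTERN, paths))
# ['path1/address.part001.csv', 'path1/address.part002.csv', 'path1/address_backup_csv']
print(matching_files(STRICT_PATTERN, paths))
# ['path1/address.part001.csv', 'path1/address.part002.csv']
```

Neither pattern selects address.part003.csv.gz. If your files are compressed, extend the pattern accordingly, for example to '.*address.*[.]csv[.]gz'.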

You don't need a separate stage for unloading data. Still, it's good practice to write unloaded files to a dedicated path, such as unload/, within the stage, as shown in the SQL statement below:

```sql
COPY INTO @<stage_name>/unload/ FROM address;
```

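When the stage is the GCS external stage, the unloaded files are written straight to your bucket, and you can download them with Google Cloud tools such as gcloud storage cp or the Cloud console. The GET command only works with internal stages. If you unload to an internal stage instead, run GET from SnowSQL or another Snowflake client to download the files to a local directory:

```sql
-- GET downloads files from an internal stage to a local directory.
GET @mystage/unload/ file:///tmp/unload/;
```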

Loading the contents of a Cloud Storage bucket into Snowflake - Source: Snowflake.

The Snowflake + Google Cloud Platform storage integration also lets you query data in an external stage without loading it into Snowflake. To do this, create external tables in Snowflake. External tables store file-level metadata about the staged files, such as file names and version identifiers, in Snowflake, while the data itself stays in GCS.

When you create external tables that refer to a Google Cloud Storage external stage, you can set that external table to refresh automatically using Google Cloud Pub/Sub events from your Google Cloud Storage bucket.

Can you use Snowpipe with GCS events? #

Snowpipe is Snowflake's managed service for loading data continuously in micro-batches. Where COPY INTO loads files in bulk when you run it, Snowpipe loads new files shortly after they arrive in GCS. Like bulk loading, the Snowpipe + Google Cloud Platform integration relies on a storage integration and an external stage.

To trigger Snowpipe loads, configure your GCS bucket to publish event notifications to a Google Cloud Pub/Sub topic and create a subscription for that topic. Then create a notification integration that points Snowflake at the subscription, using the following SQL statement:

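```sql
-- <gcp_subscription_id> is the full subscription name, for example
-- projects/<project_id>/subscriptions/<subscription_name>.
CREATE NOTIFICATION INTEGRATION <notification_integration_name>
  TYPE = QUEUE
  NOTIFICATION_PROVIDER = GCP_PUBSUB
  ENABLED = TRUE
  GCP_PUBSUB_SUBSCRIPTION_NAME = '<gcp_subscription_id>';
```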
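Snowflake reads the Pub/Sub messages itself, so this is not code you need to deploy, but it helps to know what those messages carry when you set up the bucket notification. Cloud Storage puts event metadata in message attributes such as eventType, bucketId, and objectId, and Snowflake's setup instructions configure the notification for OBJECT_FINALIZE (object created) events. The Python sketch below, with a hypothetical helper and made-up sample messages, extracts the gcs:// URL of a newly written object from a message shaped like a Pub/Sub message dictionary.

```python
from typing import Optional


def finalized_object_url(message: dict) -> Optional[str]:
    """Return gcs://<bucket>/<object> for an OBJECT_FINALIZE notification, else None."""
    attributes = message.get("attributes", {})
    # Deletes, metadata updates, and archive events don't add new files to load.
    if attributes.get("eventType") != "OBJECT_FINALIZE":
        return None
    bucket = attributes.get("bucketId")
    name = attributes.get("objectId")
    if not bucket or not name:
        return None
    return f"gcs://{bucket}/{name}"


# Hypothetical messages, trimmed to the attributes used above.
created = {"attributes": {"eventType": "OBJECT_FINALIZE", "bucketId": "mybucket1",
                          "objectId": "path1/address.part004.csv"}}
deleted = {"attributes": {"eventType": "OBJECT_DELETE", "bucketId": "mybucket1",
                          "objectId": "path1/address.part001.csv"}}
print(finalized_object_url(created))  # gcs://mybucket1/path1/address.part004.csv
print(finalized_object_url(deleted))  # None
```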

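Next, run DESC NOTIFICATION INTEGRATION <notification_integration_name> to find the Pub/Sub service account Snowflake created for the integration, and grant that account the Pub/Sub Subscriber role on your subscription. Then create a pipe that uses the notification integration: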

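```sql
-- <copy_statement> is a COPY INTO <table_name> ... FROM @<stage_name> statement
-- that reads from the GCS external stage.
CREATE PIPE <pipe_name>
  AUTO_INGEST = TRUE
  INTEGRATION = '<notification_integration_name>'
  AS
  <copy_statement>;
```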
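If the pipe's COPY statement includes a PATTERN, Snowflake's documentation notes that Snowpipe applies it differently from a bulk load: it trims the stage definition's path from the file location and matches the expression against the remaining path. The Python sketch below approximates that rule for a single notified object. The function name, stage URL, and pattern are hypothetical, and the full-match behavior is an assumption.

```python
import re


def pipe_would_load(object_url: str, stage_url: str, pattern: str) -> bool:
    """Approximate whether a pipe's PATTERN selects a newly created object."""
    prefix = stage_url if stage_url.endswith("/") else stage_url + "/"
    if not object_url.startswith(prefix):
        return False  # The object is outside the stage's location.
    # Assumption: Snowpipe strips the stage URL's path and matches the pattern
    # against the remaining path, and the match must cover that whole remainder.
    relative_path = object_url[len(prefix):]
    return re.fullmatch(pattern, relative_path) is not None


stage_url = "gcs://mybucket1/path1"  # hypothetical stage URL
pattern = r"address.*[.]csv"
print(pipe_would_load("gcs://mybucket1/path1/address.part004.csv", stage_url, pattern))  # True
print(pipe_would_load("gcs://mybucket1/path1/orders.part001.csv", stage_url, pattern))  # False
print(pipe_would_load("gcs://mybucket1/archive/address.part004.csv", stage_url, pattern))  # False
```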

How can you use external cloud functions in GCP? #

Just as external tables let you query data without moving it into Snowflake, external functions let you run business logic without moving that logic into Snowflake.

An external function calls code that runs outside Snowflake, known as a remote service. This lets you offload parts of your data platform to Google Cloud Platform services, the most prominent of which is Cloud Functions, GCP's serverless compute option.

To integrate Snowflake with Cloud Functions, you have to create an API integration, as shown in the SQL statement below:

```sql
CREATE OR REPLACE API INTEGRATION <api_integration_name>
  API_PROVIDER = google_api_gateway
  GOOGLE_AUDIENCE = '<google_audience_claim>'
  -- 'https://' allows any HTTPS endpoint; you can narrow it to your API Gateway URL.
  API_ALLOWED_PREFIXES = ('https://')
  ENABLED = TRUE;
```

Snowflake uses Google Cloud API Gateway as a proxy service to send requests for Cloud Function invocation, which is why you have the API_PROVIDER listed as google_api_gateway.
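Behind the gateway, the Cloud Function receives rows in batches. Snowflake's external function format sends a JSON body of the form {"data": [[row_number, arg1, ...], ...]} and expects {"data": [[row_number, result], ...]} back, with one output row per input row. The Python sketch below shows only that batch logic, with hypothetical email-cleaning as the business rule. In an actual Cloud Function you would call it from an HTTP handler (for example, one built with the functions-framework library) that passes in the request body and returns the string with HTTP status 200; that wiring and the CREATE EXTERNAL FUNCTION statement are left out.

```python
import json


def clean_emails_batch(request_body: str) -> str:
    """Process one batch in Snowflake's external function JSON format.

    Snowflake sends {"data": [[row_number, arg1, ...], ...]} and expects
    {"data": [[row_number, result], ...]} back, one output row per input row.
    """
    rows = json.loads(request_body)["data"]
    results = []
    for row_number, email in rows:  # this function takes exactly one argument
        # Hypothetical cleaning logic: trim whitespace and lowercase; NULL stays NULL.
        cleaned = email.strip().lower() if isinstance(email, str) else None
        results.append([row_number, cleaned])
    return json.dumps({"data": results})


body = json.dumps({"data": [[0, "  Ana@Example.COM "], [1, None]]})
print(clean_emails_batch(body))  # {"data": [[0, "ana@example.com"], [1, null]]}
```

Echoing each row number back is what lets Snowflake match results to input rows, so keep it even if your function reorders or parallelizes work.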

External functions support several use cases, such as enriching, cleaning, protecting, and masking data. The remote service can call third-party APIs or implement the logic itself. A typical example is Snowflake's External Tokenization, where masking policies call an external function to tokenize or detokenize sensitive values, with Cloud Functions acting as the tokenizer.
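A tokenizer behind such an external function could look like the Python sketch below. It swaps in a simple one-way technique, deterministic HMAC-SHA256 tokens, so equal inputs map to equal tokens and joins on the tokenized column still work. It cannot detokenize; in Snowflake's External Tokenization, detokenization for authorized roles is typically handled by a tokenization provider, which this sketch does not model. The key, the tok_ prefix, and the function names are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical key for illustration only; a real deployment would load it from a
# secret store rather than hard-coding it.
TOKEN_KEY = b"replace-with-a-secret-key"


def tokenize(value: str) -> str:
    """Deterministically map a value to a one-way token with HMAC-SHA256."""
    digest = hmac.new(TOKEN_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Shortened to 16 hex characters for readability; truncation raises collision risk.
    return "tok_" + digest[:16]


def tokenize_batch(request_body: str) -> str:
    """Tokenize one batch in Snowflake's external function JSON format."""
    rows = json.loads(request_body)["data"]
    out = [[n, tokenize(v) if v is not None else None] for n, v in rows]
    return json.dumps({"data": out})


body = json.dumps({"data": [[0, "123-45-6789"], [1, "123-45-6789"], [2, None]]})
result = json.loads(tokenize_batch(body))["data"]
print(result[0][1] == result[1][1])  # True: equal inputs give equal tokens
print(result[2][1])  # None
```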

How can you use the Snowflake Business Critical Edition on GCP? #

Snowflake's Business Critical Edition offers higher levels of data protection than the Standard and Enterprise editions, especially for sensitive data such as PII and PHI. This is very helpful when your business needs to comply with regulations like HITRUST CSF and HIPAA.

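You can deploy Business Critical Edition on Google Cloud Platform and use Google Cloud Private Service Connect to reach Snowflake privately, without traversing the public internet. Before you rely on it, review the Private Service Connect limitations listed in Snowflake's documentation.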

Summary #

Snowflake integrates seamlessly with Google Cloud services like Cloud Storage, Cloud Functions, API Gateway, Private Service Connect, and Pub/Sub to enhance native capabilities for data loading, automation, and secure connectivity.

By combining Snowflake’s governance features with Google Cloud Data Catalog, you can build a metadata-rich stack and connect it with Atlan—a metadata control plane—to drive better discovery, governance, and collaboration.

You can learn more about the Snowflake + Google Cloud partnership from Snowflake's official website and about the Snowflake + Atlan partnership from Atlan.

Snowflake on GCP: Frequently asked questions (FAQs) #

1. Is Snowflake available on Google Cloud Platform? #

Yes, Snowflake has been generally available on GCP since early 2020. As of May 2025, it is supported in six GCP regions: Iowa, Northern Virginia, London, the Netherlands, Frankfurt, and Dammam.

2. How do I deploy Snowflake on GCP? #

You can deploy Snowflake by selecting a supported GCP region, choosing a Snowflake Edition, and provisioning compute resources (warehouses) to run your workloads.

3. Can I load and unload data between Snowflake and Google Cloud Storage? #

Yes. Snowflake supports external stages that let you load data from and unload data to Google Cloud Storage (GCS) using SQL commands like COPY INTO.

4. Does Snowflake integrate with Google Cloud Functions? #

Yes. You can create external functions in Snowflake that invoke Google Cloud Functions using Google API Gateway, enabling serverless processing outside of Snowflake.

5. Can I use Snowpipe with Google Cloud Pub/Sub? #

Yes. Snowpipe can be triggered using Google Cloud Pub/Sub events to support automated micro-batch data ingestion from GCS.

6. Is Snowflake’s Business Critical Edition supported on GCP? #

Yes. Business Critical Edition is available on GCP and includes support for Google Private Service Connect, offering enhanced data security and compliance for regulated industries.

7. How does Atlan support metadata governance on Snowflake + GCP? #

Atlan integrates with Snowflake and Google Cloud Data Catalog to centralize metadata, with features like active governance and collaboration, including support for tag sync with Snowflake.

Snowflake on GCP: Related reads #

- Snowflake Summit 2025: How to Make the Most of This Year’s Event

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Snowflake Data Catalog: Importance, Benefits, Native Capabilities & Evaluation Guide

- Snowflake Data Governance: Features, Frameworks & Best practices

- Snowflake Metadata Management: Importance, Challenges, and Identifying The Right Platform

- Snowflake Data Lineage: A Step-by-Step How to Guide

- Snowflake Data Dictionary: Documentation for Your Database

- Snowflake on Azure: How to Deploy in 2025 [A Practical Guide]

- Snowflake on GCP: A Practical Guide For Deployment

- Snowflake Conferences 2025: Top Events to Watch Out For

- Snowflake Horizon for Data Governance: Your Complete 2025 Guide

- Snowflake Cortex: Top Capabilities and Use Cases to Know in 2025

- Snowflake Copilot: Everything We Know About This AI-Powered Assistant

- GCP Data Catalog: What, Why & Popular Choices

- Benefits of Data Governance on GCP: What’s Available and How Can You Build On It?
