
Data mesh architecture revolutionizes data management by decentralizing data ownership and treating data as a product.


It empowers domain teams with self-serve infrastructure to manage, access, and share data seamlessly.

Unlike traditional data warehouses, it fosters scalability, real-time analytics, and federated governance.

This model enhances collaboration, ensuring interoperability across domains while maintaining strict compliance and security standards.

Table of contents #

- What is Data Mesh Architecture?

- Why should organizations consider using a data mesh architecture?

- Core principles of a data mesh architecture

- Core components of the data mesh architecture

- How Atlan Supports Data Mesh Concepts

- Conclusion on Data Mesh Architecture

- FAQs about Data Mesh Architecture

- Data mesh architecture: Related reads

What is Data Mesh Architecture? #

The data mesh architecture uses a decentralized approach to data processing, allowing data consumers to access and query data where it lives without transporting it to a data lake or a warehouse.

Organizations will work with multiple interoperable data lakes instead of having one data lake to rule them all.


Need an analogy? Here’s how Neal Ford, Director and Software Architect at Thoughtworks, puts it:

Rather than having data oceans, a data mesh introduces smaller data ponds, with canals between them.

As a result, it’s possible to mine vast amounts of data for insights in real time and at scale.

Organizations work with massive volumes of big data. Processing such volumes in real time requires a distributed approach to data storage, as opposed to today’s centralized data lakes and warehouses.

That’s why the data mesh architecture is the key to unlocking the full potential of everything from cloud computing and microservices to machine learning.

This article will discuss the data mesh architecture and its core principles, components, and benefits.

What is a Data Mesh? #

Data mesh is a modern, distributed approach to data management using a decentralized architecture. Such an approach addresses diverse needs, from analytics and business to machine learning.

Zhamak Dehghani, a Thoughtworks consultant, first defined the term “data mesh” as a data platform architecture designed to adapt to the all-pervasive nature of data in enterprises using a domain-oriented structure.

Here’s how Deloitte defines it:

The Data Mesh concept is a democratized approach of managing data where different business domains operationalize their own data, backed by a central and self-service data infrastructure.

The data mesh architecture challenges the traditional assumption that organizations should put all of their data in a single place — a monolithic architecture — to extract its true value.

A monolithic data platform, according to Zhamak Dehghani. Image source: Martin Fowler.

Instead, the data mesh architecture asserts that the full potential of big data can only be leveraged when it is distributed among the owners of domain-specific data.

The concept looks at data as a set of data products distributed across several repositories. Each business domain publishes its data as a data product, which a data owner and a cross-functional team manage.

For example, let’s consider Sales as a data domain. The owner of Sales data ensures its credibility, usability, and ease of access to the right users. Here, the owner is just like a product owner, and sales data is the product.

Dehghani asks organizations to think of data as a product. As such, the domain owner is now providing data as an asset to the rest of the organization. So, just like with product thinking, data-as-a-product should provide a delightful experience to the data scientists who want to find and use this data.

To sum it up, domain-specific teams assume ownership of their data and manage it, end-to-end, as a product — that’s the core premise of the data mesh.

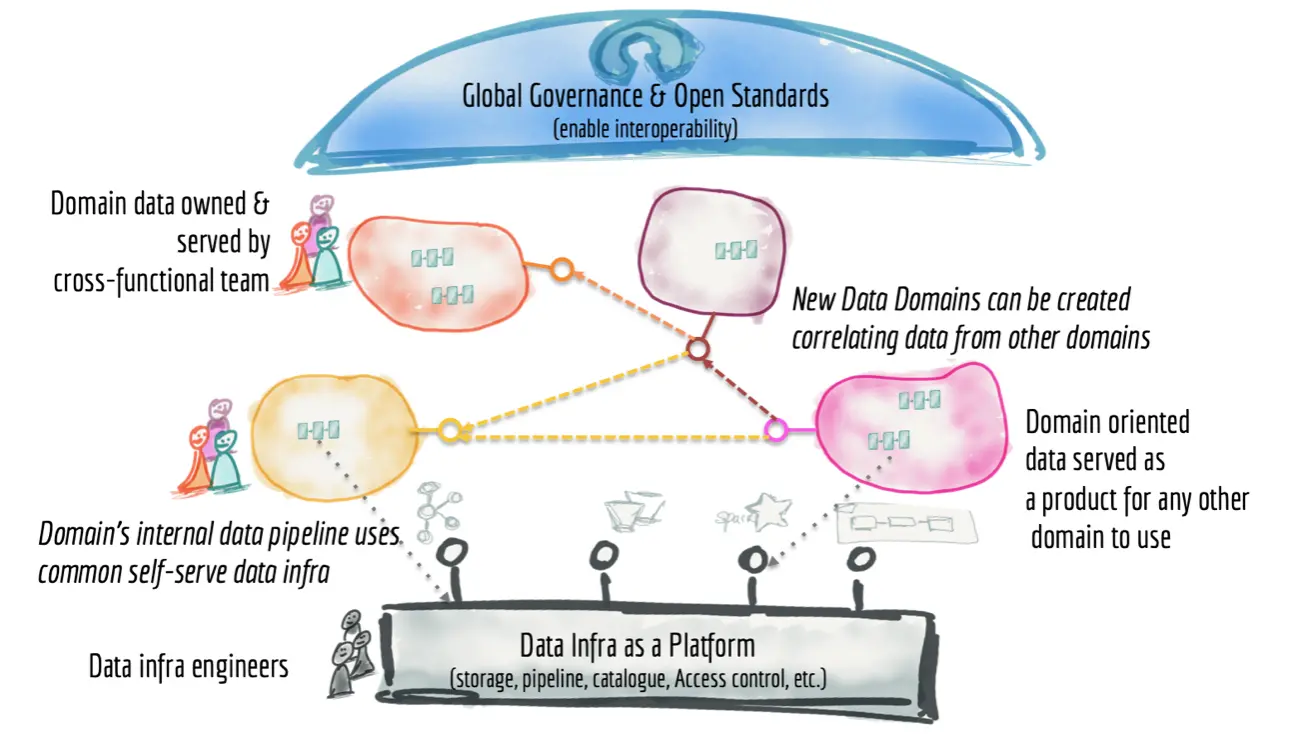

The data mesh architecture, according to Zhamak Dehghani. Image source: Martin Fowler.


Also, read → Modern Data Environment Uses Both Data Fabric And Data Mesh


Why should organizations consider using a data mesh architecture? #

As we’ve mentioned earlier, the data mesh architecture can turbocharge analytics by providing rapid access to fast-growing distributed domain sets.

This approach eliminates the challenges of data accessibility and availability at scale. For example, a central ETL pipeline can slow down when data teams have to run several transformations at once. Since business users depend on technologists (engineers and scientists) to get access to data and extract value from it, the data team deals with a lot of pressure. In such scenarios, the data team is largely operational — running around meeting demands that keep piling up.

The data mesh architecture puts the onus of extracting value from data on the data product owner, which frees up the technologists to pursue strategic tasks that enhance the value of data for the entire organization.

Moreover, it facilitates data democratization, empowering every data consumer — data scientists, analysts, business managers — to access, analyze and gain business insights from any data source, without needing help from data engineers.

According to the article State of the Data Lakehouse, 2024 by Business Wire, as of late 2023, 84% of organizations have either fully or partially implemented data mesh strategies, with 97% expecting further expansion in the coming year. The primary objectives for adopting data mesh include improving data quality (64%) and enhancing data governance (58%). Notably, data mesh initiatives are increasingly driven by business leaders and units (52%) rather than centralized IT teams, indicating a shift towards domain-oriented data ownership.

Also, read → Business outcomes drive data architecture strategy

Now let’s look at the guiding principles behind a data mesh architecture.

Core principles of a data mesh architecture #

According to Dehghani, there are four principles that any data mesh setup should embody:

- Domain-oriented decentralized data ownership and architecture

- Data as a product

- Self-serve data infrastructure as a platform

- Federated computational governance

1. Data Ownership #

The goal of the data mesh is to decentralize the entire data ecosystem. Achieving this requires delegating the responsibility of managing data to the folks who work closely with it, i.e., the data product owners. This makes it easier to keep the data updated and rapidly incorporate changes.

According to the article Pros and Cons of Data Mesh by PwC, transitioning to a data mesh requires a fundamental change in how data is perceived and managed. Organizations may face resistance as teams adjust to decentralized data ownership and governance models.

2. Data as a product #

Additionally, to simplify the discovery, understanding, and trustworthiness of data, the data mesh architecture employs the data-as-a-product principle. In this principle, the domain-specific data is a “product”, and its users are its “customers”.

Referring to our previous example, the product would be Sales data. The customers would be the scientists, analysts, and sales managers who use sales data for reporting, tracking their metrics, and deriving business insights.

If you’re still wondering, “how does this help data teams?”, here’s an analogy from Ken Collier, the head of Data Science and Engineering at Thoughtworks, to help you out:

Let’s consider insurance claims. People responsible for building the operational domains that deal with claims are also responsible for providing claims information as easily consumable, trustworthy data, whether as events or, you know, historical snapshots, to the rest of the organization.

3. Self-serve data platform #

The data mesh should be supported by a self-serve infrastructure to make data democratization a reality and make it easy to set up and run different data domains.

Such a platform lets all data owners set up polyglot storage (i.e., various forms of storing data) and helps them provide access to these domains securely. Moreover, the setup shouldn’t require any complex engineering skills or support from technologists.

Here’s how Dehghani puts it:

To make analytical data product development accessible to generalists, the self-serve platform should support any domain data product developer. It should lower the cost and specialization needed to build data products.

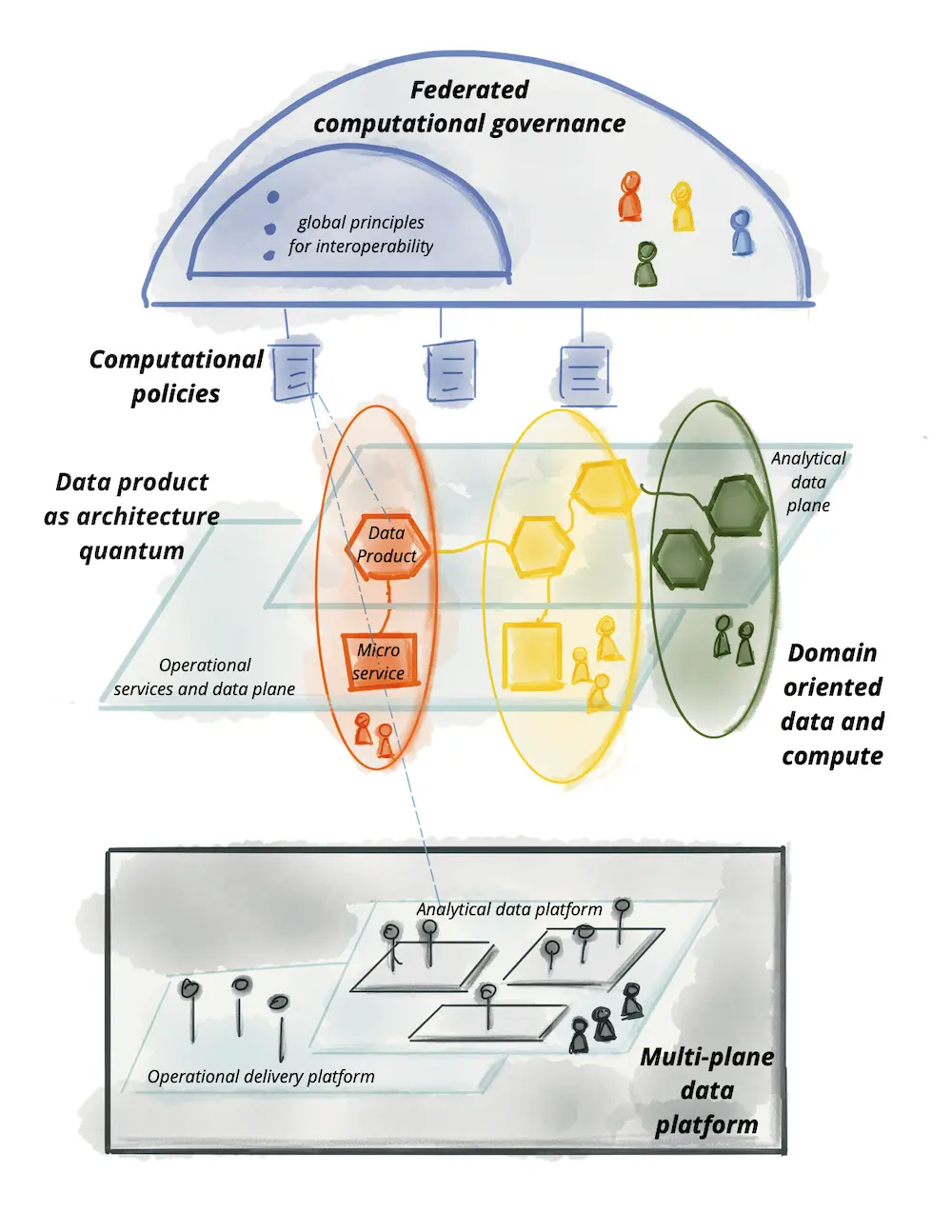

4. Federated computational governance #

The data mesh concept champions a federated computational governance model for seamless interoperability. A decentralized architecture doesn’t mean that data should live in silos.

Instead, the entire data ecosystem must be interoperable to extract meaning from data. That’s because everything from finding patterns to performing transformations requires all the data products to talk to each other.

Lastly, every data product should follow the standards set by the organization’s data governance program to avoid security and compliance issues.

Here’s how Dehghani explains it:

A data mesh implementation requires a governance model that embraces decentralization and domain self-sovereignty, interoperability through global standardization, dynamic topology, and automated execution of decisions by the platform.
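To make “automated execution of decisions by the platform” more concrete, here is a minimal sketch of governance expressed as code. It assumes that each domain publishes a small descriptor for its data product and that the platform runs every global policy against it. The `ProductDescriptor` class, the two policies, and the `evaluate` function are hypothetical names chosen for illustration, not part of any particular tool.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ProductDescriptor:
    """Hypothetical descriptor a domain team publishes for its data product."""
    name: str
    owner: str
    pii_columns: set[str] = field(default_factory=set)
    masked_columns: set[str] = field(default_factory=set)


# A global policy takes a descriptor and returns a list of violation messages.
Policy = Callable[[ProductDescriptor], list[str]]


def must_have_owner(product: ProductDescriptor) -> list[str]:
    """Global rule: every data product names an accountable owner."""
    return [] if product.owner.strip() else [f"{product.name}: no owner declared"]


def pii_must_be_masked(product: ProductDescriptor) -> list[str]:
    """Global rule: every column flagged as PII must also be masked."""
    unmasked = sorted(product.pii_columns - product.masked_columns)
    return [f"{product.name}: PII column '{col}' is not masked" for col in unmasked]


# Agreed once by the federated governance group, applied to every domain.
GLOBAL_POLICIES: list[Policy] = [must_have_owner, pii_must_be_masked]


def evaluate(product: ProductDescriptor) -> list[str]:
    """Run all global policies and collect their violations."""
    violations: list[str] = []
    for policy in GLOBAL_POLICIES:
        violations.extend(policy(product))
    return violations


sales = ProductDescriptor(
    name="sales.orders",
    owner="sales-data-team",
    pii_columns={"email"},
)
violations = evaluate(sales)
print(violations)
```

The domain keeps control of its descriptor, while the rules themselves are shared. A platform could run such checks on every deployment, so a decision made centrally is enforced without a central team reviewing each product by hand.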

Now let’s look at the components of such a distributed ecosystem.

Core components of the data mesh architecture #

The data mesh architecture requires several components, such as data sources, infrastructure, governance, and domain-oriented pipelines, to operate smoothly.

A representation of data mesh architecture and its components. Image source: Martin Fowler.

These components play a crucial role in ensuring:

- Universal interoperability: It’s the key to aggregating and correlating data across domains.

- Governance: A global governance program should map the standards, definitions, policies, and processes around data across domains.

- Observability: If you cannot observe data, you cannot trust it.

- Domain-agnostic standards: Such standards are essential for ensuring that data definitions are universal, without any room for ambiguity.

Now, let’s explore each component.

1. Domain-oriented data #

Going from a monolithic architecture to a data mesh requires organizations to rethink their data ecosystems completely.

To get the ball rolling, the first step is to group data by domains, which is also a best practice for good data governance. That means identifying the domains, which could include sales, finance and accounting, purchasing, marketing, and manufacturing.

Next, organizations should think about the interface. Each domain’s interface should facilitate interoperability and easy access for cross-domain analytics and reporting.

In addition, the architecture should resolve any friction or disputes with other domains. So, sales and financial data, for instance, shouldn’t contradict each other.

Decentralization can also lead to data duplication across domains, and the interface design should account for this problem to avoid redundancy and cut costs.
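One way to catch contradictions between domains is an automated reconciliation check. The sketch below is illustrative only: it assumes that the sales and finance domains each expose monthly revenue totals keyed by month, and the `reconcile` function and sample figures are hypothetical.

```python
from decimal import Decimal


def reconcile(sales_totals, finance_totals, tolerance=Decimal("0.01")):
    """Return the months where the two domains disagree.

    A month is reported when it is missing from one side or when the
    totals differ by more than the tolerance.
    """
    mismatches = {}
    for month in sorted(set(sales_totals) | set(finance_totals)):
        sales_value = sales_totals.get(month)
        finance_value = finance_totals.get(month)
        if (
            sales_value is None
            or finance_value is None
            or abs(sales_value - finance_value) > tolerance
        ):
            mismatches[month] = (sales_value, finance_value)
    return mismatches


# Made-up figures for illustration.
sales_totals = {"2024-01": Decimal("1200.00"), "2024-02": Decimal("950.50")}
finance_totals = {
    "2024-01": Decimal("1200.00"),
    "2024-02": Decimal("900.50"),
    "2024-03": Decimal("10.00"),
}

mismatches = reconcile(sales_totals, finance_totals)
for month, (sales_value, finance_value) in mismatches.items():
    print(month, sales_value, finance_value)
```

Running a check like this on a schedule surfaces disagreements early, before they reach a cross-domain report.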

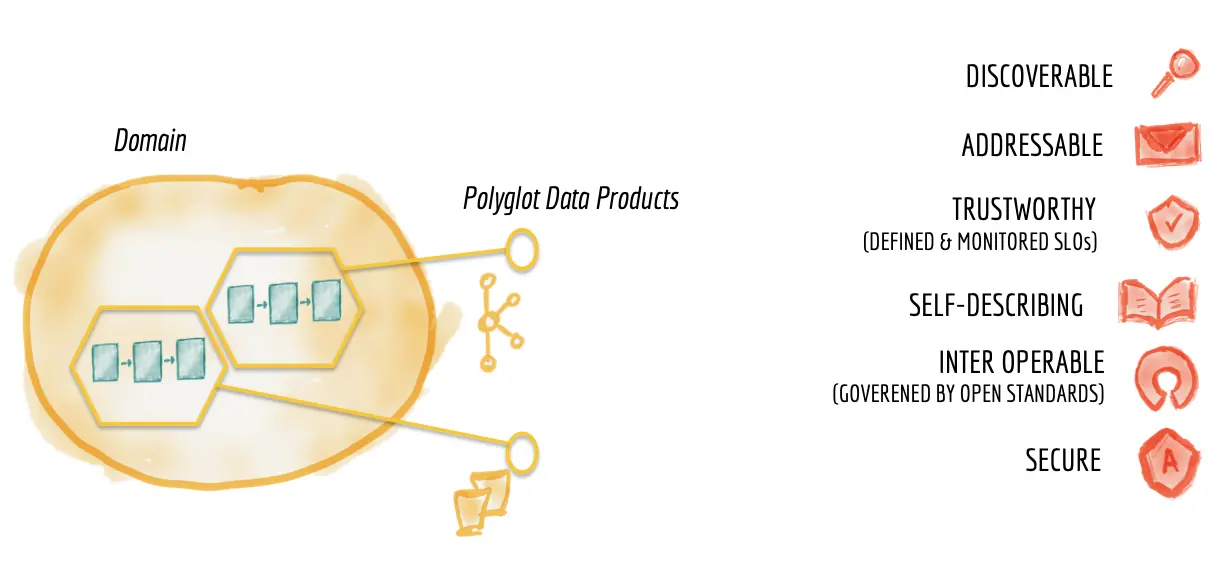

2. Data products #

In the data mesh architecture, the data product is the node that provides access to the analytical data of a domain as a product. This data product should be:

- Easy to discover and access (using a data catalog that crawls metadata and tracks lineage)

- Trustworthy (employing quality checks and profiling to weed out bad data)

- Easy to search and understand (adding context with business glossaries, metadata descriptions, and a Google-search-like interface to find data sets)

- Interoperable (allowing data to be correlated across domains for further analysis and reporting)

- Secure (ensuring security, privacy, and compliance with global regulations)

The characteristics of a data product. Image source: Martin Fowler.
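The “trustworthy” characteristic is the easiest one to illustrate with code. Here is a rough sketch of a profiling step that a data product could run before publishing new data. It assumes rows arrive as plain dictionaries, and the `profile` and `is_trustworthy` functions and the 10% null-rate threshold are hypothetical choices, not a standard.

```python
def profile(rows, key, required):
    """Compute simple quality metrics for a batch of rows."""
    total = len(rows)
    keys = [row.get(key) for row in rows]
    null_rates = {
        col: (sum(1 for row in rows if row.get(col) is None) / total if total else 0.0)
        for col in required
    }
    return {
        "row_count": total,
        "duplicate_keys": total - len(set(keys)),
        "null_rates": null_rates,
    }


def is_trustworthy(report, max_null_rate=0.1):
    """Pass only non-empty batches with unique keys and few missing values."""
    return (
        report["row_count"] > 0
        and report["duplicate_keys"] == 0
        and all(rate <= max_null_rate for rate in report["null_rates"].values())
    )


rows = [
    {"order_id": 1, "amount": 10.0, "region": "EU"},
    {"order_id": 2, "amount": None, "region": "US"},
    {"order_id": 2, "amount": 5.0, "region": "US"},
    {"order_id": 3, "amount": 7.5, "region": None},
]

report = profile(rows, key="order_id", required=["amount", "region"])
print(report)
print(is_trustworthy(report))
```

In this sample the batch fails the check because one order ID appears twice and a quarter of the values in each required column are missing.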

Furthermore, each data product has three core components:

- Code: For enabling functions such as data pipelines, APIs to access metadata, compliance, and access policies

- Data and metadata: For understanding and using data in various forms (graphs, tables, events) — polyglot data — along with the relevant context (i.e., metadata such as semantic definition, attributes, syntax)

- Infrastructure: For ensuring an ecosystem that lets users build, deploy and run code while facilitating storage and access
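The three components can be pictured as one deployable unit. The following sketch is a simplified model under stated assumptions: the class names, the `storage_uri` and `compute_profile` fields, and the example values are all hypothetical, and a real data product would carry far more detail.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

Row = dict[str, object]


@dataclass(frozen=True)
class Metadata:
    """Context that helps consumers understand the data."""
    description: str
    owner: str
    schema: dict[str, str]  # column name -> declared type


@dataclass(frozen=True)
class Infrastructure:
    """Where the product is stored and run (placeholder values only)."""
    storage_uri: str
    compute_profile: str


@dataclass
class DataProduct:
    name: str
    transform: Callable[[Iterable[Row]], list[Row]]  # the code component
    metadata: Metadata                               # the metadata component
    infrastructure: Infrastructure                   # the infrastructure component

    def serve(self, source_rows: Iterable[Row]) -> list[Row]:
        """Run the pipeline code and check the output against the schema."""
        rows = self.transform(source_rows)
        expected = set(self.metadata.schema)
        for row in rows:
            if set(row) != expected:
                raise ValueError(f"{self.name}: row does not match declared schema")
        return rows


def completed_orders(rows: Iterable[Row]) -> list[Row]:
    """Pipeline code: keep completed orders and drop internal fields."""
    return [
        {"order_id": row["order_id"], "amount": row["amount"]}
        for row in rows
        if row["status"] == "completed"
    ]


orders = DataProduct(
    name="sales.completed_orders",
    transform=completed_orders,
    metadata=Metadata(
        description="Completed sales orders",
        owner="sales-data-team",
        schema={"order_id": "string", "amount": "decimal"},
    ),
    infrastructure=Infrastructure(
        storage_uri="example://sales/completed_orders",
        compute_profile="small",
    ),
)

served = orders.serve([
    {"order_id": "A1", "amount": 20.0, "status": "completed"},
    {"order_id": "A2", "amount": 5.0, "status": "cancelled"},
])
print(served)
```

Keeping the schema next to the code lets the product verify its own output, which is one small way to keep the metadata honest.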

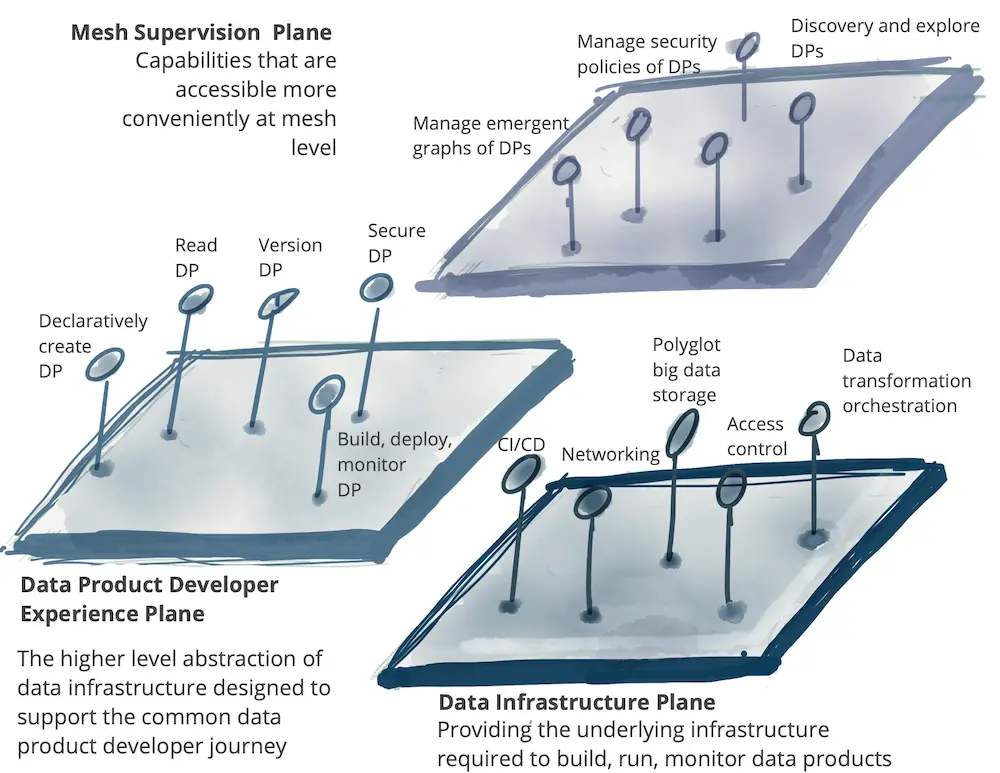

3. Data planes #

A data plane is a logical grouping of platform capabilities rather than a physical layer. A single plane can therefore be implemented by several physical interfaces, and together the planes expose what the self-serve data platform can do.

A self-serve platform can have several data planes depending on the user profile. Let’s look at three such possibilities.

1. Data infrastructure plane

This plane acts as the backbone of the data mesh architecture — everything you need to run the mesh. So, it oversees the entire data infrastructure lifecycle management and helps set up:

- Distributed storage

- Granular access controls

- Data orchestration and querying

2. Data product developer experience plane

This plane is like the main artery of the data mesh architecture. It supports the entire lifecycle of a data product — everything needed to build, deploy and manage a data product.

So, this plane acts as a declarative interface to help users run their workflows.
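A declarative interface means that the developer states what the data product needs and the platform works out how to provide it. The sketch below shows the idea with a hypothetical spec format and a hypothetical `plan` function that turns a spec into ordered provisioning steps. A real platform would execute these steps rather than return them as text.

```python
def plan(spec: dict) -> list[str]:
    """Translate a declarative data product spec into provisioning steps."""
    steps = [f"create storage '{spec['storage']}' for {spec['name']}"]
    for port in spec.get("output_ports", []):
        steps.append(f"expose output port '{port}'")
    for role in spec.get("readers", []):
        steps.append(f"grant read access to '{role}'")
    if spec.get("schedule"):
        steps.append(f"schedule pipeline '{spec['schedule']}'")
    return steps


# What a data product developer would write: the desired state only.
spec = {
    "name": "sales.orders",
    "storage": "object-store",
    "output_ports": ["sql", "events"],
    "readers": ["analysts"],
    "schedule": "daily",
}

steps = plan(spec)
for step in steps:
    print(step)
```

The developer never touches storage or access-control APIs directly, which is what keeps the specialization needed to build a data product low.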

3. Data mesh supervision plane

This plane helps with discovery, management, and governance at the mesh level. So, it dictates the rules, standards, definitions, and policies for all the data products within the mesh.

This plane is the key to enabling cross-domain analytics — running queries that pull data from multiple data products. It’s also essential for discovery — browsing across all data products.
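As a small illustration of a cross-domain query, the sketch below joins data from two separately stored domains without first copying it into a shared table. It uses Python’s built-in `sqlite3` module, with two attached in-memory databases standing in for the sales and support data products. This is only a stand-in for whatever query engine a real mesh would use, and the table and column names are made up.

```python
import sqlite3

# One connection plays the role of the mesh-level query interface.
conn = sqlite3.connect(":memory:")

# Each attached database stands in for a separately owned data product.
conn.execute("ATTACH DATABASE ':memory:' AS sales")
conn.execute("ATTACH DATABASE ':memory:' AS support")

conn.execute("CREATE TABLE sales.orders (customer_id TEXT, amount REAL)")
conn.execute("CREATE TABLE support.tickets (customer_id TEXT, severity TEXT)")

conn.executemany(
    "INSERT INTO sales.orders VALUES (?, ?)",
    [("c1", 100.0), ("c1", 50.0), ("c2", 30.0)],
)
conn.executemany(
    "INSERT INTO support.tickets VALUES (?, ?)",
    [("c1", "high"), ("c2", "low"), ("c2", "low")],
)

# Aggregate inside each domain first, then join on the shared customer key.
query = """
SELECT o.customer_id, o.revenue, t.ticket_count
FROM (SELECT customer_id, SUM(amount) AS revenue
      FROM sales.orders GROUP BY customer_id) AS o
JOIN (SELECT customer_id, COUNT(*) AS ticket_count
      FROM support.tickets GROUP BY customer_id) AS t
  ON t.customer_id = o.customer_id
ORDER BY o.customer_id
"""
result = conn.execute(query).fetchall()
print(result)
```

The join only works because both domains use the same `customer_id` values, which is exactly the kind of shared standard the supervision plane is meant to enforce.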

A representation of multiple planes for a self-serve data platform. Image source: Martin Fowler.

4. Data Governance #

As mentioned earlier, one of the key tenets of a distributed architecture with several independent data products is federated data governance. Standardized, domain-agnostic governance is essential to ensure interoperability, eliminate data silos and ensure compliance.

To set up such a federated model, the data product owners must form a committee — for example, a data governance committee or council. This committee can help the owners “decide how to decide when it comes to data”.

For instance, some decisions, such as data asset definitions or rules for data capture and storage, should be global. The definition of a customer should be universal for the organization.

Other decisions, such as the transformations that a data asset should undergo, could be local, i.e., depending on the domain. For example, the process by which a user becomes a customer is something that the domain could decide.

Additionally, some data elements span several domains. These elements are called polysemes, and the federated governance committee is responsible for modeling them.

Making such decisions without encroaching on the autonomy or independence of a domain is crucial for a federated model to be effective.
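The split between global and local decisions can be sketched in a few lines. In this hypothetical example, the organization agrees on one format for a customer identifier (the global decision), while each domain decides how its own records map to that identifier (the local decision). The `CUST-` format, the field names, and the mapping functions are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerId:
    """Global definition: one identifier format for the whole organization."""
    value: str

    def __post_init__(self):
        prefix, digits = self.value[:5], self.value[5:]
        if prefix != "CUST-" or not digits.isdigit():
            raise ValueError(f"invalid customer id: {self.value!r}")


def sales_to_customer(record: dict) -> CustomerId:
    """Local decision in the sales domain: derive the id from an account number."""
    return CustomerId(f"CUST-{int(record['account_no']):06d}")


def support_to_customer(record: dict) -> CustomerId:
    """Local decision in the support domain: normalize a stored reference."""
    return CustomerId(record["customer_ref"].upper())


sales_record = {"account_no": "42", "status": "won"}
support_record = {"customer_ref": "cust-000042", "ticket": "T-9"}

# Both domains resolve to the same polyseme, so their data can be correlated.
same_customer = sales_to_customer(sales_record) == support_to_customer(support_record)
print(same_customer)
```

Each domain keeps its own internal representation, and only the shared identifier has to be agreed on by the governance committee.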

How Atlan Supports Data Mesh Concepts #

Atlan helps organizations implement data mesh principles by enabling domain teams to create and manage data products that can be easily discovered and consumed by other teams.

Data products in Atlan are scored based on data mesh principles such as discoverability, interoperability, and trust, providing organizations with insights into their data mesh maturity.

Atlan’s automated lineage tracking and metadata management capabilities further support data mesh implementation by providing a comprehensive understanding of data flows and dependencies across domains.

How Autodesk Activates Their Data Mesh with Snowflake and Atlan #

- Autodesk, a global leader in design and engineering software and services, created a modern data platform to better support their colleagues’ business intelligence needs

- Contending with a massive increase in data to ingest, and demand from consumers, Autodesk’s team began executing a data mesh strategy, allowing any team at Autodesk to build and own data products

- Using Atlan, 60 domain teams now have full visibility into the consumption of their data products, and Autodesk’s data consumers have a self-service interface to discover, understand, and trust these data products

Book your personalized demo today to find out how Atlan supports data mesh concepts and how it can benefit your organization.

Conclusion on Data Mesh Architecture #

We live in times where cloud computing and digital transformation have become the norm for organizations to thrive. The amount and complexity of data amassed in such an environment will only continue to grow exponentially.

If data has to keep pace with business, conventional monolithic architectures for data storage, processing, and governance won’t cut it. Instead, organizations must decentralize their data ecosystem using architectures like the data mesh and think of data as a product — the most important product within their enterprise.

However, decentralization doesn’t mean isolation. So, all the data domains should be interconnected using a unified data platform like Atlan that:

- Simplifies discovery

- Enables cross-domain querying with a visual query builder

- Ensures granular access controls

- Facilitates global governance

FAQs about Data Mesh Architecture #

1. What are the core data mesh principles? #

Data mesh is built on four fundamental principles: domain-oriented decentralized data ownership, data as a product, self-serve data infrastructure, and federated computational governance. These principles aim to improve data accessibility, governance, and scalability across complex organizations.

2. How does data mesh architecture benefit a business over centralized data lakes? #

Unlike centralized data lakes, a data mesh architecture promotes decentralized data ownership, empowering individual business domains to manage and govern their data. This model improves scalability, fosters faster decision-making, and enhances data relevance by keeping it closer to the source of creation.

3. What steps are essential to implement data mesh principles effectively? #

Key steps include establishing domain-oriented data ownership, treating data as a product with specific owners and consumers, building a self-serve data infrastructure, and setting up federated computational governance. Each step supports scalability, consistent data governance, and improved access to high-quality data.

4. What are the potential challenges when adopting data mesh principles? #

Common challenges include managing decentralized governance, ensuring consistent data standards across domains, balancing local autonomy with organizational goals, and setting up a scalable infrastructure to support self-serve data needs. Addressing these challenges requires a well-planned governance strategy and stakeholder buy-in.

5. How does data mesh improve data governance and security? #

Data mesh enhances data governance through federated computational governance, which enforces global standards while allowing domain-specific policies. This decentralized approach improves data security by granting data ownership at the domain level, ensuring compliance and accountability.

Data mesh architecture: Related reads #

- Data Mesh Vs. Data Lake: Definition, principles, and architecture

- What is Data Mesh: Definition, architecture, benefits, and challenges

- Data Warehouse vs Data Lake vs Data Lakehouse: What are the key differences?

- Data Fabric vs Data Mesh: Definition, architecture, benefits, and use cases

- Data Observability & Data Mesh: How Are They Related?

- Forrester on Data Mesh: Approach, Characteristics, Use Cases

- Databricks Data Mesh: Native Features and Atlan Integration

- Best Data Mesh Articles: The Ultimate Guide in 2025

- Data Mesh vs Data Warehouse: How Are They Different?

- Data Mesh vs Data Mart - How & Why Are They Different?

- Data Mesh vs Data Vault: Key Differences, Use Cases, & Examples

- How to Implement Data Mesh From a Data Governance Perspective?

- Data Mesh Principles: 4 Core Pillars & Logical Architecture

- Data Mesh Setup and Implementation: Ultimate Guide for 2025
