Data Catalog Architecture: Insights into Key Components, Integrations, and Open Source Examples

Data catalog architecture refers to the components that gather, manage, and organize metadata to help you discover, understand, interpret, and use data assets.

This guide will explore these components — a metadata store, search engine, frontend, and backend application — and also look at integrations essential for cataloging.

Table of contents #

- Data catalog architecture: Core components

- Data catalog architecture: Integrations and support

- Popular open source tools and architectures

- Summary

- Data catalog architecture: Related reads

Data catalog architecture: Core components #

A well-designed data catalog architecture has four vital components:

- A metadata store: The heart of the data catalog architecture, a database that stores metadata from various sources while preserving its connections, history, and critical details

- A search engine: The key to indexing and looking up all kinds of metadata, ensuring seamless searchability

- A backend application: The component that retrieves metadata from data sources, updates the metadata store, and connects with external tools within the data ecosystem

- A frontend application: The component that acts as the portal, offering accessibility and usability to business users

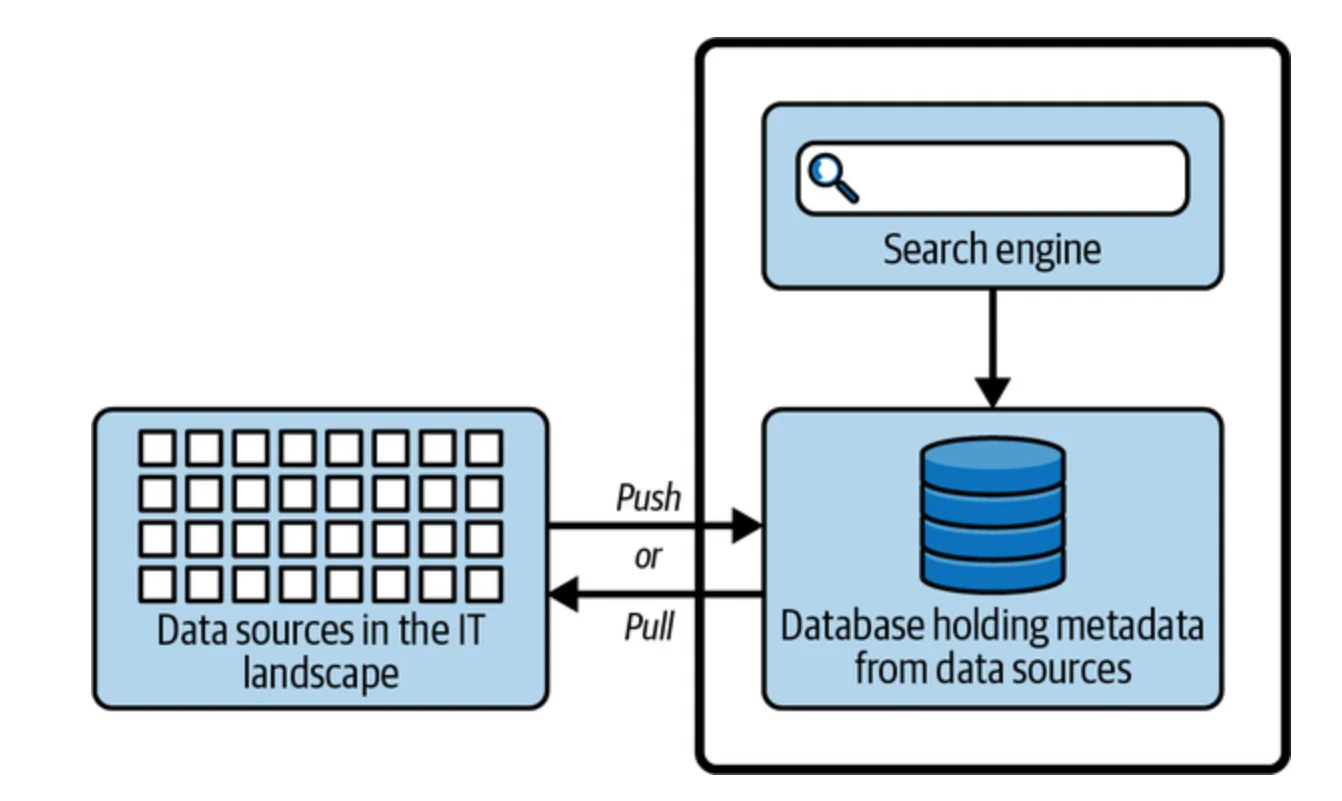

A high-level view of the data catalog architecture. - Source: The Enterprise Data Catalog by Ole Olesen-Bagneux.

These components work together to support essential features such as data discovery, understanding, governance, and collaboration.

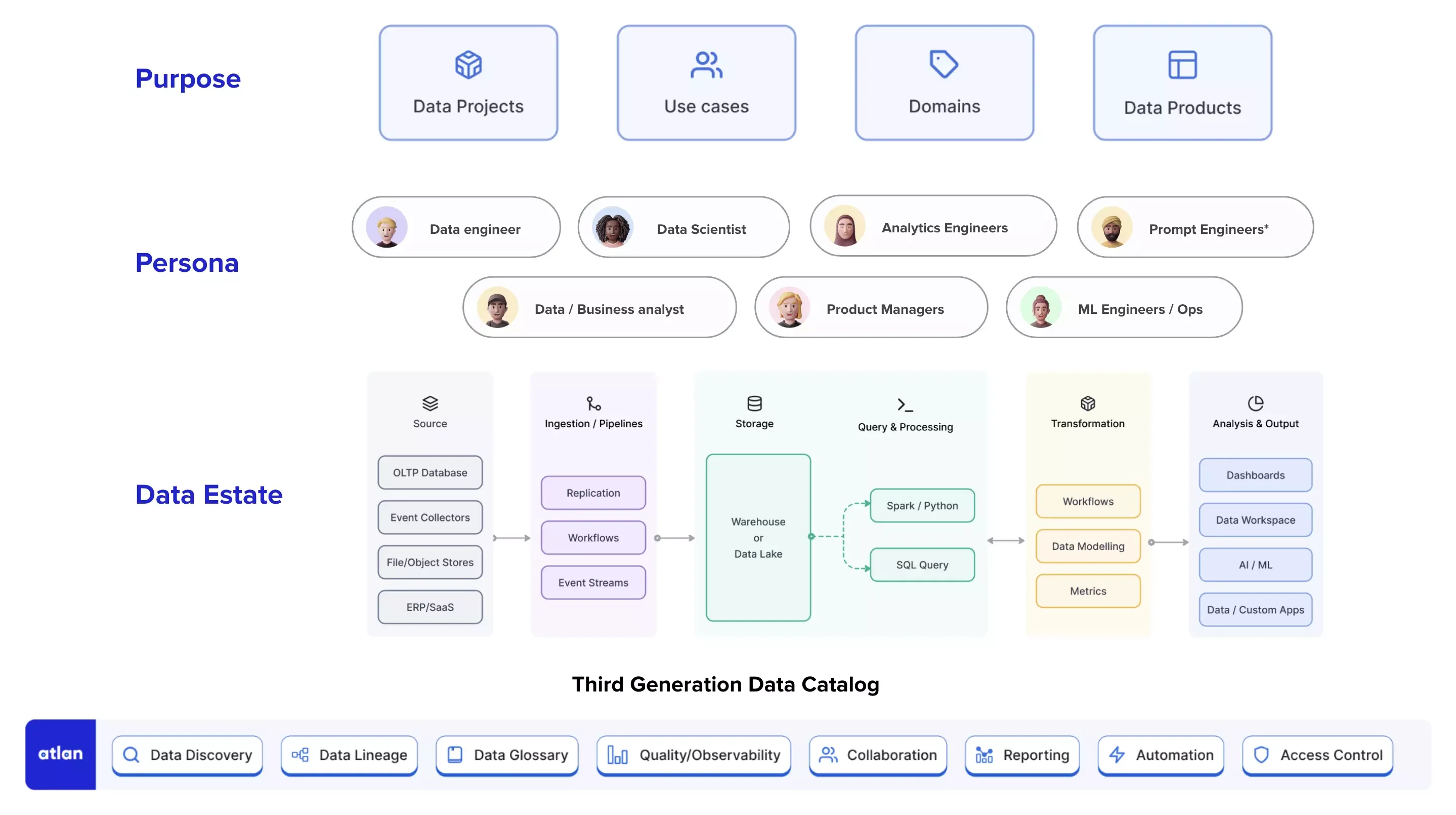

How a third-gen data catalog architecture encompasses your data estate, people, and processes. - Image by Atlan.

Let’s explore each data catalog architecture component in detail.

The metadata store #

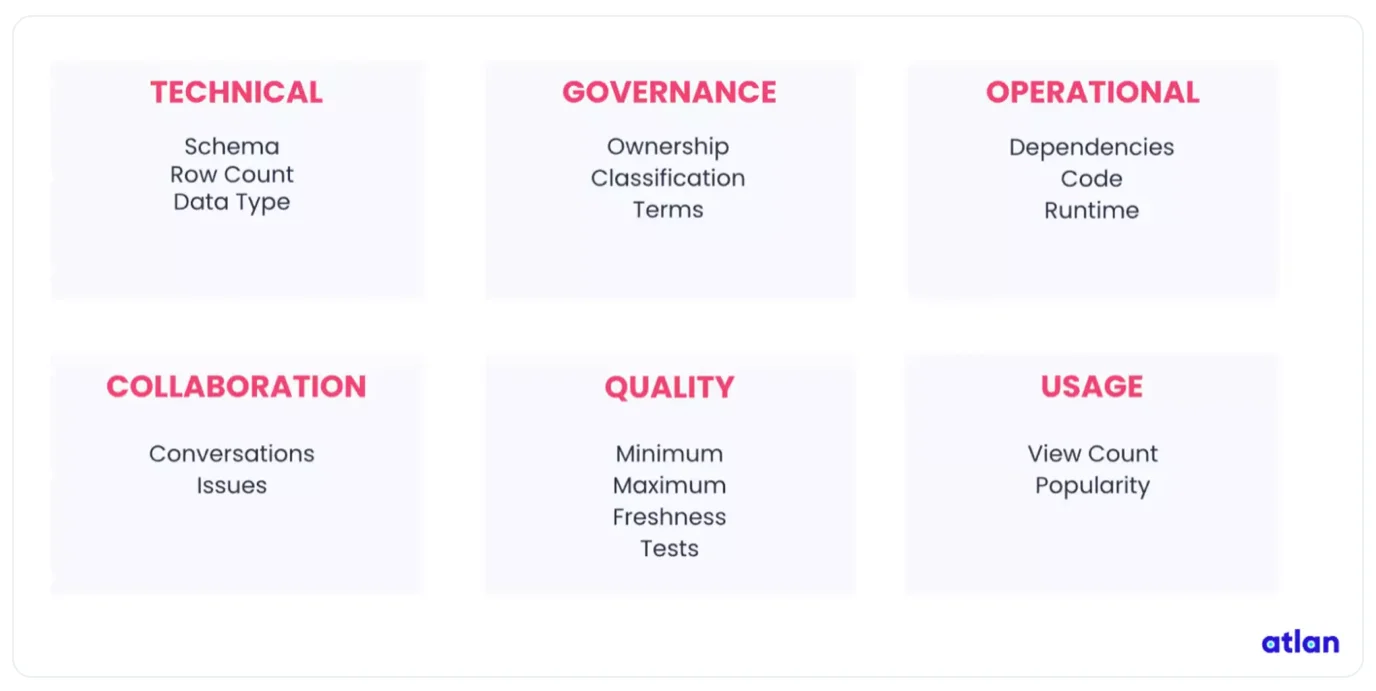

The metadata store is responsible for storing all relevant metadata related to your data assets. This includes technical, governance, operational, collaboration, quality, and usage metadata.

The six types of metadata - Image by Atlan.

Read more → Types of metadata

The metadata store could be thought of as the brain/nerve center of your data ecosystem.

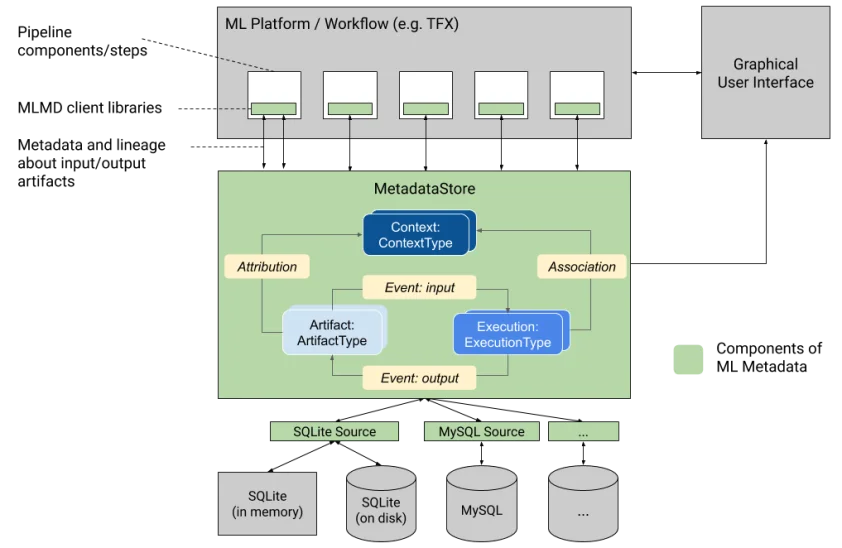

In most cases, the main repository uses open-source technology, such as MySQL or PostgreSQL, to store essential metadata.

An overview of a metadata store. - Source: Neptune.ai

However, some organizations might choose NoSQL options for scalability purposes.

For instance, Atlan’s platform uses PostgreSQL as its SQL database, Redis as a cache layer, and Apache Cassandra, a wide-column NoSQL database, to store its metastore’s data.

Read more → Atlan architecture and platform components

Graph databases, such as Neo4j and JanusGraph, can serve as secondary stores for data asset relationships, data lineage, and other connected information.
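To make the idea of a relational metadata store concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for MySQL or PostgreSQL. The table names, column names, and sample assets are hypothetical and chosen only for illustration; they do not reflect the schema of any particular catalog.

```python
import sqlite3

# Hypothetical schema: one table for assets, one for lineage edges.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE asset (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE,
    asset_type TEXT NOT NULL,
    owner TEXT,
    description TEXT
);
CREATE TABLE lineage (
    upstream_id INTEGER NOT NULL REFERENCES asset(id),
    downstream_id INTEGER NOT NULL REFERENCES asset(id),
    PRIMARY KEY (upstream_id, downstream_id)
);
""")

def add_asset(name, asset_type, owner=None, description=None):
    """Insert one asset and return its generated id."""
    cur = conn.execute(
        "INSERT INTO asset (name, asset_type, owner, description) "
        "VALUES (?, ?, ?, ?)",
        (name, asset_type, owner, description),
    )
    return cur.lastrowid

def link(upstream_id, downstream_id):
    """Record that downstream_id is derived from upstream_id."""
    conn.execute("INSERT INTO lineage VALUES (?, ?)", (upstream_id, downstream_id))

def downstream_of(name):
    """Return the names of assets directly derived from the named asset."""
    rows = conn.execute(
        """
        SELECT d.name
        FROM asset u
        JOIN lineage l ON l.upstream_id = u.id
        JOIN asset d ON d.id = l.downstream_id
        WHERE u.name = ?
        ORDER BY d.name
        """,
        (name,),
    ).fetchall()
    return [row[0] for row in rows]

# Illustrative data only.
orders = add_asset("raw.orders", "table", owner="data-eng")
daily = add_asset("analytics.daily_revenue", "table", owner="analytics")
dash = add_asset("Revenue dashboard", "dashboard", owner="analytics")
link(orders, daily)
link(daily, dash)

print(downstream_of("raw.orders"))
```

A relational layout like this handles one-hop lookups well. Multi-hop relationship queries are where a graph database tends to be the more natural fit.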

The search engine #

The search engine component plays a critical role in cataloging by indexing large amounts of structured, semi-structured, and unstructured data sources for search and discovery purposes.

Modern, AI-powered data catalogs are equipped with search capabilities that learn from your behavior, and recognize patterns and connections to present intelligent search results and recommendations.

For instance, they might display related data assets. Think of it along the lines of the “People also ask” and “Searches related to…” sections on Google search.

Ole Olesen-Bagneux, the author of The Enterprise Data Catalog, equates the search component to a company’s search engine.

“The search engines on the web index the web, and therefore collective, societal knowledge. The company search engine will do the same thing for a company. The company search engine will remember, and it will answer when we ask.”

Technologies such as database full-text indexes, Elasticsearch, Apache Solr, Lucene, and Algolia can be used to enable these powerful search capabilities.

For instance, Atlan uses Elasticsearch to index data and drive its search functionality.

Elasticsearch uses a structure based on an inverted index for full-text querying, which is particularly efficient for searching huge amounts of log or event data.

Apache Lucene is the underlying text analysis and search library, and Apache Solr is a search platform built on it that is often used in enterprise search applications. Algolia is a hosted search and discovery API that aims to optimize the speed and relevance of its search results.

By effectively indexing and searching metadata, the search layer in a data catalog serves to unify all data assets, creating a single source of truth. This eliminates information silos and offers previously unavailable insights, thereby enhancing the overall analytical efficiency of the organization.
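The inverted index mentioned above can be illustrated with a toy example. This is only a sketch of the core idea (a map from each term to the documents containing it), not how Elasticsearch or Lucene are actually implemented. The asset names and descriptions are made up.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index

def search(index, query):
    """Return ids of documents containing every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result

# Hypothetical asset descriptions standing in for catalog metadata.
docs = {
    "orders": "Raw customer orders table from the checkout service",
    "daily_revenue": "Daily revenue aggregated from customer orders",
    "customers": "Customer dimension table with contact details",
}
index = build_index(docs)

print(sorted(search(index, "customer orders")))
print(sorted(search(index, "table")))
```

Because lookups go from term to documents, query cost depends on the number of matching terms rather than on scanning every description. Production engines add relevance scoring, stemming, and typo tolerance on top of this.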

The backend application layer #

The backend application layer in a data catalog system is responsible for:

- Ingesting metadata from different data sources

- Applying metadata updates made through the frontend

- Integrating with other tools in your data stack

Let’s explore this further.

1. Metadata ingestion and updates

Metadata ingestion can be done using pull-based and push-based methods.

In a pull-based approach, the backend application periodically polls data sources to extract metadata. In contrast, in a push-based approach, data sources notify the backend application of changes, which then triggers metadata extraction.

This ingestion process involves not only gathering metadata but also updating the metadata store based on inputs from the frontend application. This helps keep the metadata current and consistent.
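The difference between the two ingestion styles can be sketched in a few lines. Everything here is hypothetical: `FakeWarehouse` stands in for a real data source, and `MetadataStore` for the catalog's store. A real backend would use source-specific connectors and a scheduler or message queue.

```python
class MetadataStore:
    """In-memory stand-in for the catalog's metadata store."""
    def __init__(self):
        self.assets = {}

    def upsert(self, name, metadata):
        # Merge new metadata into whatever is already recorded.
        self.assets[name] = {**self.assets.get(name, {}), **metadata}

class FakeWarehouse:
    """Hypothetical data source that can be polled or can emit events."""
    def __init__(self):
        self.tables = {}
        self.listeners = []

    def list_tables(self):
        return dict(self.tables)

    def subscribe(self, callback):
        self.listeners.append(callback)

    def create_table(self, name, columns):
        self.tables[name] = {"columns": list(columns)}
        for callback in self.listeners:
            callback(name, self.tables[name])  # push notification

def pull_once(source, store):
    """One polling cycle; a scheduler would call this periodically."""
    for name, metadata in source.list_tables().items():
        store.upsert(name, metadata)

def register_push(source, store):
    """Have the source notify the store whenever something changes."""
    source.subscribe(store.upsert)

warehouse = FakeWarehouse()
pull_store, push_store = MetadataStore(), MetadataStore()
register_push(warehouse, push_store)

warehouse.create_table("orders", ["id", "amount"])
seen_by_push = "orders" in push_store.assets        # updated immediately
seen_by_pull_before = "orders" in pull_store.assets  # not yet polled

pull_once(warehouse, pull_store)
seen_by_pull_after = "orders" in pull_store.assets

print(seen_by_push, seen_by_pull_before, seen_by_pull_after)
```

The trade-off shown here is the usual one: push gives fresher metadata but requires the source to emit change events, while pull works with any source that can be queried but lags by up to one polling interval.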

2. Integrating with other data tools

Beyond data ingestion and updates, the backend application also plays a crucial role in integrating with other tools in the organization’s data stack. This could include:

- Orchestration engines for automating and managing complex data workflows

- Data quality frameworks for ensuring the accuracy and reliability of data

- Observability platforms for monitoring the health and performance of the data catalog system

- Chat engines for facilitating collaboration and communication among users

Furthermore, the backend application enables direct engagement with data lake storage using SQL, Python, Java, etc., without the need for IT operations teams to prepare the data.

This allows analysts, engineers, scientists, and developers to interact with data more swiftly and independently, accelerating data exploration and analysis.

A data catalog’s architecture and its backend must allow engineers and developers to interact with data swiftly. - Source: Twitter.

By providing an analytical compute framework, the backend application empowers organizations to work at scale, while still permitting power analysts to use agile tools and methodologies.

Some organizations may employ development sandboxes within existing Online Analytical Processing (OLAP) systems, while others may opt for cloud-based virtualization platforms like Amazon WorkSpaces, possibly coupled with powerful GPU instances for faster data processing.

By catering to both the operational and analytical needs of the organization, the backend application enhances the overall efficiency and flexibility of the data catalog system, while preserving the necessary security controls.

The frontend application layer #

The frontend application of a data catalog is the primary interface through which business users, data stewards, and data scientists interact with the data catalog. It translates the complexities of data assets and metadata into a user-friendly, intuitive, and interactive experience.

The notable aspects of this layer are its primary interaction method and design approach. Let’s explore each aspect further.

1. Primary interaction method

As the principal mode of interaction, the frontend application facilitates search, exploration, and understanding of data assets.

It provides a rich user interface that allows users to search for data assets, view comprehensive metadata, preview data, and even annotate or update metadata when required.

2. Responsive and accessible design

Frontend applications often follow responsive design principles, ensuring that the interface is user-friendly and works well on any device — from desktops to tablets to mobile phones. This increases accessibility and allows users to interact with the data catalog anytime, anywhere.

So, design should be integral rather than an afterthought.

Airbnb on designing user interfaces - Source: Slideshare.

Data catalog architecture: Integrations and support #

Besides search and discovery, data catalogs also facilitate:

- Data governance and compliance for data usage and trust

- Data quality and profiling to enhance data value

- Data lineage to map data flows

- Documentation to capture tribal knowledge and provide a rich context

These capabilities may either be built into the catalog (i.e., native) or work alongside complementary data tools via connectors (i.e., integrations).

We can break down each of them so you know what they entail.

Governance and compliance for data usage and trust #

Data governance and compliance are paramount in any data catalog, as they help manage and organize data usage throughout an organization. These processes ensure that data remains trustworthy, accessible, and secure, all while adhering to internal guidelines and external regulations.

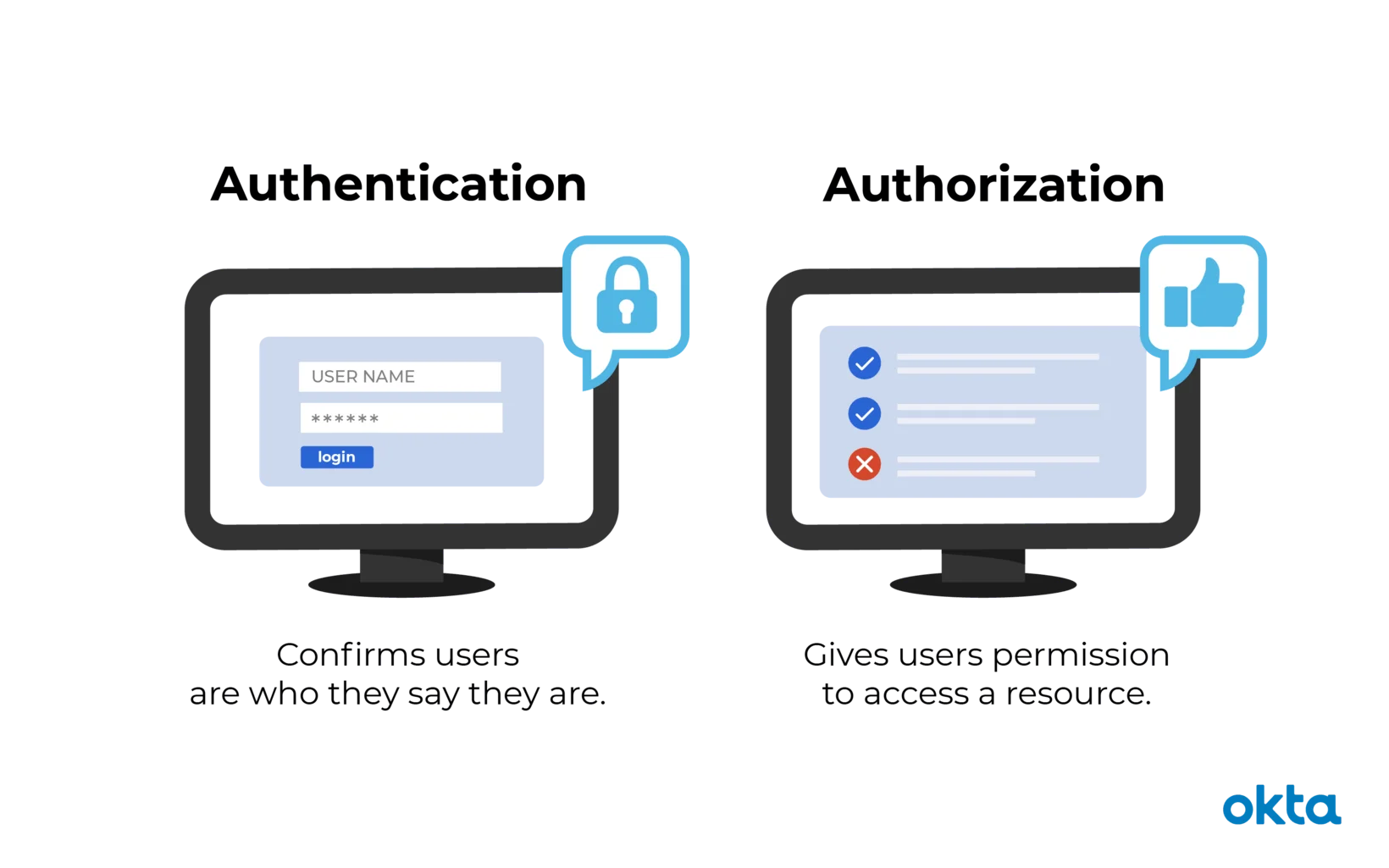

A key aspect of data governance is authentication and authorization. These mechanisms verify the identity of users and determine what data they can access.

Authentication and Authorization: Key aspects of any data catalog’s data governance. - Source: Okta.

Also read → How federated authentication and authorization streamline data security

While some data catalogs have built-in systems for this, others may integrate with third-party services such as Okta, Auth0, Keycloak, Open Policy Agent, Sentinel, or Apache Ranger. These integrations help manage complex user roles and permissions, ensuring that only authorized individuals have access to specific data assets.
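As a rough illustration of the authorization side, the sketch below checks a user's roles against an action and adds an extra condition for assets tagged as containing PII. The role names, actions, tags, and the `pii_cleared` flag are all assumptions made for this example. Services such as Okta or Open Policy Agent express policies in their own formats.

```python
# Hypothetical role-to-permission mapping for a catalog.
ROLE_PERMISSIONS = {
    "viewer": {"read_metadata"},
    "steward": {"read_metadata", "edit_metadata"},
    "analyst": {"read_metadata", "preview_data"},
}

def is_allowed(user, action, asset):
    """Return True if any of the user's roles grants the action on the asset."""
    granted = set()
    for role in user["roles"]:
        granted |= ROLE_PERMISSIONS.get(role, set())
    if action not in granted:
        return False
    # Extra rule: previewing PII-tagged data needs an explicit clearance.
    if action == "preview_data" and "pii" in asset["tags"]:
        return user.get("pii_cleared", False)
    return True

customers = {"name": "crm.customers", "tags": {"pii"}}
revenue = {"name": "analytics.daily_revenue", "tags": set()}

analyst = {"name": "ana", "roles": ["analyst"]}
cleared_analyst = {"name": "raj", "roles": ["analyst"], "pii_cleared": True}
viewer = {"name": "vic", "roles": ["viewer"]}

print(is_allowed(analyst, "preview_data", revenue))
print(is_allowed(analyst, "preview_data", customers))
print(is_allowed(cleared_analyst, "preview_data", customers))
print(is_allowed(viewer, "edit_metadata", revenue))
```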

Third-party services also play a critical role in maintaining compliance with data protection regulations like the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA).

For example, data catalogs can integrate with tools that help manage consent, data subject rights, and data minimization.

Let’s take a look at some of these with relevant examples.

Consent Management #

In the context of GDPR and HIPAA, “consent” broadly means obtaining permission from individuals before collecting, using, or disclosing their personal data.

Consent management solutions allow organizations to track which users have consented to certain uses of their data and ensure that they only use that data for those specific purposes.

Furthermore, by discovering and classifying sensitive information within the data catalog, the organization can establish a robust data inventory for effective data governance and overall regulatory adherence.
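A purpose-based consent check can be sketched as follows. The consent register, subject ids, and purposes are invented for illustration. A real consent management platform would also track timestamps, withdrawal, and legal basis.

```python
# Hypothetical consent register: subject id -> purposes consented to.
CONSENTS = {
    "user-1": {"billing", "marketing"},
    "user-2": {"billing"},
}

def may_process(subject_id, purpose, consents=CONSENTS):
    """True only if the subject has consented to this specific purpose."""
    return purpose in consents.get(subject_id, set())

def filter_for_purpose(records, purpose, consents=CONSENTS):
    """Keep only records whose subject consented to the given purpose."""
    return [r for r in records if may_process(r["subject_id"], purpose, consents)]

records = [
    {"subject_id": "user-1", "email": "ada@example.com"},
    {"subject_id": "user-2", "email": "bob@example.com"},
    {"subject_id": "user-3", "email": "eve@example.com"},  # no consent on file
]

marketing_list = filter_for_purpose(records, "marketing")
billing_list = filter_for_purpose(records, "billing")
print([r["subject_id"] for r in marketing_list])
print([r["subject_id"] for r in billing_list])
```

Note that a subject with no entry in the register is treated as not having consented, which is the conservative default.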

Data subject rights #

Data subject access requests (DSARs) are the mechanism individuals use to exercise their right to view the personal data an organization holds about them. Related data subject rights let them request corrections or deletions.

Data subject rights management software helps companies handle DSARs efficiently while staying within the boundaries of relevant regulations. Integration with a data catalog allows such systems to discover data sources quickly so that businesses can find and fulfill individual data access requests as required.

For example, Microsoft uses Microsoft Purview to assist with GDPR and other data privacy regulation compliance. This tool allows them to locate and classify personally identifiable information throughout Microsoft’s cloud services and products — Azure Data Lake Storage, Azure SQL Database, Power BI, etc.

Purview also facilitates the handling of individual data requests, like accessing, erasing or moving private data.

Data masking and anonymization #

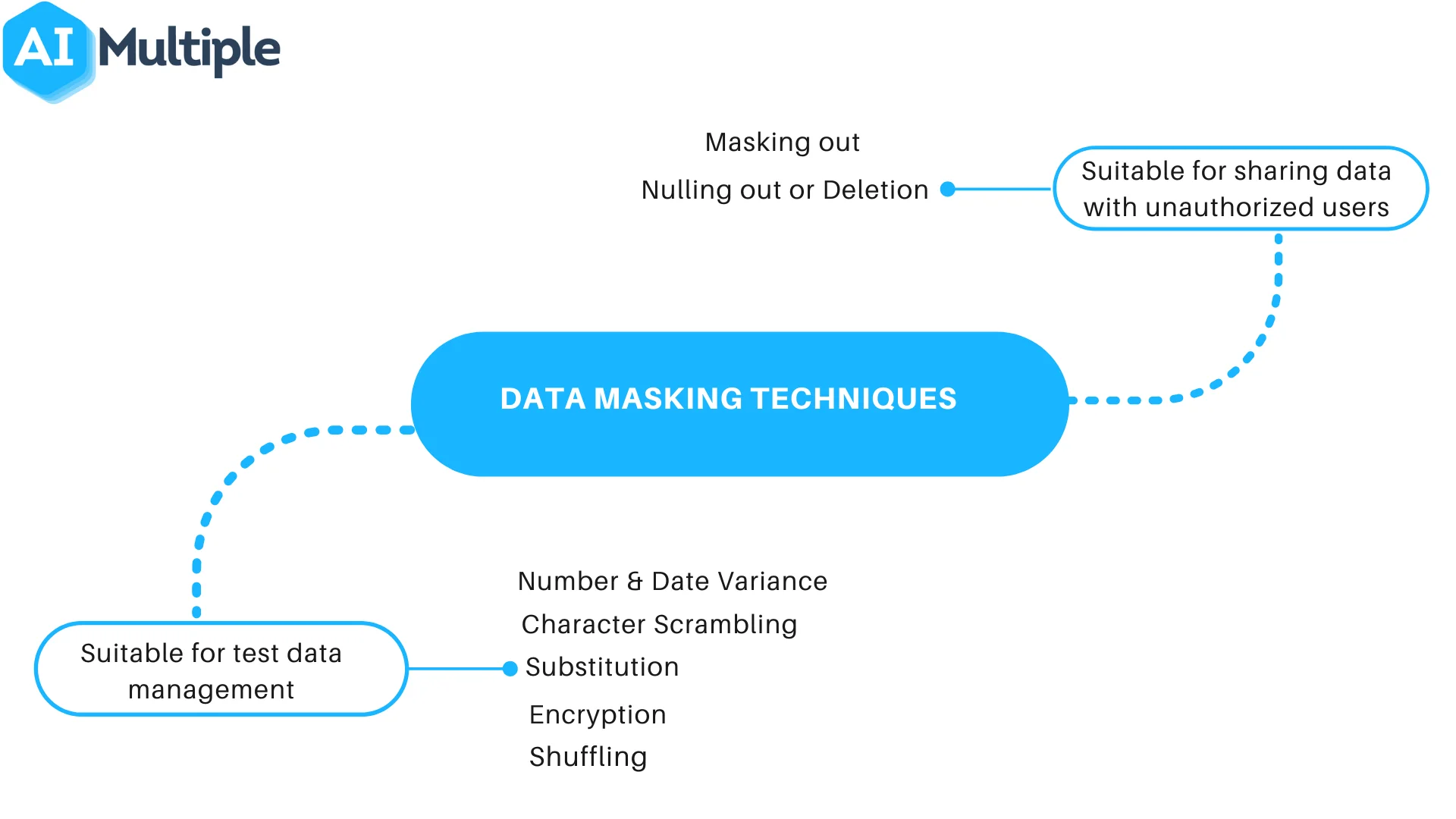

To prevent data misuse and protect sensitive information, data catalogs can implement data masking and anonymization policies.

These policies allow users to preview and share data without exposing PII or other sensitive data. This is particularly important in environments where data is shared broadly across the organization or with external parties.

Data catalog’s data masking techniques - Source: AIMultiple.
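A column-level masking policy for data previews might look like the sketch below. The policy format, column names, and salt are assumptions for the example. Note that salted hashing is pseudonymization rather than full anonymization, since the same input always maps to the same token.

```python
import hashlib

def mask_email(value):
    """Keep the first character and the domain; star out the rest."""
    local, sep, domain = value.partition("@")
    if not sep:
        return "*" * len(value)
    return local[:1] + "*" * (len(local) - 1) + "@" + domain

def pseudonymize(value, salt):
    """Replace a value with a stable, salted hash token (not reversible here)."""
    digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
    return digest[:12]

def mask_row(row, policy, salt="demo-salt"):
    """Apply the per-column policy; columns without a rule pass through."""
    masked = {}
    for column, value in row.items():
        rule = policy.get(column)
        if rule == "mask_email":
            masked[column] = mask_email(value)
        elif rule == "redact":
            masked[column] = "***"
        elif rule == "pseudonymize":
            masked[column] = pseudonymize(value, salt)
        else:
            masked[column] = value
    return masked

# Hypothetical policy keyed by column name.
policy = {"email": "mask_email", "ssn": "redact", "customer_id": "pseudonymize"}
row = {"customer_id": "C-1001", "email": "ada@example.com",
       "ssn": "123-45-6789", "amount": 42}

masked = mask_row(row, policy)
print(masked["email"], masked["ssn"], masked["amount"])
```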

Overall, the integration of governance and compliance features—whether native or through third parties—greatly enhances the utility and trustworthiness of a data catalog.

Data quality and profiling to enhance data value #

To fully leverage the power of data, it is crucial to consider its quality and profile.

Data quality factors like accuracy, completeness, consistency, and timeliness impact how valuable the data will be for any given use case.

Meanwhile, data profiling helps highlight relationships, attributes, and anomalies, allowing for better preparation of queries for execution across various systems involved in a data pipeline.

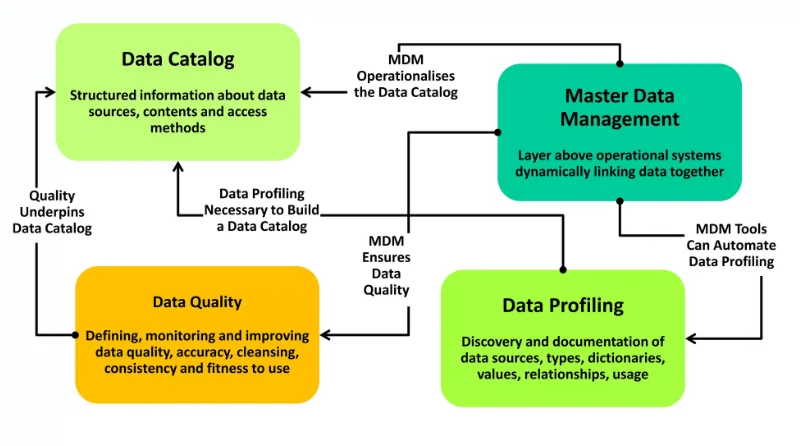

Data quality underpins data catalogs, whereas data profiling is a necessity. - Source: SlideShare.

Integrating data quality and profiling with the data catalog improves the user experience: users can preview the data along with summary statistics to get an idea of what it looks like.
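The kind of summary statistics a catalog might show next to a data preview can be computed with a small profiling function. This is a simplified sketch with made-up sample values. Real profilers also cover type inference, distributions, and pattern checks.

```python
def profile_column(values):
    """Compute basic profile statistics for one column of values."""
    non_null = [v for v in values if v is not None]
    profile = {
        "count": len(values),
        "null_count": len(values) - len(non_null),
        # Completeness: share of rows that have a value.
        "completeness": len(non_null) / len(values) if values else 0.0,
        "distinct": len(set(non_null)),
    }
    if non_null:
        profile["min"] = min(non_null)
        profile["max"] = max(non_null)
    return profile

# Illustrative column with one missing value and one duplicate.
amounts = [10, 20, None, 20]
amount_profile = profile_column(amounts)
print(amount_profile)
```

Here completeness comes out at 0.75 because three of the four rows have a value, which maps directly onto the completeness dimension of data quality described above.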

Additionally, seamless integration with popular quality control tools (Great Expectations, pytest, dbt) lets you embed the test results within data assets.

These measures instill greater trust in data quality while speeding up decisions for effective data-driven operations.

Data lineage to map data flows #

Data lineage describes the lifecycle of data, including its origins, movements, transformations, and terminations within an organization. This is crucial for understanding how data flows across your ecosystem.

Task orchestration and workflow engines play a significant role in extracting and managing data lineage. These tools control the sequence of data processing tasks and can provide a detailed view of the data transformations that occur from the source to the destination.

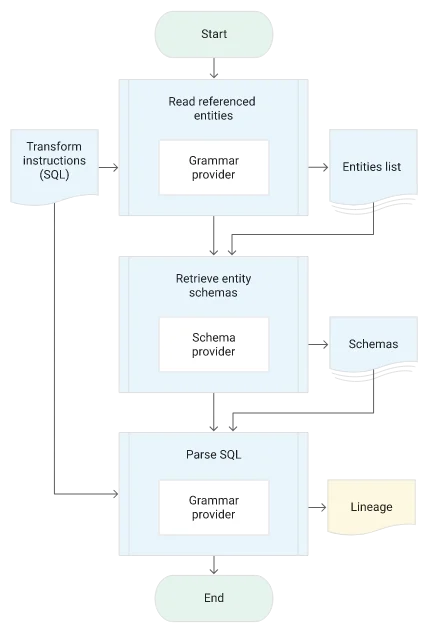

A lineage extraction process in data warehousing - Source: Google Cloud.

Integrating a data catalog with task orchestration and workflow engines, such as Apache Airflow, Luigi, or Prefect, can provide extensive information about the data assets.

These integrations can provide insights into the data’s source, where it was used, who accessed it, what transformations it underwent, and where it moved over time.

Moreover, this integration provides a clear view of dependencies among data assets and helps you understand how changes to one dataset might impact others. This can be particularly helpful during migrations, system upgrades, or when implementing new data policies.
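Impact analysis over lineage is essentially a graph traversal. The sketch below walks a hypothetical set of lineage edges breadth-first to list everything downstream of a changed dataset. The asset names are illustrative, and a real catalog would read the edges from its metadata store or an orchestrator integration.

```python
from collections import deque

def downstream_impact(edges, start):
    """Return all assets reachable downstream of start, in breadth-first order."""
    graph = {}
    for upstream, downstream in edges:
        graph.setdefault(upstream, []).append(downstream)

    seen = {start}
    queue = deque([start])
    impacted = []
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:  # guard against revisiting shared dependencies
                seen.add(nxt)
                impacted.append(nxt)
                queue.append(nxt)
    return impacted

# Hypothetical lineage edges: (upstream, downstream).
edges = [
    ("raw.orders", "stg.orders"),
    ("stg.orders", "analytics.daily_revenue"),
    ("stg.orders", "analytics.customer_ltv"),
    ("analytics.daily_revenue", "dashboard.revenue"),
]

impacted = downstream_impact(edges, "raw.orders")
print(impacted)
```

Reversing each edge before the traversal gives the opposite view: the upstream sources a given dashboard or table depends on.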

Documentation to capture tribal knowledge and provide rich context #

Clear, comprehensive documentation is crucial for understanding data assets and ensuring smooth operations. This documentation can include a wide range of details, such as definitions, usage instructions, data source details, and historical context.

Emerging technologies, such as generative AI, are paving the way for innovative approaches to documentation.

For instance, Atlan AI leverages artificial intelligence, and is designed to be:

- The copilot for every human of data

- A workspace that automates the documentation process

- A platform that opens up data exploration and empowers all data users to gain insights

Read more → How Atlan AI ushers in the future of working with data

Rich documentation feeds back into the data catalog, enhancing search, discovery, and context building.

Also read → AI data catalog

Popular open source tools and architectures #

Let’s look at five open source tools (Amundsen, CKAN, Metacat, Apache Atlas, and DataHub) in terms of the four core components of the data catalog architecture: metadata storage, search and discovery, backend systems, and frontend applications.

Metadata storage in open source tools #

- Amundsen: Leverages a graph database, Neo4j, to store metadata.

- CKAN: Generally uses a Postgres relational database for storing metadata.

- Metacat: Metacat, developed by Netflix, offers a unified API to handle metadata, making it agnostic to the underlying data formats and storage systems.

- Apache Atlas: Makes use of a graph database, JanusGraph, for storing metadata.

- DataHub: LinkedIn’s DataHub uses a MySQL database for metadata storage.

Search and discovery functions in open source tools #

- Amundsen: Amundsen uses Elasticsearch to support its search functionality and analytic capabilities.

- CKAN: CKAN relies on Solr (an open source search platform built on Apache Lucene) to support its search functions.

- Metacat: Metacat has no built-in search and discovery function.

- Apache Atlas: Atlas uses Solr to offer search and discovery capabilities.

- DataHub: DataHub uses Elasticsearch for search and discovery tasks.

Backend systems in open source tools #

- Amundsen: Uses a microservice architecture, which divides different functionalities into discrete, loosely coupled services. Each service can be developed, deployed, and scaled independently.

- Metacat: Handles metadata across different data storage systems via a single API.

- Apache Atlas: With its backend focused on managing and governing data inside the Hadoop ecosystem, Apache Atlas is meant to enable scalable governance for Enterprise Hadoop.

- DataHub: Uses a metadata graph model to describe metadata and its relationships.

Frontend applications in open source tools #

- Amundsen: Flask and React are used to implement Amundsen’s frontend.

- CKAN: Jinja2, a designer-friendly Python templating language modeled on Django’s templates, is used to build CKAN’s frontend.

- Metacat: Doesn’t have a dedicated frontend but provides APIs to interact with it from other programs.

- Apache Atlas: Provides a user interface for managing metadata in Hadoop clusters.

- DataHub: Uses React to create a modern and responsive frontend.

| Attribute | Amundsen | CKAN | Metacat | Apache Atlas | DataHub |
| --------------------- | ------------------------- | --------------- | ------------- | -------------------- | -------------------- |
| Metadata storage | Yes, Neo4j/Atlas | Yes, PostgreSQL | Yes, in-house | Yes, JanusGraph | Yes, MySQL |
| Search and discovery | Yes, Elasticsearch | Yes, Solr | No | Yes, Solr | Yes, Elasticsearch |
| Backend systems | Microservice architecture | - | API-based | Hadoop-focused | Metadata graph model |
| Frontend applications | Flask and React | Jinja2 | None (API only) | UI for Hadoop metadata | React |

Note: The information provided in this table is subject to change and should be verified on the respective websites of each tool. Table by Atlan

Summary #

Four critical components combine to form an efficient data catalog system — the metadata store, search engine, backend application, and frontend application. They play key roles in managing, storing, updating, discovering, and accessing data assets.

Moreover, native capabilities and integrations extend the catalog with governance and compliance, data quality and profiling, data lineage, and documentation.

With proper implementation and integration, data catalogs empower organizations to achieve data-driven decision-making, improved collaboration, strict adherence to regulations, and top-notch data quality.

It’s safe to say that we at Atlan know a thing or two about data catalog architecture.

Here’s why:

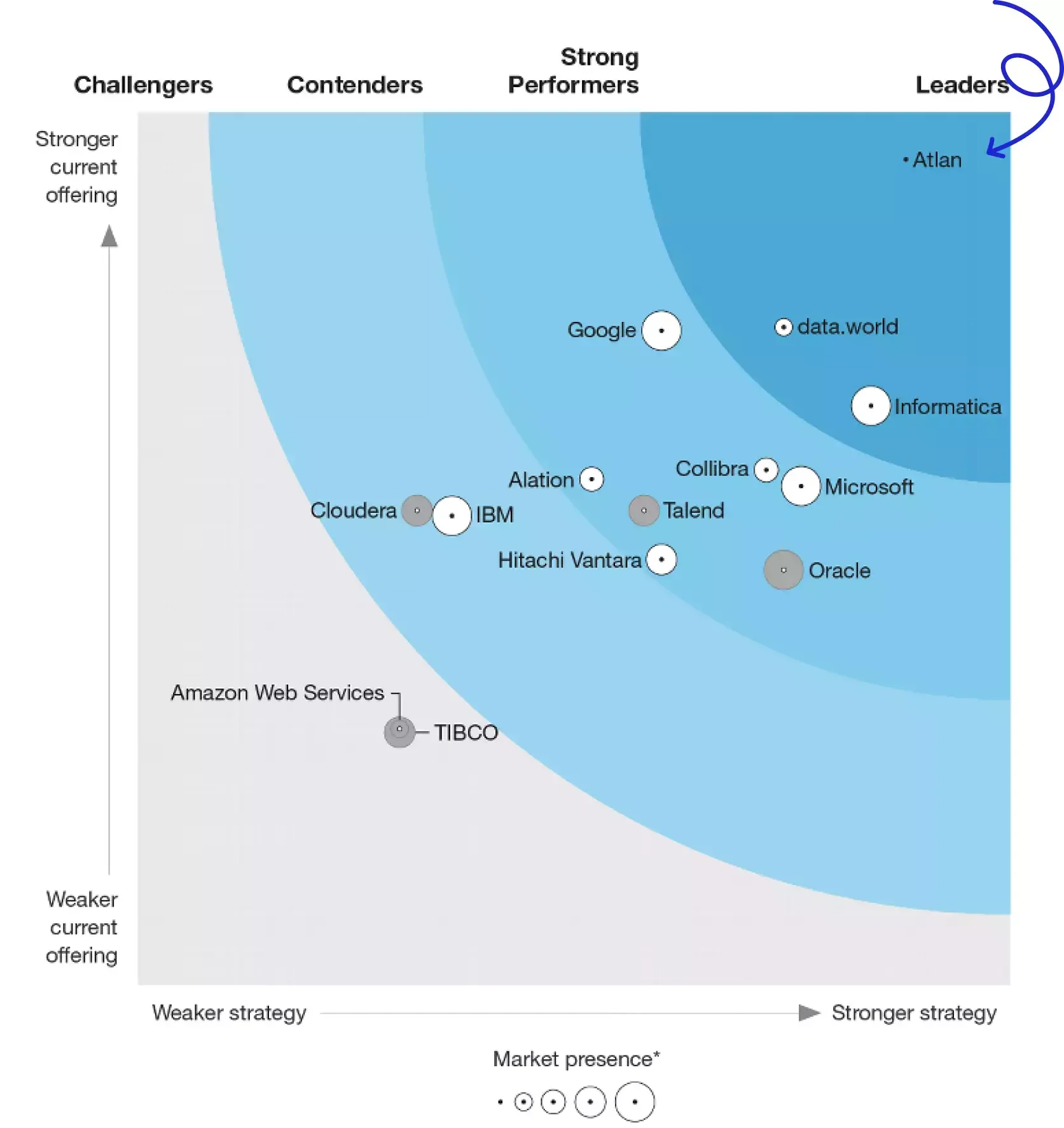

- The latest Forrester report named Atlan a leader in The Forrester Wave™: Enterprise Data Catalogs, Q3 2024, giving the highest possible score in 17 evaluation criteria including Product Vision, Market Approach, Innovation Roadmap, Performance, Connectivity, Interoperability, and Portability.

The latest Forrester Wave Report ranks Atlan as the best data catalog solution. Source: Forrester.

- Atlan enjoys deep integrations and partnerships with best-of-breed solutions across the modern data stack.

So, if you are considering an enterprise data catalog for your organization, take Atlan for a spin.

Data catalog architecture: Related reads #

- What Is a Data Catalog? & Do You Need One?

- AI Data Catalog: Exploring the Possibilities That Artificial Intelligence Brings to Your Metadata Applications & Data Interactions

- 8 Ways AI-Powered Data Catalogs Save Time Spent on Documentation, Tagging, Querying & More

- 15 Essential Data Catalog Features to Look For in 2024

- What is Active Metadata? — Definition, Characteristics, Example & Use Cases

- Data catalog benefits: 5 key reasons why you need one

- Open Source Data Catalog Software: 5 Popular Tools to Consider in 2024

- Data Catalog Platform: The Key To Future-Proofing Your Data Stack

- Top Data Catalog Use Cases Intrinsic to Data-Led Enterprises

- Business Data Catalog: Users, Differentiating Features, Evolution & More
