Data Quality Framework: Key Components, Templates & More [2025]


Quick Answer: What is a data quality framework?

A data quality framework defines the standards, processes, and tools that ensure data accuracy, consistency, completeness, and reliability. It establishes guidelines for data governance, enabling organizations to maintain high-quality data for better decision-making and compliance.


A strong data quality framework typically includes:

- Well-defined quality dimensions and metrics (e.g., completeness, accuracy, timeliness)

- Standardized processes for profiling, cleansing, and monitoring data

- Clear ownership and governance to assign responsibility

- Technology and tools to automate quality checks and reporting

A well-structured framework is essential for aligning data quality practices with business goals and regulatory requirements.

Up next, we’ll break down the key components, challenges, and how to build a framework that scales with your business. We’ll also explore the role of metadata in improving the effectiveness of your data quality framework.

Table of contents

- Data quality framework explained

- What are the key components of a data quality framework?

- How can you choose the best data quality framework for your next project?

- How can you create a data quality management framework for your enterprise?

- How does a unified trust engine elevate your data quality framework?

- Data quality framework: In summary

- Data quality framework: Frequently asked questions (FAQs)

- Data quality framework: Related reads

Data quality framework explained

A data quality framework can be thought of as a formal structure that guides how an organization defines, measures, monitors, and improves the quality of its data.

Such a framework typically covers establishing data quality standards, data profiling and cleansing, data quality assessment, and monitoring and reporting, among other activities.


The data quality framework provides the foundation for embedding quality into data operations — across ingestion, transformation, storage, and consumption.

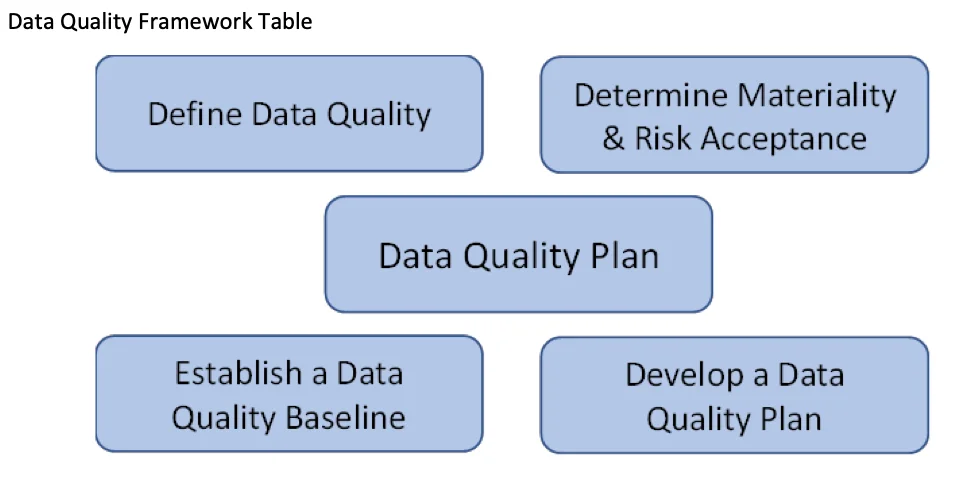

A sample data quality framework from USDA - Source: USDA Data Act.

Why does a data quality framework matter?

Without a clear data quality framework, organizations risk operating on flawed, inconsistent, or incomplete data. This leads to poor decision-making, regulatory exposure, and wasted resources.

According to Gartner, poor data quality costs companies an average of $12.9 million per year. A robust framework can help reduce this cost and deliver the following benefits:

- Build trust in data across teams and stakeholders

- Accelerate digital transformation and analytics initiatives

- Ensure compliance with regulations like GDPR, HIPAA, and BCBS 239

- Reduce firefighting by replacing ad hoc fixes with structured, scalable processes

What are the key components of a data quality framework?

The key components of any data quality framework include:

- Metadata management

- Data governance

- Data profiling

- Data cleansing

- Data quality rules

- Data quality assessment

- Data issue management

- Data monitoring and reporting

- Continuous improvement

Together, these components form a blueprint for reliable, scalable, and sustainable data quality management — embedded into daily data operations and decision-making. Let’s see how.

1. Metadata management

“Activating metadata supports automation of tasks that align to the quality dimensions of accessibility, timeliness, relevance and accuracy.” - Gartner on the role of metadata in ensuring data quality

Metadata provides context — what a data field means, where it comes from, how it’s used, and who owns it. Strong metadata management is the foundation for understanding, monitoring, and improving data quality across systems.
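As a rough illustration of the context described above, a metadata record for a single field might be sketched as follows. The `FieldMetadata` class, its attribute names, and the `missing_context` helper are hypothetical and chosen only for this example; they do not reflect any particular catalog's schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FieldMetadata:
    """Hypothetical metadata record: meaning, origin, usage, and ownership."""
    name: str
    description: str      # what the field means
    source_system: str    # where it comes from
    owner: str            # who owns it
    used_by: list[str] = field(default_factory=list)  # how it is used


def missing_context(meta: FieldMetadata) -> list[str]:
    """Return the names of context attributes that are still empty."""
    gaps = []
    for attr in ("description", "source_system", "owner"):
        if not getattr(meta, attr).strip():
            gaps.append(attr)
    if not meta.used_by:
        gaps.append("used_by")
    return gaps


email = FieldMetadata(
    name="email",
    description="Primary contact email",
    source_system="crm",
    owner="",  # no owner recorded yet
)
gaps = missing_context(email)
print(gaps)
```

A check like this could flag fields whose ownership or usage is undocumented before quality rules are attached to them.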

2. Data governance

Data governance defines the policies, roles, and standards for managing data quality. It establishes ownership, accountability, and escalation paths to ensure that quality issues are addressed systematically and in line with business needs.

3. Data profiling

Data profiling is the initial, diagnostic stage in the data quality management lifecycle. Profiling helps you detect anomalies, set benchmarks, and prioritize where to focus your quality efforts.
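A minimal profiling sketch, assuming records arrive as a list of dictionaries, could compute a few basic statistics per column. The `profile_column` function and the sample rows are illustrative assumptions, not part of any specific profiling tool.

```python
from collections import Counter


def profile_column(rows, column):
    """Summarize one column: row count, null rate, distinct values, top value."""
    values = [row.get(column) for row in rows]
    # Treat None and empty strings as missing (an assumption for this sketch).
    non_null = [v for v in values if v not in (None, "")]
    counts = Counter(non_null)
    return {
        "row_count": len(values),
        "null_rate": 1 - len(non_null) / len(values) if values else 0.0,
        "distinct_count": len(counts),
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


rows = [
    {"customer_id": 1, "country": "US"},
    {"customer_id": 2, "country": "US"},
    {"customer_id": 3, "country": None},
    {"customer_id": 4, "country": "DE"},
]
profile = profile_column(rows, "country")
print(profile)
```

Numbers such as the null rate can then serve as the benchmark against which later checks are compared.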

4. Data cleansing

Once issues are detected, data cleansing is the remedial phase. It’s the process of correcting, standardizing, or removing inaccurate, inconsistent, or duplicate data entries to improve its quality.

It ensures datasets are analysis-ready and compliant with defined standards. Proper data cleansing forms the basis for accurate analytics and informed decision-making.
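The sketch below shows one possible cleansing pass that standardizes values and removes duplicates. The `cleanse` function, the choice of email as the deduplication key, and the decision to drop records with no email are all assumptions made for illustration; a real policy would come from your own standards.

```python
def cleanse(records):
    """Standardize name and email, then drop duplicates and keyless records."""
    seen = set()
    cleaned = []
    for record in records:
        # Standardize: trim and lowercase emails, collapse whitespace in names.
        email = (record.get("email") or "").strip().lower()
        name = " ".join((record.get("name") or "").split()).title()
        # Remove: skip records with no email or an email already seen.
        if not email or email in seen:
            continue
        seen.add(email)
        cleaned.append({"name": name, "email": email})
    return cleaned


records = [
    {"name": "  ada   lovelace", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},  # duplicate
    {"name": "Alan Turing", "email": None},                # no key
]
cleaned = cleanse(records)
print(cleaned)
```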

5. Data quality rules

Data quality rules are the business and technical rules that define what “good data” looks like. For example, a customer ID must be unique, and a birthdate cannot be in the future.

They can be business rule checks, cross-dataset checks, or checks against external data sets or services. Codifying these rules allows for automated validation and enforcement at scale.
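The two example rules mentioned above (unique customer IDs, no birthdates in the future) could be codified roughly as follows. The function names, the row layout, and the fixed `today` value are assumptions for this sketch.

```python
from collections import Counter
from datetime import date


def duplicate_ids(rows):
    """Rule: customer_id must be unique. Returns the IDs that repeat."""
    counts = Counter(row["customer_id"] for row in rows)
    return sorted(cid for cid, n in counts.items() if n > 1)


def future_birthdates(rows, today):
    """Rule: birthdate cannot be in the future. Returns offending IDs."""
    return [row["customer_id"] for row in rows if row["birthdate"] > today]


rows = [
    {"customer_id": 101, "birthdate": date(1990, 5, 17)},
    {"customer_id": 102, "birthdate": date(2999, 1, 1)},
    {"customer_id": 101, "birthdate": date(1985, 3, 2)},
]
today = date(2025, 1, 1)  # fixed so the example is reproducible

print(duplicate_ids(rows))
print(future_birthdates(rows, today))
```

Passing `today` in explicitly, rather than calling `date.today()` inside the rule, keeps the check deterministic and easy to test.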

6. Data quality assessment

Data quality assessment involves evaluating data quality performance against defined rules and metrics. It helps quantify the health of data and supports risk-based prioritization. Assessments also inform stakeholders with quality scores and trends over time.
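One simple way to turn rules into quality scores is to report the share of rows that pass each rule. The `assess` function, the rule names, and the sample rows below are hypothetical, and pass rate is only one of several possible scoring schemes.

```python
def assess(rows, rules):
    """Return the pass rate (0.0 to 1.0) for each named row-level rule."""
    scores = {}
    for name, check in rules.items():
        passed = sum(1 for row in rows if check(row))
        # An empty dataset has no failing rows, so score it as 1.0 by convention.
        scores[name] = passed / len(rows) if rows else 1.0
    return scores


rules = {
    "email_present": lambda row: bool(row.get("email")),
    "age_in_range": lambda row: 0 <= row.get("age", -1) <= 120,
}
rows = [
    {"email": "a@example.com", "age": 30},
    {"email": "", "age": 41},
    {"email": "c@example.com", "age": 28},
    {"email": "d@example.com", "age": 52},
]
scores = assess(rows, rules)
print(scores)
```

Storing these scores per run would give the trend over time that stakeholders can review.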

7. Data issue management

Quality frameworks must include processes to log, assign, resolve, and track data issues across teams. Centralized issue management prevents duplicate efforts and enables accountability and root cause analysis.
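To make the log, assign, resolve, and track steps concrete, here is a small in-memory sketch. `DataIssue` and `IssueLog` are hypothetical names; in practice this role is usually played by a ticketing or catalog tool rather than custom code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataIssue:
    issue_id: int
    asset: str
    description: str
    assignee: Optional[str] = None
    status: str = "open"  # open -> assigned -> resolved


class IssueLog:
    """In-memory stand-in for a central issue tracker."""

    def __init__(self):
        self._issues = {}
        self._next_id = 1

    def log(self, asset, description):
        # Return the existing unresolved issue instead of logging a duplicate.
        for issue in self._issues.values():
            same = (issue.asset, issue.description) == (asset, description)
            if same and issue.status != "resolved":
                return issue
        issue = DataIssue(self._next_id, asset, description)
        self._issues[issue.issue_id] = issue
        self._next_id += 1
        return issue

    def assign(self, issue_id, assignee):
        issue = self._issues[issue_id]
        issue.assignee = assignee
        issue.status = "assigned"

    def resolve(self, issue_id):
        self._issues[issue_id].status = "resolved"

    def unresolved(self):
        return [i for i in self._issues.values() if i.status != "resolved"]


log = IssueLog()
first = log.log("customers.email", "missing values")
second = log.log("customers.email", "missing values")  # reported again
log.assign(first.issue_id, "data-steward")
print(first is second, first.status, len(log.unresolved()))
```

Because the second report maps to the same issue, two teams noticing the same problem end up on one ticket with one owner.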

8. Data monitoring and reporting

Data monitoring is the continuous process of ensuring that data remains trustworthy over time.

Meanwhile, data quality reporting is an important tool for communicating data quality status and progress to stakeholders. For instance, data quality dashboards and real-time alerts make quality visible and actionable, helping teams intervene before issues cause downstream impact.

Together, data monitoring and reporting serve as a continuous feedback loop to data governance policies, potentially leading to updates and refinements in the governance strategy.
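A basic monitoring check might compare the latest metrics against agreed minimums and emit alert messages for anything that falls short. The `check_thresholds` function, the metric names, and the threshold values are illustrative assumptions.

```python
def check_thresholds(metrics, thresholds):
    """Return one alert message per metric that is missing or below its minimum."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no measurement available")
        elif value < minimum:
            alerts.append(
                f"{name}: {value:.1%} is below the {minimum:.1%} threshold"
            )
    return alerts


metrics = {"email_completeness": 0.90, "id_uniqueness": 1.0}
thresholds = {
    "email_completeness": 0.95,
    "id_uniqueness": 0.99,
    "freshness": 0.90,  # no measurement was collected for this one
}
alerts = check_thresholds(metrics, thresholds)
for alert in alerts:
    print(alert)
```

Treating a missing measurement as an alert, rather than silently skipping it, helps surface broken pipelines as well as bad data.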

9. Continuous improvement

Data quality isn’t a one-time effort. A feedback loop between profiling, issue resolution, and governance enables organizations to refine standards, enhance automation, and adapt to new requirements over time.

How can you choose the best data quality framework for your next project?

There are several established data quality frameworks available that provide guidelines and best practices for maintaining and improving data quality. Here are six examples to consider. Four of them, along with Gartner’s 12-action model, are described in more detail below:

- The DAMA guide to the data management body of knowledge (DAMA-DMBOK)

- The data quality framework from the Australian Institute of Health and Welfare (AIHW)

- TDWI’s data quality management framework

- The MIT Information Quality (MITIQ) program

- ISO 8000 data quality model

- The data quality assessment framework (DQAF) by IMF


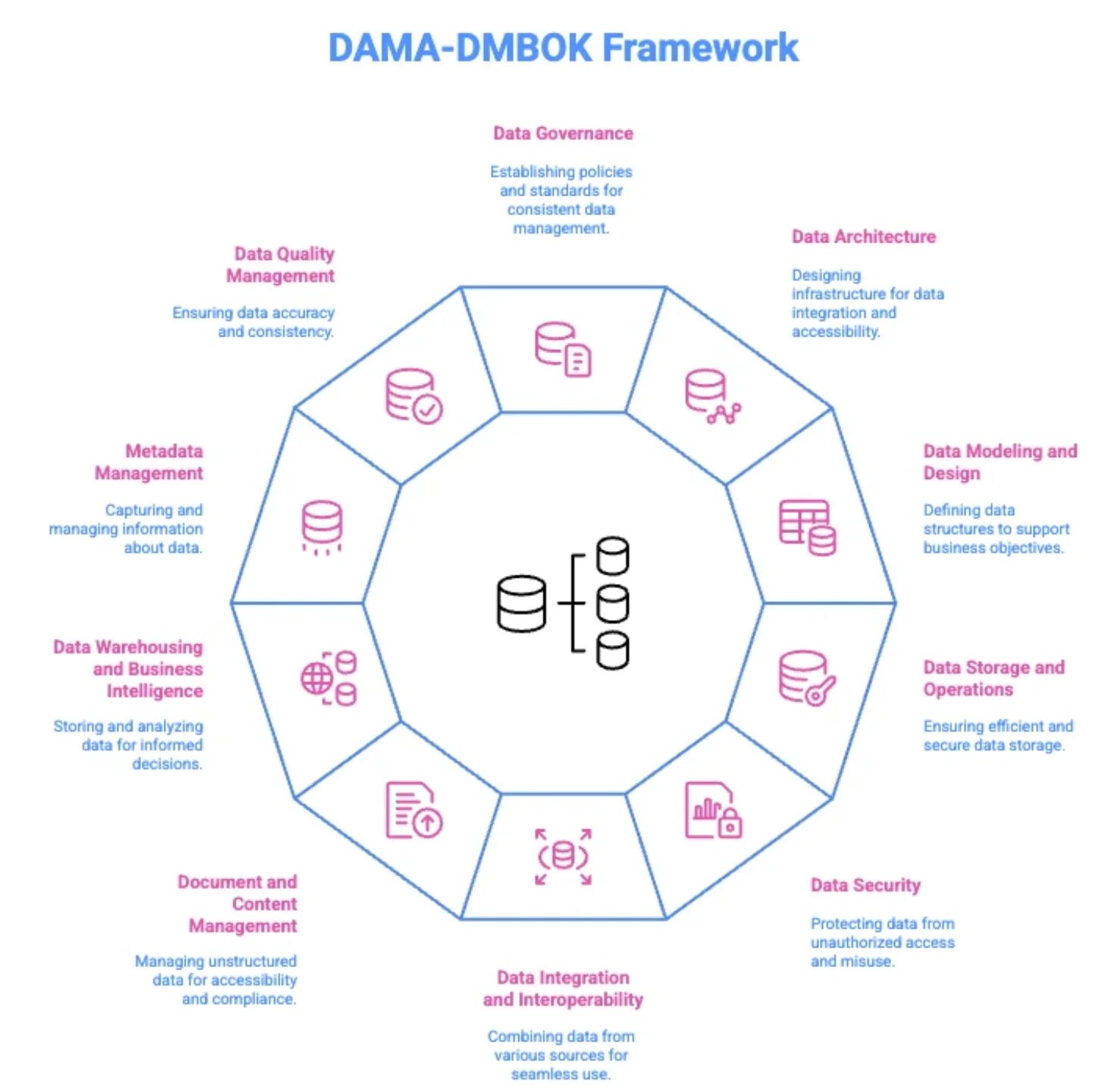

1. The DAMA guide to the data management body of knowledge (DAMA-DMBOK)

The DAMA-DMBOK is a comprehensive guide to data management that includes a chapter on data quality. It’s a valuable resource for anyone looking to develop a data quality framework.

DAMA-DMBOK Framework 10 Knowledge Areas - Image by Atlan.

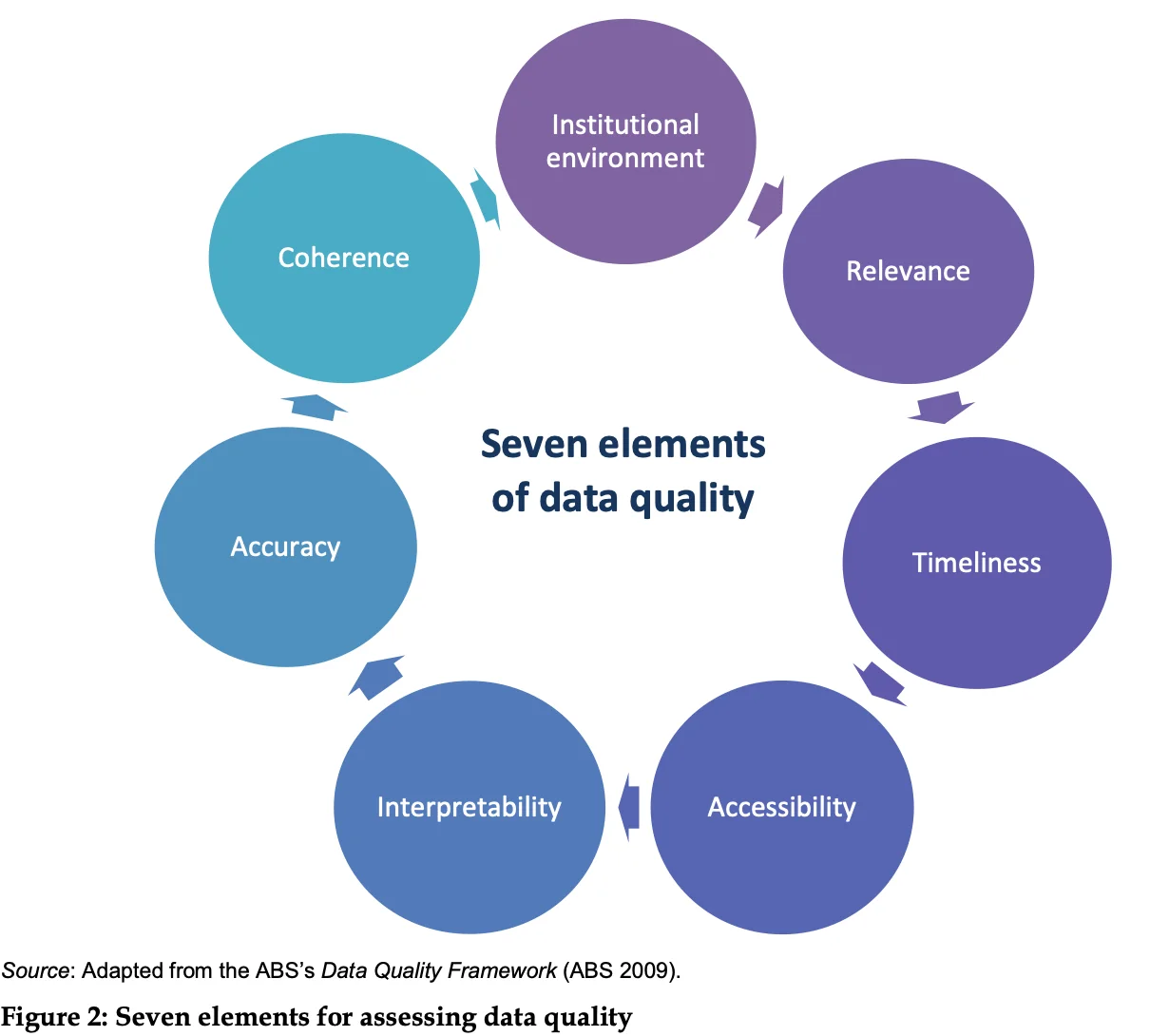

2. The data quality framework from the Australian Institute of Health and Welfare (AIHW)

The data quality framework from the Australian Institute of Health and Welfare is designed specifically for the health sector. It covers six dimensions of data quality:

- Institutional environment

- Relevance

- Timeliness

- Accuracy

- Coherence

- Interpretability

The key elements of a data quality framework, according to AIHW - Source: AIHW.

3. ISO 8000 data quality model

The ISO 8000 data quality model is an international standard for data and information quality management. It provides a model for describing, measuring, and managing data quality.

4. The data quality assessment framework (DQAF) by IMF

The International Monetary Fund has developed a framework specifically for the assessment of data quality, especially in the realm of macroeconomic and financial statistics. The framework focuses on five dimensions:

- Assurances of integrity

- Methodological soundness

- Accuracy and reliability

- Serviceability

- Accessibility

5. Gartner’s 12-action model for improving data quality

Rather than a formal “framework,” Gartner’s model outlines 12 actionable steps to build, scale, and sustain data quality. It emphasizes ownership, metrics, automation, and cultural buy-in, making it highly practical for data leaders.

12 actions to improve an organization’s data quality - Source: Gartner.

These frameworks all approach data quality from slightly different perspectives, but they each provide valuable guidelines and insights for ensuring and improving the quality of data in an organization.

Ultimately, the best framework is one that fits your current maturity while giving you room to scale quality management as your data ecosystem grows.

Many teams start by blending best practices from multiple frameworks into a unified, metadata-driven strategy, especially when using modern data quality trust engines like Atlan.

Are there any books and resources for mastering the data quality framework?

Here are some books and resources where you can learn more about data quality frameworks.

Books

- “Data Quality: The Accuracy Dimension” by Jack E. Olson: This book focuses on the dimension of accuracy within data quality, and gives a detailed account of how to ensure data is accurate.

- "Data Quality Assessment" by Arkady Maydanchik: This book provides a comprehensive resource for understanding and implementing data quality assessment in your organization.

- “Executing Data Quality Projects: Ten Steps to Quality Data and Trusted Information” by Danette McGilvray: This book presents a systematic, proven approach to improving and creating data and information quality within the enterprise.

- “Data Governance: How to Design, Deploy and Sustain an Effective Data Governance Program” by John Ladley: This book provides a comprehensive overview of data governance, including the necessary components of a successful program.

- "The Data Warehouse ETL Toolkit: Practical Techniques for Extracting, Cleaning, Conforming, and Delivering Data" by Ralph Kimball and Joe Caserta: This book provides a comprehensive guide to the entire ETL (Extract, Transform, Load) process, and includes valuable advice on ensuring data quality throughout.

Online resources

- DAMA International’s Data Management Body of Knowledge (DMBOK): As mentioned earlier, DAMA International’s Guide to the Data Management Body of Knowledge is a comprehensive, rigorous reference that outlines the scope and understanding necessary to create and manage a data management program.

- Data Governance Institute (DGI): The DGI provides in-depth resources on data governance and data quality, including a useful model for a data governance framework.

- Data Quality Pro: This is a free online resource that offers a wealth of articles, webinars, and tutorials on various data quality topics.

How can you create a data quality management framework for your enterprise?

Here is a general step-by-step process for creating a data quality framework for an organization:

- Understand the business needs

- Define data quality goals and standards

- Data quality assessment

- Establish data governance

- Implement data quality rules

- Automate data quality processes

- Data cleansing

- Monitor, control, and report

- Implement continuous improvement practices

- Training and culture

- Review and update the framework regularly

A template for crafting an effective data quality framework

Here’s a basic template for a Data Quality Framework document along with a few elements to keep in mind:

1. Executive summary

Write a brief description of the purpose and goal of the Data Quality Framework.

2. Introduction

Offer a deeper explanation of the Data Quality Framework’s role and the business needs that it will serve.

3. Business needs and objectives

Provide a detailed account of the business needs and objectives that necessitate the framework. Also identify critical data elements.

4. Data quality goals and standards

Explain the data quality goals and the key dimensions of data quality relevant to your organization (e.g., accuracy, completeness, timeliness, consistency, and relevance).

This is also where you define data quality standards – the criteria that your data needs to meet to be considered of ‘high quality’. The specific standards used can vary greatly depending on the nature of the data and the use case.

For example, in a financial institution, data quality standards might include an accurate representation of transactional amounts and correct customer details.

5. Data governance

Outline the data governance structure including roles and responsibilities.

6. Data quality rules

Provide details of the data quality rules for validation and cleaning of data.

7. Data quality assessment

Establish the procedure and frequency of data quality assessments. Include statistical methods or tools used for data profiling.

For instance, a financial services firm could decide that all customer records should have a valid email address, a non-null purchase history, and accurate demographic information.

After examining its current customer database, the firm could find that 10% of their customer records have an invalid or missing email address.
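The email check in this example could be measured with a short script like the one below. The regular expression is a deliberately loose format check (an assumption for this sketch, not a full address validator), and the 20 sample records are made up so that 2 of them fail, matching the 10% figure in the example.

```python
import re

# Loose shape check: something@something.something, with no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def invalid_email_rate(records):
    """Share of records whose email is missing or fails the format check."""
    if not records:
        return 0.0
    invalid = sum(
        1 for record in records
        if not EMAIL_PATTERN.match(record.get("email") or "")
    )
    return invalid / len(records)


# 18 well-formed addresses, 1 missing, 1 malformed.
records = [{"email": f"user{i}@example.com"} for i in range(18)]
records += [{"email": None}, {"email": "not-an-email"}]

rate = invalid_email_rate(records)
print(f"{rate:.0%} of customer records have an invalid or missing email")
```

The same function could back an entry-time validation step, so the rule is defined once and reused in both assessment and prevention.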

8. Data quality monitoring, control, and reporting

Describe the procedures for monitoring, control, and reporting. Also explain the metrics used for data quality evaluation and the frequency at which these metrics will be reported and reviewed.

9. Data quality improvement

Explain how the continuous improvement process would work. Document the methods to be used for implementing improvements based on data quality reports.

Revisiting our previous example of the financial services firm, the improvement could be deciding to implement a new customer onboarding form that validates email addresses at the point of entry, preventing this issue from occurring in the future.

10. Data cleansing

Set up the procedure for correcting the issues identified in your data assessment and improvement plan. Following this and reusing our previous example, the financial services firm could also set up regular checks of its customer data to quickly identify and address any future data quality issues.

11. Training and culture

Describe the staff training plan and document measures to be taken to build a data-centric culture.

12. Framework review and update procedure

Explain how and when the data quality framework will be reviewed and updated.

13. Conclusion

Final thoughts and a brief recap of the data quality framework.

Appendix

Include any supporting documentation or materials like a glossary of terms, reference materials, etc.

This template provides an overarching structure for creating a data quality framework, but remember to tailor it to the specifics of your organization. It’s essential to regularly review and update the document to reflect any changes in the organization’s objectives or the data environment.

How does a unified trust engine elevate your data quality framework?

A unified trust engine brings together metadata, governance, and automation to give your data quality framework real-time context, visibility, and control.

Instead of managing rules, profiling, and monitoring in disconnected tools, a unified trust engine for AI like Atlan centralizes quality signals across your stack — from Snowflake and dbt to BI dashboards.

It links every data asset to its lineage, owners, policies, and test results, resulting in fewer blind spots, more accountability, and a self-reinforcing quality loop where trust is built into the system.

Data quality framework: In summary

A data quality framework can be seen as a structured plan to ensure and manage the quality, reliability, and integrity of data in an organization. Its essential components, from metadata management and governance through profiling, rules, monitoring, and continuous improvement, describe both what the framework consists of and how it is applied in practice.

The data quality framework should be customized to fit the specific needs and capabilities of the organization. It may take time to establish, but once in place, it can greatly improve the reliability and usability of your data.

Data quality framework: Frequently asked questions (FAQs)

1. What is a data quality framework?

A data quality framework is a structured set of guidelines, processes, and tools designed to ensure data accuracy, consistency, and reliability. It helps organizations manage and improve their data quality to support decision-making and operational efficiency.

2. Why is data quality important for businesses?

High-quality data ensures accurate insights, supports compliance with regulations, and enhances decision-making. Poor data quality can lead to financial losses, inefficiencies, and reputational damage.

3. How do I implement a data quality framework?

Implementing a data quality framework involves defining data quality goals, assessing current data quality, establishing governance structures, applying quality rules, and continuously monitoring and improving data processes.

4. What are the key components of a data quality framework?

Key components include data quality standards, data profiling, assessment, governance, monitoring, and data cleaning processes. These elements work together to ensure ongoing data integrity and usability.

5. How can data quality frameworks improve business decision-making?

Data quality frameworks provide reliable data, reducing risks associated with faulty analyses. They enhance trust in data, leading to better strategic decisions and improved operational performance.

6. What role does metadata play in data quality frameworks?

Metadata provides the critical context that makes data quality frameworks effective. It describes the origin, structure, usage, ownership, and lineage of data, helping teams understand what data means, where it came from, how it’s used, and who’s responsible.

In a data quality framework, metadata enables automated classification, validation, impact analysis, and policy enforcement. Without it, quality rules lack precision, and data issues are harder to trace or resolve.

7. What role does governance play in data quality frameworks?

Governance defines the rules, roles, and responsibilities that ensure data is managed consistently and ethically across the organization.

In a data quality framework, governance sets standards for quality, assigns accountability (like data stewards or owners), and enforces policies for data access, validation, and remediation. It ensures that data quality is an organizational commitment tied to compliance, trust, and business value.

Data quality framework: Related reads

- Data Quality Explained: Causes, Detection, and Fixes

- Data Quality Measures: Best Practices to Implement

- Data Quality Dimensions: Do They Matter?

- Resolving Data Quality Issues in the Biggest Markets

- Data Quality Problems? 5 Ways to Fix Them

- Data Quality Metrics: Understand How to Monitor the Health of Your Data Estate

- 9 Components to Build the Best Data Quality Framework

- How To Improve Data Quality In 12 Actionable Steps

- Data Integrity vs Data Quality: Nah, They Aren’t Same!

- Gartner Magic Quadrant for Data Quality: Overview, Capabilities, Criteria

- Data Management 101: Four Things Every Human of Data Should Know

- Data Quality Testing: Examples, Techniques & Best Practices in 2025

- Atlan Launches Data Quality Studio for Snowflake, Becoming the Unified Trust Engine for AI
