Snowflake Data Mesh: A Step-by-Step Setup Guide for 2025


Snowflake data mesh can empower organizations to transition from monolithic architectures to decentralized, scalable data ecosystems.

Snowflake provides many of the building blocks required to implement data mesh principles like domain-oriented ownership, federated governance, data as a product, and self-service data access. These capabilities help create real-time, scalable, and collaborative data ecosystems across business units.


This article provides a practical, step-by-step guide to implementing data mesh on Snowflake—bridging a common gap in data mesh literature by focusing on real-world execution and how to migrate from centralized models.

You’ll explore:

- The core principles and tenets of data mesh

- How Snowflake enables these principles with key features

- A guide to implementing data mesh with Snowflake showcasing various core features and capabilities using an example of a fictional company

- How a metadata control plane like Atlan strengthens domain ownership, governance, and discovery in a Snowflake-powered data mesh


Table of contents

- How does Snowflake enable the data mesh?

- How to implement data mesh on Snowflake: A fictional case study

- How to build a data mesh from scratch using Snowflake: Preliminary considerations

- How to identify a sample domain for Data Mesh implementation: Getting started

- How to set up data governance for the Snowflake data mesh at FastCabs: Initial steps

- How to identify the minimum viable data products (MVPs) for the Snowflake data mesh

- How to create the initial data contracts for the Snowflake data mesh

- How to create a self-serve data platform: First steps

- How to ingest raw data into Snowflake: Data mesh in action

- How to transform ingested data into data products in the Snowflake data mesh

- How to scale and sustain the Snowflake data mesh at FastCabs

- Common challenges faced when scaling the Snowflake data mesh at FastCabs

- What role does a metadata control plane like Atlan play in strengthening the Snowflake data mesh?

- How does Atlan support data mesh concepts?

- Final thoughts on Snowflake data mesh

- Snowflake data mesh: Frequently asked questions (FAQs)

- Snowflake data mesh: Related reads

How does Snowflake enable the data mesh?

Snowflake has a wide variety of features that support a range of data architecture and design patterns. Data mesh is one of them.

At the heart of data mesh, there are four core principles, which Snowflake supports in the following way:

- Domain-oriented architecture and ownership of data: Snowflake is a distributed yet interconnected platform that allows you to structure your organization’s workloads into domain environments based on accounts, databases, schemas, or a mix of these. Moreover, Secure Data Sharing and the Snowflake Internal Marketplace are governed through Snowflake Horizon Catalog, Snowflake’s built-in catalog. This allows you to embrace a more distributed model of data creation and consumption.

- Data as a product: Snowflake allows each domain or team to create and maintain data products that can be shared with other domains or teams using the Snowsight UI (or programmatically). Consumers can be internal or external to your organization, while you retain full ownership of the data product. Snowflake defines a data product as “the combination of data plus metadata, code and infrastructure dependencies.” A data product in Snowflake can contain any of the securable objects and can be listed on the Snowflake Internal Marketplace.

- Self-service data platform: Snowflake provides a range of methods for you to access your data using an interface and language of your choice. It supports a variety of workloads, such as data engineering, interactive applications, machine learning, and data warehousing – a mix of which can be leveraged in the data mesh philosophy.

- Federated governance: Snowflake offers a variety of features that allow you to implement federated governance, such as RBAC, row-level access policies, column-level data masking, differential privacy policies, data classification, extensive auditing and logging, object tagging, and data quality metrics. All of these capabilities are packaged within the Snowflake Horizon Catalog.
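In Snowflake, column-level masking is written in SQL as a masking policy (typically a CASE expression on CURRENT_ROLE()) that is attached to a column. The Python below is only a minimal sketch that mirrors that decision logic so it can be reasoned about and unit-tested; the role names and the email-masking rule are hypothetical, not Snowflake objects.

```python
# Minimal sketch of the decision a column-level masking policy encodes.
# The role names and the "keep only the email domain" rule are hypothetical.
PII_READER_ROLES = {"DRIVERS_PII_READER", "DRIVERS_DOMAIN_ADMIN"}


def mask_email(value: str, current_role: str) -> str:
    """Return the raw email for privileged roles; otherwise hide the local part."""
    if current_role in PII_READER_ROLES:
        return value
    _, _, domain = value.partition("@")
    # Keep the domain so analysts can still group by email provider.
    return f"*****@{domain}" if domain else "*****"


print(mask_email("jane@example.com", "BI_READER"))           # *****@example.com
print(mask_email("jane@example.com", "DRIVERS_PII_READER"))  # jane@example.com
```

Because the policy is evaluated at query time, the same column returns different values to different roles without any copies of the data being made.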

How does Snowflake bring data products to life?

The concept of data mesh centers on treating data as a product, which Snowflake describes as having the following four dimensions:

- Data: Snowflake offers various ways to store data, using a file and table format of your choice. It can also connect to external data objects and make them available to you as if they were native to Snowflake.

- Metadata: Snowflake stores and manages the metadata for all data assets. It’s attached to all listings in the marketplace, and includes details like ownership, lineage, business definitions, and more.

- Code: Snowflake also provides you with all the data engineering tools and frameworks you need to ingest, transform, model, and secure data using features like Pipelines, Tasks, Dynamic Tables, Snowpark Container Services and UDFs.

- Infrastructure: Snowflake also allows you to use your cloud platform’s elastic capabilities to scale compute and storage based on demand. It also provides a variety of serverless features that take care of much of the under-the-hood maintenance and optimization, so there is far less for you to configure yourself.

How to implement data mesh on Snowflake: A fictional case study

This is a fictitious case study, but it is based on real-world experience of how companies can get started with Data Mesh.

In this case study we describe the existing data platform of a company called FastCabs. Thereafter we examine some specific problems faced by their centralized and monolithic data platform as their business scales.

In an attempt to help such organizations get started on Data Mesh in small but sure ways, we adopt the principle of iteratively building on existing technologies to the best possible extent.

Let’s start by exploring FastCabs’ business domains, existing data architecture, and challenges to understand the need for a data mesh approach.

FastCabs: Business domains

Imagine FastCabs is a ride-sharing business founded in London. It operates a marketplace for drivers and passengers in multiple cities across Europe. Its business consists of the following domains:

- Passengers: The demand-side domain involving customer registrations, ride search, ride booking, and so on.

- Drivers: The supply-side domain involving driver registrations, ride acceptances, driver availability, incentives, payments, etc.

- Dispatch: This domain is all about matching drivers to passengers and dispatching the nearest drivers to passengers.

- Rides: The domain that supports the ride experience when a passenger boards a taxi.

- Pricing: This domain involves how much FastCabs charges for a ride and the factors influencing it.

Each of these domains is a data producer. In addition, there are several domains that primarily consume data, although they may also generate derivative data products for other domains to consume. Some examples include:

- Business Intelligence (BI): Central Analytics and Reporting domain

- Finance: Financial reporting, forecasting, and analytics

- Marketing: Analytical support for FastCabs’ marketing and growth initiatives

FastCabs: Current data architecture


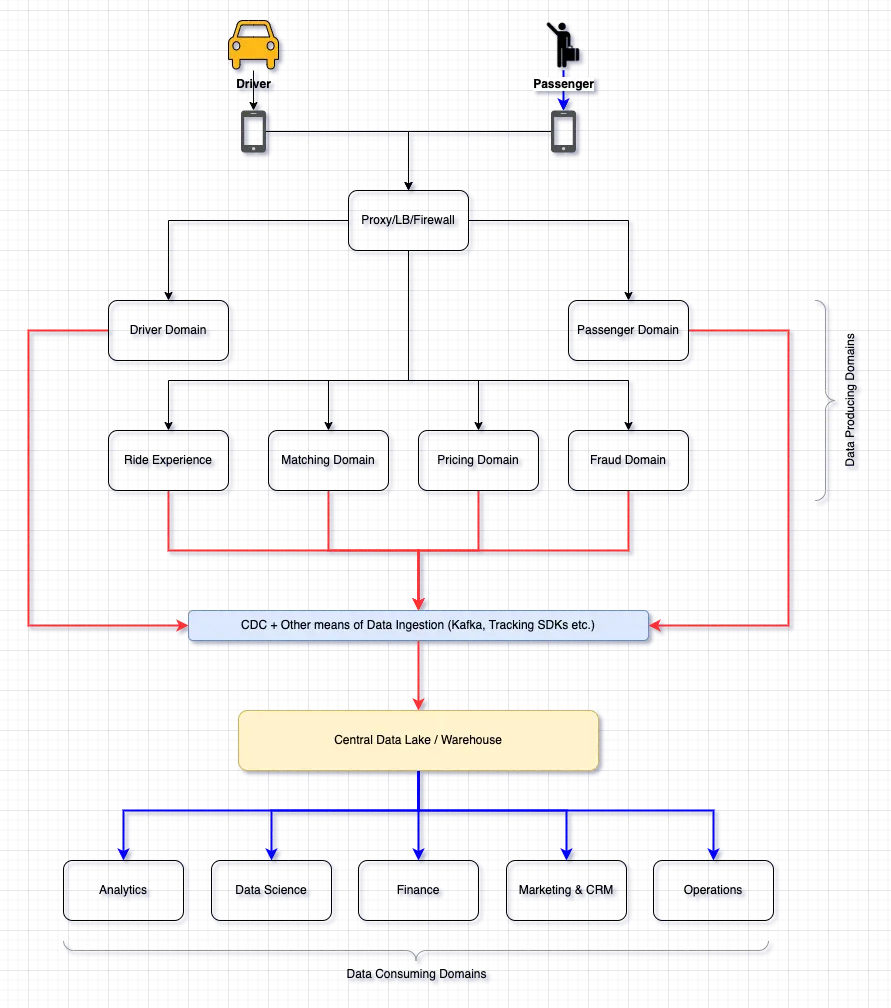

Existing data architecture at FastCabs. Image by Atlan.

The existing data architecture at FastCabs is monolithic. Data ingestion pipelines use a change data capture (CDC) service to carry raw data from the operational systems of all data-producing domains into a central, object-store-based data lake on Amazon S3.

The Central Data Team owns and maintains these data pipelines (shown in red above). Analytics engineers from the central data team transform raw data into meaningful datasets that everyone at FastCabs uses for analysis and reporting. This team acts as a bridge between data producers and data consumers: all data-related requests are submitted to the central data team for prioritization.

FastCabs: Current challenges because of data architecture

Because of rapid growth, the data volume is scaling fast and the central data team is struggling to keep pace with new requirements. The team is inundated with requests to create new ingestion pipelines, model new datasets, or fix data quality issues.

To make matters worse, the domain teams are constantly updating both the business logic and the architecture of their domains, leading to broken pipelines and incorrectly reported metrics. The backlog of work is piling up.

It is clear to FastCabs’ technical and data management teams that the central monolithic data architecture is no longer viable. A decentralized Data Mesh is the right direction to evolve their data platform.

How to build a data mesh from scratch using Snowflake: Preliminary considerations

Setting up a Data Mesh from scratch can be daunting, as it requires not just technical but also cultural and organizational changes across participating domains.

…Execution of this strategy has multiple facets. It affects the teams, their accountability structure, and delineation of responsibilities between domains and platform teams. It influences the culture, how organizations value and measure their data-oriented success. It changes the operating model and how local and global decisions around data availability, security, and interoperability are made. It introduces a new architecture that supports a decentralized model of data sharing… – Part V, Data Mesh, Zhamak Dehghani

The Head of Data at FastCabs is tasked with the responsibility of taking the organization from a centralized, monolithic data setup to a more effective Data Mesh architecture. She starts this endeavor by carefully reviewing their existing data infrastructure and organizational setup to understand the effort and complexity of this migration.

Here are her notes from a preliminary review:

- Our engineering org is already split across clear domain boundaries.

- We can find a good candidate to pilot our Data Mesh journey and gradually include other domains.

- The CDC raw data ingestion process already copies our transactional data into the data lake automatically.

- We already have Snowflake in our architecture. Some teams are using it as their data warehouse, albeit in a small way. Still, it is a good foundation to build our Data Mesh around.

Let’s build the data mesh on Snowflake because of the following advantages:

- Snowflake integrates well with our existing data infrastructure.

- It integrates well with Atlan, our primary data governance tool.

- It has multiple options for data ingestion and processing to suit the needs of most of our domains.

- With a little bit of help, domain teams can manage their own compute and storage needs.

- Access control for both compute and storage means that teams can operate in isolation.

- It is mostly programmed in SQL, a skill that most domain teams already possess. Therefore, it is relatively easy for them to be onboarded.

- Finally, using Snowflake will take away the pain of dealing with the technical complexity of the data platform, thus letting me focus on the more critical challenges of organizational and cultural changes required for us to implement a successful Data Mesh.

How to identify a sample domain for Data Mesh implementation: Getting started

Next, the Head of Data reviews the data-producing domains, the teams that own these domains, and the skills they bring. Her job now is to find a sample domain for the pilot. She wants to team up with this domain, help them create a minimum viable data product (MVP), and record, learn, and adapt along their journey before adding more domains into the mix.

The pilot domain will serve as a proof of concept and create a feedback loop for carrying out further development, instead of a large upfront investment in effort and resources.

After careful consideration, she chooses the Drivers domain for the pilot. Here are her reasons for doing so:

- Readiness: The organization’s monolithic architecture has been a bottleneck for the Drivers domain team for a while now. They are tired of running the risk of breaking hundreds of data pipelines every time they make even the smallest change to their data models. They are ready for a change for the better, and they are willing to invest the extra effort it will take because they are invested in long-term solutions.

- Willing partnership: The Engineering Manager of the Drivers domain is a forward-thinking data enthusiast. Having his buy-in would make it easier for the team when they need to prioritize the work needed for the pilot.

- Expertise: The product manager of the Drivers domain is very experienced and has a strong product-thinking mindset. This is certainly very useful when they attempt to build data products in accordance with Data Mesh principles. Having her on board would make it so much easier to transfer her product skills to data products.

- Nature of Drivers domain data: Drivers domain data volume is considerably less compared to the other domains. It also does not change as rapidly. It would be easier to implement early policies and test them as compared to other domains.

Now that the Head of Data has her pilot domain identified, FastCabs is on its way to taking early steps toward its Data Mesh.

She now forms a Data Mesh Team (DMT) which will own all tasks for the end-to-end Data Mesh migration. The Head of Data will lead this team and act as the program manager for the entire duration.

What is the composition of the Data Mesh Team (DMT) at FastCabs?

DMT includes the product manager, engineering manager, data analyst, and a backend engineer from the Drivers domain team.

It also includes 2 members of the central data team. One of them is to act as the platform engineer and help the team build the self-serve platform based on Snowflake. Another will assist the head of data in governance tooling.

Eventually, once the proof of concept concludes successfully, other domains will be onboarded into the Data Mesh and the DMT will be disbanded. At that point, the central data platform team and the governance team will be formalized and will continue to support the participating domains.

How to set up data governance for the Snowflake data mesh at FastCabs: Initial steps

DMT wants to take a pragmatic, “governance-first” approach toward building its Data Mesh.

They know from the collective and documented experiences of the global data community that governance as an “afterthought” would be a mistake. In a distributed environment where domains enjoy a high level of autonomy, a lack of governance can cause the domains to devolve into isolated silos of information with little to no interoperability with each other.

As their first task, the DMT creates an initial set of guidelines and data standards that can help the Drivers domain team get started with the pilot. They formulate a few core guidelines for naming conventions, identifiers, time zones, currencies, data privacy, and access control for the data products.

To begin with, the guidelines are intentionally kept short and non-prescriptive. The idea is to start with basic and non-optional governance principles and allow plenty of room for learning and gradual fine-tuning. This way, governance standards can evolve organically and be applicable to all domains.
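FastCabs’ actual guidelines are not spelled out in the case study. Purely as an illustration, here is a minimal Python sketch of how a few such rules could become automated checks, assuming snake_case column names, UTC timestamps, and a small allow-list of ISO 4217 currency codes. All of these specific rules are assumptions.

```python
import re
from datetime import datetime, timedelta, timezone

# Hypothetical guideline rules; FastCabs' actual guidelines are not published.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")
APPROVED_CURRENCIES = {"EUR", "GBP", "CHF", "PLN"}  # illustrative ISO 4217 subset


def check_column_name(name: str) -> list[str]:
    """Naming convention: column names are lower snake_case."""
    return [] if SNAKE_CASE.match(name) else [f"{name}: not snake_case"]


def check_timestamp(name: str, value: datetime) -> list[str]:
    """Time zone convention: timestamps are timezone-aware and in UTC."""
    # utcoffset() is None for naive datetimes, so this also rejects them.
    if value.utcoffset() != timedelta(0):
        return [f"{name}: timestamp must be in UTC"]
    return []


def check_currency(name: str, code: str) -> list[str]:
    """Currency convention: amounts carry an approved ISO 4217 code."""
    return [] if code in APPROVED_CURRENCIES else [f"{name}: unapproved currency {code!r}"]


issues = (
    check_column_name("DriverID")
    + check_timestamp("shift_start", datetime(2025, 1, 6, 8, 0))  # naive: flagged
    + check_timestamp("ride_end", datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc))
    + check_currency("payout_currency", "GBP")
)
print(issues)  # ['DriverID: not snake_case', 'shift_start: timestamp must be in UTC']
```

Keeping each rule as a small, independent check fits the "short and non-prescriptive" intent: rules can be added or relaxed one at a time as the guidelines evolve.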

DMT plans to harness the rich governance features of Snowflake to implement the policies and standards, right from the start.

How to identify the minimum viable data products (MVPs) for the Snowflake data mesh

The Business Intelligence (BI) domain is one of the primary consumers of the Drivers domain data. In collaboration with them, the DMT figures out a minimum viable data product (MVP) that the BI team can meaningfully use as a proof of concept.

After a few rounds of discussion, the DMT identifies the “Daily Driver Utilization metric” as the data product that makes the most business sense for BI, since driver utilization is one of the BI domain’s key metrics of interest. It is defined as the ratio of the total time a driver spends serving rides to the total time they are available in a day.

The Daily Driver Utilization metric is derived from other datasets such as drivers master data, vehicles master data, and the daily operational data of each vehicle, which includes minute-level geolocation as well as the vehicle’s state (i.e., whether it is currently serving a ride, moving towards a ride, idle, etc.). These datasets are also included in the scope of the MVP.
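To make the metric concrete, here is a minimal Python sketch that computes it from minute-level state records. It assumes one record per driver per minute, hypothetical state labels, and that time spent moving towards a pickup counts as available but not as serving; the real definition would be pinned down in the data contract.

```python
from collections import defaultdict
from datetime import date

# Assumed state labels; the case study names serving a ride, moving towards a ride, and idle.
AVAILABLE_STATES = {"serving_ride", "en_route_to_pickup", "idle"}
SERVING_STATES = {"serving_ride"}


def daily_utilization(pings):
    """pings: iterable of (driver_id, day, state), one record per driver per minute.

    Returns {(driver_id, day): serving_minutes / available_minutes}.
    Minutes in states outside AVAILABLE_STATES (e.g. "offline") are ignored.
    """
    serving = defaultdict(int)
    available = defaultdict(int)
    for driver_id, day, state in pings:
        key = (driver_id, day)
        if state in AVAILABLE_STATES:
            available[key] += 1
        if state in SERVING_STATES:
            serving[key] += 1
    return {key: serving[key] / minutes for key, minutes in available.items()}


day = date(2025, 1, 6)
pings = (
    [("d1", day, "serving_ride")] * 30
    + [("d1", day, "en_route_to_pickup")] * 10
    + [("d1", day, "idle")] * 20
    + [("d1", day, "offline")] * 60
)
print(daily_utilization(pings))  # {('d1', datetime.date(2025, 1, 6)): 0.5}
```

Here 30 serving minutes out of 60 available minutes gives a utilization of 0.5; the 60 offline minutes do not count against the driver.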

The data products will be published in a database within Snowflake. This database will constitute a data mart for the Drivers domain. The mart will be owned and operated autonomously by the Drivers domain.

The BI domain in turn will start using these data products and report back on issues and on how useful the products are. The DMT will then fine-tune the products based on the feedback. This will not only create more meaningful products for the business but also establish the earliest feedback loop. Such feedback loops are important to avoid silos, which are a natural but undesirable side effect of the mesh architecture.

How to create the initial data contracts for the Snowflake data mesh

After identifying the MVP data products, the DMT works on creating detailed data contracts for each of them.

A data contract, much like an API specification, is a documentation of the social and technical agreement between the producers and the consumers of the data product. It includes the data schema and data semantics. For example, it might include details such as what each record of the data product represents, or what the unique identifier for each record would be.

Data contracts are central to the idea of “data as a product”. Contracts help build trust between the producers and consumers of data products. Data contracts are not just passive documents. They are programmatically verifiable artifacts that can evolve over time to include meaningful business requirements.

For the MVP, the DMT has decided to limit the scope of contracts to product schemas. These will be documented in plain text and verified manually.

In later iterations, these data contracts will evolve to include business semantics, data quality, and SLA expectations. They will be eventually described in a machine-readable format so that automated tools can validate each data product against its contractual specifications.
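As a sketch of the machine-readable format these contracts could evolve into, the Python below encodes a hypothetical drivers master data contract (the column list is invented for illustration and may not match the schema in the contract images) and validates records against it.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Column:
    name: str
    dtype: type
    nullable: bool = False


# Hypothetical machine-readable version of the drivers master data contract;
# the column list is illustrative, not FastCabs' actual schema.
DRIVERS_MASTER_CONTRACT = {
    "product": "drivers_master_data",
    "owner": "drivers-domain",
    "columns": [
        Column("driver_id", str),
        Column("city", str),
        Column("registered_at", datetime),
        Column("vehicle_id", str, nullable=True),
    ],
}


def validate(record: dict, contract: dict) -> list[str]:
    """Return a list of contract violations for one record (empty list = valid)."""
    errors = []
    expected = {col.name for col in contract["columns"]}
    for extra in sorted(set(record) - expected):
        errors.append(f"unexpected column: {extra}")
    for col in contract["columns"]:
        value = record.get(col.name)
        if value is None:
            if not col.nullable:
                errors.append(f"{col.name}: missing or null")
        elif not isinstance(value, col.dtype):
            errors.append(f"{col.name}: expected {col.dtype.__name__}, got {type(value).__name__}")
    return errors


good = {"driver_id": "d1", "city": "London", "registered_at": datetime(2024, 5, 1), "vehicle_id": None}
bad = {"driver_id": 42, "city": "London", "rating": 4.9}
print(validate(good, DRIVERS_MASTER_CONTRACT))  # []
print(validate(bad, DRIVERS_MASTER_CONTRACT))
```

Business semantics, data quality rules, and SLA fields could later be added to the same structure without changing how producers and consumers look up a contract.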

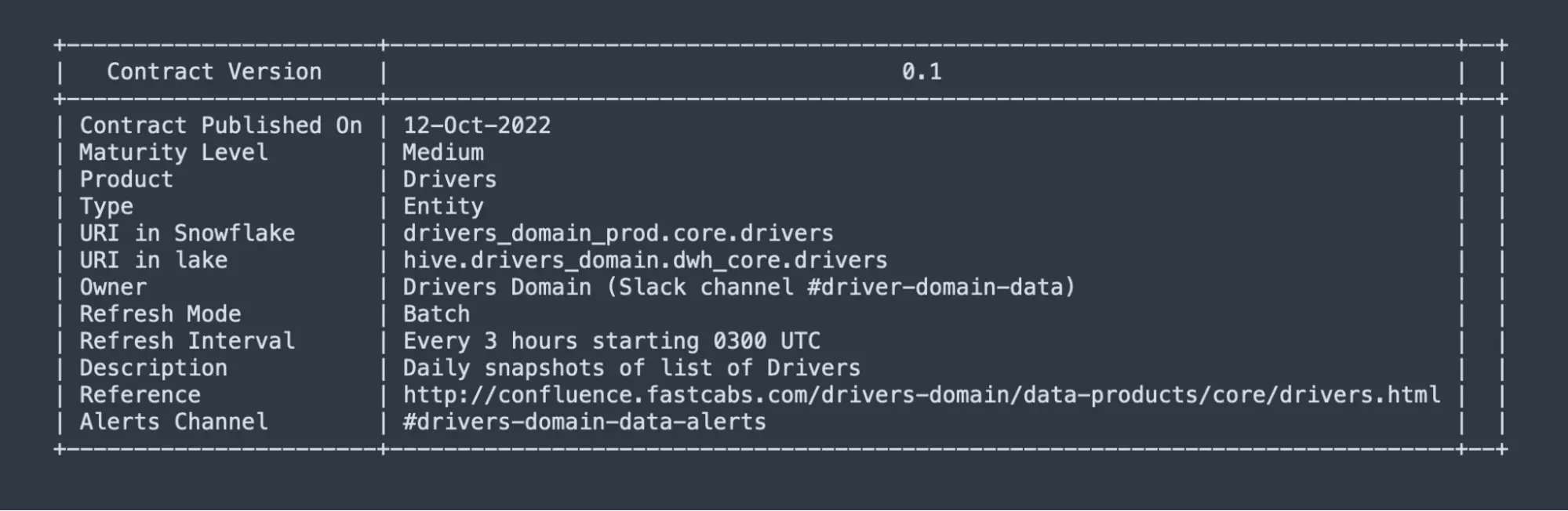

For now, the data contract for the data product drivers master data looks as below:

Metadata

Data contract for the data product drivers master data. Image by Atlan.

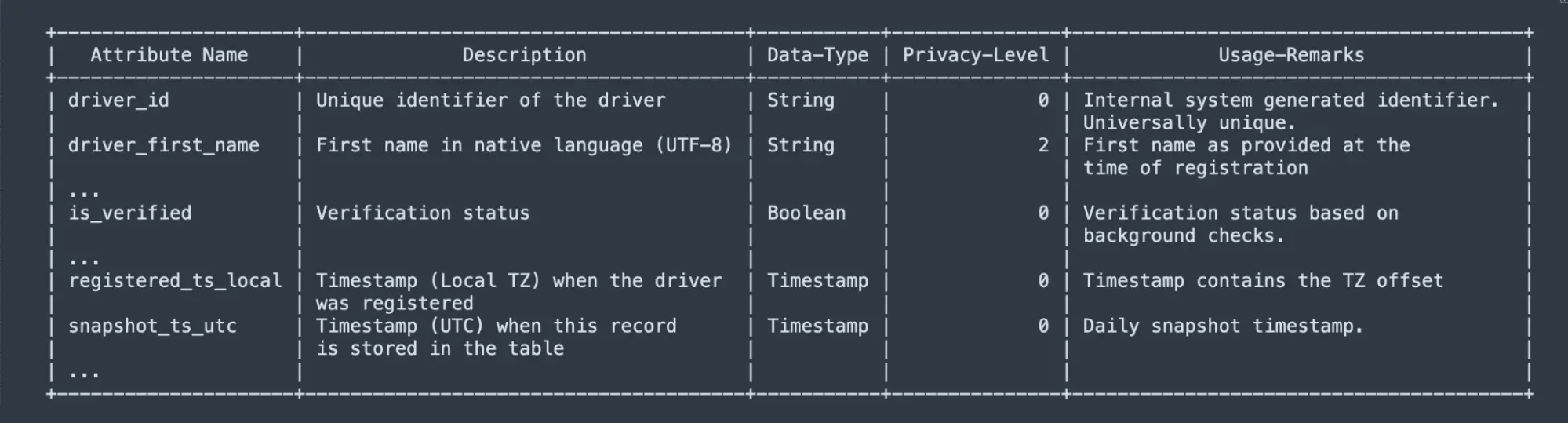

Schemata

Schemata for the data product drivers master data. Image by Atlan.

Similar contracts are created for the rest of the data products.

How to create a self-serve data platform: First steps

The DMT intends to start small with its self-serve data platform. The first version of the platform will have just enough features to serve the Drivers domain’s needs. Eventually, the platform will grow to serve all the other domains and the entire organization. This approach will help FastCabs avoid big upfront investments and costly mistakes.

The first step in this process is to set up an Infrastructure-as-Code framework so that the Drivers domain team can configure their data mart within Snowflake.

Infrastructure-As-Code (IAC) framework

In a distributed Data Mesh, the domain teams are trusted and enabled to provision their own data infrastructure and tooling with the help of their central platform team.

Infrastructure-As-Code (IAC) frameworks provide the right tools to allocate, manage and configure infrastructure using declarative, machine-readable definition files. This makes IAC an ideal framework for a self-servable platform.

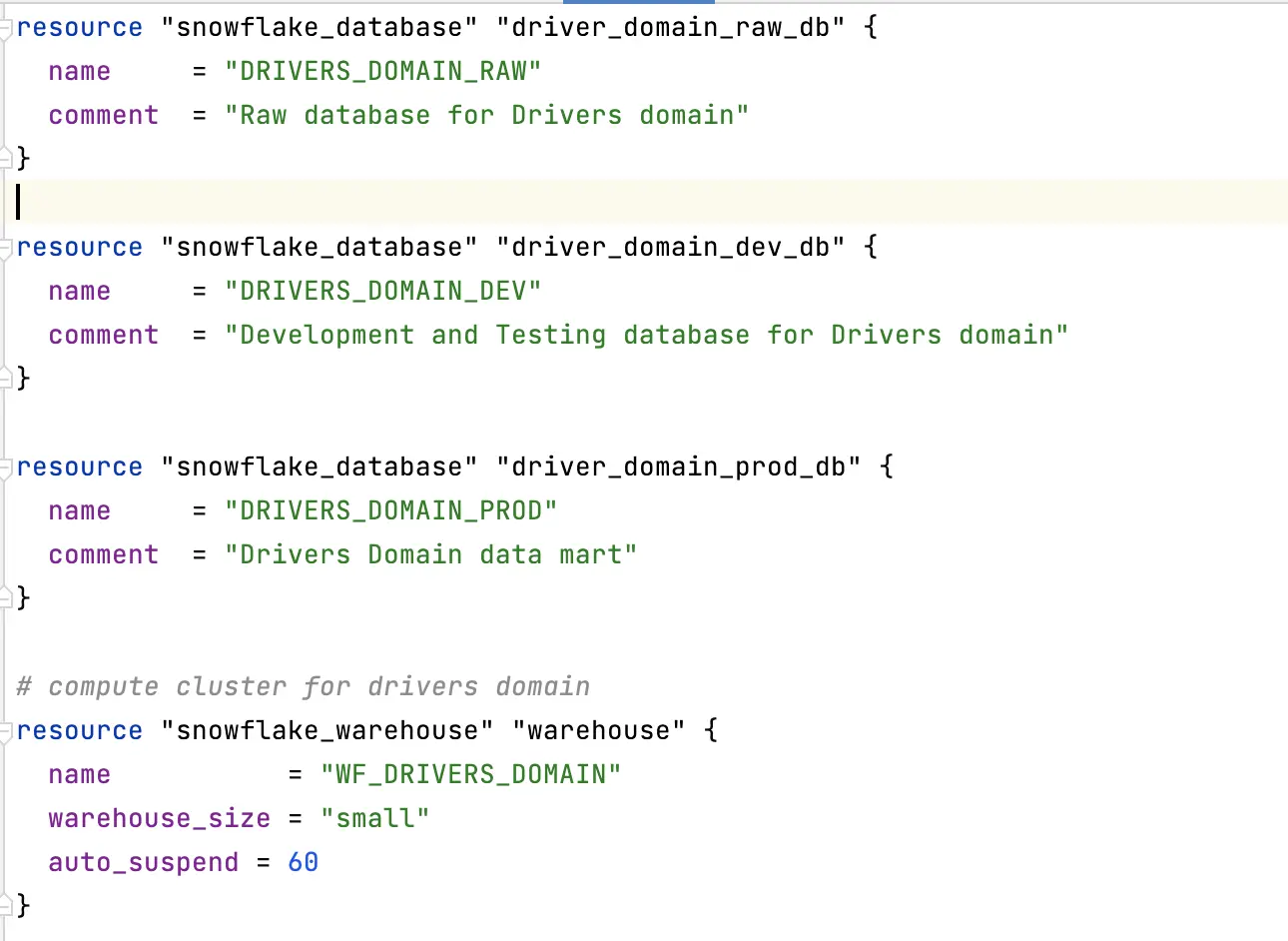

FastCabs already uses Terraform as their IAC framework. Terraform integrates very well with Snowflake.

Therefore, the DMT extends it to manage their Snowflake infrastructure. Terraform configuration files are version-controlled in Git and deployed through a CI/CD process using Atlantis. The code repository is owned by the central platform team, which has the right to approve and merge any pull requests raised by the domain teams.

This lightweight process ensures that domain teams go through a review and approval cycle before any infrastructure is provisioned on the Snowflake platform. When ready, the Drivers domain team will be able to create or edit these configuration files to provision their own data marts on Snowflake and configure the right level of access control.

Using Terraform with a lightweight review/approval process is ideal for a self-serve data platform. It lets domain teams provision their own infrastructure and platform requirements autonomously, in an automated and seamless manner, while still conforming to the org-wide governance framework.

Here’s an example of how DMT uses Terraform configuration for their data marts and a compute cluster (virtual warehouse).

DMT uses Terraform configuration. Image by Atlan.

In the future, the Drivers team can make further changes to these configurations (add or remove objects, change permissions, scale compute resources up or down) all on their own, as long as their pull requests are reviewed and approved.

Data marts for the Drivers domain at FastCabs

The Drivers domain data mart is a walled garden within Snowflake with the necessary access controls in place. It is where all of the Drivers domain’s data products will eventually be built and published, starting with the pilot data products described earlier.

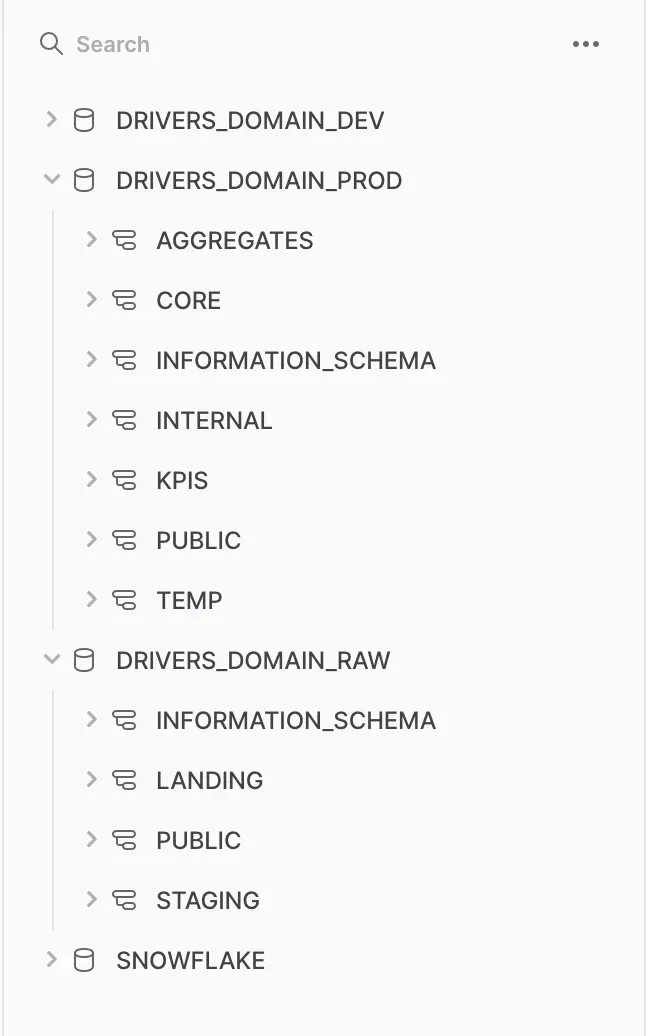

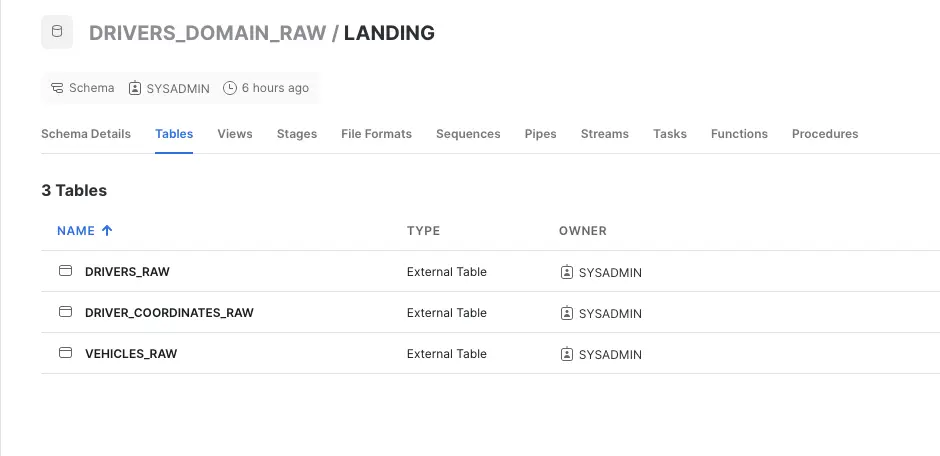

Using Terraform, the DMT provisions the data mart to include the following databases:

- Drivers_domain_raw: The landing database. Raw data from different sources gets copied into this.

- Drivers_domain_prod: The production database. All the data products will be published here.

- Drivers_domain_dev: The development and testing database.

Drivers Data Mart in Snowflake data mesh. Image by Atlan.

Access control for the Drivers data mart at FastCabs

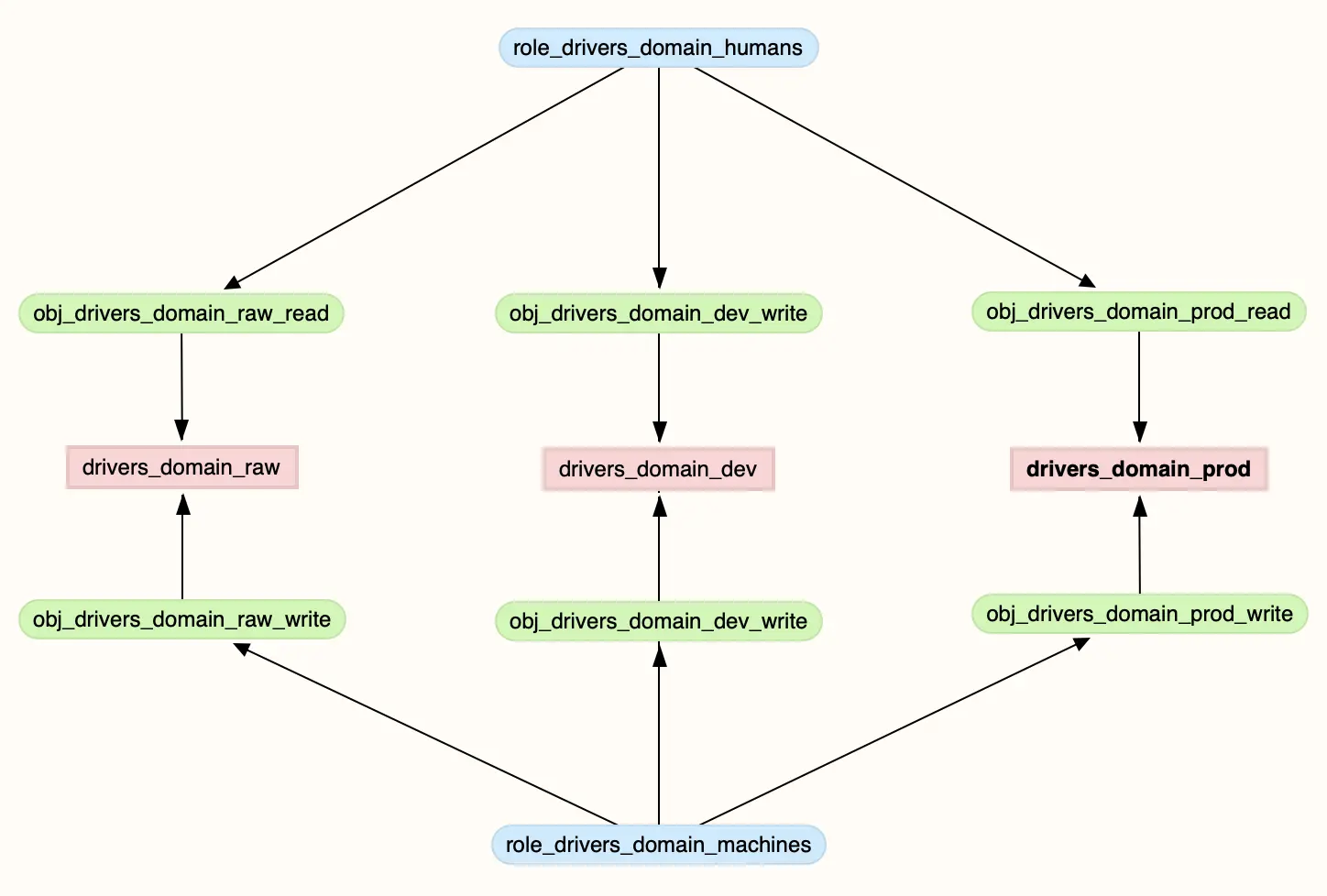

The data marts must have strong access control policies. Much like the rest of the governance, access control is decided and implemented by the domain teams as per the common guidelines laid out by the governance process.

In this specific example, the DMT uses Snowflake’s RBAC capabilities to control role-based access to their mart. They follow Snowflake’s recommended access control best practices, as these will help scale access control when more domains join.

System roles are created that give automation scripts and other machine users read and write access to the domain’s raw and production databases (Drivers_domain_raw and Drivers_domain_prod), while team members from the Drivers domain only have read access. This discipline ensures that all changes to data are traceable and auditable.

Access Control Policies for Data Producers. Image by Atlan.

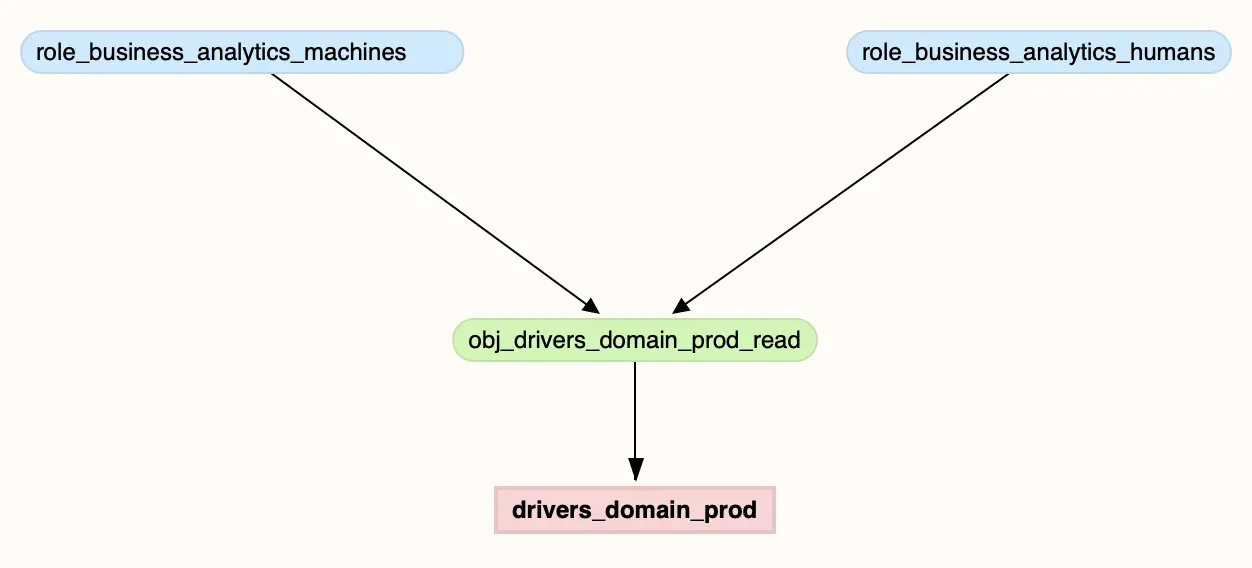

Consumers (in this case the BI team) have only read access to the database Drivers_domain_prod.

Access Control Policies for Data Consumers. Image by Atlan.

In a distributed Data Mesh environment, the final responsibility for data confidentiality, quality, and integrity of the data products lies with the respective domain teams. So, it is important to think about access control upfront, instead of implementing it later.
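As a minimal sketch, assuming hypothetical role names, the producer and consumer policies described in this section can be captured as a role-to-database matrix and rendered into Snowflake GRANT statements, for example from the Terraform or CI/CD tooling. It covers tables only; a real setup would also need grants such as CREATE TABLE or CREATE VIEW on schemas for the pipeline role, plus grants on views and external tables.

```python
# Hypothetical role names; the database names follow the Drivers data mart.
ACCESS_MATRIX = {
    "DRIVERS_PIPELINE_SVC": {"DRIVERS_DOMAIN_RAW": "write", "DRIVERS_DOMAIN_PROD": "write"},
    "DRIVERS_TEAM_READER": {"DRIVERS_DOMAIN_RAW": "read", "DRIVERS_DOMAIN_PROD": "read"},
    "BI_CONSUMER": {"DRIVERS_DOMAIN_PROD": "read"},
}

PRIVILEGES = {"read": "SELECT", "write": "SELECT, INSERT, UPDATE, DELETE"}


def grant_statements(matrix: dict) -> list[str]:
    """Render Snowflake GRANT statements for each (role, database, level) entry."""
    stmts = []
    for role, databases in matrix.items():
        for db, level in databases.items():
            privs = PRIVILEGES[level]
            stmts += [
                f"GRANT USAGE ON DATABASE {db} TO ROLE {role};",
                f"GRANT USAGE ON ALL SCHEMAS IN DATABASE {db} TO ROLE {role};",
                f"GRANT {privs} ON ALL TABLES IN DATABASE {db} TO ROLE {role};",
                # Future grants cover tables created later, e.g. by new dbt models.
                f"GRANT {privs} ON FUTURE TABLES IN DATABASE {db} TO ROLE {role};",
            ]
    return stmts


for stmt in grant_statements({"BI_CONSUMER": ACCESS_MATRIX["BI_CONSUMER"]}):
    print(stmt)
```

Keeping the matrix as data makes the access policy reviewable in a pull request: consumers never appear against the raw database, and only the service role gets write privileges.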

How to ingest raw data into Snowflake: Data mesh in action

It is now time to start ingesting raw data into the Drivers domain data mart. Since the domain’s data is already flowing into S3 as Parquet files, it is easy to make it available in Snowflake.

Snowflake offers a variety of choices for ingesting raw data. Some of them are:

- The COPY INTO command, which works well for bulk-loading batches of files from cloud storage.

- Snowpipe, Snowflake’s continuous micro-batch ingestion service for newly arriving data.

- External tables over existing data in an object store.

- The Snowflake Connector for Kafka.

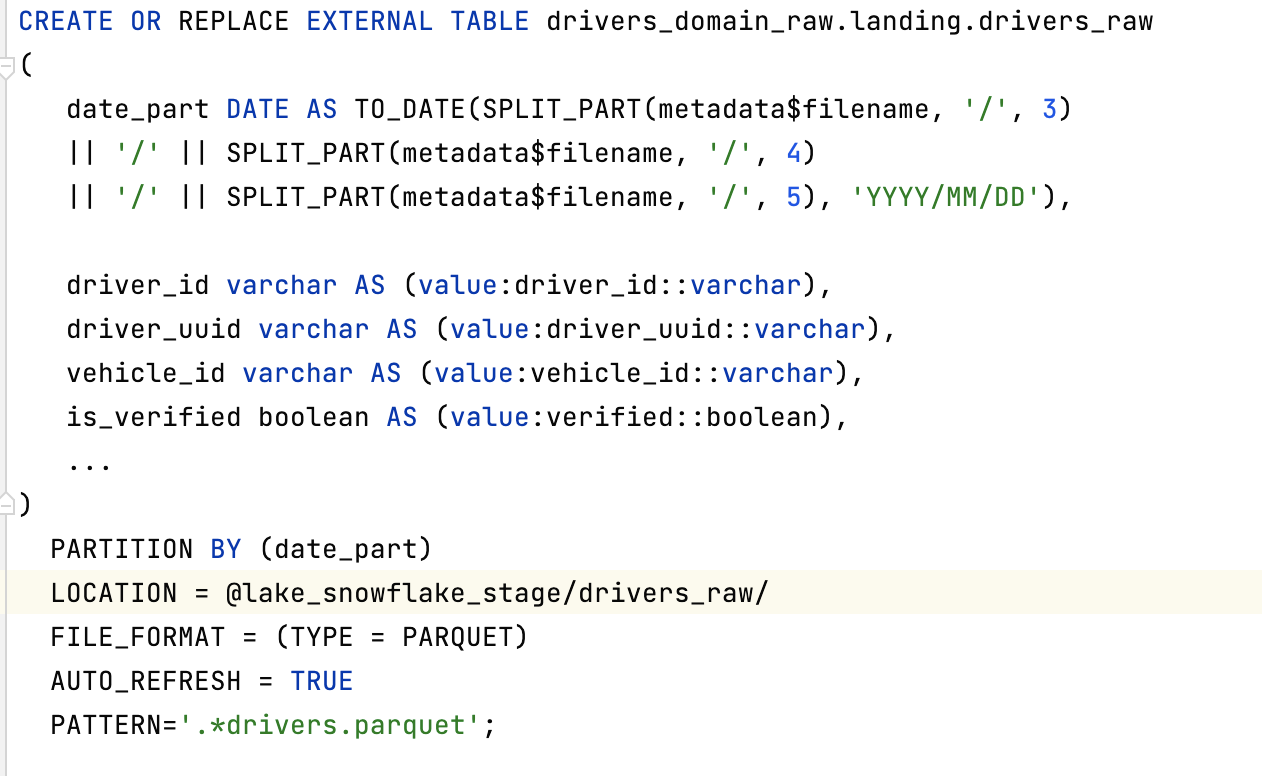

DMT has opted to use Snowflake’s external tables feature, wherein files stored in columnar formats like Parquet or ORC can be mapped directly onto a Snowflake table. External tables generally perform worse than native tables, but this approach avoids the effort of setting up more complex data ingestion pipelines and speeds up the MVP phase considerably.

With auto-refresh enabled (which for S3 relies on event notifications configured for the bucket), external table metadata is refreshed automatically whenever new files arrive in S3, keeping the Snowflake tables in sync with the lake. The Snowflake side of this configuration can be expressed entirely in SQL, providing a high level of autonomy for the Drivers domain team.

Sample code for creating external tables in Snowflake. Image by Atlan.

The external table definitions are version controlled in a Git repository. A CI/CD workflow automatically connects to Snowflake and creates these tables in the drivers_domain_raw database. This repository is fully owned by the Drivers domain team. They have the autonomy to configure the CI/CD workflows for testing and deploying these artifacts, as they deem fit.
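As a hypothetical illustration of what such a CI/CD workflow might deploy, the Python helper below renders external table DDL over Parquet files, with each column extracted from the VARIANT `value` column that Snowflake exposes for external table rows. The schema `cdc`, the stage name, and the columns are assumptions, and AUTO_REFRESH still requires S3 event notifications to be set up for the stage location.

```python
# Hypothetical helper a CI job could use to render external table DDL.
# Schema, stage name, and column list are illustrative assumptions.
def external_table_ddl(table: str, stage: str, path: str, columns: list[tuple[str, str]]) -> str:
    """Render CREATE EXTERNAL TABLE DDL over Parquet files.

    Each column is a virtual column extracted from the VARIANT `value`
    column that Snowflake exposes for every external table row.
    """
    column_defs = ",\n".join(
        f"  {name} {sf_type} AS (value:{name}::{sf_type})" for name, sf_type in columns
    )
    return (
        f"CREATE OR REPLACE EXTERNAL TABLE drivers_domain_raw.cdc.{table} (\n"
        f"{column_defs}\n"
        ")\n"
        f"LOCATION = @drivers_domain_raw.cdc.{stage}/{path}/\n"
        "AUTO_REFRESH = TRUE\n"
        "FILE_FORMAT = (TYPE = PARQUET);"
    )


ddl = external_table_ddl(
    "drivers",
    "s3_cdc_stage",
    "drivers",
    [("driver_id", "VARCHAR"), ("city", "VARCHAR"), ("registered_at", "TIMESTAMP_NTZ")],
)
print(ddl)
```

A CI job could then execute the rendered statement with snowflake-connector-python (`snowflake.connector.connect(...)`, then `cursor().execute(ddl)`) under the domain’s own service role. Generating DDL from a small spec keeps the definitions reviewable in Git.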

When the external tables are deployed, raw data from the domain is available in Snowflake and gets updated every few hours.

Raw data from the domain is available in Snowflake. Image by Atlan.

When other domains get onboarded, they will follow a similar pattern. They may choose a different ingestion mechanism (for example, Snowpipe) but the high-level process/workflow will be similar.

It is now time to start modeling this data into products.

How to transform ingested data into data products in the Snowflake data mesh

The Analytics Engineer from the Drivers domain (within DMT) sets up transformation workflows to build the necessary data models using dbt.

dbt is a good tool choice because of its governance-first design. Its support for adding tests to transformation workflows can help ensure that the final data products meet the specifications (data contracts).
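In dbt, tests such as `unique`, `not_null`, and `accepted_values` are declared in a model’s YAML properties and compiled into SQL that selects failing rows; a test passes when that query returns nothing. The Python below is only a sketch mirroring those semantics on in-memory rows, to show what each check guards against. The column names and allowed statuses are hypothetical.

```python
# Python mirror of three dbt built-in generic tests. In dbt each test compiles to a
# SQL query that selects failing rows; the test passes when that query returns nothing.
def unique(rows, column):
    """Values that appear more than once (nulls are ignored, as in dbt)."""
    seen, dupes = set(), set()
    for row in rows:
        value = row.get(column)
        if value is None:
            continue
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return sorted(dupes)


def not_null(rows, column):
    """Indexes of rows where the column is missing or null."""
    return [i for i, row in enumerate(rows) if row.get(column) is None]


def accepted_values(rows, column, values):
    """Non-null values outside the allowed set."""
    return sorted({row[column] for row in rows if row.get(column) is not None and row[column] not in values})


rows = [
    {"driver_id": "d1", "status": "active"},
    {"driver_id": "d1", "status": "suspended"},
    {"driver_id": None, "status": "inactive"},
]
print(unique(rows, "driver_id"))                                 # ['d1']
print(not_null(rows, "driver_id"))                               # [2]
print(accepted_values(rows, "status", {"active", "inactive"}))   # ['suspended']
```

Each check maps to a clause a contract would state in words: one record per driver, a mandatory identifier, and a closed set of statuses.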

dbt’s support for embedding documentation within the code encourages developers to document the data products thoroughly as they model them. With dbt’s persist_docs configuration, these descriptions can be persisted to Snowflake as table and column comments, making data discovery easier and more organic.

As with the external table definitions, the code repository for the transformation workflows is version controlled in Git and owned by the Drivers domain. This pattern allows easy scaling of the transformation platform - when more domains join, each will have its own respective code repositories. Any common code can be extracted into reusable dbt packages.

DMT uses Snowflake’s zero-copy cloning to create a test environment that the Drivers domain can use for extensively testing its data products. The dbt test suite checks the datasets against the specification every day when the data pipelines run. The team also uses a data profiling tool to validate the final tables against the predefined schema.

The analytics engineer writes SQL transformations to convert the raw data from the drivers_domain_raw database into the final data products in the drivers_domain_prod database, making sure that the products comply with the previously agreed-upon data contracts and governance standards.

The workflows are deployed using Airflow, which was already in use by the central data team. Later, as the mesh evolves, orchestration will be decentralized so that each domain can decide how to orchestrate its own data pipelines.
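In Airflow, these workflows would be defined as a DAG of tasks wired together with `upstream >> downstream`. As a dependency-only sketch that avoids an Airflow install, Python’s standard-library `graphlib` can resolve the same run order; the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task names for the Drivers domain's daily pipeline.
# Each key lists the tasks that must finish before it can start,
# the same relationships an Airflow DAG expresses with `upstream >> downstream`.
pipeline = {
    "dbt_run_staging": {"check_raw_freshness"},
    "dbt_run_products": {"dbt_run_staging"},
    "dbt_test_products": {"dbt_run_products"},
    "notify_bi_domain": {"dbt_test_products"},
}

run_order = list(TopologicalSorter(pipeline).static_order())
print(run_order)
```

Placing the dbt tests as their own task, before anything notifies consumers, means a failed contract check stops the run before the BI domain is told new data is ready.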

The Drivers domain team has now produced its first MVP successfully. The data products are now available in the Drivers data mart, ready to be used by consumers. Early lessons have been learned and useful and actionable feedback loops have been created along the way.

DMT’s job is done and the team is disbanded. The keys to the drivers mart and workflows are handed over to their rightful owner: the Drivers domain. The Head of Data gets ready to officially create the central platform team and the central governance team.

How to scale and sustain the Snowflake data mesh at FastCabs

Even though some of the steps above appear similar to those in a monolithic data architecture, they differ in the following key aspects.

- Data pipelines are owned and managed by the Drivers domain instead of the central data team.

- Data products are built and published by the Drivers domain instead of the central data team. The team has full autonomy over its data products.

- Governance is federated. While the guidelines are centrally created, the Drivers domain gets to decide how to implement them.

- The data platform is self-servable. The Drivers domain can allocate and configure its own infrastructure and tools.

In this section, we will look at how FastCabs uses this learning to onboard the other domain teams to evolve their Data Mesh setup. We will also look at how the early governance policies evolve and get more concrete.

Common challenges faced when scaling the Snowflake data mesh at FastCabs

As they get ready to onboard other domains into the Data Mesh, FastCabs teams need to consider the following factors:

- Data platform

- Governance

- Onboarding of new domains

- Governance hardening

- Organization design

Data platform

The central platform team will need to grow with sufficient capacity, support, and clearly defined roles and responsibilities.

Onboarding new domains entails new and varied requirements that the central platform team will need to support.

Beyond a point, these divergent requirements may pose a challenge to standardization. The biggest pitfall would be to end up with a platform that is a disjointed mishmash of multiple tools and technologies.

The central platform team will need to arbitrate between diverging tools and technical requirements and set baselines for the organization. It will own all choices related to programming languages, compute, and storage frameworks.

Because the central platform team becomes the sole entity handling technology evaluation requests from many teams, it can quickly become a bottleneck on the path to an effective Data Mesh. While an evaluation is in progress, a clear, documented process should let the domain teams keep working with their chosen tech stack. Once the evaluation is complete, a documented process should determine whether each tool or framework is kept or phased out.

Allowing autonomous tool choices for the domains while maintaining standardized governance is a fine line to walk. The central platform team will need to be up for this challenge.

Governance

The central governance team will need to grow with sufficient capacity and necessary skills to support the Data Mesh. Moreover, the governance policies will require hardening to ensure a healthy balance between standardization and autonomy.

The central governance team will need to ensure that they continue to steward the decision-making and policy compliance without getting caught up in the implementation details.

They will also need to work closely with the central platform team to introduce and mature the tools necessary to govern the mesh.

This is also a good point to objectively re-evaluate the choice of Snowflake as the foundational platform for the Data Mesh. However, unless significant gaps exist between expectations and reality, it is advisable for FastCabs to continue strengthening its mesh foundations on top of Snowflake.

Onboarding new domains

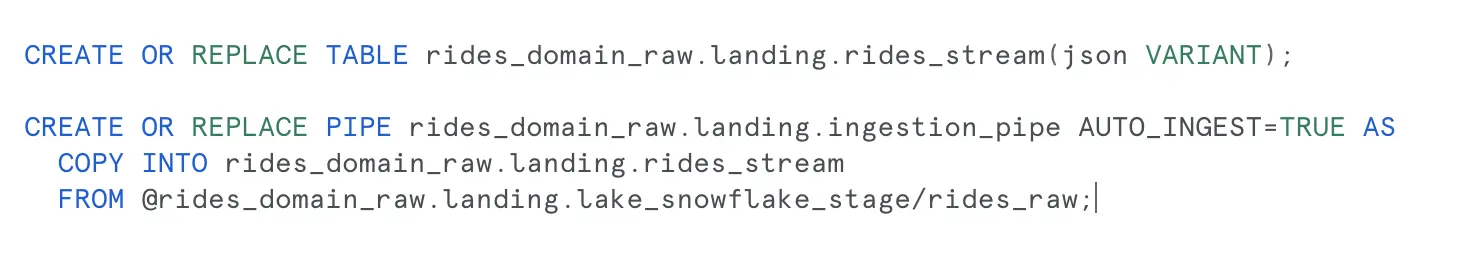

The Rides domain is next to be onboarded. As the name suggests, the Rides domain supports the riding experience for both drivers and passengers. Rides are one of FastCabs’ key datasets, and this domain is their primary source of truth.

However, unlike the data from the Drivers domain, Rides data is more transactional in nature and needs to be ingested in near real time. During the course of a ride, the Rides domain captures multiple events and streams them to the data lake on S3. Several analytical and operational use cases depend on this near-real-time data, and the volume of data is very large.

Setting up streaming ingestion in such a situation would normally be a non-trivial process requiring expert data engineering skills. With Snowflake, however, setting up continuous, near-real-time loading from S3 into Snowflake (for example, with Snowpipe) takes only a few lines of SQL.

Setting up a real-time stream from S3 to Snowflake is as simple as writing a few lines of SQL. Image by Atlan.

It is fairly easy to copy the raw data already available in S3 into Snowflake as soon as it arrives. This is another reason why the barrier to entry into the Data Mesh is quite low for the Rides domain.
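The “few lines of SQL” typically amount to an external stage plus a pipe with auto-ingest enabled. The sketch below renders that DDL from a small Python template of the kind a platform team might hand to domains. It is a minimal sketch under stated assumptions: the helper `render_snowpipe_ddl`, the `rides_mart.raw.ride_events` table, the bucket URL, and the storage integration name are hypothetical; the storage integration must already exist, and the target table is assumed to hold each raw JSON event in a single VARIANT column.

```python
def render_snowpipe_ddl(database: str, schema: str, table: str,
                        s3_url: str, integration: str) -> str:
    """Render DDL for continuously loading JSON files from S3 with Snowpipe.

    Hypothetical template helper. Assumes `integration` is an existing storage
    integration and that `table` has a single VARIANT column for raw events.
    """
    prefix = f"{database}.{schema}"
    stage = f"{prefix}.{table}_stage"
    pipe = f"{prefix}.{table}_pipe"
    return f"""\
CREATE STAGE IF NOT EXISTS {stage}
  URL = '{s3_url}'
  STORAGE_INTEGRATION = {integration}
  FILE_FORMAT = (TYPE = JSON);

-- AUTO_INGEST = TRUE: Snowpipe loads new files as S3 event notifications arrive.
-- COPY INTO falls back to the stage's file format because none is given here.
CREATE PIPE IF NOT EXISTS {pipe}
  AUTO_INGEST = TRUE
  AS COPY INTO {prefix}.{table}
     FROM @{stage};"""


if __name__ == "__main__":
    print(render_snowpipe_ddl(
        database="rides_mart",
        schema="raw",
        table="ride_events",
        s3_url="s3://fastcabs-ride-events/rides/",  # hypothetical bucket
        integration="s3_rides_int",                 # hypothetical integration
    ))
```

After the pipe exists, the `notification_channel` column returned by `SHOW PIPES` holds the SQS queue ARN that the bucket’s event notifications must target; until that is configured, files are not loaded automatically.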

As with the Drivers domain, the Rides domain uses Terraform to configure its data mart and allocate the necessary compute resources for creating its data products.

As more domains join the party, the platform team will continue to evaluate cross-cutting requirements and, if relevant, will bake them into the toolset supported by the baseline platform.

An example is the workflow orchestration tool Airflow, which was centrally hosted and managed in the legacy monolithic architecture. Now that there is more than one domain in the mesh, the central platform team has configured the platform so that each domain can provision its own separate Airflow instance to run its workflows. This flexibility allows domains to tailor their instances to their needs, while standardizing on a common orchestration tool ensures a unified experience and better support and maintainability.

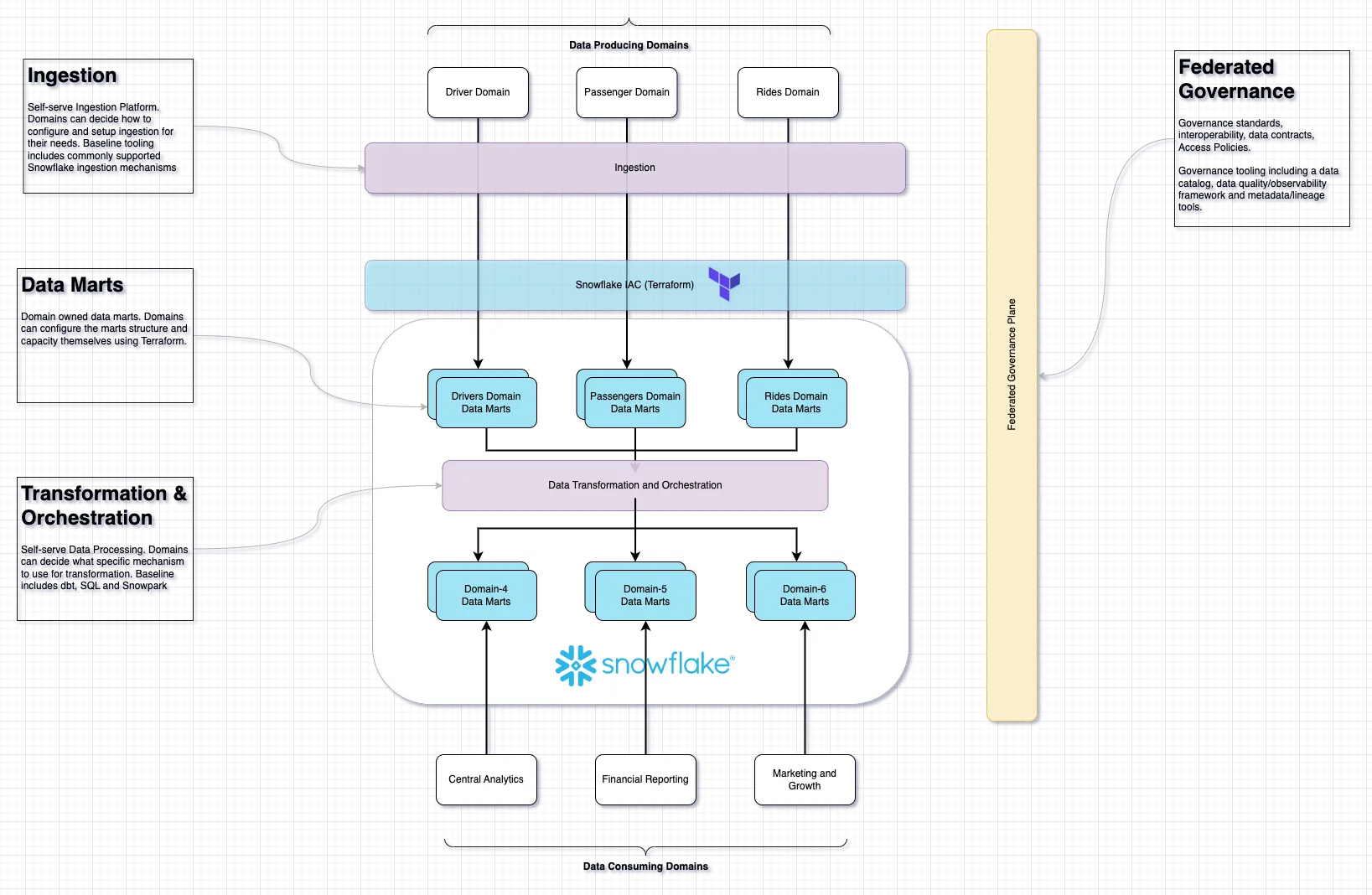

Here is how they visualize the Data Mesh when multiple data-producing and consuming domains join in over time, each domain building and publishing data products in their respective data marts.

Visualization of the Data Mesh when multiple data-producing and consuming domains joined. Image by Atlan.

Governance hardening

A strong governance architecture is essential for the domains and their products in a Data Mesh to interoperate and play well together. While the self-serve data platform gives the domains the technological underpinnings to operate as an effective mesh, a well-defined governance architecture lays out the operational rules and policies that govern the sustenance and growth of the Data Mesh.

Eventually, diverse data domains will be able to operate semi-autonomously without compromising the business value that their data products are meant to provide. They will also be able to give these data products a consistent look and feel, which makes it easier for data-consuming domains to be productive.

In the following sections let’s look at a few common aspects that are included in the governance of Data Mesh.

Interoperability of data products and creation of the Data Stewardship Committee

One concern with decentralized data products is that they can become difficult to connect and correlate with each other. Each domain may use a slightly different vocabulary to define the entities in its bounded context, which makes it difficult to agree on a unified data model.

This vocabulary overlap is quite normal even in simpler business environments. It is somewhat unavoidable, and it poses a big problem for data consumers, especially those who consume data from multiple data products. In traditional monolithic data architectures, it is often left to the central data team to identify and disambiguate these entities through documentation or training.

In a decentralized mesh, however, the governance team needs a tighter process to disambiguate these polysemes, especially for consumers who wish to combine data from multiple domains.

For example, at FastCabs, the Dispatch domain calls the event in which a passenger searches for a ride a ‘search’, while the Rides domain calls the same event a ‘ride-request’. As another example, both the Drivers and Passengers domains have an entity called “Session” that describes a contiguous chunk of user events, but each within its own domain.
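One lightweight way to record such mappings is a shared glossary that ties each domain-local term to a canonical concept. The Python sketch below is hypothetical (the `TermMapping` structure and the concept ids are illustrative, not a FastCabs or Atlan format) and encodes the two examples above: it flags terms that mean different things in different domains, and concepts that go by different names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TermMapping:
    domain: str        # domain whose data products use the term
    local_term: str    # term as it appears in that domain
    concept_id: str    # canonical business concept (hypothetical ids)


# Hypothetical entries built from the FastCabs examples.
GLOSSARY = [
    TermMapping("dispatch", "search", "ride_request"),
    TermMapping("rides", "ride-request", "ride_request"),
    TermMapping("drivers", "session", "driver_session"),
    TermMapping("passengers", "session", "passenger_session"),
]


def resolve(domain: str, term: str, glossary=GLOSSARY) -> str:
    """Translate a domain-local term into its canonical concept id."""
    for m in glossary:
        if m.domain == domain and m.local_term == term:
            return m.concept_id
    raise KeyError(f"'{term}' in domain '{domain}' is not mapped yet")


def ambiguous_terms(glossary=GLOSSARY) -> dict:
    """Same term, different concepts (e.g. 'session')."""
    concepts = {}
    for m in glossary:
        concepts.setdefault(m.local_term, set()).add(m.concept_id)
    return {t: ids for t, ids in concepts.items() if len(ids) > 1}


def aliased_concepts(glossary=GLOSSARY) -> dict:
    """Same concept, different terms (e.g. 'search' vs 'ride-request')."""
    terms = {}
    for m in glossary:
        terms.setdefault(m.concept_id, set()).add(m.local_term)
    return {c: ts for c, ts in terms.items() if len(ts) > 1}
```

Unmapped terms raise an error instead of being guessed, which gives the stewardship committee a concrete list of terms to review.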

Some interoperability issues also arise as unwanted side effects of a data product’s evolution. When data products evolve through their life cycle, the changes are captured in their respective data contracts, which in turn have a cascading effect on the other products and domains that depend on them. We will look into this in more detail in the section titled ‘Evolution of Data Products’.

FastCabs is well aware that it will face this challenge over time, so it forms a data stewardship committee to help mitigate it. The stewardship committee includes representatives from each domain, typically product owners and analytics engineers.

The committee coordinates regularly to:

- Identify and map the cross-domain polysemes

- Identify, evaluate, and steward changes in data products that have a significant footprint in terms of usage and complexity

The stewardship committee at FastCabs is meant to take a stewarding approach to governance. It acts as a governing body for interoperability concerns by recognizing gaps and ensuring that the participating domains implement the necessary solutions. It is not expected to implement the solutions itself, as doing so would reduce the autonomy of the domain teams.

Data contracts framework

Good data products define a contract or specification that enables consumers of the products to understand and use them appropriately for their needs. A data contract describes the schema of the data product, its business meaning, semantics, and how it interoperates with other data products. A good data contract includes the service level agreement (SLA) of the product as well as other quality guarantees.

The data product owners own the specifics of the data contracts but the overarching framework under which these contracts are defined, described, and validated is the responsibility of the central governance team. Data contracts will need to be documented as well as enforced in order to keep the trust of data consumers. Towards this, the governance team along with the central data platform team lays out the following:

- The scope of data contracts: For example, do the contracts include only schema or also semantics?

- The method of description: For example, are the contracts defined in programmatically readable configuration files or in some other format?

- The method of enforcement of contracts: For example, are the contracts validated in real-time, by sampling, or via another mechanism?

- The method of handling violations: For example, what happens when a data product defaults on its contractual obligations?

- The method of evolution of contracts: For example, how are backward-compatible and incompatible changes handled?

The central governance team at FastCabs has chalked out a plan to ensure that all data products include a contract that they adhere to.

At this early stage, the scope of data contracts will be limited to just the data schemas. They choose JSON Schema as the declarative language for all schemas because schemas and quality expectations are easy to define as JSON files. Also, the schema language is flexible enough to meet FastCabs’ current and future needs for data contracts.
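To make this concrete, here is what such a contract might look like for a hypothetical Drivers data product, together with a deliberately tiny validator. This is a sketch, not FastCabs’ actual library: the product name, the fields, and the `version` annotation are assumptions, and the validator covers only the `type`, `required`, `properties`, `minimum`, and `additionalProperties: false` keywords. A production setup would more likely rely on a complete JSON Schema implementation such as the Python `jsonschema` package.

```python
import json

# Hypothetical contract for a Drivers data product, version-controlled in Git.
# "version" is a custom annotation; JSON Schema validators ignore unknown keywords.
CONTRACT = json.loads("""
{
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "title": "drivers.driver_daily_stats",
  "version": "1.2.0",
  "type": "object",
  "required": ["driver_id", "stat_date", "completed_rides"],
  "properties": {
    "driver_id": {"type": "string"},
    "stat_date": {"type": "string", "format": "date"},
    "completed_rides": {"type": "integer", "minimum": 0},
    "avg_rating": {"type": ["number", "null"]}
  },
  "additionalProperties": false
}
""")

_PY_TYPES = {
    "string": str, "integer": int, "number": (int, float),
    "boolean": bool, "object": dict, "array": list, "null": type(None),
}


def _is_type(value, json_type: str) -> bool:
    # bool is a subclass of int in Python, so keep it out of numeric types.
    if json_type in ("integer", "number") and isinstance(value, bool):
        return False
    return isinstance(value, _PY_TYPES[json_type])


def validate(record: dict, schema: dict) -> list:
    """Return human-readable violations (an empty list means the record conforms)."""
    errors = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in record:
            errors.append(f"missing required field '{name}'")
    for name, value in record.items():
        spec = props.get(name)
        if spec is None:
            if schema.get("additionalProperties", True) is False:
                errors.append(f"unexpected field '{name}'")
            continue
        allowed = spec.get("type", [])
        allowed = allowed if isinstance(allowed, list) else [allowed]
        if allowed and not any(_is_type(value, t) for t in allowed):
            errors.append(f"'{name}' has type {type(value).__name__}, expected {allowed}")
        elif ("minimum" in spec and isinstance(value, (int, float))
              and not isinstance(value, bool) and value < spec["minimum"]):
            errors.append(f"'{name}' is below the minimum of {spec['minimum']}")
    return errors
```

Because the contract is plain JSON, the same file can be read by CI checks, data quality tooling, and documentation generators without translation.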

Next, the central platform team builds the necessary framework for the domain teams to

- Define the data contracts as JSON Schemas (stored and version-controlled in Git)

- Define a versioning policy (they choose Semantic Versioning) to support schema evolution

- Create a lightweight schema validation library that can be used in CI/CD workflows for schema validation

- Set up a data quality framework (using Soda) to validate the data products against the contracts
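The Semantic Versioning policy can be checked mechanically in CI. The sketch below assumes one possible consumer-centric rule set (hypothetical, not a published FastCabs policy): removing a field, changing a field’s type, or making a required field optional is breaking and needs a major bump; adding a field needs a minor bump; anything else, such as an edited description, is a patch. It compares only top-level `properties` and `required`.

```python
def required_bump(old: dict, new: dict) -> str:
    """Classify a contract change as 'major', 'minor', or 'patch'."""
    old_props, new_props = old.get("properties", {}), new.get("properties", {})
    removed = old_props.keys() - new_props.keys()
    retyped = {f for f in old_props.keys() & new_props.keys()
               if old_props[f].get("type") != new_props[f].get("type")}
    # Fields consumers could rely on before but that may now be absent.
    relaxed = set(old.get("required", [])) - set(new.get("required", []))
    if removed or retyped or relaxed:
        return "major"
    if new_props.keys() - old_props.keys():
        return "minor"
    return "patch"


def bump_version(version: str, kind: str) -> str:
    """Apply a Semantic Versioning bump to a MAJOR.MINOR.PATCH string."""
    major, minor, patch = (int(part) for part in version.split("."))
    if kind == "major":
        return f"{major + 1}.0.0"
    if kind == "minor":
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"


def declared_bump_ok(old_version: str, new_version: str, kind: str) -> bool:
    """True if the declared new version is at least the bump the change requires."""
    def as_tuple(v: str) -> tuple:
        return tuple(int(p) for p in v.split("."))
    return as_tuple(new_version) >= as_tuple(bump_version(old_version, kind))
```

In a pull request check, `old` would come from the contract on the main branch and `new` from the branch under review; the check fails when `declared_bump_ok` returns False.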

Snowflake’s metadata capabilities come in handy here. The data validation framework uses Snowflake’s INFORMATION_SCHEMA views and other metadata to check whether the products meet their specifications with respect to schema, data types, lineage, and SLAs. Paired with FastCabs’ data governance tool, Atlan, this creates a solid foundation for governance-driven data contract management.
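A minimal sketch of the schema portion of that check, assuming the column list has already been fetched from the product’s `INFORMATION_SCHEMA.COLUMNS` view (the query is shown as a string; executing it, for example through a Snowflake Python connector cursor, is left out). The database, schema, and table names, the small inline contract, and the mapping from JSON Schema types to Snowflake `DATA_TYPE` values are assumptions for illustration; lineage and SLA checks would draw on other metadata and are not covered.

```python
# Hypothetical query; unquoted Snowflake identifiers are stored in upper case.
COLUMNS_SQL = """
SELECT column_name, data_type
FROM drivers_mart.information_schema.columns
WHERE table_schema = 'PRODUCTS' AND table_name = 'DRIVER_DAILY_STATS'
ORDER BY ordinal_position
"""

# Assumed mapping from JSON Schema types to Snowflake DATA_TYPE values.
SNOWFLAKE_TYPES = {
    "string": {"TEXT"},
    "integer": {"NUMBER"},
    "number": {"NUMBER", "FLOAT"},
    "boolean": {"BOOLEAN"},
}

# Small inline contract (hypothetical) so the example is self-contained.
CONTRACT = {
    "properties": {
        "driver_id": {"type": "string"},
        "stat_date": {"type": "string", "format": "date"},
        "completed_rides": {"type": "integer"},
        "avg_rating": {"type": ["number", "null"]},
    }
}


def check_columns(rows, contract) -> list:
    """Compare (column_name, data_type) rows with the contract's properties."""
    actual = {name.lower(): data_type for name, data_type in rows}
    expected = contract["properties"]
    problems = []
    for field, spec in expected.items():
        if field not in actual:
            problems.append(f"column {field} is missing")
            continue
        types = spec["type"] if isinstance(spec["type"], list) else [spec["type"]]
        if spec.get("format") == "date":
            allowed = {"DATE"}
        else:
            allowed = set().union(*(SNOWFLAKE_TYPES[t] for t in types if t != "null"))
        if actual[field] not in allowed:
            problems.append(
                f"column {field} is {actual[field]}, expected one of {sorted(allowed)}")
    for extra in sorted(actual.keys() - expected.keys()):
        problems.append(f"column {extra} is not in the contract")
    return problems
```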

User experience

Like any other software or physical product, data products must be designed with a good user experience in mind. For data products, this means the ease with which their consumers can interpret and understand them. The principle of “don’t make me think” applies as much to data products as any other.

The governance team defines and continually evolves a common set of standards for names, data formats, types, currency codes, time zones, and such for all the data products across all FastCabs domains to enable a consistent and reliable user experience.

At the same time, the data platform team creates the necessary tooling, such as reusable templates, macros, and auditing and testing tools that can be embedded in test suites to confirm compliance. Their metadata management tool, Atlan, combines good search capabilities with Snowflake’s rich metadata to help identify areas where standards compliance is lacking.
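Standards like these are easiest to enforce when they are written as code. The sketch below checks a table’s columns against three illustrative conventions: snake_case names, `_at`-suffixed `TIMESTAMP_TZ` columns so the time zone is explicit, and a `currency_code` column next to any `_amount` column, with codes in the three-letter ISO 4217 format. The rules and function names are hypothetical examples of what a governance team might standardize, not FastCabs’ actual standards.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")
ISO_4217_FORMAT = re.compile(r"^[A-Z]{3}$")  # checks the format only, not the official code list


def lint_columns(columns: dict) -> list:
    """Check {column_name: snowflake_data_type} against the naming standards."""
    issues = []
    for name, data_type in columns.items():
        if not SNAKE_CASE.match(name):
            issues.append(f"{name}: use snake_case")
        if data_type.startswith("TIMESTAMP"):
            if not name.endswith("_at"):
                issues.append(f"{name}: timestamp columns should end in _at")
            if data_type != "TIMESTAMP_TZ":
                issues.append(f"{name}: use TIMESTAMP_TZ so the time zone is explicit")
        if name.endswith("_amount") and "currency_code" not in columns:
            issues.append(f"{name}: amount column needs a currency_code column")
    return issues


def invalid_currency_codes(values) -> list:
    """Return distinct values that are not three upper-case letters."""
    return sorted({v for v in values if not ISO_4217_FORMAT.match(v)})
```

The column dictionary could be built from the same `INFORMATION_SCHEMA.COLUMNS` rows a domain already queries, so the linter can run as one more step in a product’s test suite.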

Discoverability and documentation

As more domains and products are onboarded, the sprawl of data could create silos of information. If the data products are connected at multiple layers, one small change in one of them could mean cascading changes for all the dependent products. Coordinating this can quickly become a nightmare in organizations as large and geographically distributed as FastCabs.

To avoid this, the tooling efforts must include metadata tools with rich discovery features. Discovery tools can facilitate easy management of lineage and dependencies. Most discovery tools in the market also have sophisticated search capabilities that help data consumers to discover the most relevant data products for their needs.

In the case of FastCabs, Atlan provides rich discovery capabilities, with features like natural language search and a recommendation system.

FastCabs has chosen to deploy Atlan because it integrates well with its data platform as well as with Snowflake. Atlan’s collaboration features facilitate the discovery of data products and foster a strong culture of data democracy within the organization.

Governance tools depend heavily on metadata. Metadata includes details about the products’ purpose, schema, semantics, lineage, ownership, and other operational details such as refresh frequency. It gets captured and stored next to the data products in the data warehouse. Rich metadata support is one of the strongest features of Snowflake.

The governance team at FastCabs creates guidelines and recommendations for adding necessary metadata for all data products.

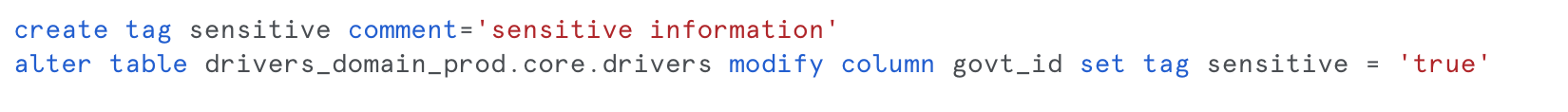

Domain teams leverage these features to add meaningful descriptions to tables and columns in their data marts in Snowflake. Where necessary, objects are tagged along with additional information on the data. For example, all sensitive data is tagged as such.

Metadata tags are searchable within Snowflake as well as via Atlan. Image by Atlan.

These metadata tags are searchable within Snowflake as well as via Atlan.
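Descriptions and tags can be applied with plain SQL (`COMMENT ON` and `ALTER TABLE ... MODIFY COLUMN ... SET TAG`). The sketch below renders those statements from a small per-table metadata spec that a domain team might keep alongside its code. The spec layout, the helper names, and the `governance.tags.sensitivity` tag are hypothetical; the tag itself would be created once, for example with the `CREATE TAG` statement in the first constant.

```python
# One-time setup owned by the governance team (hypothetical tag name and values).
CREATE_TAG_SQL = (
    "CREATE TAG IF NOT EXISTS governance.tags.sensitivity "
    "ALLOWED_VALUES 'public', 'internal', 'pii';"
)


def sql_literal(text: str) -> str:
    """Quote a string for SQL, doubling any embedded single quotes."""
    return "'" + text.replace("'", "''") + "'"


def metadata_ddl(table: str, spec: dict,
                 tag: str = "governance.tags.sensitivity") -> list:
    """Render COMMENT and SET TAG statements for one table from a metadata spec."""
    statements = [f"COMMENT ON TABLE {table} IS {sql_literal(spec['description'])};"]
    for column, meta in spec["columns"].items():
        statements.append(
            f"COMMENT ON COLUMN {table}.{column} IS {sql_literal(meta['description'])};")
        if "sensitivity" in meta:
            statements.append(
                f"ALTER TABLE {table} MODIFY COLUMN {column} "
                f"SET TAG {tag} = {sql_literal(meta['sensitivity'])};")
    return statements


if __name__ == "__main__":
    example_spec = {
        "description": "One row per active driver",
        "columns": {
            "driver_id": {"description": "Pseudonymous driver identifier"},
            "phone": {"description": "Driver's contact number", "sensitivity": "pii"},
        },
    }
    for stmt in metadata_ddl("drivers_mart.products.driver_profile", example_spec):
        print(stmt)
```

Once applied, the tags can be queried (for example through the `SNOWFLAKE.ACCOUNT_USAGE.TAG_REFERENCES` view) and surfaced in a catalog such as Atlan.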

Housekeeping

As the Data Mesh scales and proliferates, the domain teams will need to do periodic housekeeping in order to get rid of accumulated technical debt like deprecated products, unused assets, poorly configured policies, and needlessly expensive processing pipelines. Data governance tooling can read metadata from the platform components and notify the domain teams about these areas of improvement.

The governance and platform team at FastCabs collaborate to build the necessary processes, policies, and tooling for this purpose.

For example, the platform team creates a template for reporting underutilized (and possibly deprecated) tables. The report queries Snowflake’s ACCESS_HISTORY view to find tables that have not been accessed recently. The domain teams are encouraged to deploy this and other similar reports to help with housekeeping. The central governance team uses these reports as well, periodically monitoring the accrued technical debt and highlighting areas of improvement.
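A sketch of such a report, split into the query and the decision logic. The SQL flattens `BASE_OBJECTS_ACCESSED` in `SNOWFLAKE.ACCOUNT_USAGE.ACCESS_HISTORY` (a view available on Enterprise Edition and above) to get the last access time per table; the Python function then flags tables with no access inside a time window. The 90-day threshold, the function name, and the table names are assumptions, and the table inventory is assumed to come from a view such as `SNOWFLAKE.ACCOUNT_USAGE.TABLES`.

```python
from datetime import datetime, timedelta, timezone

LAST_ACCESS_SQL = """
SELECT f.value:"objectName"::STRING AS table_name,
       MAX(ah.query_start_time)     AS last_accessed
FROM snowflake.account_usage.access_history ah,
     LATERAL FLATTEN(input => ah.base_objects_accessed) f
WHERE f.value:"objectDomain"::STRING = 'Table'
GROUP BY 1
"""


def stale_tables(inventory, last_access, now=None, days=90):
    """Return (table, last_access_or_None) for tables idle longer than `days`.

    inventory: fully qualified names of tables that currently exist.
    last_access: {table_name: datetime} built from the LAST_ACCESS_SQL results.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    report = []
    for table in sorted(inventory):
        seen = last_access.get(table)
        # Tables with no recorded access in the retained history count as stale too.
        if seen is None or seen < cutoff:
            report.append((table, seen))
    return report
```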

Another key requirement is to closely manage cloud cost and budget (aka FinOps). Thanks to features like object tagging and compute resource isolation in Snowflake, FastCabs’ domain teams are able to keep an eye on their respective cloud spend and flexibly scale compute and storage consumption to stay within their respective budgets.
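As an illustration of per-domain cost tracking, the sketch below assumes that each domain runs on its own warehouses and that each warehouse carries a hypothetical `domain` tag (the warehouse-to-domain mapping could be read from `SNOWFLAKE.ACCOUNT_USAGE.TAG_REFERENCES`). Credits per warehouse come from `SNOWFLAKE.ACCOUNT_USAGE.WAREHOUSE_METERING_HISTORY`; the Python part rolls them up by domain and flags domains approaching their budget. The budgets, the 80% alert threshold, and the function names are assumptions.

```python
from collections import defaultdict

MONTH_TO_DATE_CREDITS_SQL = """
SELECT warehouse_name, SUM(credits_used) AS credits
FROM snowflake.account_usage.warehouse_metering_history
WHERE start_time >= DATE_TRUNC('month', CURRENT_DATE())
GROUP BY warehouse_name
"""


def credits_by_domain(metering, warehouse_domain):
    """Sum (warehouse_name, credits) rows per domain; untagged spend stays visible."""
    totals = defaultdict(float)
    for warehouse, credits in metering:
        totals[warehouse_domain.get(warehouse, "untagged")] += credits
    return dict(totals)


def budget_alerts(totals, budgets, threshold=0.8):
    """Return {domain: share_of_budget_used} for domains at or above the threshold."""
    alerts = {}
    for domain, used in totals.items():
        budget = budgets.get(domain)
        if budget and used >= threshold * budget:
            alerts[domain] = round(used / budget, 2)
    return alerts
```

Keeping an explicit `untagged` bucket makes warehouses that slipped through the tagging policy show up in the report instead of silently disappearing from it.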

Data privacy

A growing Data Mesh will need foolproof ways to implement compliance requirements for regulatory guidelines like GDPR and CCPA. The central governance team at FastCabs clearly lays out a data privacy framework that all the domain teams must follow in order to protect sensitive data in their respective data marts. All data owners are responsible for identifying and protecting the confidential information of their respective products.

Some of the guidelines that are included in the data privacy policies cover the following aspects:

- Cataloging sensitive data with object tags

- Using pseudonymous identifiers

- Masking sensitive data via Snowflake’s data masking features

- Implementing strict access control with regard to sensitive data throughout its lifecycle

- Periodic audit of sensitive information

- Measures to be taken in case of a breach of sensitive data

- Process for implementing ‘Right to be forgotten’ requests
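For the pseudonymous identifiers, one common technique (swapped in here as an illustration, since the policy list does not prescribe one) is keyed hashing with HMAC-SHA256: the same raw identifier and key always yield the same token, so domains can still join on it, but the token cannot be reversed without the key. The function name is hypothetical, and the key is assumed to live in a secrets manager rather than in code. Under GDPR, pseudonymized data still counts as personal data.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Deterministic, keyed pseudonym (hex-encoded HMAC-SHA256) for a raw identifier."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    demo_key = b"load-me-from-a-secrets-manager"  # placeholder; never hard-code real keys
    token = pseudonymize("passenger-42", demo_key)
    print(token == pseudonymize("passenger-42", demo_key))  # True: same input and key
```

Because every token depends on the key, rotating the key changes all pseudonyms at once, so key management belongs in the privacy policy alongside the hashing rule.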

The governance team coordinates with the platform team for making the necessary tooling available for the above. For example, when a user requests their data to be deleted, each domain must have the right mechanism to receive and service this request and send a notification of completion.
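The receive, service, and notify loop can be sketched as a coordinator that fans an erasure request out to one handler per domain and collects completion receipts. Everything here is hypothetical: the request and report types, the handler registry, and the convention that each handler returns the number of rows it deleted or anonymized in its own data mart. A real implementation would also need retries, audit logging, and a way to account for Snowflake Time Travel and Fail-safe, which keep deleted data recoverable for their retention periods.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class ErasureRequest:
    request_id: str
    subject_id: str  # pseudonymous id of the person asking to be forgotten


@dataclass
class ErasureReport:
    request_id: str
    completed: List[Tuple[str, int]] = field(default_factory=list)
    failed: Dict[str, str] = field(default_factory=dict)

    @property
    def done(self) -> bool:
        return not self.failed


Handler = Callable[[ErasureRequest], int]  # returns rows erased in that domain


def fan_out(request: ErasureRequest, handlers: Dict[str, Handler]) -> ErasureReport:
    """Send the request to every domain and record success or failure per domain."""
    report = ErasureReport(request.request_id)
    for domain, handler in handlers.items():
        try:
            report.completed.append((domain, handler(request)))
        except Exception as exc:  # record and continue so other domains still run
            report.failed[domain] = str(exc)
    return report
```

The completion notification would go out only once `done` is true; failed domains are retried rather than dropped.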

Snowflake’s row- and column-level security features provide strong support for data privacy. FastCabs domain teams use Snowflake features like Dynamic Data Masking to hide sensitive data from everyone except those with approved access. Sensitive information is tagged using Snowflake’s object tagging, and row access policies and masking policies control access to data based on specific roles.
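As a sketch of how the masking piece might be templated, the Python helper below renders a role-based masking policy and attaches it to specific columns. The policy name, the `PII_READER` role, the mask string, and the helper itself are hypothetical; `IS_ROLE_IN_SESSION` is used so that roles inheriting `PII_READER` also see clear values. Masking policies and row access policies require Snowflake Enterprise Edition or higher.

```python
def masking_ddl(policy: str, reader_role: str, columns) -> list:
    """Render a role-based masking policy and attach it to string columns.

    columns: fully qualified names such as 'db.schema.table.column'.
    """
    statements = [
        f"CREATE MASKING POLICY IF NOT EXISTS {policy}\n"
        f"  AS (val STRING) RETURNS STRING ->\n"
        f"  CASE WHEN IS_ROLE_IN_SESSION('{reader_role}') THEN val\n"
        f"       ELSE '***MASKED***' END;"
    ]
    for qualified in columns:
        table, column = qualified.rsplit(".", 1)
        statements.append(
            f"ALTER TABLE {table} MODIFY COLUMN {column} SET MASKING POLICY {policy};")
    return statements
```

Row-level restrictions follow the same pattern with `CREATE ROW ACCESS POLICY ... RETURNS BOOLEAN` and `ALTER TABLE ... ADD ROW ACCESS POLICY ... ON (column)`. Alternatively, a masking policy can be attached to a tag with `ALTER TAG ... SET MASKING POLICY`, so that every column carrying the tag is masked.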

Organization design

A mature Data Mesh calls for the formalization of roles and responsibilities. Initially, this just means extending existing roles to include data product development and implementation responsibilities within each domain.

For example, the product owner in the domain would take the end-to-end ownership of the data product definition while the engineering team would own the actual development of the data product.

Many practitioners believe that one of the most important roles in a distributed Data Mesh is that of the Analytics Engineer. Analytics Engineers design and model the data products and ensure compliance with governance and interoperability requirements, in coordination with the governance team. They also represent their domain in the central stewardship committee. Therefore, this role must be clearly represented in each domain team.

At FastCabs, the Data Analysts assigned to each domain are given the additional responsibility of Analytics Engineering in the beginning. As the mesh matures, the domain teams define the Analytics Engineer role more formally and specifically hire for this role.

Similarly, the central data platform team and the governance team reorganize themselves to align their roles and skills with their new responsibilities.

As time goes by, FastCabs business and technology will evolve. Domain teams will adapt to these changes. Some will consolidate or split and some new domains will be created. FastCabs will have to ensure that the data product ownership and support are appropriately handled with such lifecycle changes.

What role does a metadata control plane like Atlan play in strengthening the Snowflake data mesh?

On April 29, 2025, Snowflake hosted a webinar on how to build and govern data products in Snowflake. In addition to some of the topics covered above, the webinar discussed features like Semantic Models and Cortex Analyst for interacting with data products in natural language and for creating AI-enabled and AI-driven data products.

The webinar also reiterated that features like Snowflake Internal Marketplace are not, on their own, a complete solution for data sharing, and that integrating a third-party tool like Atlan works well with Snowflake. This is why Atlan and Snowflake are working closely on a deeper integration.

How does Atlan support data mesh concepts?

Atlan enables organizations to adopt data mesh across their whole data landscape, as it sits horizontally across all data systems. Atlan offers features that enable various domain-based topologies, help you create and share data as products, and help domains, teams, and individuals collaborate with more ease and reliability.

Atlan’s features of embedded collaboration, unified control plane for governance, automated lineage, and especially the close integration with Snowflake’s Horizon Catalog enable you to create the foundations for a data mesh architecture and philosophy within your organization. Let’s take a look at how Autodesk used Atlan with Snowflake to activate their data mesh.

How Autodesk activates their data mesh with Snowflake and Atlan

Autodesk, a global leader in design and engineering software and services, created a modern data platform to better support their business intelligence needs.

Contending with a massive increase in data to ingest and in demand from consumers, Autodesk’s team began executing a data mesh strategy that allows any team at Autodesk to build and own data products.

Using Atlan, 60 domain teams now have full visibility into the consumption of their data products, and Autodesk’s data consumers have a self-service interface to discover, understand, and trust these data products.

Final thoughts on Snowflake data mesh

Data Mesh can be hard to implement. It requires an org-wide mindset shift toward decentralization and product thinking. The cultural and organizational changes are often difficult to get right, and failed or poorly implemented Data Mesh initiatives are not uncommon. For this reason, critics of Data Mesh often dismiss the architecture as too abstract and impractical.

In this article, we attempted to demonstrate a reference Data Mesh implementation at a growth-stage organization with a complex business domain. Each journey toward building a Data Mesh will be unique, depending on the specific business and cultural challenges at the organization. There is no one-size-fits-all approach.

It is important, therefore, for organizations to take a step-by-step approach toward implementation and tailor the implementation to their unique needs and environment. A successful mesh implementation will borrow heavily from principles of Agile software development and follow an iterative, fail-fast approach. As we saw in our case study, it is important to have strong feedback loops at each step to enable continuous learning and course correction.

The need for the right self-service data platform cannot be overstated. The data platform must be consistent, easy to use, configurable, and scalable, and it must allow a high degree of autonomy for the diverse requirements of domain teams. Snowflake’s wide variety of features, its consistent SQL-based interface, and its remarkable support for data governance make it an ideal platform for Data Mesh.

When supplemented with an active data governance tool like Atlan, the platform becomes a strong foundation for a successful Data Mesh.

Book your personalized demo today to find out how Atlan supports data mesh concepts and how it can benefit your organization.

Snowflake data mesh: Frequently asked questions (FAQs)

1. What is Snowflake data mesh?

Snowflake Data Mesh is an architectural approach that leverages Snowflake’s AI Data Cloud to implement a decentralized, domain-oriented data management philosophy. It emphasizes scalable, self-service data platforms that allow teams to manage and share data independently while maintaining global governance.

2. Can I implement data mesh in Snowflake without a full platform overhaul?

Yes, you can incrementally adopt data mesh in Snowflake. Start by identifying a few key domains, assigning ownership, and setting up dedicated schemas or accounts. Use Secure Data Sharing and role-based access controls to test inter-domain collaboration before scaling up.

3. How do I structure domains in Snowflake for a data mesh architecture?

Domains can be mapped to Snowflake accounts, databases, or schemas depending on your organizational scale. For most mid-sized teams, creating isolated databases per domain with clear naming conventions and dedicated roles is a practical starting point.
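A minimal sketch of that starting point: one database, one warehouse, and an owner/reader role pair per domain, rendered as SQL from Python. The naming convention (`<DOMAIN>_MART`, `<DOMAIN>_WH`, `<DOMAIN>_OWNER`, `<DOMAIN>_READER`), the warehouse settings, and the helper are assumptions, and the statements need to run under roles with the relevant privileges. Teams that manage infrastructure with Terraform, as FastCabs does in the case study, would typically declare the same objects through the Snowflake Terraform provider instead.

```python
def domain_bootstrap_sql(domain: str, warehouse_size: str = "XSMALL") -> list:
    """Render SQL that gives one domain an isolated database, warehouse, and roles."""
    d = domain.upper()
    db, wh = f"{d}_MART", f"{d}_WH"
    owner, reader = f"{d}_OWNER", f"{d}_READER"
    return [
        f"CREATE ROLE IF NOT EXISTS {owner};",
        f"CREATE ROLE IF NOT EXISTS {reader};",
        f"GRANT ROLE {reader} TO ROLE {owner};",  # owners inherit read access
        f"GRANT ROLE {owner} TO ROLE SYSADMIN;",  # keep custom roles under SYSADMIN
        f"CREATE DATABASE IF NOT EXISTS {db};",
        f"GRANT OWNERSHIP ON DATABASE {db} TO ROLE {owner};",
        f"CREATE WAREHOUSE IF NOT EXISTS {wh} WAREHOUSE_SIZE = '{warehouse_size}' "
        f"AUTO_SUSPEND = 60 AUTO_RESUME = TRUE;",
        f"GRANT USAGE ON WAREHOUSE {wh} TO ROLE {owner};",
        f"GRANT USAGE ON DATABASE {db} TO ROLE {reader};",
        f"GRANT USAGE ON FUTURE SCHEMAS IN DATABASE {db} TO ROLE {reader};",
        f"GRANT SELECT ON FUTURE TABLES IN DATABASE {db} TO ROLE {reader};",
    ]
```

Giving each domain its own warehouse also keeps compute isolated and makes per-domain cost reporting straightforward.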

4. What’s the best way to publish and share data products between domains?

Use Secure Data Sharing or list curated assets on the Snowflake Marketplace (internal or external). Make sure each product includes metadata like ownership, refresh frequency, and quality metrics, among others.

5. How do I ensure consistent governance across domains in a Snowflake data mesh?

Set baseline policies at the organization level (e.g., data classification, tagging standards), then allow each domain to define its own policies within that framework. Use Horizon Catalog and object tagging to maintain visibility and auditability across all domains.

6. What role does metadata play in implementing Snowflake data mesh?

Metadata underpins the entire mesh: it governs access, search, lineage, and trust. Snowflake captures this metadata in the Horizon Catalog, but to get end-to-end visibility (especially across non-Snowflake systems), you’ll likely need a control plane that can unify metadata from across the stack.

7. How can I manage metadata and governance across Snowflake and non-Snowflake domains in a data mesh?

While Snowflake’s Horizon Catalog handles metadata within Snowflake, most modern data stacks include tools like dbt, Looker, AWS, and more. A metadata control plane like Atlan can unify metadata, policies, and ownership across all platforms, helping teams implement federated governance and discover trusted data products regardless of where they live.

Snowflake data mesh: Related reads

- Snowflake Summit 2025: How to Make the Most of This Year’s Event

- Snowflake Cortex: Everything We Know So Far and Answers to FAQs

- Snowflake Copilot: Here’s Everything We Know So Far About This AI-Powered Assistant

- Polaris Catalog from Snowflake: Everything We Know So Far

- Snowflake Cost Optimization: Typical Expenses & Strategies to Handle Them Effectively

- Snowflake Horizon for Data Governance: Here’s Everything We Know So Far

- Snowflake Data Cloud Summit 2024: Get Ready and Fit for AI

- How to Set Up a Data Catalog for Snowflake: A Step-by-Step Guide

- How to Set Up Snowflake Data Lineage: Step-by-Step Guide

- How to Set Up Data Governance for Snowflake: A Step-by-Step Guide

- Snowflake + AWS: A Practical Guide for Using Storage and Compute Services

- Snowflake X Azure: Practical Guide For Deployment

- Snowflake X GCP: Practical Guide For Deployment

- Snowflake + Fivetran: Data movement for the modern data platform

- Snowflake + dbt: Supercharge your transformation workloads

- Snowflake Metadata Management: Importance, Challenges, and Identifying The Right Platform

- Snowflake Data Governance: Native Features, Atlan Integration, and Best Practices

- Snowflake Data Dictionary: Documentation for Your Database

- Personalized Data Discovery for Snowflake Data Assets

- Snowflake Data Access Control Made Easy and Scalable

- Glossary for Snowflake: Shared Understanding Across Teams

- Snowflake Data Catalog: Importance, Benefits, Native Capabilities & Evaluation Guide

- Snowflake Data Mesh: Step-by-Step Setup Guide

- Managing Metadata in Snowflake: A Comprehensive Guide

- How to Query Information Schema on Snowflake? Examples, Best Practices, and Tools

- Snowflake Summit: Why Attend and What to Expect

- Snowflake Summit Sessions: 10 Must-Attend Sessions to Up Your Data Strategy
