Data Quality Management (DQM): The Only Ultimate Guide You'll Ever Need in 2025


Quick Answer: What is data quality management?

Data quality management (DQM) is the process of ensuring that an organization’s data is accurate, complete, consistent, reliable, and fit for its intended purpose.

It includes defining quality standards, profiling data, monitoring key metrics, remediating issues, and establishing governance to maintain trust in data over time. A strong DQM framework helps organizations make confident decisions, reduce compliance exposure, and improve operational efficiency across data pipelines.

Up next, we’ll look at DQM components, metrics, benefits and challenges. We’ll also explore the role of a unified trust engine in elevating your data quality management practices.

Table of Contents

- Data quality management explained

- What are the key components of data quality management (DQM)?

- How do you measure data quality? Key metrics that matter

- Who is responsible for data quality management (DQM)?

- How to build a data quality management framework: A 7-step process for data architects

- What are the key benefits of robust data quality management (DQM)?

- What are the common challenges in data quality management (DQM)?

- What is the role of metadata in improving data quality management (DQM)?

- How does a unified trust engine strengthen data quality management (DQM)?

- What are some best practices to follow for effective data quality management (DQM)?

- Data quality framework: In summary

- Data quality management: Frequently asked questions (FAQs)

- Data quality management: Related reads

Data quality management explained

Data Quality Management (DQM) is the discipline of managing data throughout its lifecycle to ensure it is accurate, consistent, complete, timely, and relevant to business needs.

DQM goes beyond fixing errors in data. Its purpose is to prevent bad data from entering your pipelines, monitor quality in real time, and define clear ownership and accountability for data assets.

Key aspects of DQM typically include:

- Data profiling to assess quality dimensions like completeness or uniqueness

- Data validation and rules enforcement to catch errors at ingestion

- Issue remediation workflows to route and fix problems quickly

- Quality metrics tracking for visibility across teams and time

- Governance frameworks to define standards, ownership, and escalation paths
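As a concrete illustration of rules enforcement at ingestion, the Python sketch below checks each incoming record against a small rule set and quarantines failures together with the names of the rules they broke. The field names (`customer_id`, `email`, `country`) and the rules themselves are hypothetical; real pipelines usually express such rules in a dedicated validation or data quality tool.

```python
import re
from typing import Callable

# A rule is a predicate over one record; True means the record passes.
Rule = Callable[[dict], bool]

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical ingestion rules for a customer feed.
RULES: dict[str, Rule] = {
    "customer_id_present": lambda r: bool(r.get("customer_id")),
    "email_format": lambda r: bool(EMAIL_PATTERN.match(r.get("email") or "")),
    # Length check only; a real rule would look the code up in ISO 3166-1.
    "country_is_iso2": lambda r: len(r.get("country") or "") == 2,
}


def validate_batch(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split a batch into accepted records and quarantined (record, failed_rule_names) pairs."""
    accepted: list[dict] = []
    quarantined: list[tuple[dict, list[str]]] = []
    for record in records:
        failures = [name for name, rule in RULES.items() if not rule(record)]
        if failures:
            quarantined.append((record, failures))
        else:
            accepted.append(record)
    return accepted, quarantined


batch = [
    {"customer_id": "C1", "email": "ana@example.com", "country": "FR"},
    {"customer_id": "", "email": "not-an-email", "country": "France"},
]
accepted, quarantined = validate_batch(batch)
```

Quarantining records rather than silently dropping them preserves exactly what the remediation workflow needs: which record failed, and which rules it broke.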

Why does DQM matter?

Gartner highlights that data quality is usually one of the goals of effective data management and data governance. However, it is often treated as an afterthought, which undermines decision-making.

Melody Chien, Senior Director Analyst at Gartner, ties data quality directly to the quality of decision making:

“Good quality data provides better leads, better understanding of customers and better customer relationships. Data quality is a competitive advantage that D&A leaders need to improve upon continuously.”

Gartner estimates that poor data quality costs organizations an average of $12.9 million a year. DQM is essential for building trust in data, powering accurate analytics, and reducing the downstream cost of bad information.

What are the key components of data quality management (DQM)?

Data quality management (DQM) involves processes, technologies, and methodologies that ensure data accuracy, consistency, and business relevance.

The key components of any data quality management framework include:

- Data profiling

- Data cleansing

- Data monitoring

- Data governance

- Metadata management

Let us understand these components in detail.

1. Data profiling

Data profiling is the initial, diagnostic stage in the data quality management lifecycle. It involves scrutinizing your existing data to understand its structure, irregularities, and overall quality.

Specialized tools provide insights through statistics, summaries, and outlier detection. This stage is crucial for identifying any errors, inconsistencies, or anomalies in the data.

The insights gathered serve as a roadmap for subsequent data-cleansing efforts. Without effective data profiling, you risk treating the symptoms of poor data quality rather than addressing the root causes.

Essentially, profiling sets the stage for all other components of a data quality management system. It is the preliminary lens that provides a snapshot of the health of your data ecosystem.
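A minimal profiling pass can be sketched in plain Python. The example below assumes rows arrive as dictionaries and computes, per column, the row count, null rate, and distinct count, plus min, max, and outliers for numeric columns using a fence of 1.5 times the interquartile range (IQR), one common rule of thumb. Dedicated profiling tools compute far richer statistics; the column names here are illustrative.

```python
import statistics
from typing import Any


def profile_column(values: list[Any]) -> dict[str, Any]:
    """Summarize one column: volume, completeness, cardinality, and numeric outliers."""
    non_null = [v for v in values if v is not None]
    null_count = len(values) - len(non_null)
    profile: dict[str, Any] = {
        "count": len(values),
        "null_count": null_count,
        "null_rate": null_count / len(values) if values else 0.0,
        "distinct_count": len(set(non_null)),
    }
    numeric = [v for v in non_null if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if len(numeric) >= 4:
        # IQR fence: values more than 1.5 * IQR outside the quartiles are flagged as outliers.
        q1, _, q3 = statistics.quantiles(numeric, n=4, method="inclusive")
        fence = 1.5 * (q3 - q1)
        profile["min"] = min(numeric)
        profile["max"] = max(numeric)
        profile["outliers"] = [v for v in numeric if v < q1 - fence or v > q3 + fence]
    return profile


def profile_table(rows: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Profile every column seen in any row; a missing key counts as a null."""
    columns = sorted({key for row in rows for key in row})
    return {col: profile_column([row.get(col) for row in rows]) for col in columns}


ages = [34, 29, 41, None, 38, 35, 240, 31, None, 36]
rows = [{"customer_id": f"C{i}", "age": age} for i, age in enumerate(ages)]
rows[-1]["customer_id"] = "C8"  # simulate a duplicated ID
report = profile_table(rows)
```

Here the profile surfaces leads worth investigating before any cleansing starts: a 20% null rate in `age`, an implausible age of 240, and one duplicated customer ID (nine distinct values across ten rows).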

2. Data cleansing

Data cleansing is the remedial phase that follows data profiling. It involves the correction or elimination of detected errors and inconsistencies in the data to improve its quality.

This process is crucial for maintaining the accuracy and reliability of data sets. Various methods like machine learning algorithms, rule-based systems, and manual curation are employed to clean data.

Cleansing ensures that all data adheres to defined formats and standards, which makes it easier to integrate and analyze. The task also involves removing duplicate records to maintain data integrity.

It’s the phase where actionable steps are taken to improve data based on the insights gathered during profiling. Proper data cleansing forms the basis for accurate analytics and informed decision-making.
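The sketch below shows two typical rule-based cleansing steps: standardizing formats (whitespace and capitalization in names, case in emails, digits-only phone numbers) and then removing duplicates by keeping the first record for each standardized email. The formatting standards and the email-based matching key are assumptions for illustration; production deduplication often needs fuzzy matching and explicit survivorship rules.

```python
import re


def standardize(record: dict) -> dict:
    """Apply illustrative formatting standards to one customer record."""
    # Collapse internal whitespace and title-case the name.
    name = " ".join((record.get("name") or "").split()).title()
    # Emails are compared case-insensitively, so store them trimmed and lower-cased.
    email = (record.get("email") or "").strip().lower()
    # Keep digits only so "+33 (1) 23-45" and "33 1 2345" compare equal.
    phone = re.sub(r"\D", "", record.get("phone") or "")
    return {**record, "name": name, "email": email, "phone": phone}


def deduplicate(records: list[dict], key: str = "email") -> list[dict]:
    """Keep the first record for each non-empty key value; records with an empty key are all kept."""
    seen: set[str] = set()
    result: list[dict] = []
    for record in records:
        value = record.get(key)
        if value:
            if value in seen:
                continue  # a later duplicate of a key we already kept
            seen.add(value)
        result.append(record)
    return result


raw = [
    {"name": "  ana   MARTIN ", "email": "Ana@Example.com ", "phone": "+33 (1) 23-45"},
    {"name": "Ana Martin", "email": "ana@example.com", "phone": "33 1 2345"},
    {"name": "bo li", "email": "bo@example.com", "phone": ""},
]
clean = deduplicate([standardize(r) for r in raw])
```

The two raw Ana Martin records only collapse into one because standardization runs first; deduplicating the raw values would have kept both. Python's `str.title()` is also a blunt instrument (it turns "McDonald" into "Mcdonald"), so real name standardization needs more care.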

3. Data monitoring

Data monitoring is the continuous process of ensuring that your data remains of high quality over time. It picks up where data cleansing leaves off, regularly checking the data to ensure it meets the defined quality benchmarks.

Advanced monitoring systems can even detect anomalies in real time, triggering alerts for further investigation. This ongoing vigilance ensures that data doesn’t deteriorate in quality, which is essential in dynamic business environments.

Monitoring can also facilitate automated quality checks, which saves time and human resources. It serves as a continuous feedback loop to data governance policies, potentially leading to updates and refinements in the governance strategy. In essence, data monitoring acts as the guardian of data quality, ensuring long-term consistency and reliability.
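Two of the most common automated monitors are volume checks and freshness checks. The sketch below flags today's row count when it sits more than three standard deviations from recent history (a simple z-score test) and flags a table whose last load is older than 24 hours. The thresholds and the shape of the inputs are assumptions; seasonal workloads usually need baselines that account for patterns such as day of week.

```python
import statistics
from datetime import datetime, timedelta, timezone


def volume_anomaly(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Z-score test: flag `today` if it is more than z_threshold standard deviations from the mean.

    `history` needs at least two observations for a sample standard deviation.
    """
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean  # perfectly flat history: any change is unusual
    return abs(today - mean) / stdev > z_threshold


def is_stale(last_loaded: datetime, now: datetime, max_age: timedelta) -> bool:
    """Freshness check against a timeliness benchmark."""
    return now - last_loaded > max_age


def run_checks(history: list[int], today_count: int, last_loaded: datetime, now: datetime) -> list[str]:
    """Run both monitors and return human-readable alerts (an empty list means healthy)."""
    alerts: list[str] = []
    if volume_anomaly(history, today_count):
        alerts.append(f"volume anomaly: {today_count} rows vs recent mean {statistics.fmean(history):.0f}")
    if is_stale(last_loaded, now, max_age=timedelta(hours=24)):
        alerts.append(f"stale data: last load at {last_loaded.isoformat()}")
    return alerts


now = datetime(2025, 1, 15, 9, 0, tzinfo=timezone.utc)
history = [10_120, 9_980, 10_050, 10_200, 9_900, 10_010, 10_090]  # last 7 daily row counts
print(run_checks(history, 4_300, now - timedelta(hours=30), now))   # both monitors fire
print(run_checks(history, 10_030, now - timedelta(hours=2), now))   # healthy: []
```

In a real deployment, the alert strings would be routed to the owning team instead of printed.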

4. Data governance

Data governance provides the overarching framework and rules for data management. It involves policy creation, role assignment, and ongoing compliance monitoring to ensure that data is handled in a consistent and secure manner.

Governance sets the criteria for data quality and lays out the responsibilities for various roles like data stewards or quality managers. These policies guide how data should be collected, stored, accessed, and used, thereby affecting all other stages of data quality management.

Regular audits are often part of governance to ensure that all procedures and roles are effective in maintaining data quality. It’s the scaffolding that provides structure to your data quality management initiatives. Without effective governance, even the most sophisticated profiling, cleansing, and monitoring efforts can become disjointed and ineffective.
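Governance policies are easier to enforce and audit when they are written down in machine-readable form. The sketch below declares a quality rule together with its accountable owner and severity, and derives who to notify on failure from a small escalation table. The rule, asset, team handles, and severity levels are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical escalation policy: who is notified, in addition to the owner, per severity.
ESCALATION: dict[str, list[str]] = {
    "low": [],
    "high": ["data-quality-analysts"],
    "critical": ["data-quality-analysts", "governance-lead"],
}


@dataclass(frozen=True)
class QualityRule:
    """A governed rule: what is checked, on which asset, who is accountable, and how severe a breach is."""
    name: str
    asset: str
    owner: str     # accountable data steward
    severity: str  # must be a key of ESCALATION


def route_failure(rule: QualityRule) -> list[str]:
    """Return who to notify when `rule` fails: the owner first, then the escalation path."""
    if rule.severity not in ESCALATION:
        raise ValueError(f"unknown severity {rule.severity!r} on rule {rule.name!r}")
    return [rule.owner, *ESCALATION[rule.severity]]


revenue_rule = QualityRule("revenue_not_null", "finance.orders", "@finance-steward", "critical")
print(route_failure(revenue_rule))
```

Keeping the escalation table separate from individual rules means a policy change is a one-line edit that an audit can review, rather than a change scattered across pipelines.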

5. Metadata management

“Activating metadata supports automation of tasks that align to the quality dimensions of accessibility, timeliness, relevance and accuracy.” - Gartner on the role of metadata in ensuring data quality

Metadata management deals with data about data, offering context that aids in understanding the primary data’s structure, origin, and usage. This component is vital for decoding what each piece of data actually means and how it relates to other data within the system.

Effective metadata management allows for the tracking of data lineage, which is crucial for debugging issues or maintaining compliance with regulations like GDPR.

Metadata also plays a significant role in data integration processes, helping match data from disparate sources into a cohesive whole. It makes data more searchable and retrievable, enhancing the efficiency of data storage and usage.

In effect, metadata management enriches the value of the data by adding layers of meaning and context. It may not be the most visible part of data quality management, but it’s often the most essential for long-term sustainability and compliance.
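Lineage is essentially a directed graph of assets, which is what makes impact analysis possible. The sketch below stores hypothetical lineage edges as an adjacency list and walks it breadth-first to list every downstream asset affected by an upstream issue. Catalog tools typically build this graph from query logs and pipeline metadata; here it is hard-coded for illustration.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets built directly from it.
LINEAGE: dict[str, list[str]] = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.exec_kpis"],
    "marts.customer_ltv": ["model.churn_score"],
}


def downstream_impact(asset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk returning every asset downstream of `asset`, nearest first."""
    seen: set[str] = set()
    impacted: list[str] = []
    queue = deque(lineage.get(asset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # an asset reachable by two paths (or via a cycle) is reported once
        seen.add(current)
        impacted.append(current)
        queue.extend(lineage.get(current, []))
    return impacted


# An issue in staging.orders reaches two marts, a dashboard, and an ML model.
print(downstream_impact("staging.orders", LINEAGE))
```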

How do you measure data quality? Key metrics that matter

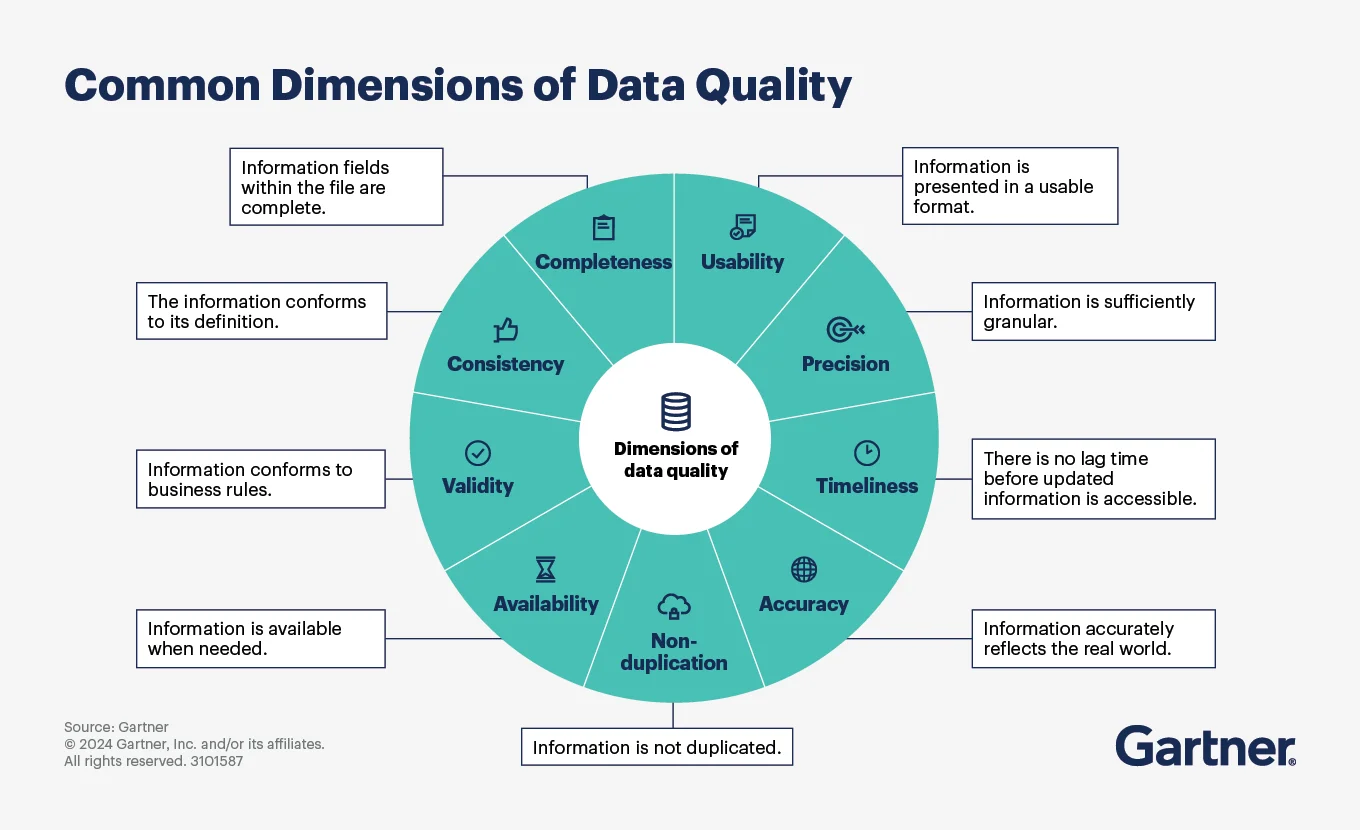

Measuring data quality requires assessing it against a standardized set of dimensions. Gartner outlines nine core dimensions that help organizations evaluate whether their data is fit for purpose:

- Completeness: Are all required fields filled? Incomplete data—like missing customer contact details—can derail processes and decisions.

- Usability: Is the data presented in a format that makes it useful? Even accurate data loses value if it’s hard to understand or apply.

- Precision: Is the data sufficiently granular? Broad or vague values (e.g., “Europe” instead of “France”) can reduce analytical relevance.

- Timeliness: Is the data available when needed, without lag? Stale or delayed data leads to outdated reports and missed opportunities.

- Accuracy: Does the data correctly represent the real-world object or event? Incorrect values—like wrong prices or IDs—create downstream errors.

- Non-duplication (Uniqueness): Are there redundant or duplicate entries? Duplication inflates volumes, confuses systems, and distorts metrics.

- Availability: Can users access the data when they need it? Downtime or limited access creates bottlenecks in critical workflows.

- Validity: Does the data conform to required formats and business rules? Invalid entries—like phone numbers in name fields—signal poor controls.

- Consistency: Is the same data point represented the same way across systems? Inconsistencies cause confusion and integration issues.

Common dimensions of data quality, according to Gartner - Source: Gartner.
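Several of these dimensions reduce to simple ratios that can be computed automatically. The sketch below scores completeness, uniqueness, validity (against a regular expression), and timeliness on a small set of rows; the field names, the email pattern, and the one-day freshness window are assumptions. Dimensions such as usability or precision depend more on business judgment and are harder to express as a single formula.

```python
import re
from datetime import datetime, timedelta


def _present(rows: list[dict], column: str) -> list:
    """Non-empty values of `column` (None and "" count as missing)."""
    return [r[column] for r in rows if r.get(column) not in (None, "")]


def completeness(rows: list[dict], column: str) -> float:
    """Share of rows where `column` is present and non-empty."""
    return len(_present(rows, column)) / len(rows)


def uniqueness(rows: list[dict], column: str) -> float:
    """Distinct non-empty values over all non-empty values; 1.0 means no duplicates."""
    values = _present(rows, column)
    return len(set(values)) / len(values) if values else 1.0


def validity(rows: list[dict], column: str, pattern: str) -> float:
    """Share of non-empty values that fully match a format rule."""
    values = _present(rows, column)
    return sum(1 for v in values if re.fullmatch(pattern, v)) / len(values) if values else 1.0


def timeliness(rows: list[dict], column: str, now: datetime, max_age: timedelta) -> float:
    """Share of rows whose timestamp is within `max_age` of `now`."""
    return sum(1 for r in rows if now - r[column] <= max_age) / len(rows)


now = datetime(2025, 6, 1, 12, 0)
rows = [
    {"id": "A1", "email": "a@x.com", "updated": now - timedelta(hours=2)},
    {"id": "A2", "email": "", "updated": now - timedelta(days=3)},
    {"id": "A2", "email": "b@x", "updated": now - timedelta(hours=5)},
    {"id": "A4", "email": "c@x.com", "updated": now - timedelta(hours=1)},
]
scorecard = {
    "completeness(email)": completeness(rows, "email"),
    "uniqueness(id)": uniqueness(rows, "id"),
    "validity(email)": validity(rows, "email", r"[^@\s]+@[^@\s]+\.[a-z]{2,}"),
    "timeliness(updated)": timeliness(rows, "updated", now, timedelta(days=1)),
}
for dimension, score in scorecard.items():
    print(f"{dimension:<22}{score:.0%}")
```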

Who is responsible for data quality management (DQM)?

Effective Data Quality Management (DQM) requires cross-functional coordination across technical, operational, and business teams. Clear roles and ownership are essential to maintain accuracy, consistency, and trust in data.

Gartner’s Jason Medd, a Director Analyst, calls it a team sport:

“Data is a team sport, so CDAOs should form special interest groups who can benefit from DQ improvement, communicate the benefits and share best practices around other business units.”

Key roles include:

- Data Architect: Designs the overall data architecture with quality as a core principle. They ensure data lineage, model integrity, and metadata integration are part of the system from the ground up.

- Data Steward: Owns data domains from a business perspective. Stewards define data standards, resolve quality issues, and collaborate with analysts and engineers to ensure alignment between business requirements and data outputs.

- Data Engineer: Develops and maintains infrastructure to support scalable, reliable data flows. They are responsible for implementing automated checks and integrating data quality rules into the pipeline.

- ETL Developer: Builds and maintains data pipelines that move, transform, and load data. They enforce validation logic, remove duplicates, and ensure that cleansed, high-quality data flows into downstream systems.

- Data Quality Analyst: Specializes in profiling, validating, and monitoring data across systems. They define quality rules, analyze root causes of issues, and work closely with data stewards and engineers to improve trust metrics.

- Business Analyst: Defines what “good data” looks like in the context of business use cases. They help identify blind spots in quality and report issues that impact reporting, insights, or customer experience.

- Compliance & Governance Lead: Ensures that data quality processes align with regulatory frameworks. They track auditability, enforce retention and consent rules, and oversee policy compliance across data assets.

Together, these roles ensure that data quality is a shared responsibility embedded across the data lifecycle, from ingestion to consumption.

How to build a data quality management framework: A 7-step process for data architects

A data architect plays a crucial role in setting the blueprint for a data quality management framework. The planning and building process integrates best practices, technological tools, and company-specific needs into a cohesive strategy.

The steps include:

- Assess the current state – what data exists, where it’s stored, what its current quality is, which tools are in use, and which workflows it powers

- Define objectives and metrics – the equivalent of a “business requirements document” for the framework, specifying what is to be achieved

- Identify stakeholders and roles – the ‘users’ of the data quality framework and their roles and responsibilities

- Create a data governance policy – the overarching rules and guidelines, much like a constitution, setting the standards for data quality

- Develop a phased implementation plan – each phase can focus on specific objectives, reducing risks and supporting course correction along the way

- Conduct training and awareness programs

- Monitor, review, and iterate for continuous improvement
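The "Define objectives and metrics" step works best when objectives are stated as explicit, measurable targets. As a sketch under assumed asset names and numbers, the example below records a target score per asset and quality dimension, then reports how far each measured score falls short, treating an objective with no measurement as a full gap.

```python
# Hypothetical objectives agreed with business owners: target score per (asset, dimension).
OBJECTIVES: dict[tuple[str, str], float] = {
    ("crm.customers", "completeness"): 0.98,
    ("crm.customers", "uniqueness"): 0.995,
    ("finance.invoices", "timeliness"): 0.95,
}


def objective_gaps(measured: dict[tuple[str, str], float]) -> dict[tuple[str, str], float]:
    """Return the shortfall for each unmet objective; unmeasured objectives count as a full gap."""
    gaps: dict[tuple[str, str], float] = {}
    for key, target in OBJECTIVES.items():
        score = measured.get(key, 0.0)  # no measurement yet is treated as a score of zero
        if score < target:
            gaps[key] = round(target - score, 4)
    return gaps


measured = {
    ("crm.customers", "completeness"): 0.991,
    ("crm.customers", "uniqueness"): 0.97,
}
print(objective_gaps(measured))
```

Treating a missing measurement as a failure is a deliberate choice: an objective nobody measures should show up in the "Monitor, review, and iterate" loop rather than pass silently.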

What are the key benefits of robust data quality management (DQM)?

Given the increasing reliance on data for decision-making, strategic planning, and customer engagement, having a robust DQM model can be a significant competitive advantage.

These benefits include:

- Improved decision-making: Organizations with an optimal DQM model can trust their data, leading to better, more informed decisions. This is comparable to having a “true north” in navigation; you know where you stand and how to reach your goals effectively.

- Regulatory compliance: DQM helps meet requirements for accuracy, traceability, and completeness under regulations like GDPR, HIPAA, or BCBS 239.

- Enhanced customer experience: High-quality data allows for better personalization and service delivery, which in turn improves customer satisfaction and loyalty.

- Operational efficiency: Poor data quality can result in inefficiencies, requiring manual data cleansing and reconciliation efforts. An optimal DQM framework streamlines these processes, leading to significant time and cost savings. This efficiency acts as the “oil” that keeps the organizational machine running smoothly.

- Strategic advantage: Accurate data supports innovation and strategic initiatives, allowing organizations to anticipate market changes and adapt swiftly.

- Risk mitigation: Bad data can lead to flawed insights and poor decisions, exposing organizations to various types of risks, including financial and reputational.

What are the common challenges in data quality management (DQM)?

Despite its importance, DQM is hard to get right. Here are some persistent issues:

- Volume and velocity of data: Today’s organizations generate and ingest massive volumes of data from websites, apps, IoT devices, and external sources. Managing quality at this scale—especially in real time—is resource-intensive and technically complex.

- Accuracy and consistency issues: Inconsistent formatting, outdated entries, and human errors across teams and tools lead to data discrepancies. Without robust validation and reconciliation processes, these issues snowball into poor decision-making and broken analytics.

- Siloed systems and diverse data sources: Organizations typically operate across multiple systems—CRMs, ERPs, data lakes, cloud warehouses, and APIs. Aligning formats, identifiers, and semantics across such a fragmented ecosystem is a major challenge for data quality.

- Data cleaning and processing overhead: Cleansing large datasets—removing duplicates, standardizing fields, and correcting errors—is labor-intensive. Without automation, teams get bogged down fixing data rather than using it.

- Real-time quality enforcement: In fast-moving environments like fraud detection or real-time personalization, data must be validated and monitored instantly. Most traditional tools aren’t designed for this level of speed and automation.

- Low data literacy among employees: If business teams lack the knowledge to interpret or flag bad data, quality issues go unnoticed. DQM must be supported by training and cultural shifts—not just tooling.

- Lack of ownership: Data quality often falls between teams. Without defined stewards, issues go unresolved.

- Evolving tools and techniques: New technologies (e.g., AI models, graph databases, reverse ETL tools) require updated quality checks and governance policies. Keeping DQM strategies current with evolving data architectures is a continuous effort.

- Missing metadata context: If you don’t know how data is produced or used, you can’t track or trust its quality effectively.

The last point on metadata is crucial for ensuring the success of data quality management (DQM). Let’s see why in the next section.

What is the role of metadata in improving data quality management (DQM)?

Gartner states that data professionals often underestimate the importance of metadata in ensuring data quality.

Without clear metadata — who owns a dataset, what it means, where it came from, when it was updated — even the cleanest-looking data can’t be trusted.

“Effective metadata management provides necessary context about data that can be utilized to mitigate shortcomings in data accuracy, better manage perceptions of poor data quality and enable AI-ready data.” - Gartner on why data architects must focus on metadata for data quality

Metadata management gives context to your data:

- It tells you what the data represents (definitions, tags, business glossaries)

- It shows where the data came from and how it’s used (lineage and usage metrics)

- It clarifies who’s responsible (ownership, access, stewardship)

- It reveals data relationships across models, pipelines, and domains

By managing metadata actively, you can turn fragmented data into connected, trustworthy assets. That’s what powers modern DQM, and sets the stage for automation, anomaly detection, and AI-driven quality workflows.
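The sketch below turns the context described above (what an asset means, who owns it, where it came from, and when it was last updated) into a simple check that reports which pieces are missing, regardless of how clean the asset's values look. The `AssetMetadata` structure, its fields, and the one-day freshness window are hypothetical simplifications of what a metadata catalog records.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AssetMetadata:
    """Illustrative subset of the context a catalog keeps about one data asset."""
    name: str
    description: str = ""                              # what the data means
    owner: Optional[str] = None                        # who is accountable
    upstream: list[str] = field(default_factory=list)  # where it came from
    last_updated: Optional[datetime] = None            # when it last changed


def trust_gaps(meta: AssetMetadata, now: datetime, max_age: timedelta = timedelta(days=1)) -> list[str]:
    """List missing context that makes an asset hard to trust, however clean its values look."""
    gaps: list[str] = []
    if not meta.description:
        gaps.append("no business definition")
    if meta.owner is None:
        gaps.append("no owner")
    if not meta.upstream:
        gaps.append("no lineage")
    if meta.last_updated is None or now - meta.last_updated > max_age:
        gaps.append("stale or unknown freshness")
    return gaps


now = datetime(2025, 6, 1, 12, 0)
orders = AssetMetadata("marts.orders", "One row per confirmed order", "@sales-steward",
                       ["staging.orders"], now - timedelta(hours=3))
orders_copy = AssetMetadata("tmp.orders_copy")
print(trust_gaps(orders, now))       # []
print(trust_gaps(orders_copy, now))  # all four gaps
```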

Next, let’s see how a unified, metadata-led trust engine can help improve data quality management.

How does a unified trust engine strengthen data quality management (DQM)?

Overcoming modern data quality challenges requires a connected approach — one that unifies metadata, automation, and contextual insight across the data estate. That’s where a unified trust engine for AI like Atlan plays a transformative role.

Atlan acts as the connective tissue across your stack, helping you integrate with upstream data quality tools, and combine discovery, governance, and quality in a single control plane for one trusted view of data health.

Here’s how Atlan strengthens data quality management (DQM):

- Shift from reactive to proactive quality management: Atlan’s Data Quality Studio integrates seamlessly with Snowflake and other platforms to unify quality signals (tests, issues, owners, and documentation) in one place. Instead of scrambling to investigate anomalies after the fact, data teams get real-time alerts with 360-degree context on ownership, lineage, and downstream impact.

- Automate monitoring and anomaly detection: With support for tools like Soda, Monte Carlo, and Great Expectations, Atlan lets you centralize quality checks and automate anomaly detection — without having to toggle between platforms. It pulls in alerts and metadata automatically and links them back to specific assets, so your team always knows what broke, where, and who to notify.

- Operationalize quality ownership and resolution: When quality issues occur, Atlan routes them directly into your team’s daily workflows, like Slack, Jira, or BI tools. Issues are no longer buried in disconnected logs; they show up as rich, contextualized tickets with links to affected assets and owners.

- Enforce trust through metadata policies: Atlan helps you enforce policies through metadata tags — e.g., auto-masking fields flagged as PII or limiting access to tables with low freshness scores. This creates an environment where trust is enforced automatically through lineage, classification, and policy-based controls.

- Provide visibility and traceability: Atlan offers column- to dashboard-level lineage, so data teams can visualize how errors in upstream transformations affect reports, models, or KPIs. That’s especially critical for compliance and audit readiness.

- Track quality at a glance: Monitor coverage, failures, and business impact from a single Reporting Center.

- Promote trust across the business: Through Atlan’s Trust Signals (such as freshness, documentation, popularity, and ownership), data consumers get confidence indicators before using an asset. Meanwhile, data contracts help in formalizing quality expectations to build trust between data producers and consumers.
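To show the general idea behind tag-driven policies such as auto-masking PII, here is a generic Python sketch. It is not Atlan's API: the tag names, the masking format, and the `can_see_pii` flag are assumptions. Columns tagged `PII` in a hypothetical catalog are masked for callers without PII access, while untagged columns pass through unchanged.

```python
from typing import Any

# Hypothetical column tags, as a metadata catalog might record them.
COLUMN_TAGS: dict[str, set[str]] = {
    "customer_id": set(),
    "email": {"PII"},
    "phone": {"PII"},
    "lifetime_value": set(),
}


def mask_value(value: Any) -> str:
    """Hide a value, keeping its last two characters visible only when it is longer than 4 characters."""
    text = str(value)
    keep = 2 if len(text) > 4 else 0
    return "*" * (len(text) - keep) + text[len(text) - keep:]


def apply_policies(row: dict[str, Any], tags: dict[str, set[str]], can_see_pii: bool) -> dict[str, Any]:
    """Mask every PII-tagged column unless the caller has PII access; untagged columns pass through."""
    if can_see_pii:
        return dict(row)
    return {
        column: mask_value(value) if "PII" in tags.get(column, set()) else value
        for column, value in row.items()
    }


row = {"customer_id": "C1", "email": "ana@example.com", "phone": "3312345", "lifetime_value": 420}
print(apply_policies(row, COLUMN_TAGS, can_see_pii=False))
```

Because the policy keys off tags rather than column names, tagging a new column as PII is enough to protect it without changing query or pipeline code. Note that this sketch lets columns absent from the catalog through unmasked; a stricter design would deny by default.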

What are some best practices to follow for effective data quality management (DQM)?

Improving data quality requires consistent strategy, stakeholder alignment, and automated enforcement. According to Gartner, organizations that implement structured data quality programs can see measurable gains in efficiency, compliance, and trust.

Here are key best practices:

- Prioritize data domains with the highest business impact, such as customer data, financial data, or regulatory reporting.

- Establish shared data quality metrics, such as completeness, accuracy, timeliness, and validity, and link them to business goals.

- Assign clear ownership of data quality for critical data assets, naming the responsible stewards, analysts, architects, and product owners.

- Automate quality checks wherever possible, using tools like Atlan to enforce quality policies through dynamic tag-based rules and lineage-driven checks.

- Embed data quality into daily operations for early issue detection and continuous improvement.

- Build issue resolution into data workflows with collaboration features, automated alerts, and audit trails.

- Track quality trends over time, using dashboards to highlight where issues recur and where progress is happening.

- Use metadata to drive smarter quality programs, because quality depends on context.

By aligning your data quality strategy to these principles, you move beyond reactive fixes to build a system of trust that scales across your data ecosystem.

Data quality framework: In summary

Data quality management is a crucial function that supports an organization’s efforts in decision-making, compliance, and strategic planning. It offers a structured, repeatable way to monitor, measure, and improve trust in data across its lifecycle and across teams.

Effective DQM is not a one-time effort but an ongoing process that requires continuous monitoring and adjustment. It’s about building a shared foundation of metadata, ownership, governance, and real-time signals that enable better decisions and fewer downstream risks.

With a metadata-powered trust engine like Atlan, organizations can embed quality into daily workflows, automate issue detection and resolution, and maintain confidence in the data fueling their operations, AI, and analytics.

Data quality management: Frequently asked questions (FAQs)

1. What is data quality management (DQM)?

Data quality management is the practice of ensuring your data is accurate, complete, consistent, timely, and fit for purpose. It includes data profiling, cleansing, monitoring, governance, and metadata management to maintain trust over time.

2. Why is DQM important for modern businesses?

Without strong DQM, decisions based on faulty data can lead to financial loss, customer churn, and compliance violations. DQM helps prevent these issues and improves confidence in reporting, AI models, and day-to-day operations.

3. What’s the difference between data governance and data quality management?

Data governance defines the policies and roles around data, while DQM enforces those standards through processes like validation, monitoring, and remediation. Governance is the blueprint; DQM is the implementation.

4. Who is responsible for data quality?

DQM is a shared responsibility across roles: data architects design quality into the system, stewards set and enforce standards, engineers and ETL developers build and monitor pipelines, and analysts help flag business-critical issues.

5. How can metadata improve data quality?

Metadata provides essential context (source, lineage, and ownership) that helps teams understand, troubleshoot, and trust the data. Without metadata, even clean data lacks visibility and traceability.

6. What are some key metrics for data quality?

Common dimensions include completeness, accuracy, consistency, uniqueness, timeliness, validity, availability, integrity, and relevance. Each metric helps evaluate whether data is trustworthy and usable.

7. How does Atlan support data quality management?

Atlan acts as a metadata control plane that integrates with tools like Snowflake, dbt, and Soda. It automates profiling, connects quality issues with ownership and lineage, embeds alerts in workflows, and helps enforce trust through policies and metadata tags.

Data quality management: Related reads

- Data Quality Explained: Causes, Detection, and Fixes

- Data Quality Framework: 9 Key Components & Best Practices for 2025

- Data Quality Measures: Best Practices to Implement

- Data Quality Dimensions: Do They Matter?

- Resolving Data Quality Issues in the Biggest Markets

- Data Quality Problems? 5 Ways to Fix Them

- Data Quality Metrics: Understand How to Monitor the Health of Your Data Estate

- 9 Components to Build the Best Data Quality Framework

- How To Improve Data Quality In 12 Actionable Steps

- Data Integrity vs Data Quality: Nah, They Aren’t Same!

- Gartner Magic Quadrant for Data Quality: Overview, Capabilities, Criteria

- Data Management 101: Four Things Every Human of Data Should Know

- Data Quality Testing: Examples, Techniques & Best Practices in 2025

- Atlan Launches Data Quality Studio for Snowflake, Becoming the Unified Trust Engine for AI
