How to Build a Data Catalog: An 8-Step Guide to Get You Started


Building a data catalog might seem purely technical, yet its essence lies in empowering data practitioners to swiftly find, trust, understand, and use their data.

At Atlan, we consider a data catalog to be “an active data asset repository that acts as the context, control, and collaboration plane for your data estate. It is no longer a mere inventory, glossary, or dictionary of your data.”


This article helps you understand how to build a data catalog from scratch, addressing both business and technical imperatives, followed by eight steps to build a data catalog. We’ll also look at the common challenges faced and ways to tackle them.

Table of contents

- How to build a data catalog: 5 essential prerequisites

- How to build a data catalog: 3 deployment options to consider

- How to build a data catalog: 8 steps to follow

- Key challenges in building a data catalog

- A better alternative: Using an enterprise data catalog

- Related reads

How to build a data catalog: 5 essential prerequisites

Start by considering the following prerequisites before embarking on your journey to build and deploy a data catalog:

- Define your business requirements. Examples include enabling self-service for data consumers, decreasing data storage and computational costs, and improving compliance.

  - For Snapcommerce, one of the business needs was standardizing and sharing their data definitions across the organization.

  - Meanwhile, for Foundry, the requirement was a self-serve platform that people from outside the data team could use to know what data they have, and where to find it.

- Define your technical requirements. The data catalog you build should be compatible with the tools your data and business teams use.

- Document data catalog use cases. Top use cases include root cause analysis, data compliance management, proactive data issue alerting, and cost optimization.

- Initiate an exercise to identify, compile, and document all the essential data sources, pipeline tools, BI platforms, and other tools in your data stack. The data catalog you build should work for the data sources you have and the data sources you plan to have in the future.

- Outline and document a collaborative data governance framework, establishing standards, definitions, policies, and processes for adding data assets to the data catalog.

Also, read → Data catalog requirements in 2024: A comprehensive guide

Having reviewed the prerequisites, let’s move on to technology considerations for building and deploying data catalogs.

How to build a data catalog: 3 deployment options to consider

You can take three paths to building a data catalog for your organization:

- Build your own data cataloging tool

- Use an open-source tool (Amundsen, DataHub, OpenMetadata, Magda, or Metacat) and integrate it with your technology stack

- Use an off-the-shelf enterprise data cataloging tool

Building a data catalog tool is a significant undertaking, so some organizations adopt a hybrid approach: they build their own solution, then purchase and integrate standalone features or tools to extend its functionality.

Alternatively, they buy a data catalog tool that covers most of their fundamental needs and is open by default. Then they build custom features or integrations catering to their unique use cases.

Also, read → Build vs. buy data catalog: What should factor into your decision-making?

In this article, we’ll explore how to build your own data cataloging tool from scratch. If you’re comparing open-source and off-the-shelf alternatives, you can do a deep dive by checking out our piece on data catalog comparison.

How to build a data catalog: 8 steps to follow

If you’re building a data catalog from scratch, here are eight steps you should follow:

- List and identify ways of accessing your data sources

- Come up with a rough technical architecture

- Choose a tech stack to use

- Plan & implement the data cataloging tool

- Set up a business glossary for proper context

- Ensure data security, privacy, integrity, and compliance with appropriate encryption, masking, and authorization mechanisms

- Run a proof of concept to evaluate your data catalog’s capabilities

- Explore ways to automate data classification and tagging, documentation, lineage mapping, etc., to scale your cataloging efforts

Let’s roll up our sleeves and delve into the specifics of each step.

1. List and identify ways of accessing your data sources

When building a data catalog for your business, you must consider your company’s current and future infrastructure and data sources.

The goal is to cover the maximum number of sources with the minimum number of interfaces. However, as your business grows, you must keep adding new ways to connect to new and existing data sources.

So, start by identifying:

- Connectivity: Understand how to access each data source. For a relational database, you can provide a username and password and then access the internal data catalog. Other sources might expose their metadata only through APIs (REST, GraphQL, gRPC, WebSocket, SOAP, etc.).

- Metadata format: The response format will differ depending on the type of connectivity you choose. For instance, some data sources may allow you to make an API call and store the response as a CSV. Documenting metadata formats will help you set up a metadata layer that homogenizes the metadata format as much as possible.

- Push, pull, or both architectures: The three prominent architectures used in various data cataloging tools are pull-based, push-based, and a combination of both approaches. Each architecture has unique features regarding metadata ingestion, data freshness, and scalability.

At this stage, you should also evaluate the impact on data sources. Connecting to a data source and extracting or receiving metadata puts additional load on it. If you aren’t careful when reading metadata from a relational database or a data warehouse, you can drastically degrade its read-write performance. That’s because these systems depend on their metadata tables for everyday operations, and metadata queries can contend for locks on those tables.
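The inventory described above can be kept in a small, machine-readable form. The following is a minimal sketch rather than a prescribed format: the `DataSource` class, its field names, and the example sources are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class IngestionMode(Enum):
    PULL = "pull"   # the catalog polls the source on a schedule
    PUSH = "push"   # the source sends metadata changes to the catalog
    BOTH = "both"


@dataclass(frozen=True)
class DataSource:
    """One entry in a hypothetical data source inventory."""
    name: str
    connectivity: str        # e.g. "sql", "rest", "graphql"
    metadata_format: str     # e.g. "rows", "json", "csv"
    ingestion_mode: IngestionMode


# Illustrative entries only; replace with your own sources.
inventory = [
    DataSource("orders_db", "sql", "rows", IngestionMode.PULL),
    DataSource("survey_tool", "rest", "json", IngestionMode.PULL),
    DataSource("event_stream", "grpc", "json", IngestionMode.PUSH),
]


def interfaces_needed(sources):
    """Return the distinct connectivity types the catalog must support."""
    return sorted({s.connectivity for s in sources})


print(interfaces_needed(inventory))
```

Grouping sources by connectivity type gives a rough count of the interfaces you would have to build, which ties back to the goal of covering the most sources with the fewest interfaces.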

2. Come up with a rough technical architecture

Even the most basic data catalog must have the ability to:

- Retrieve and store metadata from various heterogeneous data sources

- Search and discover data sources and the metadata linked to those data sources

- See the relationships between different data sources and entities

So, the next step is to design a solution that accommodates your most essential data sources.

The design should outline the various components you’ll use to develop the data cataloging tool. It should also cover communication protocols and frequencies.

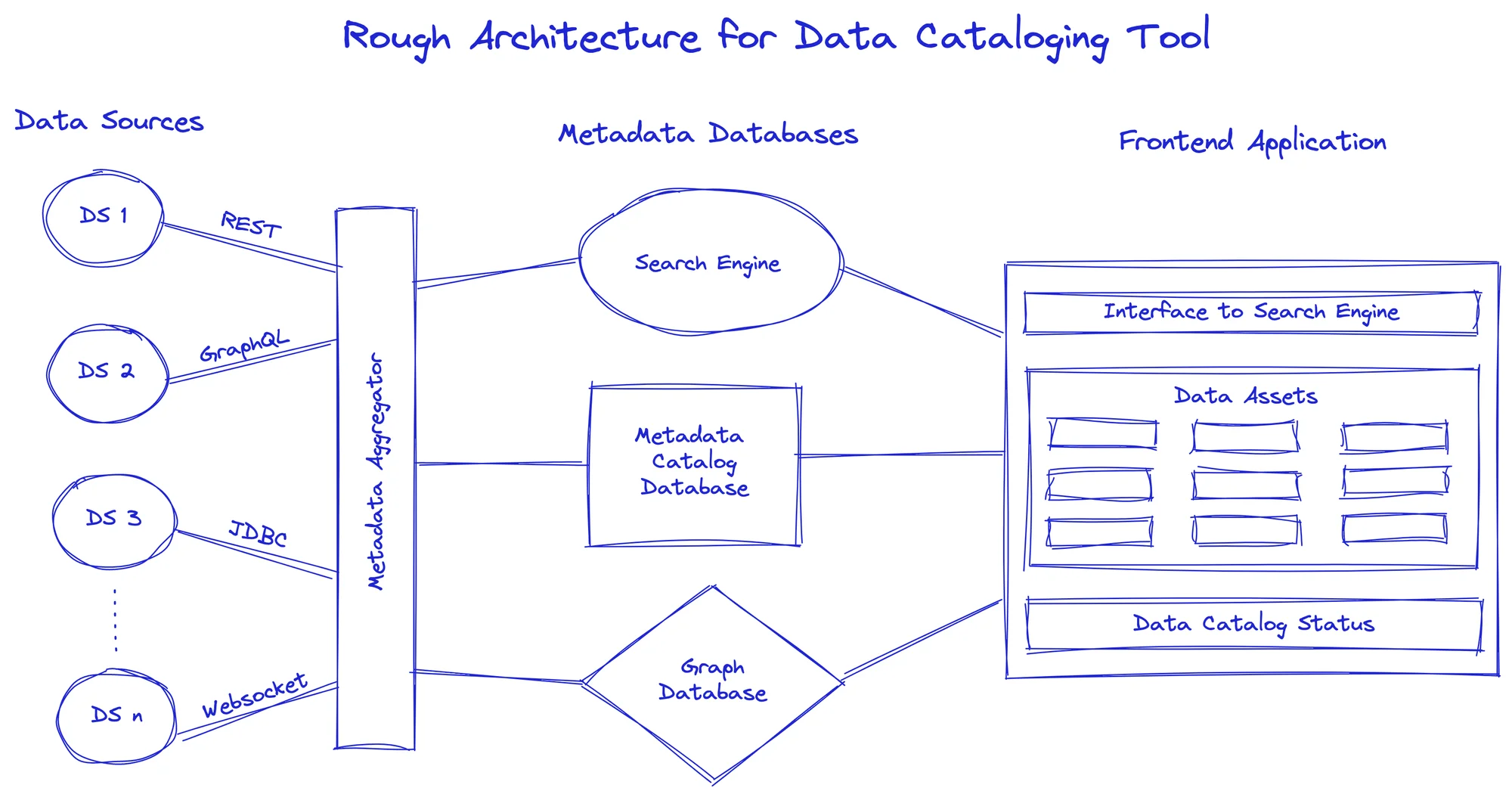

Here’s an example for you to use as reference.

Outlining a rough architecture for a data catalog - Image by Atlan.

In the above diagram, you’ll notice that the components have been roughly divided into three layers:

- The source layer deals with data sources and a metadata service that directly interacts with those sources. This layer also includes numerous communication protocols.

- The metadata layer handles different storage structures to enable various features and access patterns on top of the collected metadata. It can include:

  - Graph database: Stores the linkages between entities within and across data sources

  - Search engine: Enables full-text search functionality for all your data sources, including entity names, field names, entity descriptions, field descriptions, and more

- The frontend application is how it all comes together and gets presented to the business for consumption in data discovery, schema evolution, etc.
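To make the three layers more concrete, here is a minimal in-memory sketch of how they might hand data to one another. All class and function names (`SourceConnector`, `StaticConnector`, `MetadataStore`, `render_asset`) are hypothetical, and a real implementation would replace each piece with the components in your own design.

```python
from typing import Dict, Iterable, List, Protocol


class SourceConnector(Protocol):
    """Source layer: anything that can return metadata records."""
    def fetch_metadata(self) -> Iterable[dict]: ...


class StaticConnector:
    """Stand-in connector that returns hard-coded records."""
    def __init__(self, records: List[dict]):
        self._records = records

    def fetch_metadata(self) -> Iterable[dict]:
        return list(self._records)


class MetadataStore:
    """Metadata layer: keeps records keyed by a qualified name."""
    def __init__(self) -> None:
        self._assets: Dict[str, dict] = {}

    def ingest(self, connector: SourceConnector) -> int:
        """Pull every record from a connector and return how many were stored."""
        count = 0
        for record in connector.fetch_metadata():
            self._assets[record["qualified_name"]] = record
            count += 1
        return count

    def get(self, qualified_name: str) -> dict:
        return self._assets[qualified_name]


def render_asset(store: MetadataStore, qualified_name: str) -> str:
    """Frontend layer: format one asset for display."""
    asset = store.get(qualified_name)
    return f"{asset['qualified_name']} ({asset['type']})"


store = MetadataStore()
store.ingest(StaticConnector([{"qualified_name": "sales.orders", "type": "table"}]))
print(render_asset(store, "sales.orders"))
```

Keeping the connector behind a small interface means new source types can be added without touching the metadata or frontend layers.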

3. Choose a tech stack to use

The core components of your data catalog may require one or more different technologies to operate. So we’ve listed technologies for each core component from which you can choose.

💡 Note: This step doesn’t always follow sequentially after the previous one, as most engineers and developers already have their familiar tech stacks in their minds when designing these solutions — and that’s quite alright.

Choosing a programming language and development framework

This depends on your team’s proficiency. Some popular choices are Python, Java, Golang, and JavaScript.

Storing metadata in the traditional form usually requires a relational database with a data model that unifies how metadata from different data sources is stored. You can use MySQL, PostgreSQL, MariaDB, or a key-value store like AWS DynamoDB.

As you must extract data from the data sources to populate the metadata database, ensuring its consistency and reliability is crucial. You can use a task workflow engine like Airflow or Prefect.
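As a rough illustration of a unified metadata model, the sketch below stores assets and their fields in two relational tables. It uses Python’s built-in SQLite purely so it runs anywhere; the table and column names are assumptions, not a recommended schema.

```python
import sqlite3

# In-memory SQLite is used only so the sketch runs anywhere.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE asset (
    id          INTEGER PRIMARY KEY,
    source_name TEXT NOT NULL,      -- which system the asset came from
    asset_type  TEXT NOT NULL,      -- 'table', 'view', 'file', ...
    name        TEXT NOT NULL,
    description TEXT,
    UNIQUE (source_name, name)
);
CREATE TABLE field (
    id        INTEGER PRIMARY KEY,
    asset_id  INTEGER NOT NULL REFERENCES asset(id),
    name      TEXT NOT NULL,
    data_type TEXT NOT NULL
);
""")

# Register one asset and its fields, regardless of where it was extracted from.
cur = conn.execute(
    "INSERT INTO asset (source_name, asset_type, name) VALUES (?, ?, ?)",
    ("warehouse", "table", "sales.orders"),
)
asset_id = cur.lastrowid
conn.executemany(
    "INSERT INTO field (asset_id, name, data_type) VALUES (?, ?, ?)",
    [(asset_id, "order_id", "integer"), (asset_id, "amount", "decimal")],
)

rows = conn.execute(
    "SELECT a.name, f.name, f.data_type "
    "FROM asset a JOIN field f ON f.asset_id = a.id ORDER BY f.id"
).fetchall()
print(rows)
```

Because every source maps into the same two tables, downstream features such as search and lineage only need to understand one shape of metadata.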

Developing the search functionality

If you rely on a relational database for full-text search, you must decide up front which columns of your metadata data model to index, because full-text indexes are built on specific columns. This can be limiting, which is why a dedicated search engine such as Elasticsearch is a common choice for this component.
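To show the idea behind searching across every text attribute rather than a fixed set of columns, here is a toy inverted index in plain Python. It is only a sketch of the concept; the function names and sample assets are hypothetical, and a search engine would add ranking, stemming, and much more.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters."""
    return [t for t in re.split(r"[^a-z0-9]+", text.lower()) if t]


def build_index(assets):
    """Map each token to the set of asset names whose text contains it."""
    index = defaultdict(set)
    for asset in assets:
        # Index every text attribute, not just a fixed set of columns.
        for value in asset.values():
            if isinstance(value, str):
                for token in tokenize(value):
                    index[token].add(asset["name"])
    return index


def search(index, query):
    """Return assets that match every token in the query."""
    token_sets = [index.get(t, set()) for t in tokenize(query)]
    return sorted(set.intersection(*token_sets)) if token_sets else []


assets = [
    {"name": "sales.orders", "description": "One row per customer order"},
    {"name": "crm.customers", "description": "Customer master data"},
]
index = build_index(assets)
print(search(index, "customer order"))
```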

Understanding data asset linkages for lineage mapping

Searching for data isn’t enough. The real value of data is only derived after looking at it up close and from afar. You need to understand what a given entity is, the type of data it contains, and how that entity is linked to various other entities across data sources.

That’s where graph databases can help.
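The kind of question a graph database answers here can be sketched with a plain adjacency list. This is a stand-in for illustration, not how a graph database is queried; the asset names and the `impacted_assets` helper are hypothetical.

```python
from collections import defaultdict, deque

# Each edge reads "upstream feeds downstream". Asset names are illustrative.
edges = [
    ("raw.orders", "staging.orders"),
    ("staging.orders", "marts.revenue"),
    ("raw.customers", "marts.revenue"),
    ("marts.revenue", "dashboard.kpis"),
]

downstream = defaultdict(list)
for src, dst in edges:
    downstream[src].append(dst)


def impacted_assets(start):
    """Breadth-first walk of everything derived from `start`."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        node = queue.popleft()
        for nxt in downstream.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


print(impacted_assets("raw.orders"))
```

Reversing the edge direction would give the upstream view, which is what root cause analysis typically needs.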

Building a frontend application

Many JavaScript frameworks, either in standalone mode or in combination with another programming language like Python, can do wonders for your frontend application.

To better inform your technology choices, we’ve surveyed some of the open-source data cataloging tools out there to see how their technology stacks are designed:

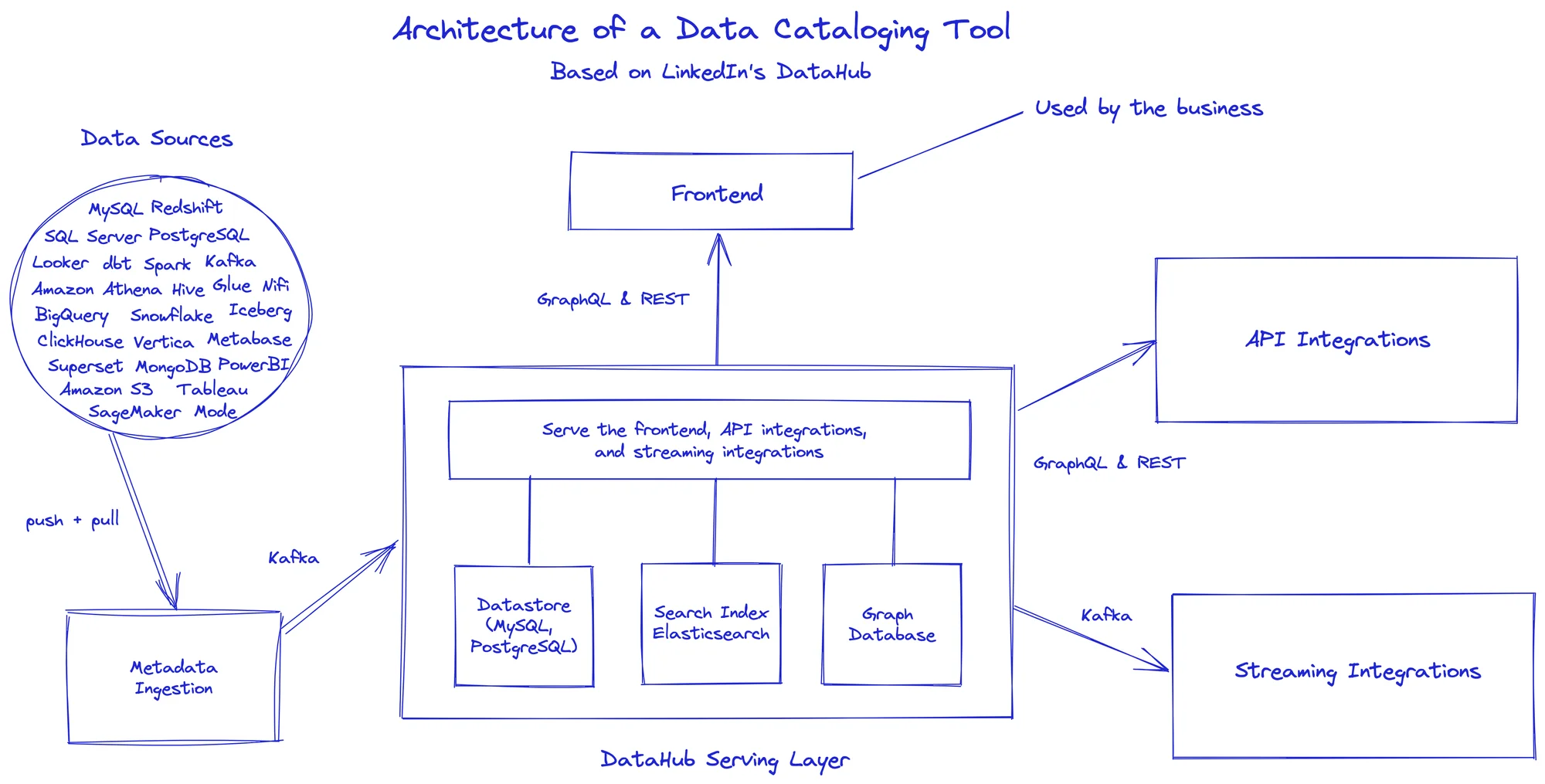

- DataHub: Neo4j, Elasticsearch, Flask, Airflow, React, Redux

- Amundsen: Neo4j, Elasticsearch, Flask, React, Airflow

- OpenMetadata: MySQL, Elasticsearch, Airflow

- Atlas: Kafka, Ranger, JanusGraph, HBase, Solr

- Magda: Elasticsearch, PostgreSQL, React, Passport, Kubernetes

Designing your tech stack: An example

A data catalog can use a database like MySQL or PostgreSQL to store the metadata from various sources in a homogenized manner.

A graph database like Neo4j or JanusGraph can help serve workloads where business users try to understand how different entities across various data layers are related to and derived from one another.

Meanwhile, Elasticsearch can serve all the full-text search query workloads to help businesses search for entities, columns, definitions, etc., directly from a search bar on the front end.

Here’s an illustration you can use as a reference.

Designing the architecture of a data catalog - Image by Atlan.

4. Plan & implement the data cataloging tool

As you’re starting from scratch, develop a plan to implement the most basic features required for the data catalog to function.

In this section, we’re going to look at metadata extraction from different types of data sources, such as:

- Relational databases and data warehouses

- Data lakes and lakehouses

- Third-party APIs

- Other technical metadata catalogs

Let’s get started.

Metadata extraction: Relational databases and data warehouses

Since most data sources (relational databases, data warehouses, etc.) maintain an internal data catalog, we’ll see how to get the data out of that database-specific local catalog into your unified data catalog.

Access to metadata from relational databases and data warehouses is straightforward. They have several system-maintained schemas, including the information_schema, containing tables with technical metadata. You can access the information_schema using SQL queries or an SDK.

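Here are some example queries you can use as reference. They follow MySQL’s information_schema; columns such as update_time, create_time, and enforced vary across databases.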

-- Get new and updated tables by schema
SELECT *
FROM information_schema.tables
WHERE table_schema = 'table_schema'
  AND COALESCE(update_time, create_time) > '2020-01-01 00:00:00';

-- Get all views (with definitions) by schema
SELECT *
FROM information_schema.views
WHERE table_schema = 'table_schema';

-- Get all the columns of a table
SELECT *
FROM information_schema.columns
WHERE table_schema = 'table_schema'
  AND table_name = 'table_name';

-- Get all column-level write privileges on a table
SELECT grantee, table_schema, table_name, column_name,
       privilege_type, is_grantable
FROM information_schema.column_privileges
WHERE table_schema = 'table_schema'
  AND table_name = 'table_name'
  AND privilege_type IN ('INSERT', 'UPDATE', 'DELETE');

-- Get all the primary and unique key constraints defined on a table
SELECT constraint_schema, constraint_name, table_schema,
       table_name, constraint_type, enforced
FROM information_schema.table_constraints
WHERE constraint_schema = 'constraint_schema'
  AND table_name = 'table_name'
  AND constraint_type IN ('PRIMARY KEY', 'UNIQUE');
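The same extract-and-normalize pattern can be sketched end to end in Python. Note the swap: this example uses SQLite, which ships with Python, so it reads SQLite’s own internal catalog (`sqlite_master` and `PRAGMA table_info`) rather than information_schema. The `extract_table_metadata` function and the output record shape are hypothetical.

```python
import sqlite3


def extract_table_metadata(conn):
    """Read table and column metadata from SQLite's internal catalog."""
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    records = []
    for (table_name,) in tables:
        # PRAGMA table_info returns (cid, name, type, notnull, dflt_value, pk).
        # PRAGMA arguments cannot be bound as parameters, hence the quoting.
        columns = conn.execute(f'PRAGMA table_info("{table_name}")').fetchall()
        records.append({
            "table": table_name,
            "columns": [{"name": c[1], "type": c[2], "primary_key": bool(c[5])}
                        for c in columns],
        })
    return records


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL)")
metadata = extract_table_metadata(conn)
print(metadata)
```

For MySQL or another engine, the body of the function would run queries like the ones above through that database’s driver, while the returned record shape could stay the same.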

Metadata extraction: Data lakes and lakehouses

While databases and data warehouses handle highly structured data, data lakes store data in portable file formats like CSV, JSON, Parquet, Avro, ORC, Delta, etc.

To get the metadata from the data lake, you must extract or infer the schema information from the files. Popular tools for reading from data lakes include Python libraries such as pandas and PySpark, and SQL engines such as Athena and Snowflake.

Here’s how you would extract the complete schema from a JSON file (you can use something like csv-schema to extract schema from CSV files).

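# Get sample data from a remote server (emulating an object store)
import pandas as pd
import requests

url = 'https://bit.ly/3NSAZTd'

def get_schema_from_json(url):
    # Download the JSON document and fail fast on HTTP errors
    response = requests.get(url)
    response.raise_for_status()
    # Load the records under the 'data' key into a DataFrame
    df = pd.DataFrame(response.json()['data'])
    # Build a Table Schema description (field names and types)
    schema = pd.io.json.build_table_schema(df)
    return schema

get_schema_from_json(url)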

This approach is efficient only for one-off schema fetches on small files, since it loads the entire file into memory.

In other cases, you’ll be better off reading only a portion of the file. To read a portion of files in columnar format, you’ll need tools like Apache Arrow or Apache Spark. Here’s an example using native PySpark read methods to get the schema of both JSON and Parquet files.

# Using PySpark to read JSON files from a data lake
from pyspark.sql import SparkSession

# Reuse the active session or start a new one
spark = SparkSession.builder.getOrCreate()

json_path = "/path/to/file.json"
jsonDf = spark.read.json(json_path)
jsonDf.printSchema()

# Using PySpark to read Parquet files from a data lake
parquet_path = "/path/to/file.parquet"
parquetDf = spark.read.parquet(parquet_path)
parquetDf.printSchema()

Alternatively, you can use something like DESCRIBE TABLE EXTENDED to get a more detailed view of the entity metadata stored in your data lake. Here’s an example.

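# Get extended table information using Spark SQL
parquet_path = "/path/to/file.parquet"
parquetDf = spark.read.parquet(parquet_path)
parquetDf.createOrReplaceTempView('parquet_table')
spark.sql("DESCRIBE TABLE EXTENDED parquet_table").show(truncate=False)

# The PARTITION clause needs a partitioned table registered in the catalog;
# Spark does not accept it for temporary views. 'catalog_table' is a placeholder.
spark.sql("DESCRIBE TABLE EXTENDED catalog_table PARTITION (column_1 = 'some_value')").show(truncate=False)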
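For line-delimited JSON, the idea of reading only a portion of a file can also be sketched without Spark. The example below infers field types from the first few records only. The `infer_schema` helper and its `sample_size` parameter are hypothetical, and sampling will miss fields that first appear later in the file.

```python
import io
import json
from itertools import islice


def infer_schema(lines, sample_size=100):
    """Infer field types from the first `sample_size` JSON Lines records."""
    schema = {}
    for line in islice(lines, sample_size):
        if not line.strip():
            continue
        for key, value in json.loads(line).items():
            schema.setdefault(key, set()).add(type(value).__name__)
    # A field seen with more than one type is reported as a sorted list.
    return {k: sorted(v) for k, v in schema.items()}


# A file object works the same way; StringIO keeps the sketch self-contained.
sample = io.StringIO('{"id": 1, "name": "a"}\n{"id": 2, "name": null}\n')
print(infer_schema(sample, sample_size=2))
```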

Metadata extraction: Third-party APIs

Most businesses use external products that specialize in specific business areas, such as digital marketing, customer satisfaction, and surveys. Examples include SurveyMonkey, Segment, and Mixpanel. Sometimes, you must work with poorly maintained APIs whose provider changes the API contract without notice.

To avoid getting stuck with such issues, it’s best to track changes in the source schema right from the source.
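One simple way to track such changes is to snapshot the response schema on every ingestion run and compare it with the previous snapshot. The following is a minimal sketch under that assumption; `diff_schemas` and the sample snapshots are hypothetical.

```python
def diff_schemas(previous, current):
    """Compare two {field_name: type} snapshots of an API response schema."""
    added = sorted(set(current) - set(previous))
    removed = sorted(set(previous) - set(current))
    retyped = sorted(
        name for name in set(previous) & set(current)
        if previous[name] != current[name]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


# Hypothetical snapshots taken on two consecutive ingestion runs.
yesterday = {"id": "int", "email": "str", "score": "int"}
today = {"id": "int", "email_address": "str", "score": "float"}

changes = diff_schemas(yesterday, today)
print(changes)
```

A non-empty result could trigger an alert or pause the ingestion job before the changed contract affects downstream consumers.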

Metadata extraction: Native cloud-platform data catalogs

If you already have your infrastructure on a specific cloud platform, you can use their native data catalog solution to extract the metadata from some data sources. For instance, you can use AWS Glue’s metadata crawler to populate data from sources like RDS, DynamoDB, Redshift, S3, etc.

Meanwhile, GCP, Microsoft, and Databricks have their native data cataloging solutions that you can integrate with your data cataloging tool for some of the metadata extraction functionality.
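As an illustration of integrating a native catalog, the sketch below flattens a response shaped like the one returned by AWS Glue’s GetTables operation into simpler records. The `normalize_glue_tables` function and the output shape are hypothetical, and the sample response is hand-written rather than real output.

```python
def normalize_glue_tables(response):
    """Flatten a Glue GetTables-style response into catalog records."""
    records = []
    for table in response.get("TableList", []):
        descriptor = table.get("StorageDescriptor", {})
        records.append({
            "database": table.get("DatabaseName"),
            "table": table["Name"],
            "location": descriptor.get("Location"),
            "columns": [
                {"name": col["Name"], "type": col.get("Type")}
                for col in descriptor.get("Columns", [])
            ],
            "partition_keys": [k["Name"] for k in table.get("PartitionKeys", [])],
        })
    return records


# Hand-written sample shaped like a GetTables response; not real output.
sample_response = {
    "TableList": [{
        "Name": "orders",
        "DatabaseName": "sales",
        "StorageDescriptor": {
            "Location": "s3://example-bucket/orders/",
            "Columns": [{"Name": "order_id", "Type": "bigint"}],
        },
        "PartitionKeys": [{"Name": "order_date", "Type": "string"}],
    }]
}
print(normalize_glue_tables(sample_response))
```

In practice, the response would come from a call such as `boto3.client("glue").get_tables(DatabaseName="sales")`, which needs AWS credentials and pagination handling that this sketch leaves out.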

It’s important to note that technical metadata stores, such as the AWS Glue data catalog, don’t offer the best way for business users to consume metadata for discovery, lineage, etc.

That’s why crawling and aggregating technical metadata is only one piece of the data discovery puzzle. The real challenge is to make a product that your business can use.

Also, read → What is business metadata?

5. Set up a business glossary

A business glossary helps everyone in your organization understand, access, and apply common definitions to business terms. Since business teams are responsible for building and maintaining a business glossary, you must make the glossary easy to set up, use, and maintain.

For instance, Nestor Jarquin, Global Data & Analytics Lead at Aliaxis, explains how a business glossary helped people in the company understand “which data assets are regional or global, at a glance, explore discrepancies between definitions and terms across regions, and discuss details with term owners.”

At Atlan, we see the business glossary as an organization’s second brain. It’s built on a knowledge graph that lets you create connections between data, definitions, and domains, mirroring how your business works.
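If you are building the glossary yourself, a term can start as a small record that links a definition to its owner, domain, and data assets. This is only a sketch of one possible shape; the `GlossaryTerm` fields and the sample terms are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GlossaryTerm:
    """A hypothetical glossary entry linking a definition to data assets."""
    name: str
    definition: str
    owner: str
    domain: str
    linked_assets: List[str] = field(default_factory=list)
    related_terms: List[str] = field(default_factory=list)


def terms_for_asset(terms, asset_name):
    """Return the names of all terms linked to a given asset."""
    return sorted(t.name for t in terms if asset_name in t.linked_assets)


glossary = [
    GlossaryTerm("Net Revenue", "Gross revenue minus refunds and discounts.",
                 owner="finance-team", domain="Finance",
                 linked_assets=["marts.revenue"], related_terms=["Gross Revenue"]),
    GlossaryTerm("Active Customer", "A customer with an order in the last 90 days.",
                 owner="growth-team", domain="Sales",
                 linked_assets=["crm.customers", "marts.revenue"]),
]
print(terms_for_asset(glossary, "marts.revenue"))
```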

Dive deeper → How to create a business glossary in 9 steps

6. Ensure data security, privacy, integrity, and compliance

If you get an off-the-shelf data catalog, it comes with built-in capabilities for managing compliance (global and local), lineage and provenance, security controls, privacy features, and data quality management.

However, when building a catalog from scratch, you’ll need to develop these capabilities. These include implementing granular access controls, encryption and masking mechanisms, generating detailed compliance reports, maintaining thorough audit trails, and deploying data quality monitoring dashboards.

You must also evaluate each tool’s security features and compliance standards and make sure they align with the overall governance and compliance requirements.

Before you build these, you must work with the relevant stakeholders to establish data governance policies and processes. They’re central to implementing a collaborative data governance program, which will ensure data security, privacy, integrity, compliance, and accountability.
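As a small illustration of access controls combined with masking, the sketch below hides sensitive columns from roles that are not allowed to see them. The policy, role names, and helper functions are hypothetical. The unsalted digest is used only to keep the example short; a production system would more likely use keyed hashing, tokenization, or encryption.

```python
import hashlib

# Hypothetical policy: which roles may see each sensitivity level unmasked.
POLICY = {
    "public": {"analyst", "engineer", "steward"},
    "sensitive": {"steward"},
}


def mask(value):
    """Replace a value with a short one-way SHA-256 digest."""
    return hashlib.sha256(str(value).encode("utf-8")).hexdigest()[:12]


def read_row(row, column_sensitivity, role):
    """Return a copy of `row` with columns masked for unauthorized roles."""
    result = {}
    for column, value in row.items():
        level = column_sensitivity.get(column, "sensitive")  # default to strict
        allowed = role in POLICY.get(level, set())
        result[column] = value if allowed else mask(value)
    return result


row = {"order_id": 42, "email": "jane@example.com"}
sensitivity = {"order_id": "public", "email": "sensitive"}
print(read_row(row, sensitivity, role="analyst"))
print(read_row(row, sensitivity, role="steward"))
```

Treating unclassified columns as sensitive by default means a missing classification fails closed rather than exposing data.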

Also, read → How to implement a data governance plan in 10 steps

7. Run a proof of concept

Before scaling your data catalog across all use cases:

- Conduct a proof of concept or pilot project to assess the effectiveness of your approach and showcase tangible results.

- Measure performance, ease of use, and compatibility with existing systems.

- Gather feedback from the catalog users to understand their expectations, pain points, and preferences.

For the proof of concept, select mission-critical initiatives such as risk management, sales process optimization, or customer engagement. If improving customer engagement and reducing churn is a priority, demonstrate how the data catalog can provide sales teams with actionable insights to identify high-conversion accounts.

Deliver quick wins as they can “quiet the cynics and detractors looking to derail the effort before it begins.”

Also, read → How to build a business case for a data catalog

8. Explore ways to automate and scale

As the number of users and data sources grows, you’ll have to explore ways to incorporate automation to scale your data catalog’s use cases.

The most common processes to automate include:

- Metadata ingestion

- Tagging and classification

- Profiling

- Policy propagation via lineage

- Quality checks

- Documentation

- Data observability

Here’s an example of automation in action: tagging sensitive data assets as protected health information (PHI).

When a new patient record data source is connected to the data catalog, it automatically scans the metadata to identify any PHI. If it detects PHI, the catalog will tag the data as sensitive and apply the necessary access controls to ensure only authorized personnel can access it.
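The scenario above can be sketched as a rule-based scan over column names. This is a deliberately simple illustration: the patterns, the `tag_phi_columns` helper, and the `restricted` flag are hypothetical, and real PHI detection usually also inspects data values and uses much broader rules.

```python
import re

# Illustrative name patterns only; real PHI detection needs far broader rules.
PHI_PATTERNS = [
    re.compile(p, re.IGNORECASE)
    for p in (r"ssn", r"date_of_birth|dob", r"diagnosis", r"patient_name", r"mrn")
]


def tag_phi_columns(table_metadata):
    """Return a copy of the metadata with PHI tags and a restricted flag."""
    tagged_columns = []
    for column in table_metadata["columns"]:
        is_phi = any(p.search(column["name"]) for p in PHI_PATTERNS)
        tags = sorted(set(column.get("tags", [])) | ({"PHI"} if is_phi else set()))
        tagged_columns.append({**column, "tags": tags})
    contains_phi = any("PHI" in c["tags"] for c in tagged_columns)
    return {**table_metadata, "columns": tagged_columns, "restricted": contains_phi}


patient_records = {
    "table": "clinic.patient_records",
    "columns": [{"name": "patient_name"}, {"name": "visit_count"}, {"name": "DOB"}],
}
result = tag_phi_columns(patient_records)
print(result["restricted"], [c["tags"] for c in result["columns"]])
```

The `restricted` flag is where an access policy could hook in, so that tagged assets are limited to authorized personnel as soon as they are ingested.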

Automation is vital to eliminating grunt work, improving scalability, enhancing user experience, and maximizing the value of your data catalog.

Dive deeper → AI data catalog: What is it and how can it improve your business outcomes?

When you build a data catalog from scratch, you’ll have to account for some pressing challenges. Let’s take a look.

Key challenges in building a data catalog

The top challenges you may face when building a data catalog are as follows:

- Inadequate resources and expertise as you may not have the right solution architects and data engineers for developing and maintaining the data catalog

- The total cost of ownership (TCO), as you must cover the expenses of building, maintaining, scaling, and supporting the data catalog, including infrastructure costs

- Complex and disparate data sources with diverse data formats, structures, and sources that require complex, custom integration and standardization workflows

- Establishing, maintaining, and scaling data governance policies, processes, and controls

- Lack of user adoption and stakeholder buy-in as overcoming resistance or reluctance (i.e., changing cultures) is tough

- Future-proofing the data catalog to adapt and evolve with changes in data sources, technologies, and business requirements

To tackle these challenges, you can start by setting up collaborative processes, providing user training, and facilitating continuous awareness and improvement sessions.

Alternatively, you can opt for an off-the-shelf data catalog built for modern data teams and use cases. Let’s have a look.

A better alternative: Using an enterprise data catalog

Enterprise data catalogs are usually more mature, comprehensive, and feature-rich than open-source alternatives. Although these tools come with a license or a subscription, you don’t have to worry about product development, infrastructure maintenance, and scaling up your data catalog as your business grows.

Moreover, if you choose a data catalog solution provider who is a partner rather than a vendor, they can help you drive data catalog implementation, adoption, and engagement as they’re invested in your success.

Here’s how Takashi Ueki, Director of Enterprise Data & Analytics at Elastic, explains the ‘partner, not vendor approach’ that he expects from a data catalog solution provider:

Here’s how Takashi Ueki, Director of Enterprise Data & Analytics at Elastic, explains partner, not vendor approach - Image by Atlan.

Here’s how Ted Andersson, Director of Business Intelligence at CouponFollow, puts it:

Here’s how Ted Andersson, Director of Business Intelligence at CouponFollow - Image by Atlan.

Also, read → How to evaluate a data catalog to ensure business value

Summing up

Building a data catalog requires a meticulous approach to meet business demands and technical intricacies, while also ensuring that you drive user adoption, deliver business value, and ensure regulatory compliance.

Start by defining clear business and technical requirements and documenting use cases. Then establish a robust data governance framework, ensuring data security and compliance. Lastly, use automation to scale your use cases and eliminate grunt work. These steps are crucial for unlocking the full potential of your data catalog.

How to build a data catalog: Related reads

- Data Catalog Guide

- Build vs. Buy Data Catalog: What Should Factor Into Your Decision Making?

- Build vs. Buy: Why Fox Chose Atlan

- Data Catalog Requirements in 2026: A Comprehensive Guide

- Open-Source Modern Data Stack: 5 Steps to Build

- Data Catalog Demo 101: What to Expect, Questions to Ask, and More

- Data catalogs in 2026

- 5 Main Benefits of a Data Catalog

- Data Cataloging Process: Challenges, Steps, and Success Factors

- Data Catalog Business Value: Assessment Factors, Benefits, and ROI Calculation

- Who Uses a Data Catalog & How to Drive Positive Outcomes?

- 15 Essential Features of Data Catalogs to Look for in 2026

- Data Catalog Adoption: What Limits It and How to Drive It Effectively
