Open Source Data Catalog: Top 6 Tools To Consider in 2025

Which open source data catalogs lead in 2025?

Open source data governance tools comparison in 2025. Source: Atlan.

Here are the top 6 tools dominating the open source landscape, along with their key strengths and limitations:

| Tool | Best for | GitHub stars | Key strength | Main limitation |
|---|---|---|---|---|
| Amundsen (Lyft) | Simple discovery / metadata search | 4.7k | Strong search ranking (PageRank style), easy ingestion | Weak built‑in governance, less advanced policy enforcement |
| Apache Atlas | Hadoop ecosystems | 2k | Mature governance, deep support for taxonomy, tag propagation | Complex deployment and UI isn’t user-friendly |
| DataHub (LinkedIn) | Federated architecture, large enterprises | 11.1k | Flexible metadata schema, lineage graph, connector ecosystem | Infrastructure complexity; evolving quality features |
| Marquez | Lineage, job/dataset dependency tracking | 2k | Real-time lineage via OpenLineage, traceability across pipelines | Less focus on discovery or policy enforcement |
| OpenMetadata | Modern data stacks | 7.7k | Integrated discovery, lineage, quality, collaboration with built-in quality tests | Younger project; some connectors or features still maturing |
| OpenDataDiscovery (ODD) | ML and data science use cases | 1.4k | Federated search, metadata health, flexible architecture | Less mature community, features still under development |

What are the best open source data catalogs? A deep dive.

This section explores leading open source data governance tools, highlighting their features and limitations.

Amundsen

Origin: Amundsen was created at Lyft as its data discovery platform.

Best for: Teams prioritizing simple, effective metadata ingestion, data search, and discovery.

Standout features:

- Google-like search experience for intuitive search and discovery

- Microservice architecture with metadata service, search service, frontend service, and ingestion library (DataBuilder) for custom extractor/loader framework

- PageRank‑style search ranking by usage to surface frequently used assets

- Integration flexibility: supports Neo4j or Apache Atlas as metadata stores

- Community contributions for connectors (BigQuery, Snowflake, etc.).
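To make the PageRank-style ranking idea concrete, here is a minimal sketch of power-iteration PageRank over a small "reads from" graph. It is an illustration of the general technique, not Amundsen's actual ranking code; the function name `usage_rank`, the asset names, and the damping value are all assumptions made for this example.

```python
def usage_rank(edges, damping=0.85, iterations=50):
    """Power-iteration PageRank over a directed 'reads from' graph.

    edges: list of (consumer, asset) pairs, e.g. a dashboard that reads a table.
    All asset names used with this function are hypothetical.
    """
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)

    n_nodes = len(nodes)
    rank = {n: 1.0 / n_nodes for n in nodes}
    for _ in range(iterations):
        # Rank held by nodes without outgoing links is spread evenly.
        dangling = sum(rank[n] for n in nodes if not out_links[n])
        base = (1 - damping) / n_nodes + damping * dangling / n_nodes
        new_rank = {n: base for n in nodes}
        for src, targets in out_links.items():
            for dst in targets:
                new_rank[dst] += damping * rank[src] / len(targets)
        rank = new_rank
    return rank


edges = [
    ("dash_revenue", "fact_orders"),
    ("dash_churn", "fact_orders"),
    ("dash_churn", "dim_users"),
    ("adhoc_query", "fact_orders"),
    ("fact_orders", "raw_orders"),
]
ranking = sorted(usage_rank(edges).items(), key=lambda kv: kv[1], reverse=True)
for asset, score in ranking:
    print(f"{asset}: {score:.3f}")
```

In this toy graph, a table read by several consumers accumulates more rank than one read by a single dashboard, which is the behavior a usage-based search ranking relies on.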

Key limitations:

- Limited governance or policy enforcement, as the focus is on discovery

- Scalability challenges for very large metadata volumes or complex deployments

- Requires custom work for enterprise features like data lineage across heterogeneous systems

- Metadata permissions / access controls are less sophisticated out of the box

- Outdated product roadmap

Latest release: v0.13.0 – Apr 2025 (GitHub)

Atlas

Origin: Atlas was originally developed at Hortonworks and later donated to the Apache Software Foundation. It was one of the first open-source tools for solving search, discovery, and governance problems in Hadoop.

Apache Atlas enjoys a special status amongst all the open-source data cataloging tools as many companies, including Atlan, an enterprise-grade catalog, governance, and metadata platform, still use it.

Best for: Organizations heavily invested in Hadoop ecosystems, needing strong metadata governance, classification, lineage, and integration.

Standout features:

- Rich support for taxonomy, classifications, and tag propagation

- Fine-grained metadata access controls, audit tracking

- Integrations with Hadoop stack (Hive, Kafka, HBase)

- Powered by actively developed and used technologies like Linux Foundation’s JanusGraph, Apache Solr, Apache Kafka, and Apache Ranger

- Development is tracked in a public Jira project hosted by the Apache Software Foundation, so changes are traceable by Jira ID
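Tag propagation, listed among the features above, can be pictured as a graph traversal: a classification applied to one asset is copied to everything derived from it. The sketch below shows that idea in plain Python. It does not call the Atlas API; `propagate_tags` and the asset and tag names are hypothetical.

```python
from collections import deque


def propagate_tags(lineage, tags):
    """Copy each classification tag to every downstream asset.

    lineage: dict mapping an asset to the assets derived from it.
    tags: dict mapping an asset to the set of tags applied directly to it.
    """
    effective = {asset: set(direct) for asset, direct in tags.items()}
    for source, direct_tags in tags.items():
        queue = deque([source])
        seen = {source}
        while queue:
            current = queue.popleft()
            for child in lineage.get(current, []):
                if child in seen:
                    continue
                seen.add(child)
                effective.setdefault(child, set()).update(direct_tags)
                queue.append(child)
    return effective


# Hypothetical lineage and directly applied tags.
lineage = {
    "raw.customers": ["staging.customers"],
    "staging.customers": ["mart.customer_360", "mart.churn_features"],
    "raw.orders": ["mart.customer_360"],
}
tags = {"raw.customers": {"PII"}, "raw.orders": {"FINANCE"}}
effective = propagate_tags(lineage, tags)
```

Here `mart.customer_360` ends up with both tags because it is derived from both tagged sources.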

Key limitations:

- Less focus on discovery workflows for end users

- Heavy and complex to deploy and maintain

- UI feels dated and less intuitive than newer alternatives

- Adapting to modern cloud or lakehouse architectures may require custom extensions

- Complex setup for non-Hadoop environments

Latest release: v2.4 – Feb 2025 (GitHub)

DataHub

Origin: DataHub evolved from LinkedIn’s internal tool, WhereHows.

Best for: Organizations that require modular metadata architecture, real-time ingestion, lineage, and federated governance.

Standout features:

- Modular and service-oriented architecture with both push-and-pull options for metadata ingestion

- Supports full-text search and discovery

- Interactive, column-level lineage graph and impact analysis

- Wide range of connectors and integrations

- Role-based access control for metadata itself

- Support for data contracts

- Frequent releases, an active community, and a reasonably well-maintained public roadmap
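Column-level impact analysis, one of the features listed above, amounts to asking which downstream columns are affected if a given column changes. The following is a minimal sketch of that question as a breadth-first search. It is not DataHub's implementation or API; the lineage map and the `impacted_columns` helper are assumptions for illustration.

```python
from collections import deque

# Hypothetical column-level lineage: upstream column -> downstream columns.
COLUMN_LINEAGE = {
    ("orders", "amount"): [("daily_revenue", "gross"), ("order_facts", "amount_usd")],
    ("order_facts", "amount_usd"): [("finance_report", "revenue")],
    ("daily_revenue", "gross"): [("finance_report", "revenue")],
}


def impacted_columns(lineage, changed):
    """Return {column: hops} for every column downstream of `changed`."""
    hops = {}
    queue = deque([(changed, 0)])
    while queue:
        column, depth = queue.popleft()
        for child in lineage.get(column, []):
            if child not in hops:  # BFS, so the first visit is the shortest path
                hops[child] = depth + 1
                queue.append((child, depth + 1))
    return hops


impact = impacted_columns(COLUMN_LINEAGE, ("orders", "amount"))
for (table, column), distance in sorted(impact.items(), key=lambda kv: kv[1]):
    print(f"{table}.{column} is {distance} hop(s) downstream")
```

The hop count gives a rough priority order: columns one hop away break first, and those further downstream can be checked afterwards.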

Key limitations:

- Infrastructure complexity (Kafka, Elasticsearch, multiple services)

- Some integrations or capabilities require custom engineering, and deployments are resource-intensive

- Features such as data quality and observability are still evolving in the community

Latest release: v1.2.0 – Jul 2025 (GitHub)

Marquez

Origin: Marquez was created by WeWork to search and visualize data assets and their relationships, and was later incubated in the LF AI & Data Foundation.

Marquez also paved the way for OpenLineage, which captures, manages, and maintains data lineage in real time.

Best for: Teams focused heavily on lineage, provenance, and job/dataset dependency tracking across pipelines.

Standout features:

- OpenLineage‑compliant metadata server for real-time lineage collection and visualization

- Unified metadata UI showing job → dataset relationships and execution lineage

- Flexible lineage API for automating impact analysis, backfills, root cause tracing

- Modular, extensible architecture for metadata aggregation across tools

- Integration with modern data stack tools like dbt and Apache Airflow
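For a sense of what an OpenLineage-compliant server receives, the sketch below builds a minimal run event as a plain dictionary using only the standard library. The top-level field names follow the OpenLineage run event structure as I understand it; the namespace, producer URI, job and dataset names, and the spec version in `schemaURL` are assumptions, so check the current spec before relying on them.

```python
import json
import uuid
from datetime import datetime, timezone


def build_run_event(event_type, job_name, inputs, outputs, run_id=None, namespace="example"):
    """Build a minimal OpenLineage-style run event as a plain dict."""

    def datasets(names):
        return [{"namespace": namespace, "name": name} for name in names]

    return {
        "eventType": event_type,  # e.g. "START" or "COMPLETE"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        # START and COMPLETE events for the same run should share one runId.
        "run": {"runId": run_id or str(uuid.uuid4())},
        "job": {"namespace": namespace, "name": job_name},
        "inputs": datasets(inputs),
        "outputs": datasets(outputs),
        "producer": "https://example.com/my-pipeline",  # hypothetical producer URI
        # Assumed spec version; verify against the published OpenLineage spec.
        "schemaURL": "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent",
    }


run_id = str(uuid.uuid4())
start = build_run_event("START", "daily_orders", ["raw.orders"], ["mart.orders"], run_id=run_id)
complete = build_run_event("COMPLETE", "daily_orders", ["raw.orders"], ["mart.orders"], run_id=run_id)
print(json.dumps(complete, indent=2))
```

A lineage server can stitch job-to-dataset edges together from the `inputs` and `outputs` of events like these, which is what makes near-real-time lineage possible without crawling each system.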

Key limitations:

- Less emphasis on discovery or governance beyond lineage

- Not as feature-rich in policy enforcement, access control, or business metadata

- Community and ecosystem smaller than more mature catalogs

Latest release: 0.50.0 – July 2024 (GitHub)

OpenMetadata

Origin: OpenMetadata is a widely used open-source project that builds on PostgreSQL’s graph capabilities and an extensible architecture. It’s built by the team behind Uber’s data infrastructure, as well as founders of Apache Hadoop and Atlas.

Best for: Teams wanting a unified metadata platform to enable governance, quality, profiling, lineage, and collaboration.

Standout features:

- Integrated metadata graph combining discovery, observability, and governance

- Plugin architecture & rich connector ecosystem (90+ connectors)

- Collaboration features (comments, domain glossaries, context)

- Policy enforcement and metadata-driven workflows

Key limitations:

- Younger project, with enterprise RBAC and SSO still maturing

- Some connectors and features may need extensive customization

- Performance at enterprise scale needs maturing

Latest release: v1.10.0 – October 2025 (GitHub)

OpenDataDiscovery (ODD)

Origin: OpenDataDiscovery was developed by Provectus as a federated data discovery and metadata platform, open sourced around 2021.

It was initially designed with ML teams in mind but soon extended to data engineering and data science use cases.

Best for: Organizations needing federated search, metadata discovery, and observability across systems.

Standout features:

- Federated data catalog driving search across data silos

- Ingestion-to-product data lineage

- Metadata health and observability dashboards

- Flexible architecture for modern data platforms

- Integration with data quality tools and popular data engineering and ML tools (dbt, Snowflake, SageMaker, Kubeflow, BigQuery, etc.)
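One simple way to think about a "metadata health" figure like the one mentioned above is as a completeness score: how many of the fields you care about are actually filled in. The sketch below computes that. It is not how ODD calculates health; the required fields and sample assets are hypothetical.

```python
REQUIRED_FIELDS = ("owner", "description", "tags")  # hypothetical health criteria


def metadata_health(assets):
    """Return (overall_score, missing_fields_by_asset) for a list of asset dicts."""
    missing = {}
    filled = 0
    for asset in assets:
        gaps = [field for field in REQUIRED_FIELDS if not asset.get(field)]
        filled += len(REQUIRED_FIELDS) - len(gaps)
        if gaps:
            missing[asset["name"]] = gaps
    total = len(assets) * len(REQUIRED_FIELDS)
    score = filled / total if total else 1.0
    return score, missing


# Hypothetical assets exported from a catalog.
assets = [
    {"name": "orders", "owner": "sales-eng", "description": "Order facts", "tags": ["finance"]},
    {"name": "tmp_export", "owner": "", "description": "", "tags": []},
    {"name": "users", "owner": "growth", "description": "", "tags": ["PII"]},
]
score, missing = metadata_health(assets)
print(f"health: {score:.0%}", missing)
```

The per-asset gap list is usually more actionable than the single score, since it tells stewards exactly which fields to fill in.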

Key limitations:

- Less community maturity and adoption vs older projects

- Some features (lineage, quality) may still be under development

- Connectors and integrations may require custom work

Latest release: v0.27.7 – February 2025 (GitHub)

How can you pick and deploy an open-source data catalog?

Step 1: Define must-have capabilities

Start by identifying the non‑negotiables for your environment by considering:

- Metadata scope

- Lineage depth

- Governance controls (RBAC, tags, classification, etc.)

- Business glossary

- Connector coverage

- Scalability and architecture
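The must-have list above can be turned into a pass/fail filter before any deeper evaluation. The sketch below assumes you have recorded each candidate's capabilities as a set of labels; the labels and the `tool_a`/`tool_b`/`tool_c` entries are hypothetical placeholders, not assessments of real tools.

```python
MUST_HAVES = {"column_lineage", "rbac", "business_glossary", "snowflake_connector"}

# Hypothetical capability sets; fill these in from your own evaluation.
candidates = {
    "tool_a": {"column_lineage", "rbac", "business_glossary", "snowflake_connector", "data_quality"},
    "tool_b": {"rbac", "business_glossary", "snowflake_connector"},
    "tool_c": {"column_lineage", "snowflake_connector"},
}


def shortlist(candidates, must_haves):
    """Split candidates into those meeting every must-have and the gaps of the rest."""
    passed, gaps = [], {}
    for name, capabilities in candidates.items():
        missing = must_haves - capabilities
        if missing:
            gaps[name] = sorted(missing)
        else:
            passed.append(name)
    return passed, gaps


passed, gaps = shortlist(candidates, MUST_HAVES)
print("shortlist:", passed)
print("gaps:", gaps)
```

Keeping the gaps alongside the shortlist makes it easy to revisit a rejected tool if a must-have later turns out to be negotiable.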

Step 2: Evaluate open-source health and community

Shortlist catalogs with active communities, solid documentation, and frequent releases.

Score each catalog on:

- GitHub metrics: Stars, forks, contributors, release cadence.

- Community responsiveness: Activity on Slack, GitHub Issues, or mailing lists.

- Documentation and setup guides: Are they maintained and easy to follow?

- License type: Apache 2.0, MIT, or custom — this determines flexibility for enterprise deployment.
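Scoring against the four criteria above is simple weighted arithmetic. The sketch below shows one way to do it, assuming 1 to 5 ratings and weights that sum to 1. The weights, ratings, and tool names are hypothetical placeholders for your own review, not measurements of real projects.

```python
WEIGHTS = {"github_metrics": 0.3, "community": 0.3, "documentation": 0.2, "license": 0.2}

# Hypothetical 1-5 ratings from your own review; not measurements of real tools.
ratings = {
    "tool_a": {"github_metrics": 5, "community": 4, "documentation": 3, "license": 5},
    "tool_b": {"github_metrics": 3, "community": 3, "documentation": 5, "license": 5},
}


def weighted_score(rating, weights):
    """Weighted average of criterion ratings; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(rating[criterion] * weight for criterion, weight in weights.items())


scores = {tool: round(weighted_score(r, WEIGHTS), 2) for tool, r in ratings.items()}
for tool, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(tool, score)
```

Agreeing on the weights before rating any tool helps keep the comparison from being tuned toward a favorite.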

Step 3: Pilot in a high-impact domain/use case

Pilot the top choice in a sandbox with one high‑value domain (e.g., customer, finance, or marketing) and use case.

Test discovery, search accuracy, lineage visualization, glossary tagging and ownership visibility.

Involve both technical (data engineers) and non‑technical (data stewards, analysts) users early to evaluate usability and adoption potential.

Step 4: Automate ingestion and policy enforcement

Automate metadata ingestion via tools like Airflow, dbt, Prefect, or Dagster.

Implement tag‑driven or role-based access control so governance operates automatically rather than manually.

For advanced setups, connect catalogs to CI/CD pipelines or APIs for version-controlled metadata updates.
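As an illustration of tag-driven access control, the sketch below derives a read decision from the tags on an asset and the roles a user holds, so no per-asset rule has to be written by hand. The policy table, role names, and `can_read` function are hypothetical and do not correspond to any specific catalog's policy engine.

```python
# Hypothetical policy: a tag on an asset restricts it to the listed roles.
TAG_POLICIES = {
    "PII": {"data_steward", "privacy_officer"},
    "FINANCE": {"finance_analyst", "data_steward"},
}


def can_read(user_roles, asset_tags, policies=TAG_POLICIES):
    """Allow access only if the user holds a permitted role for every restricted tag."""
    for tag in asset_tags:
        allowed_roles = policies.get(tag)
        if allowed_roles is not None and not (set(user_roles) & allowed_roles):
            return False
    return True


print(can_read({"finance_analyst"}, {"FINANCE"}))         # allowed for finance data
print(can_read({"finance_analyst"}, {"FINANCE", "PII"}))  # blocked by the PII tag
```

Because the decision depends only on tags, tagging a new table as `PII` during ingestion is enough to bring it under the policy.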

Step 5: Measure adoption, reliability, and ROI

Track early adoption: search volume, active users, issue resolution speed. If results are strong, expand gradually. If not, reassess or test your runner-up tool.
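If your catalog can export a usage log, the adoption figures above are straightforward to compute. The sketch below assumes a log of `(day, user, action, got_click)` tuples, which is a hypothetical format. The click rate is an extra metric added here as a rough proxy for search quality, not something prescribed above.

```python
from datetime import date

# Hypothetical usage log exported from the catalog: (day, user, action, got_click)
events = [
    (date(2025, 3, 3), "ana", "search", True),
    (date(2025, 3, 3), "ben", "search", False),
    (date(2025, 3, 4), "ana", "search", True),
    (date(2025, 3, 5), "cy", "view", False),
]


def adoption_metrics(events):
    """Summarize active users, search volume, and how often a search led to a click."""
    searches = [event for event in events if event[2] == "search"]
    clicked = sum(1 for event in searches if event[3])
    return {
        "active_users": len({user for _, user, _, _ in events}),
        "search_volume": len(searches),
        "search_click_rate": clicked / len(searches) if searches else 0.0,
    }


metrics = adoption_metrics(events)
print(metrics)
```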

Step 6: Plan for sustainability

Open source catalogs often require internal ownership. Document:

- Time spent on setup, upgrades, and maintenance

- Custom code or connectors built internally

- Cost of infrastructure vs. potential managed alternatives

This documentation builds a baseline for future ROI comparisons if you ever consider transitioning to a managed or commercial metadata platform.
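The baseline comparison described above is simple arithmetic once the inputs are documented. The sketch below shows the calculation with placeholder numbers; every figure, including the managed quote, is hypothetical and should be replaced with your own records.

```python
def annual_self_hosted_cost(eng_hours_per_month, hourly_rate, infra_per_month):
    """Yearly cost of running the catalog in-house: engineering time plus infrastructure."""
    return 12 * (eng_hours_per_month * hourly_rate + infra_per_month)


# All figures are hypothetical placeholders; substitute your documented numbers.
self_hosted = annual_self_hosted_cost(eng_hours_per_month=40, hourly_rate=90, infra_per_month=1500)
managed_quote = 55_000  # hypothetical annual quote for a managed alternative
difference = managed_quote - self_hosted

print(f"self-hosted: {self_hosted}, managed: {managed_quote}, difference: {difference}")
```

A negative difference means the managed option would be cheaper under these assumptions; one-off costs such as migration or custom connector development are not modeled here.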

What are the pros and cons of choosing an open source data catalog?

It is often hard to find a single open-source data catalog that addresses every challenge your data team faces. Many organizations combine tools to get complementary strengths (discovery, governance, lineage).

Pros

- Lower upfront cost with no licensing fees

- You own the deployment, data, and stack; you’re not tied to a vendor’s roadmap

- Customization and flexibility – tailor metadata models, workflows, connectors, UI, etc.

- Full code transparency for security audits and compliance

- Vibrant communities driving innovation – faster release of new connectors, bug fixes, and extensions.

Cons

- Self-hosting and upgrades require DevOps time

- High technical overhead when deploying, customizing, and maintaining an open source catalog

- Feature gaps (quality tests, AI governance) often need custom code

- Enterprise support, SLAs, and advanced automation are typically absent; community support is not a substitute for an SLA, and docs may lag

- Sustainability and versioning risk as some projects may stall, lose contributors, or change direction

- Hidden TCO as ongoing costs in infrastructure, upgrades, and custom development can accumulate substantially

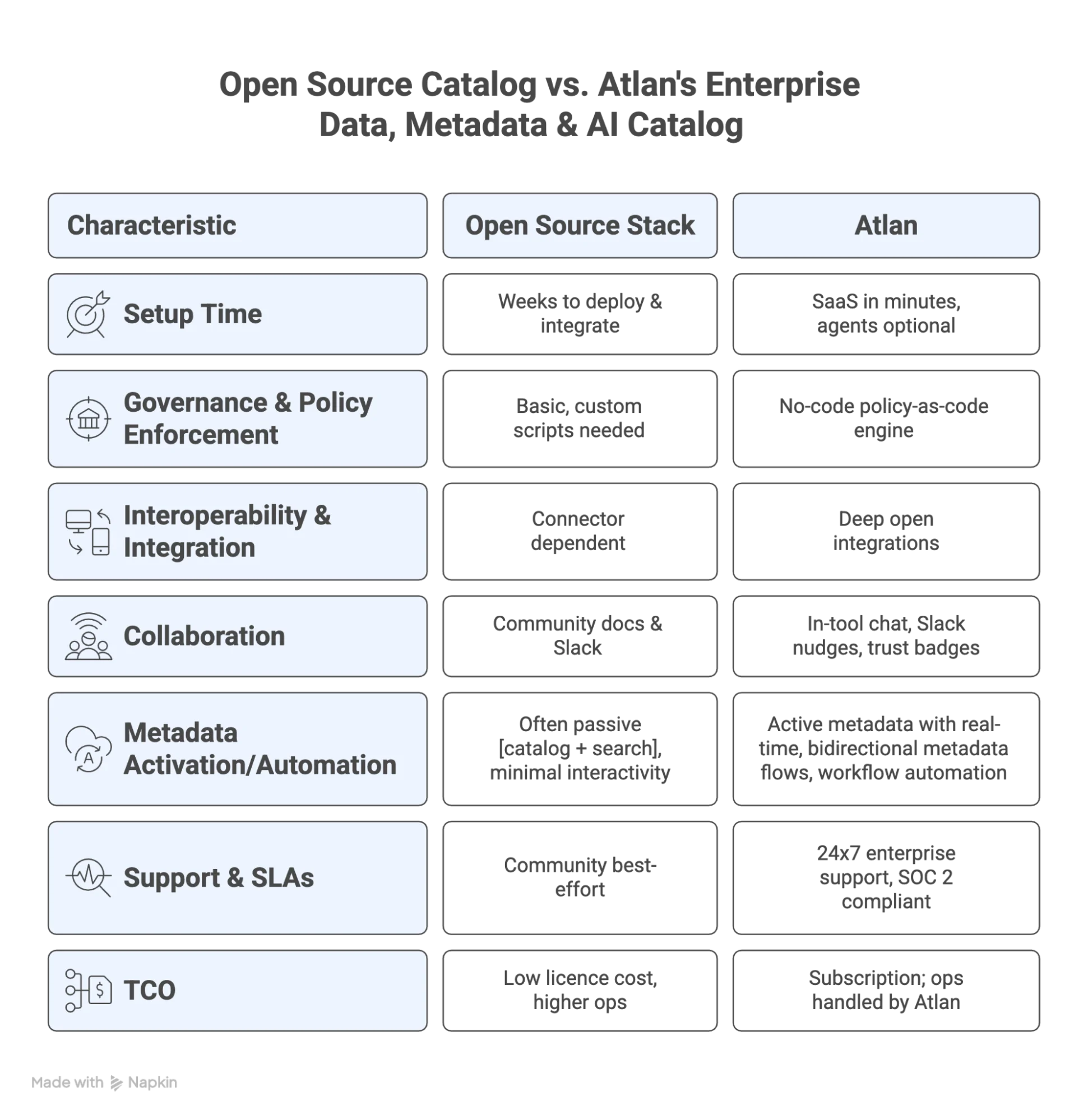

Open source vs. Atlan: A side-by-side snapshot

Open source catalogs excel at focused use cases (discovery, search, lineage) for specific systems. They give you control and flexibility, but you may need to build governance layers, integrations, and user experiences around them.

Atlan, by contrast, positions itself as a unified control plane—bridging metadata, governance, automation, and collaboration across your entire data and AI stack. It reduces the engineering burden of stitching systems together and lets you focus resources on domain logic and insights.

Open source vs. Atlan: Side-by-side snapshot in 2025. Source: Atlan.

Real wins by real companies: Modern data catalog in action

Modernized data stack and launched new products faster while safeguarding sensitive data

“Austin Capital Bank has embraced Atlan as their Active Metadata Management solution to modernize their data stack and enhance data governance. Ian Bass, Head of Data & Analytics, highlighted, ‘We needed a tool for data governance… an interface built on top of Snowflake to easily see who has access to what.’ With Atlan, they launched new products with unprecedented speed while ensuring sensitive data is protected through advanced masking policies.”

Ian Bass, Head of Data & Analytics

Austin Capital Bank

🎧 Listen to podcast: Austin Capital Bank From Data Chaos to Data Confidence

Yape, a fast-growing finance-sector payments app from Credicorp, turns compliance into an accelerator.

“After a three-week PoC scored 4.8/5, Yape plugged Atlan’s Active Metadata Management into Databricks Unity Catalog on Azure to centralize policies, lineage, and fine-grained access. Audits move faster and regulatory risk drops while teams from SQL users to business analysts confidently find and trust data. The result is secure, compliant, self-serve analytics that match Yape’s speed. You have the best UI in the market right now. Atlan just excels in the things that were important to us. It was easy to use, your connectors with Databricks and our data ecosystem worked really well.”

Jorge Plasencia, Yape's Data Catalog & Data Observability Platform Lead

Yape

🎧 Listen to podcast: How Yape became an active metadata pioneer

Ready to move from DIY scripts to modern, automated data cataloging?

Open source data catalogs offer an excellent entry point for metadata management: they are flexible, transparent, and cost-effective. But they often come with steep operational overheads, fragmented features, and a lack of support for diverse personas and use cases.

If you’re hitting the limits of DIY scripts, custom connectors, or piecemeal governance workflows, consider an enterprise active data cataloging and governance platform like Atlan.

Atlan unifies your entire data and AI stack with embedded governance, active metadata, and seamless collaboration—designed to scale with your teams, tools, and complexity.

FAQs about open source data catalog

1. What is an open source data catalog?

An open source data catalog is a tool that helps organizations manage and discover their data assets. It provides a centralized repository for metadata, making it easier for teams to find, understand, and utilize data effectively.

2. How can an open source data catalog improve data discovery?

By centralizing metadata, an open source data catalog enhances data discovery. It allows users to search for and access data assets quickly, improving efficiency and collaboration among data teams.

3. What are the benefits of using an open source data catalog for data governance?

Open source data catalogs support data governance by maintaining data quality and compliance. They provide visibility into data assets, enabling organizations to enforce data policies and standards effectively.

4. How do I choose the right open source data catalog for my organization?

When choosing an open source data catalog, consider factors such as features, community support, integration capabilities, and scalability. Evaluate how well the tool aligns with your organization’s specific data management needs.

5. What features should I look for in an open source data catalog?

Key features to look for include metadata management, data lineage tracking, search functionality, user collaboration tools, and integration capabilities with existing data systems.
