Data Mesh Principles: Four Pillars of Modern Data Architecture in 2025


Data Mesh is a decentralized approach to data architecture that treats data as a product and empowers cross-functional teams to manage and own data domains independently.


Four Core Data Mesh Principles

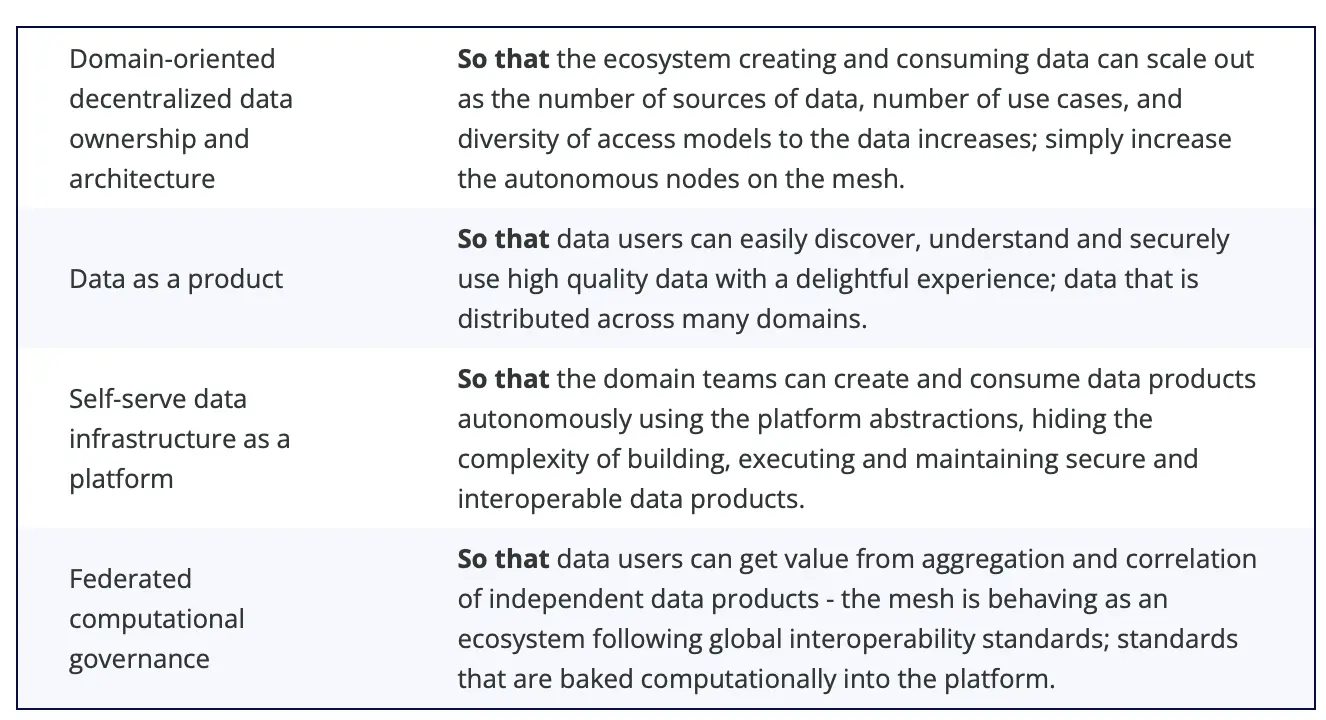

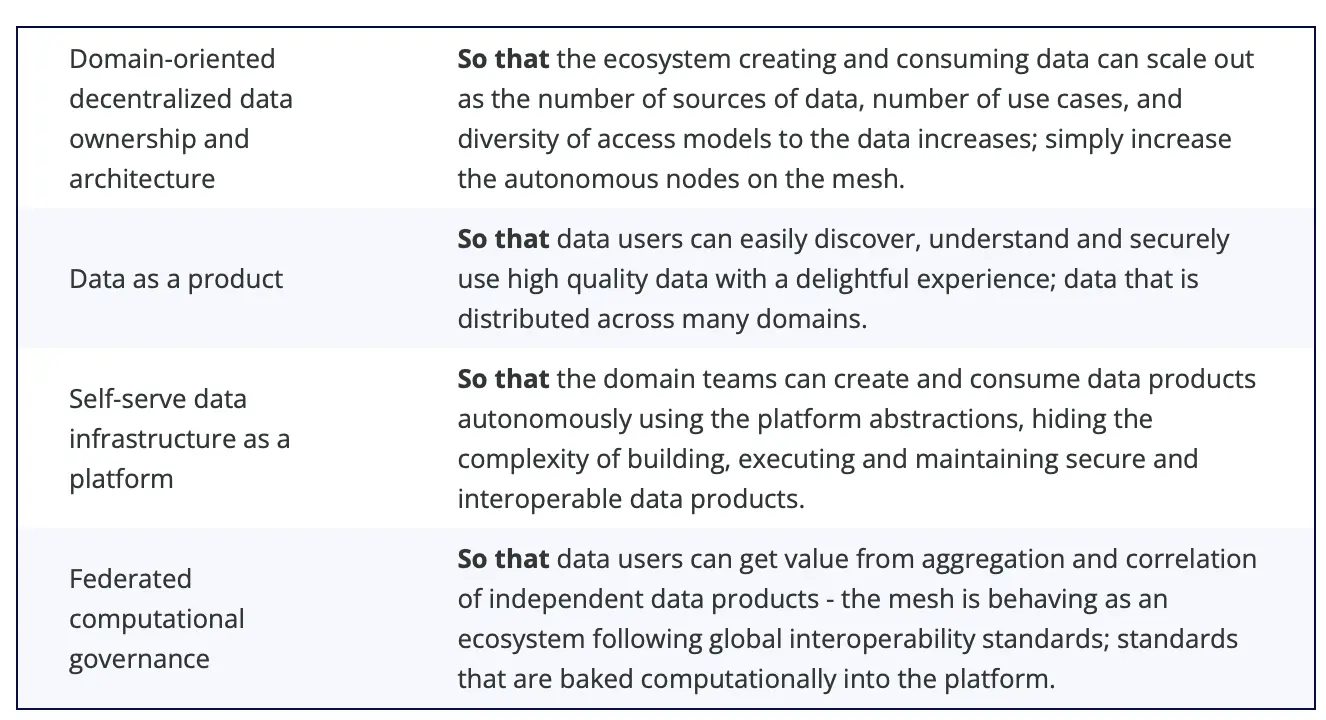

The four fundamental data mesh principles are:

- Domain-oriented decentralized data ownership and architecture

- Data as a product

- Self-serve data infrastructure as a platform

- Federated computational governance

The data mesh and its underlying principles offer the promise of increasing value from data at scale, even in a complex and volatile business context.

A summary of the four principles of the data mesh. Source: Martin Fowler


It’s impossible to understand data mesh without truly grasping its four defining principles.

This article aims to build that understanding by walking through each of the four data mesh principles.

But before we go into each of them, here’s a quick recap of what data mesh is.

Data Mesh is a decentralized approach to designing data systems for enterprises.


In centralized data architectures, a single data team handles data management for an entire organization. In a data mesh, by contrast, each business unit is responsible for capturing, processing, organizing, and managing its own data.

The term was coined by Zhamak Dehghani, formerly the director of emerging technologies in North America at ThoughtWorks.

According to Zhamak Dehghani, at its core, the data mesh is all about decentralization and distributing data responsibility to people who are closest to the data. Meanwhile, Intuit calls it “a systematic approach to organizing the people, code, and data which in turn map a solution to a business problem and its owners.”

Let’s explore each data mesh principle at length and understand how it can help you extract more value from data at scale.

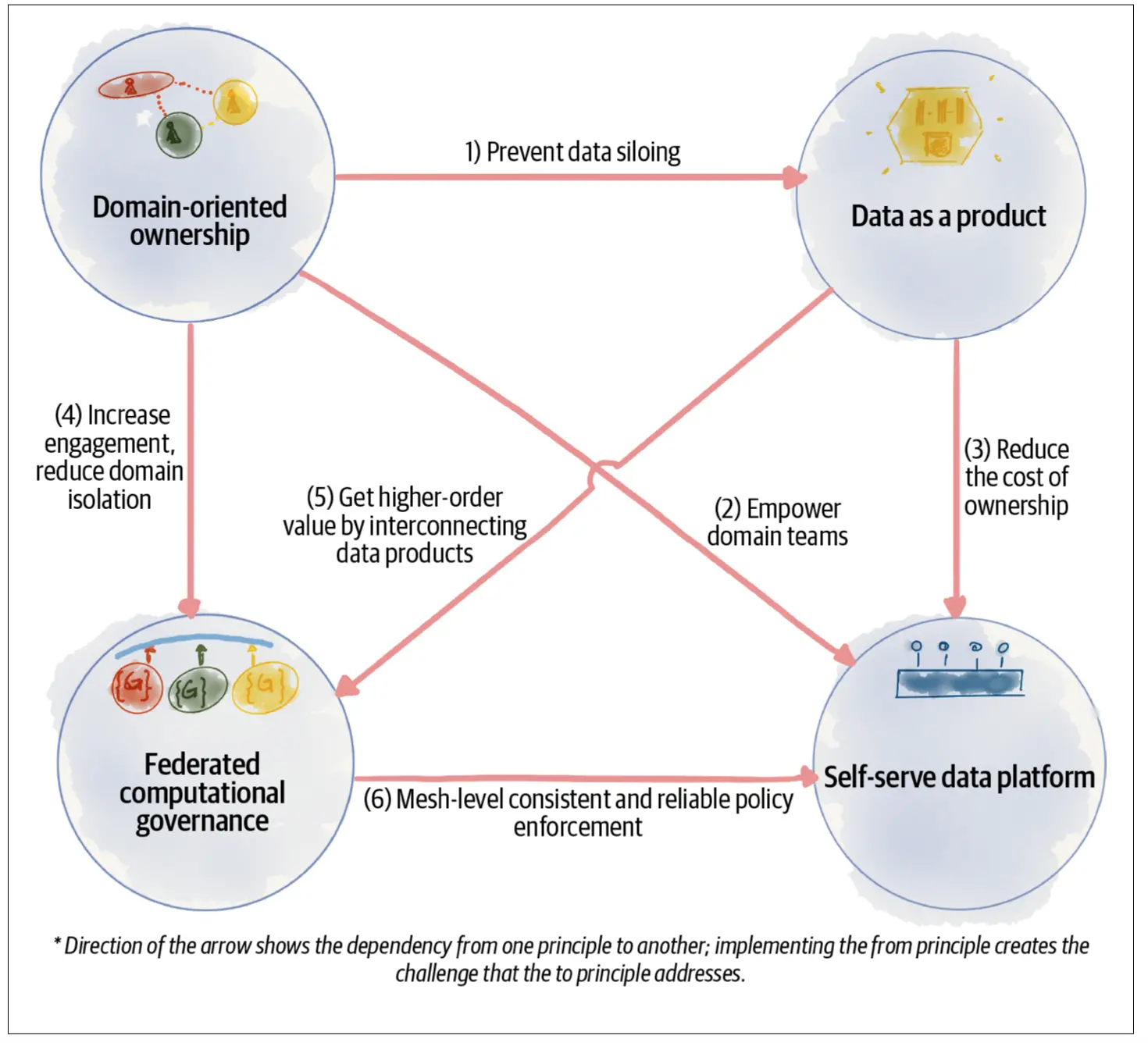

The four principles of data mesh. Source: Data Mesh by Zhamak Dehghani, O'Reilly Media, Inc

1. Domain-oriented data ownership and architecture

Why do modern organizations need decentralized domains?

Traditionally, data architectures revolved around the technology housing your assets, such as data warehouses or lakes. A central data lake — a monolith — would be responsible for storing all organizational data, and a single data team was in charge of managing the lake and data requests of business teams.

However, modern organizations aren’t monoliths. They’re divided into several business domains that further the organization’s growth.

For example, a financial services company can have a domain for each area of finance it covers — equity advisory, M&A, asset management, and underwriting.

Under traditional, centralized approaches, these domains have to rely on the central data team to fetch the data they need. This approach doesn’t scale, and it substantially increases time-to-insight.

How do domains work in the data mesh?

Data mesh reimagines that relationship by putting the onus of data management and sharing on the business domains. So, each domain is in complete control of its data end-to-end and doesn’t depend on a central data team for data discovery, sharing, or distribution.

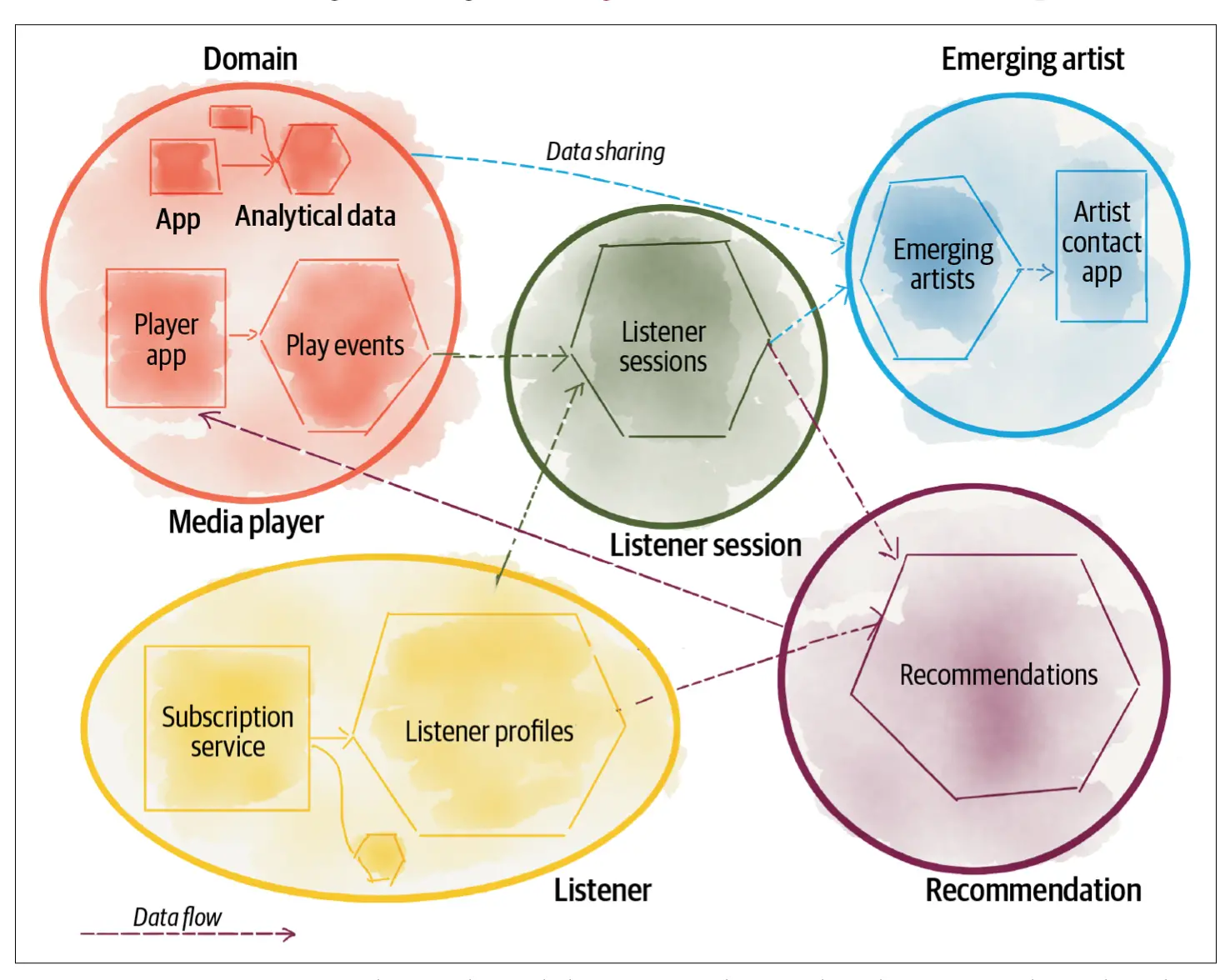

Dehghani explains this data mesh principle by citing the example of a digital audio streaming company. The company would have domains, such as listeners, emerging artists, media players, and recommendations.

Each domain can depend on data from other domains. For instance, the Recommendations domain consumes listener profile data from the Listener domain, as illustrated in the figure. Analyzing listener profiles is essential to understanding listeners’ patterns and predicting the music or podcasts they might like based on their preferences.

Example of domains and the types of data they own in an audio streaming company. Source: Data Mesh by Zhamak Dehghani, O'Reilly Media, Inc
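To make the ownership model concrete, here is a minimal Python sketch of the audio streaming example. It assumes a hypothetical in-memory registry: `Mesh`, `Domain`, and `DataProduct` are illustrative names, not part of any real library. Each data product has exactly one owning domain, and the Recommendations domain consumes listener profiles directly from the Listener domain rather than through a central team.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    name: str
    owner_domain: str  # each data product has exactly one owning domain


@dataclass
class Domain:
    name: str
    products: dict[str, DataProduct] = field(default_factory=dict)
    consumes: set[str] = field(default_factory=set)  # products owned by other domains

    def publish(self, product_name: str) -> DataProduct:
        """The domain itself creates and owns the product; no central team is involved."""
        product = DataProduct(product_name, self.name)
        self.products[product_name] = product
        return product


class Mesh:
    """Hypothetical registry of domains and the data products they publish."""

    def __init__(self) -> None:
        self.domains: dict[str, Domain] = {}

    def add_domain(self, name: str) -> Domain:
        self.domains[name] = Domain(name)
        return self.domains[name]

    def owner_of(self, product_name: str) -> str:
        for domain in self.domains.values():
            if product_name in domain.products:
                return domain.name
        raise KeyError(f"no domain publishes {product_name!r}")

    def consume(self, consumer: str, product_name: str) -> None:
        # A peer-to-peer dependency between domains, recorded on the consumer side.
        self.owner_of(product_name)  # fails if no domain publishes the product
        self.domains[consumer].consumes.add(product_name)


mesh = Mesh()
mesh.add_domain("Listener").publish("listener_profiles")
mesh.add_domain("Recommendations")
mesh.consume("Recommendations", "listener_profiles")
print(mesh.owner_of("listener_profiles"))  # Listener
```

The point of the design is that ownership lives with the publishing domain, and the dependency is recorded between domains instead of being mediated by a central data team.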

In another example, the domains at DPG Media, a Belgian media company, include Media Platform, Customer Services, and Marketing. The Customer Services domain has data products such as Subscription Service and Salesforce.


2. Data as a product

Why change how we perceive data?

Traditionally, data has been treated as a byproduct of business functions.

As a result, a central data team was responsible for cleaning, exploring, and organizing data so that other teams could use it. This central team was also responsible for ensuring the quality, integrity, and accuracy of data.

What is data as a product?

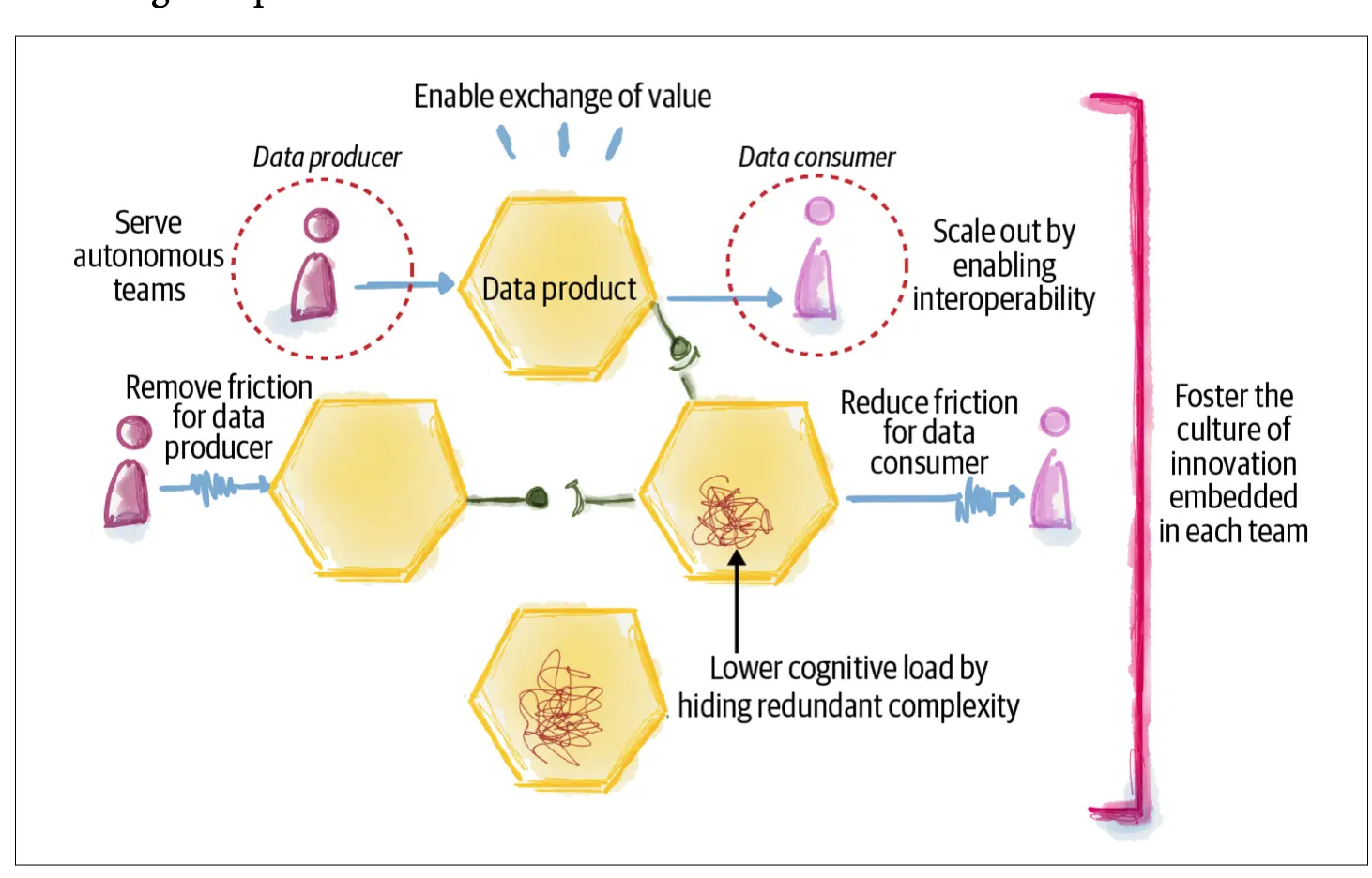

The data mesh paradigm inverts this relationship by making those closest to the source of the data accountable for its use.

In such an environment, the domains generating data apply the principles of product thinking to their data products, with the end goal being to provide a great experience to their customers (i.e., other data users).

Let’s explore this data mesh principle further by understanding:

- What does a data product look like?

- What are the components of a data product?

- What do data product teams look like?

- What are the ideal characteristics of a data product?

- How can you measure the effectiveness of a data product?

What does a data product look like?

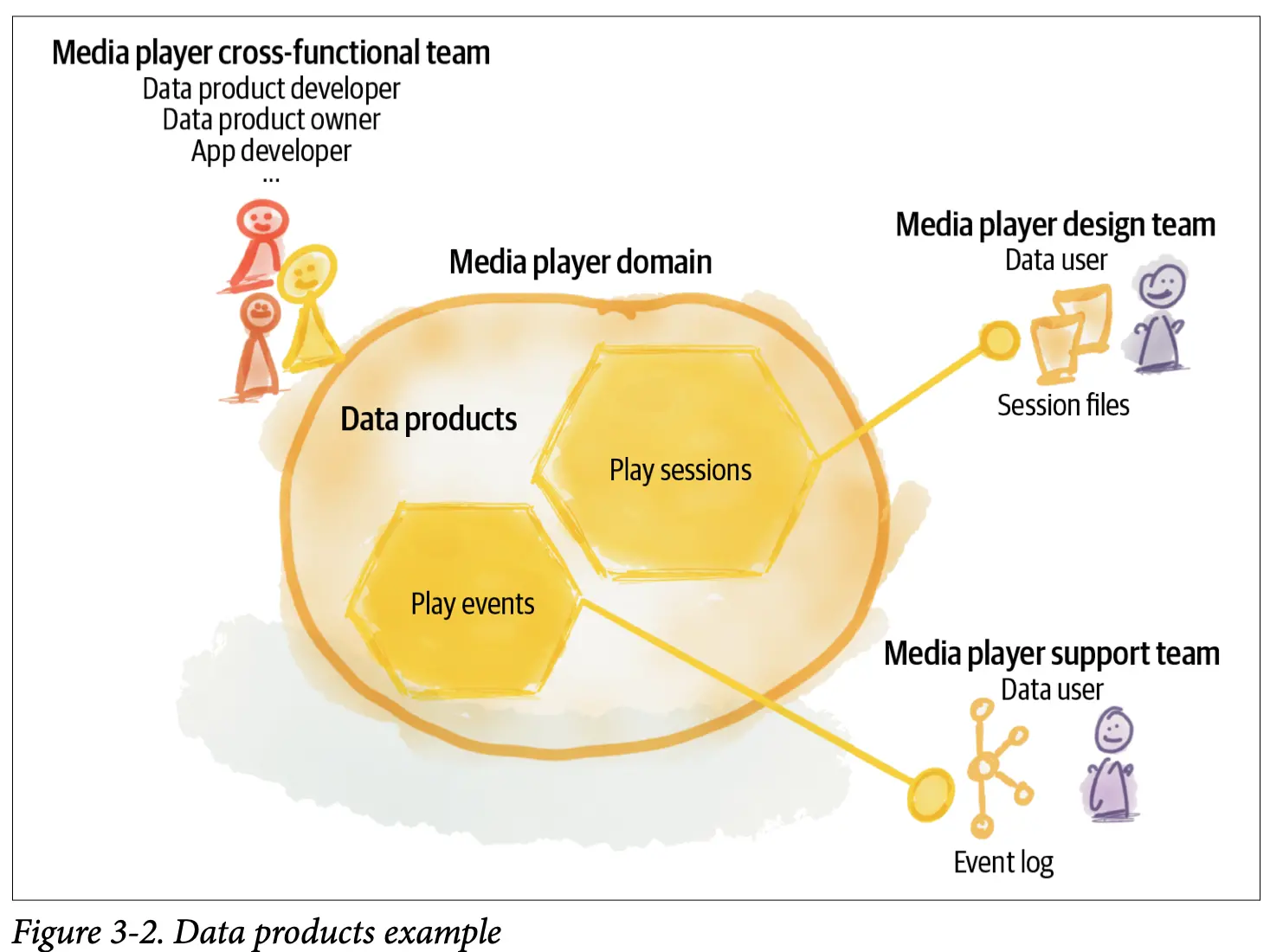

If the domain is the Media Player, its data products would be Play Sessions (session data) and Play Events (event logs), as shown in the figure.

An example of a data product. Source: Data Mesh by Zhamak Dehghani, O'Reilly Media, Inc

However, organizations can interpret data products in their own ways.

For instance, HelloFresh, a meal-kit delivery company, defines a data product as “a solution to a customer problem that delivers maximum value to the business and leverages the power of data.” So, HelloFresh treats error rate dashboards and recipe recommendations as data products.

What are the components of a data product?

A data product has three core components:

- Code: Data pipelines, APIs, access control policies, compliance, provenance, etc.

- Data and metadata: Data can be in the form of events, batch files, relational tables, graphs, etc. Meanwhile, the metadata can include semantic definition, syntax declaration, quality metrics, etc.

- Infrastructure: How the data product gets built, deployed, and managed
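The three components can be captured in a single descriptor that travels with the data product. The sketch below assumes a hypothetical `DataProductDescriptor` class with field names chosen for illustration; real platforms define their own specification formats.

```python
from dataclasses import dataclass, field


@dataclass
class DataProductDescriptor:
    """Hypothetical descriptor bundling the three components of a data product."""

    name: str
    # Code: pipelines, APIs, and policies that produce and serve the data
    pipelines: list[str] = field(default_factory=list)
    output_ports: list[str] = field(default_factory=list)  # e.g. a table or an event stream
    access_policies: list[str] = field(default_factory=list)
    # Data and metadata: semantics, schema (syntax), and quality metrics
    description: str = ""
    schema: dict[str, str] = field(default_factory=dict)  # column -> type
    quality_metrics: dict[str, float] = field(default_factory=dict)
    # Infrastructure: where and how the product is built, deployed, and run
    infrastructure: dict[str, str] = field(default_factory=dict)

    def missing_components(self) -> list[str]:
        """List the components that have not been declared yet."""
        missing = []
        if not (self.pipelines or self.output_ports):
            missing.append("code")
        if not self.schema:
            missing.append("data and metadata")
        if not self.infrastructure:
            missing.append("infrastructure")
        return missing


play_sessions = DataProductDescriptor(
    name="play_sessions",
    pipelines=["sessionize_play_events"],
    output_ports=["warehouse_table"],
    description="One row per listening session",
    schema={"session_id": "string", "listener_id": "string", "duration_s": "int"},
)
print(play_sessions.missing_components())  # ['infrastructure']
```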

What do data product teams look like?

To maintain data products, each domain can have two cross-functional roles:

- Data product owner: Responsible for delivering value, satisfying the data users, and managing the life cycle of the data products

- Data product developer: Responsible for developing, serving, and maintaining the domain’s data products

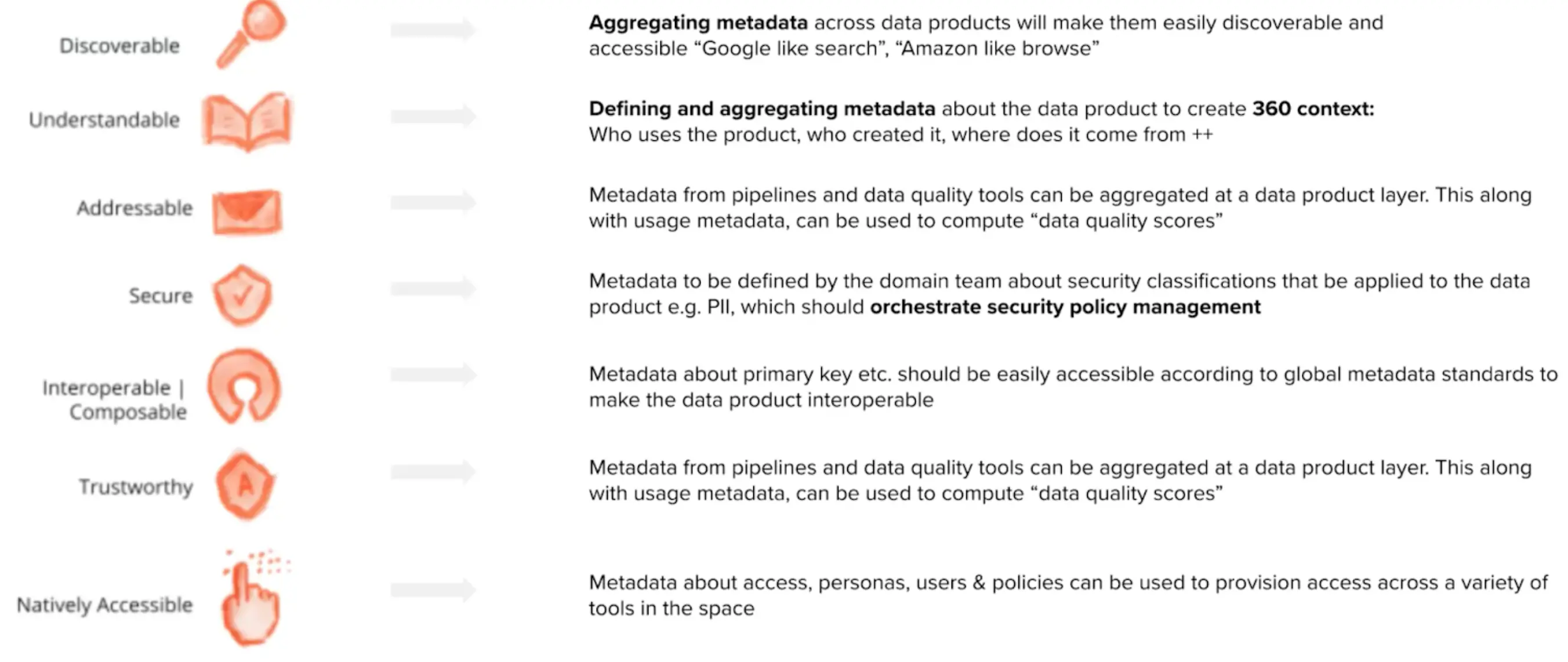

What are the ideal characteristics of a data product?

The domains are responsible for overseeing all three components and ensuring that their data product is:

- Discoverable

- Addressable

- Understandable

- Trustworthy

- Natively accessible

- Interoperable

- Valuable (on its own)

- Secure
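One way to make these characteristics actionable is to express each one as an automated check against a product’s metadata. The heuristics below are assumptions for illustration only (for instance, treating a quality score of at least 0.9 as "trustworthy"), and the metadata keys are hypothetical; each organization would define its own criteria.

```python
from typing import Callable

# Hypothetical heuristics: each characteristic is approximated by a simple
# check on a product's metadata. Real criteria would be organization-specific.
CHECKS: dict[str, Callable[[dict], bool]] = {
    "discoverable": lambda p: bool(p.get("catalog_entry")),
    "addressable": lambda p: bool(p.get("address")),  # stable, unique location
    "understandable": lambda p: bool(p.get("description")) and bool(p.get("schema")),
    "trustworthy": lambda p: p.get("quality_score", 0.0) >= 0.9,
    "natively accessible": lambda p: len(p.get("output_ports", [])) > 0,
    "interoperable": lambda p: bool(p.get("uses_global_ids", False)),
    "valuable on its own": lambda p: p.get("consumers", 0) > 0,
    "secure": lambda p: bool(p.get("access_policy")),
}


def assess(product: dict) -> dict[str, bool]:
    """Return which characteristics a product satisfies under these heuristics."""
    return {name: check(product) for name, check in CHECKS.items()}


product = {
    "catalog_entry": "listener.listener_profiles",
    "address": "mesh://listener/listener_profiles",  # hypothetical addressing scheme
    "description": "Current profile for each listener",
    "schema": {"listener_id": "string", "country": "string"},
    "quality_score": 0.95,
    "output_ports": ["sql"],
    "uses_global_ids": True,
    "consumers": 3,
}
gaps = [name for name, ok in assess(product).items() if not ok]
print(gaps)  # ['secure']
```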

How can you measure the effectiveness of a data product?

If you treat data as a product, you can monitor metrics, such as:

- Data adoption rate

- Data quality metrics

- Data trust score

- Total number of users

- User satisfaction (like NPS for data)
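Two of these metrics reduce to simple arithmetic. The sketch assumes adoption rate is defined as active users divided by eligible users in a period (a hypothetical definition; teams choose their own). For user satisfaction, it uses the standard Net Promoter Score formula: the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 to 6) on a 0 to 10 survey.

```python
def adoption_rate(active_users: int, eligible_users: int) -> float:
    """Share of eligible users who actually used the data product in a period."""
    if eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    return active_users / eligible_users


def nps(scores: list[int]) -> float:
    """Net Promoter Score from 0-10 survey answers: % promoters minus % detractors."""
    if not scores:
        raise ValueError("need at least one score")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


print(adoption_rate(45, 60))                  # 0.75
print(nps([10, 9, 9, 8, 7, 6, 3, 10, 9, 5]))  # 20.0 (5 promoters, 3 detractors, 10 answers)
```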


3. Self-serve data infrastructure as a platform

Why should you set up a data mesh platform?

Defining data domains and assigning data products can only work if the domains are equipped with the right resources.

For instance, the domain teams must be able to manage the cost and engineering effort involved in operating domain data products without relying on a central data team.

However, traditional data technologies are built for centralized architectures: centralized catalogs, a central warehouse schema, centrally allocated compute and storage resources, and so on.

These technologies are typically priced as a single bundle rather than per domain or workload. Security and privacy rules can’t be customized per domain or project, and all data pipelines are configured and orchestrated centrally.

If you rely on such technologies, establishing decentralized, autonomous, self-sufficient domains isn’t feasible.

That’s why the third data mesh principle calls for a self-serve data infrastructure as a platform.

What would the data infrastructure as a platform look like?

The data infrastructure as a platform must be:

- Domain-agnostic

- Able to support autonomous teams

- Designed for a generalist rather than a technologist

Ultimately, it should empower data product users to easily find, access, understand, and consume the data they want.

The objectives of the data infrastructure as a platform. Source: Data Mesh by Zhamak Dehghani, O'Reilly Media, Inc

What are the capabilities of such a platform?

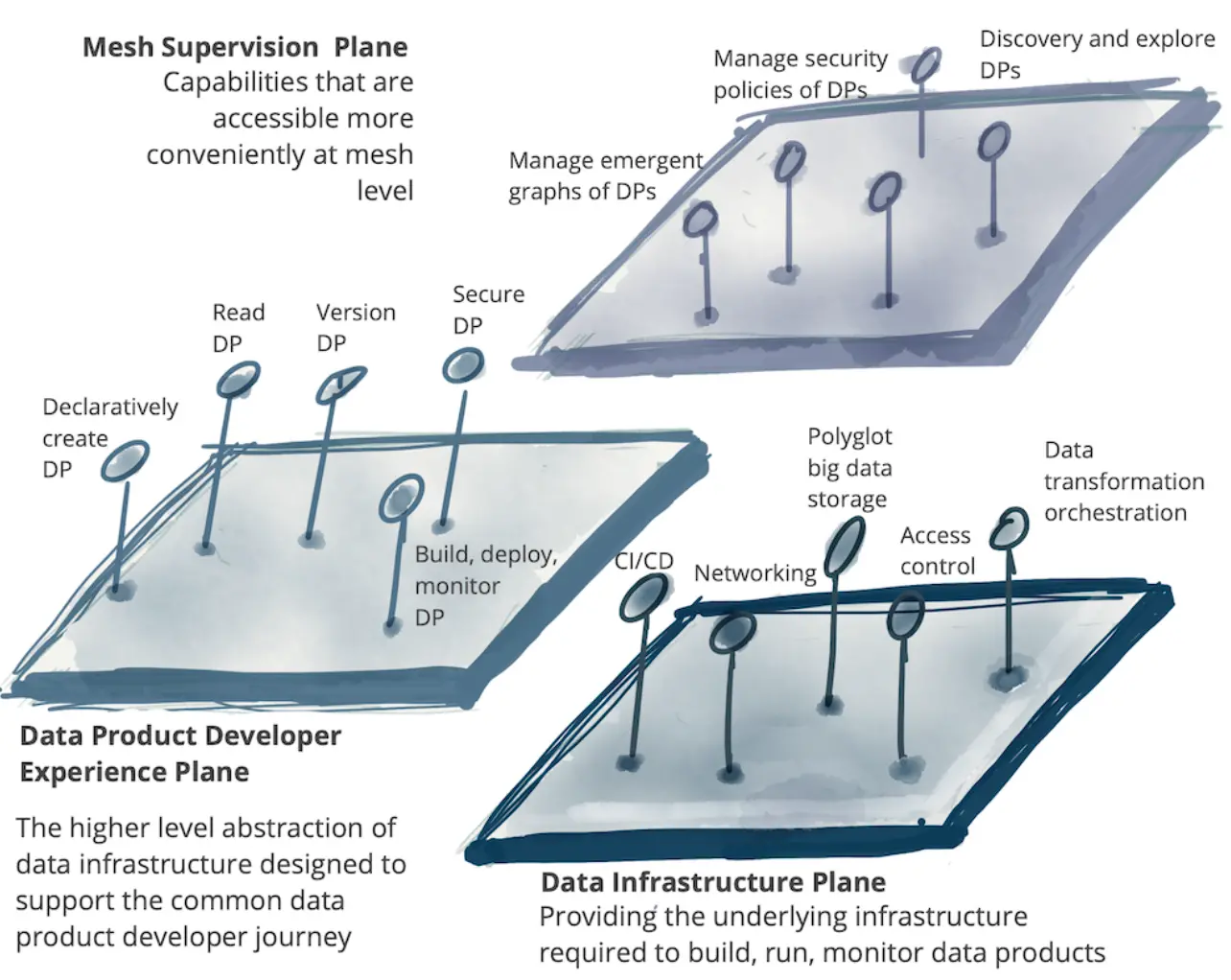

According to Dehghani, such a platform consists of three planes, each with its own capabilities:

1. Data infrastructure plane

This plane provides the underlying infrastructure to build, run, and manage the data products in a mesh. This includes:

- Scalable polyglot data storage with a lake, warehouse, or lakehouse

- Access control

- Pipeline workflow management to orchestrate and implement complex data pipeline tasks or ML model deployment workflows

2. Data product developer experience plane

This plane acts as the interface to help data product developers manage data product lifecycles. This includes:

- Data querying languages for computational data flow programming or algebraic SQL-like statements

- Data product versioning

3. Data mesh supervision plane

The purpose of this plane is to simplify data discovery, access, and governance. This includes:

- Data catalog solutions that solve data governance, standardization, and data discovery across an organization

- Data security with unified data access control and logging, encryption for data at rest and in motion, and data product versioning

The three planes of a data mesh infrastructure. Source: Data Mesh by Zhamak Dehghani, O'Reilly Media, Inc
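Pipeline workflow management in the infrastructure plane comes down to running tasks in dependency order. The sketch below uses Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical pipeline for a play-sessions data product; the task names are illustrative. Production orchestrators such as Apache Airflow add scheduling, retries, and monitoring on top of the same idea.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline for a "play_sessions" data product.
# Each task maps to the set of tasks it depends on.
pipeline = {
    "ingest_play_events": set(),
    "ingest_listener_profiles": set(),
    "clean_play_events": {"ingest_play_events"},
    "sessionize": {"clean_play_events", "ingest_listener_profiles"},
    "run_quality_checks": {"sessionize"},
    "publish_output_port": {"run_quality_checks"},
}


def run(tasks: dict[str, set[str]]) -> list[str]:
    """Execute tasks in dependency order; here 'executing' just records the order."""
    executed = []
    # static_order() raises graphlib.CycleError if the dependencies contain a cycle.
    for task in TopologicalSorter(tasks).static_order():
        executed.append(task)  # a real orchestrator would launch the job here
    return executed


order = run(pipeline)
print(order[-1])  # publish_output_port
```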

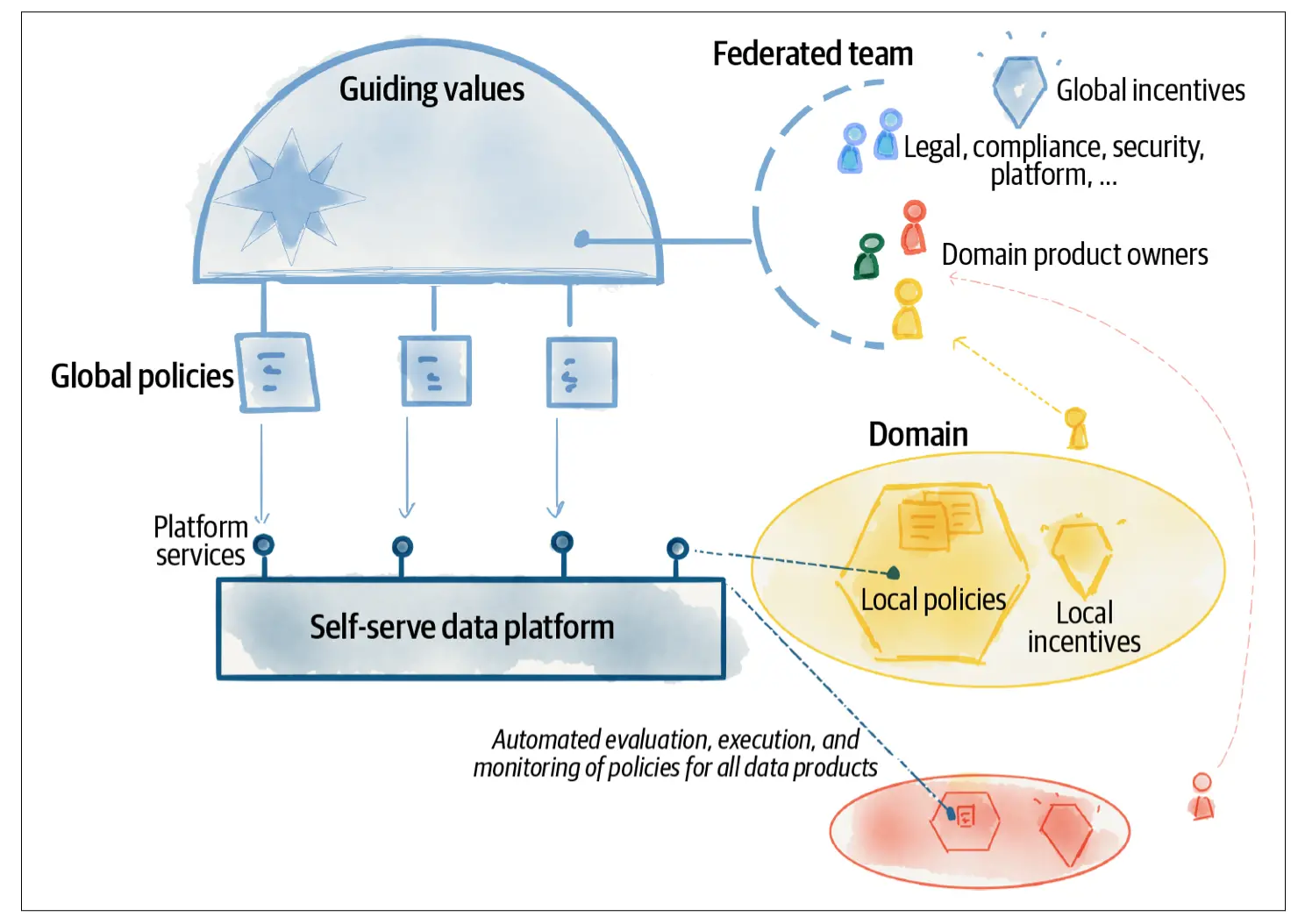

4. Federated computational governance

A decentralized architecture runs the risk of creating data silos, duplicates, inconsistent data management practices, and data chaos. It’s crucial for the individual domains to be interoperable and ensure consistency by operating under a set of global standards.

Dehghani believes that governance is the ideal mechanism to ensure that a mesh of independent data products is reliable, useful, interoperable, and delivers value consistently.

To that end, they propose a federated computational governance model — the fourth data mesh principle.

What is a federated computational governance model?

A federated computational governance model is a “decision-making model led by the federation of domain data product owners and data platform product owners, with autonomy and domain-local decision-making power, while creating and adhering to a set of global rules.”

The owners of the domains and data platforms form a federation, much like the states within a country that follows a federal system, where:

- A domain = a state

- A domain’s data product owner = a state governor

- The data mesh as a whole = the country

- The federation of domain and platform owners = the federal government

So, each domain maintains its autonomy and can create its own rules. However, it must still follow a set of federal (global) rules.

Why do you need a federated model?

Organizationally, a data mesh is a federation of smaller divisions (i.e., the domains) with a decent amount of autonomy.

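However, the federation must adhere to a set of standards and global policies. That’s where computational governance helps: the platform encodes these policies and enforces them automatically instead of relying on manual checks.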

Operating elements of a federated computational governance model

The five core elements of a federated governance model are:

- Federated team of domain product owners and subject matter experts

- Guiding values to manage the scope of the program

- Policies governing the mesh, such as security, conformance, legal, and interoperability

- Incentives or motivators to balance local and global optimization

- Platform automation, such as protocols, policies as code, automated testing, monitoring, and recovery

Operating elements of a federated computational governance model. Source: Data Mesh by Zhamak Dehghani, O'Reilly Media, Inc
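Policies as code let the federation write global rules once and have the platform evaluate them automatically, while each domain layers its own local rules on top. The sketch below is a minimal illustration under assumed names: the policy functions, the metadata keys (`owner`, `pii_columns`, `masked_columns`, `schema`, `model_version`), and the Recommendations-specific rule are all hypothetical.

```python
from typing import Callable, Optional

# A policy inspects a data product's metadata and returns a violation message, or None.
Policy = Callable[[dict], Optional[str]]


# --- Global policies, agreed on by the federation and applied to every domain ---
def require_owner(product: dict) -> Optional[str]:
    return None if product.get("owner") else "no owner assigned"


def pii_must_be_masked(product: dict) -> Optional[str]:
    masked = set(product.get("masked_columns", []))
    unmasked = [c for c in product.get("pii_columns", []) if c not in masked]
    return f"unmasked PII: {unmasked}" if unmasked else None


def require_global_listener_id(product: dict) -> Optional[str]:
    # Interoperability: any product with listener columns must carry the shared listener_id key.
    columns = product.get("schema", {})
    if any(c.startswith("listener") for c in columns) and "listener_id" not in columns:
        return "listener data without the global listener_id"
    return None


GLOBAL_POLICIES: list[Policy] = [require_owner, pii_must_be_masked, require_global_listener_id]

# --- Domain-local policies, added by each domain on top of the global ones ---
LOCAL_POLICIES: dict[str, list[Policy]] = {
    "Recommendations": [
        lambda product: None if product.get("model_version") else "model_version missing",
    ],
}


def evaluate(domain: str, product: dict) -> list[str]:
    """Run global and domain-local policies; return all violations found."""
    violations = []
    for policy in GLOBAL_POLICIES + LOCAL_POLICIES.get(domain, []):
        message = policy(product)
        if message is not None:
            violations.append(message)
    return violations


product = {
    "owner": "recommendations-team",
    "schema": {"listener_id": "string", "email": "string"},
    "pii_columns": ["email"],
    "masked_columns": [],
}
print(evaluate("Recommendations", product))
# ["unmasked PII: ['email']", 'model_version missing']
```

Running `evaluate` in a CI check or on every publish is one way the platform automation element could enforce the federation’s rules without a central reviewer.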

Now that we’ve understood all four data mesh principles, let’s see how to practice them by recalibrating our data culture and tech stacks.

How to rethink your culture stack and tech stack based on data mesh principles?

The data mesh is at the intersection of people, processes, and technology.

The best way to set it up is by rethinking your culture and tech stack. Let’s explore how.

1. Rethinking your culture stack for the data mesh

The data mesh architecture requires independent domains to gel well together. There are six things you can do to ensure that happens:

- Foster collaboration: Think of the data mesh setup exercise as a team sport, with collaboration being the key. So, everything you set up should have ingrained mechanisms that fuel effective collaboration.

- Appreciate the diversity of data assets: Treat all data assets — code, models, dashboards — as essential assets and make sure that they’re all easy to discover, access, maintain, and manage.

- Focus on self-serve: As the business evolves rapidly, your tech stack and data must evolve in tandem rather than play catch-up. One way to do so is to reduce dependencies by focusing on self-serve tools, a documentation-first culture, and automation wherever possible.

- Create systems of trust: Set up an ecosystem based on trust and open communication. So, make sure that everyone’s work is accessible, discoverable, and transparent. Encourage domains and team members to communicate as often as possible on data and metadata.

- Ensure alignment of technology: Get all data product owners and domains to align on the technology being used and its purpose. Despite the autonomy of domains, the underlying technology (cloud providers, programming languages, and job scheduling tools) should remain standard.

- Create rituals: Establish rituals such as documentation hours, weekly case studies, and sessions on learnings from data to help your organization stay on track and embrace the sociotechnical changes.

2. Rethinking your tech stack for the data mesh

The premise of the data mesh architecture is end-to-end ownership of domain data, which eliminates bottlenecks and helps organizations extract the full potential of their data.

A top reason for challenges with data is a lack of context. Data consumers need context on data whenever they’re using it. This need varies depending on the consumer’s role and responsibilities.

So, the technology used to set up the data mesh infrastructure as a platform should be personalized by domain, user role, or project, and should offer context readily wherever it’s needed.

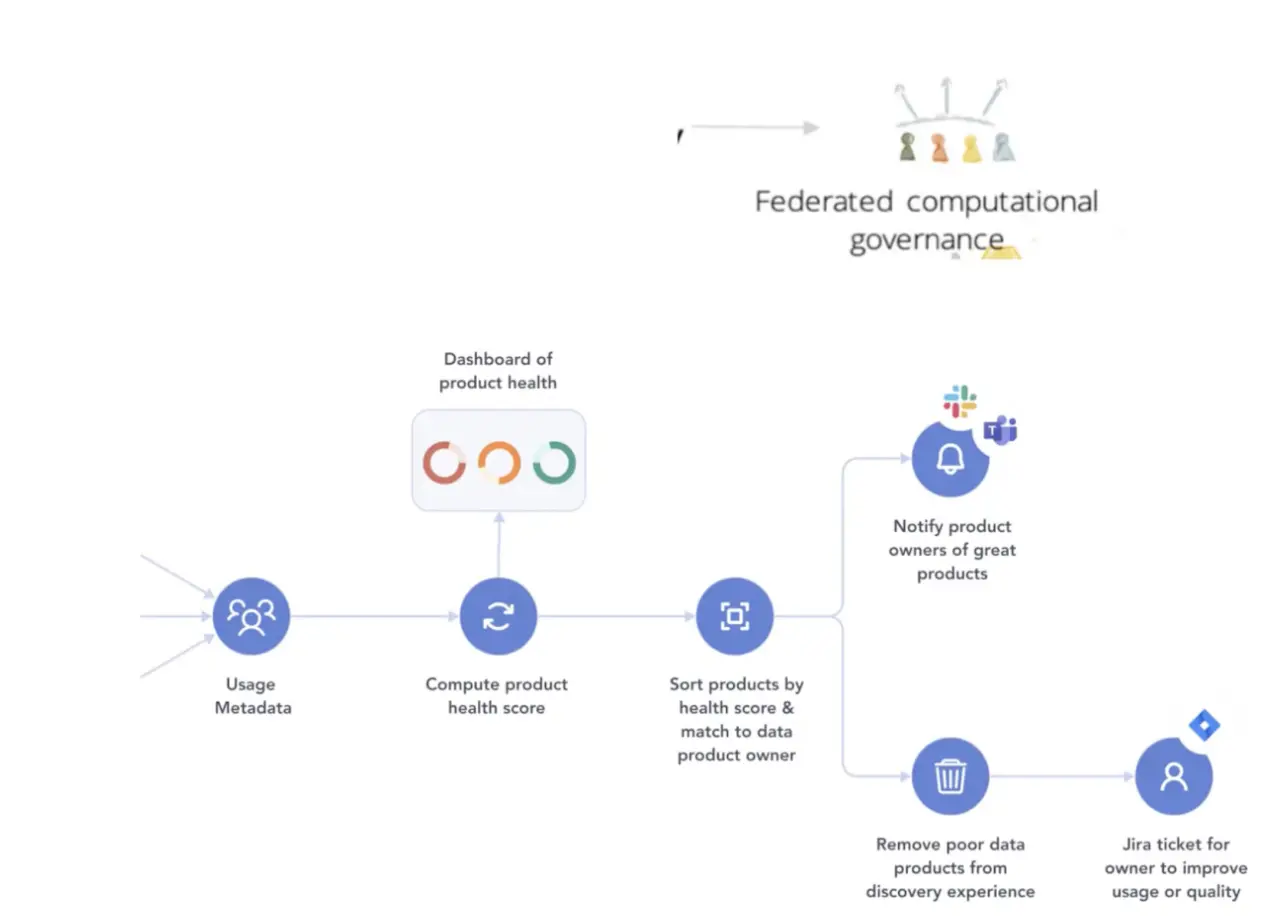

Another tenet of the mesh architecture is federated computational governance.

Implementing federated governance technologically requires feedback loops and bottom-up input from across the organization to naturally federate and govern data products.

An automated workflow for finding and governing data product health. Source: Towards Data Science
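A feedback loop for data product health can be as simple as evaluating a few signals on a schedule and routing any problems back to the owning domain. The sketch assumes two hypothetical signals (freshness against an SLA and the pass rate of quality tests) and a 95% pass-rate threshold chosen for illustration; `HealthSignal`, `check_health`, and `route_alerts` are illustrative names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class HealthSignal:
    product: str
    owner: str
    last_updated: datetime
    freshness_sla: timedelta
    tests_passed: int
    tests_total: int


def check_health(signal: HealthSignal, now: datetime, min_pass_rate: float = 0.95) -> list[str]:
    """Return reasons a data product is unhealthy (an empty list means healthy)."""
    issues = []
    if now - signal.last_updated > signal.freshness_sla:
        issues.append("stale")
    if signal.tests_total and signal.tests_passed / signal.tests_total < min_pass_rate:
        issues.append("failing quality tests")
    return issues


def route_alerts(signals: list[HealthSignal], now: datetime) -> dict[str, list[str]]:
    """Group problems by owner so each domain gets feedback on its own products."""
    alerts: dict[str, list[str]] = {}
    for s in signals:
        for issue in check_health(s, now):
            alerts.setdefault(s.owner, []).append(f"{s.product}: {issue}")
    return alerts


now = datetime(2025, 1, 1, 12, tzinfo=timezone.utc)
signals = [
    HealthSignal("play_sessions", "media-player", now - timedelta(hours=30), timedelta(hours=24), 20, 20),
    HealthSignal("listener_profiles", "listener", now - timedelta(hours=1), timedelta(hours=24), 18, 20),
]
print(route_alerts(signals, now))
# {'media-player': ['play_sessions: stale'], 'listener': ['listener_profiles: failing quality tests']}
```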

All three requirements — context, personalization, and governance workflows — can be met by leveraging metadata. Metadata is the key to powering these fundamental principles behind the data mesh.

For instance, making the data products discoverable can be achieved by aggregating all metadata and making it searchable with a Google-like search.

Similarly, building a 360-degree context of each data product — who owns it, and where each asset comes from can be achieved by defining and aggregating the relevant metadata.

The characteristics of “Data as a Product." Source: Towards Data Science
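Aggregating metadata into a searchable index is the core of discoverability. The following is a minimal sketch, assuming a hypothetical `MetadataIndex` class built on a plain inverted index with AND semantics across query terms; a production catalog would add ranking, stemming, synonyms, and permission checks.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class MetadataIndex:
    """Minimal inverted index over data product metadata (a sketch, not a catalog)."""

    def __init__(self) -> None:
        self.postings: dict[str, set[str]] = defaultdict(set)
        self.records: dict[str, dict[str, str]] = {}

    def add(self, product: str, metadata: dict[str, str]) -> None:
        # Aggregate every metadata field (owner, description, upstream...) into one record.
        self.records[product] = metadata
        for value in metadata.values():
            for token in tokenize(value):
                self.postings[token].add(product)
        for token in tokenize(product):
            self.postings[token].add(product)

    def search(self, query: str) -> list[str]:
        """Return products whose name or metadata contain every query term."""
        terms = tokenize(query)
        if not terms:
            return []
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(hits)


index = MetadataIndex()
index.add("listener_profiles", {"owner": "Listener domain",
                                "description": "Profile and preferences for each listener"})
index.add("play_sessions", {"owner": "Media Player domain",
                            "description": "Listening sessions per listener",
                            "upstream": "play_events"})
print(index.search("listener"))           # ['listener_profiles', 'play_sessions']
print(index.search("listener sessions"))  # ['play_sessions']
```

Because there is no stemming, a query for "listeners" returns nothing here: the index stores only the exact token "listener". Closing gaps like that is what a real search engine adds.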

By leveraging metadata for the data mesh, you can:

- Embed context within your daily workflows for each domain

- Build personalized experiences for each data product

- Develop an intuitive, open, extensible, and automated system that’s easy to learn and use

- Democratize governance with interoperable data products

Summing up on Data Mesh Principles

According to Data Mesh Learning, the data mesh is an approach to being data-driven that:

- Uses data product thinking — approaching your data as a product instead of a by-product of how you do business

- Decentralizes data ownership (so data/data quality is no longer owned by the centralized data lake team)

This approach is a response to the data realities of organizations today and their trajectory.

The four data mesh principles (domain-oriented decentralized data ownership, data as a product, self-serve data infrastructure, and federated computational governance) are a way to transform and optimize today’s data stacks.



How Atlan Supports Data Mesh Concepts

Atlan helps organizations implement data mesh principles by enabling domain teams to create and manage data products that can be easily discovered and consumed by other teams.

Data products in Atlan are scored based on data mesh principles such as discoverability, interoperability, and trust, providing organizations with insights into their data mesh maturity.

Atlan’s automated lineage tracking and metadata management capabilities further support data mesh implementation by providing a comprehensive understanding of data flows and dependencies across domains.

How Autodesk Activates Their Data Mesh with Snowflake and Atlan

- Autodesk, a global leader in design and engineering software and services, created a modern data platform to better support their colleagues’ business intelligence needs

- Contending with a massive increase in data to ingest, and demand from consumers, Autodesk’s team began executing a data mesh strategy, allowing any team at Autodesk to build and own data products

- Using Atlan, 60 domain teams now have full visibility into the consumption of their data products, and Autodesk’s data consumers have a self-service interface to discover, understand, and trust these data products

