OpenMetadata Data Quality: Native Features, Integrations & More


OpenMetadata is an open-source data catalog built on a unified metadata model, an open API specification, extensibility, and pull-based metadata ingestion. When it was first released in mid-2021, OpenMetadata had few data quality features.


Release 0.9.0, in March 2022, added OpenMetadata’s first native data quality feature: the ability to add tests. Since then, OpenMetadata’s data quality features have evolved to include data profiling, column-level tests, freshness metrics, data lake tests, integration with Great Expectations, and more.

This article walks you through the scope of OpenMetadata’s data quality features and how they work.

Table of contents

- OpenMetadata data quality: Native features

- How data quality works in OpenMetadata

- Summary

- OpenMetadata Data Quality: Related reads

OpenMetadata data quality: Native features

OpenMetadata offers data quality and observability features that allow you to do the following:

- Create and run tests that execute assertions against data from all popular database connectors, such as MySQL, PostgreSQL, Snowflake, and Redshift

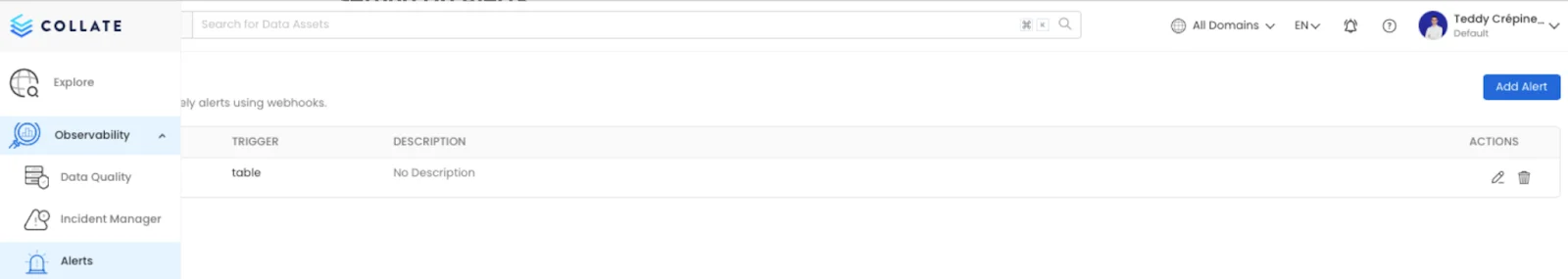

- Set up observability alerts that trigger notifications across various channels when tests fail

Configuring observability alerts in OpenMetadata - Source: OpenMetadata docs.

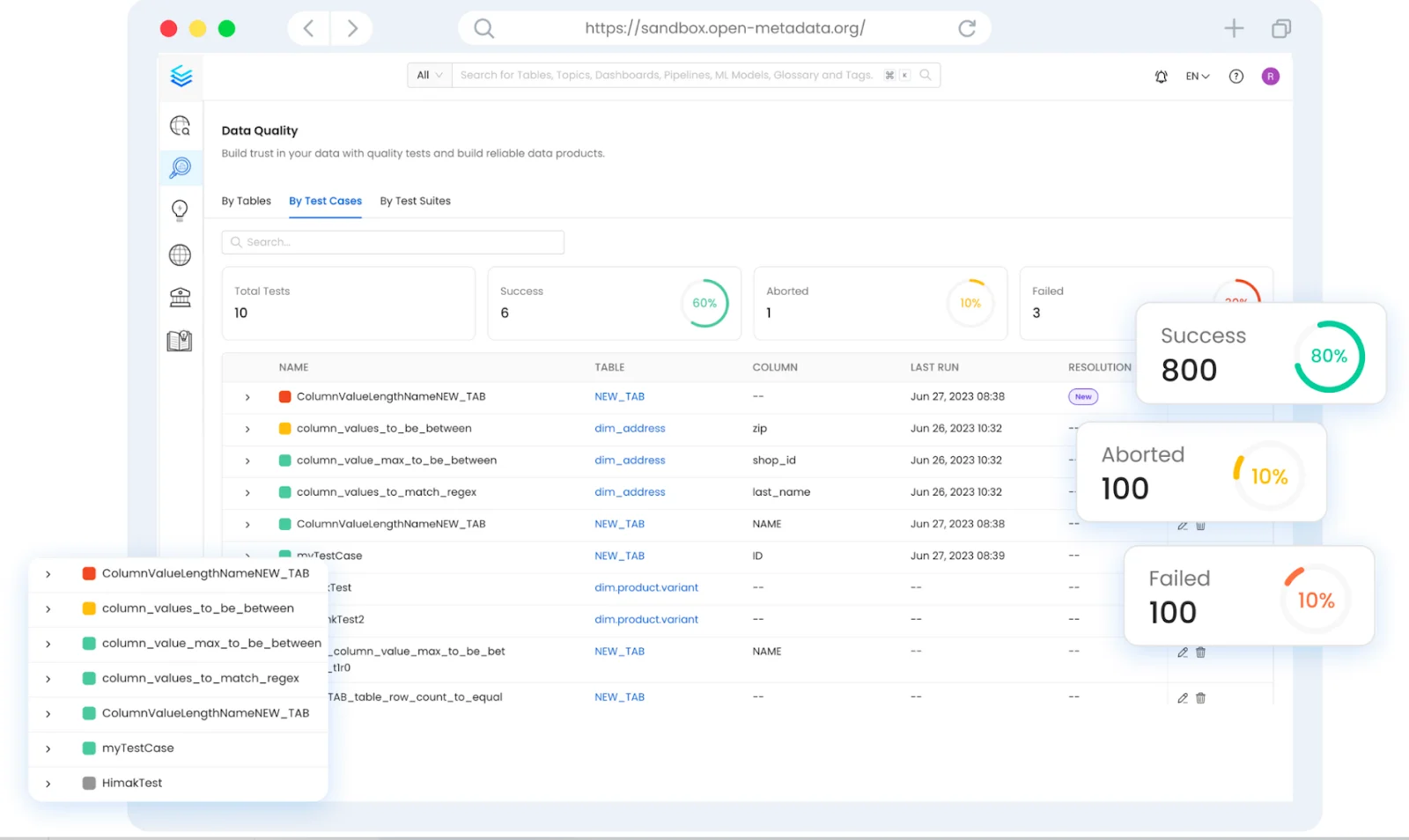

- Build a data health dashboard where you can track test results in real time and prioritize critical issues

OpenMetadata data quality dashboard - Source: OpenMetadata docs.

- Create resolution workflows for failed tests and, optionally, notify data consumers about the failure

OpenMetadata achieves these goals through native features along with integrations with external tools such as Great Expectations and dbt. Before exploring these features further, let’s look at how data quality works in OpenMetadata.

How data quality works in OpenMetadata

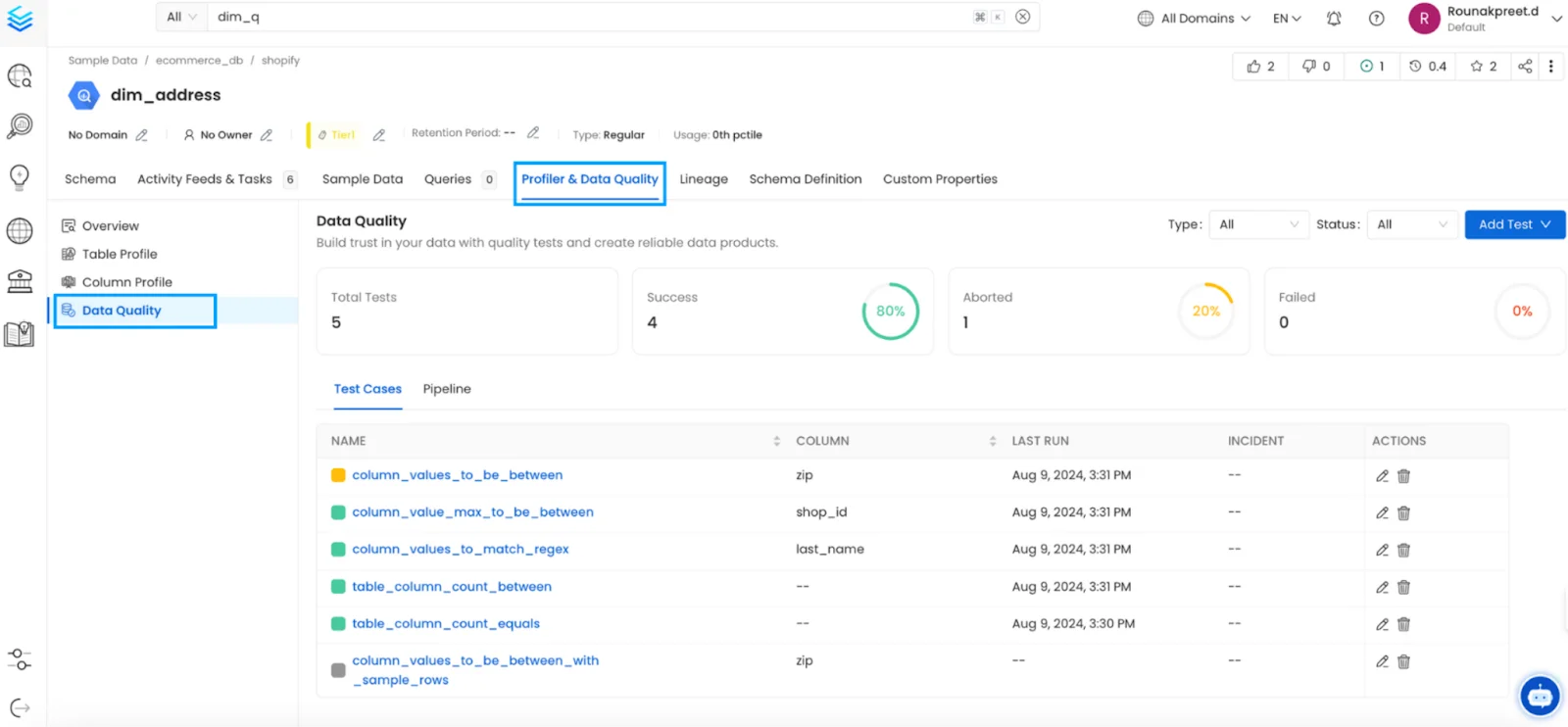

OpenMetadata doesn’t require you to create or maintain a YAML or JSON configuration file to define tests. You can define them from the UI, in the Profiler & Data Quality tab. Within this tab, you can define:

- Table-level structural tests

- Column-level tests

- Data diff quality tests

Profiler & Data Quality Tab - Source: OpenMetadata docs.
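To make these test types concrete, here is a minimal, library-free Python sketch of the kind of assertions they perform. The function names are hypothetical and only loosely modeled on OpenMetadata’s built-in test definitions (such as tableRowCountToBeBetween and columnValuesToBeNotNull). Rows are assumed to be plain dictionaries; this is not how OpenMetadata actually runs tests against a database.

```python
# Hypothetical sketches of table-level, column-level, and data diff checks.
# Rows are plain dicts; this is an illustration, not OpenMetadata's implementation.

def table_row_count_to_be_between(rows, min_count, max_count):
    """Table-level: pass if the row count lies within [min_count, max_count]."""
    return min_count <= len(rows) <= max_count

def column_values_to_be_not_null(rows, column):
    """Column-level: pass if no row has a missing or None value in `column`."""
    return all(row.get(column) is not None for row in rows)

def column_values_to_be_between(rows, column, low, high):
    """Column-level: pass if every non-null value in `column` lies within [low, high]."""
    return all(low <= row[column] <= high for row in rows if row.get(column) is not None)

def data_diff(source, target, key):
    """Data diff: keys missing from target, extra keys in target, and changed rows."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    return {
        "missing": sorted(src.keys() - tgt.keys()),
        "extra": sorted(tgt.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

# Usage with made-up data
orders = [{"id": 1, "amount": 20}, {"id": 2, "amount": None}, {"id": 3, "amount": 75}]
replica = [{"id": 1, "amount": 20}, {"id": 3, "amount": 80}, {"id": 4, "amount": 5}]
print(table_row_count_to_be_between(orders, 1, 10))          # True
print(column_values_to_be_not_null(orders, "amount"))         # False (id 2 is null)
print(column_values_to_be_between(orders, "amount", 0, 100))  # True
print(data_diff(orders, replica, "id"))  # {'missing': [2], 'extra': [4], 'changed': [3]}
```

Each check returns a plain pass/fail boolean here; a real test framework would also record the observed values so that a failure can be explained.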

You can also create custom test definitions, test suites, and test cases, and write test case results, using the API or the Python SDK. Test cases created this way can also be made available in the OpenMetadata UI.
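The sketch below does not use OpenMetadata’s API or Python SDK. It is a hypothetical, library-free illustration of the underlying idea: a custom test definition is a named check with parameters, a test case applies it to specific data, and each run produces a result record. All names (CustomTestDefinition, register, run_test_case, email_domain_check) are invented for illustration, and reporting an exception as "Aborted" is an assumption chosen to mirror the Success, Aborted, and Failed statuses shown in the UI.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class CustomTestDefinition:
    """Hypothetical custom test definition: a named check over rows and parameters."""
    name: str
    description: str
    check: Callable[[List[dict], dict], bool]  # (rows, parameters) -> passed?

# In-memory registry standing in for wherever definitions would really be stored.
REGISTRY: Dict[str, CustomTestDefinition] = {}

def register(definition: CustomTestDefinition) -> None:
    REGISTRY[definition.name] = definition

def run_test_case(definition_name: str, rows: List[dict], parameters: dict) -> dict:
    """Run a registered definition and return a result record.
    Errors while evaluating the check are reported as 'Aborted', not 'Failed'."""
    definition = REGISTRY[definition_name]
    try:
        passed = definition.check(rows, parameters)
    except Exception as exc:
        return {"test_definition": definition_name, "status": "Aborted", "reason": str(exc)}
    return {"test_definition": definition_name, "status": "Success" if passed else "Failed"}

# Usage: a made-up check that every email in a column ends with a given domain
register(CustomTestDefinition(
    name="email_domain_check",
    description="Every value in the column ends with the given domain",
    check=lambda rows, p: all(r[p["column"]].endswith(p["domain"]) for r in rows),
))
rows = [{"email": "a@example.com"}, {"email": "b@example.org"}]
print(run_test_case("email_domain_check", rows, {"column": "email", "domain": "@example.com"}))
# {'test_definition': 'email_domain_check', 'status': 'Failed'}
print(run_test_case("email_domain_check", rows, {"column": "mail", "domain": "@example.com"}))
# {'test_definition': 'email_domain_check', 'status': 'Aborted', 'reason': "'mail'"}
```

Separating the definition (reusable logic plus parameters) from the test case (a specific application of it) is what lets one custom check be reused across many tables.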

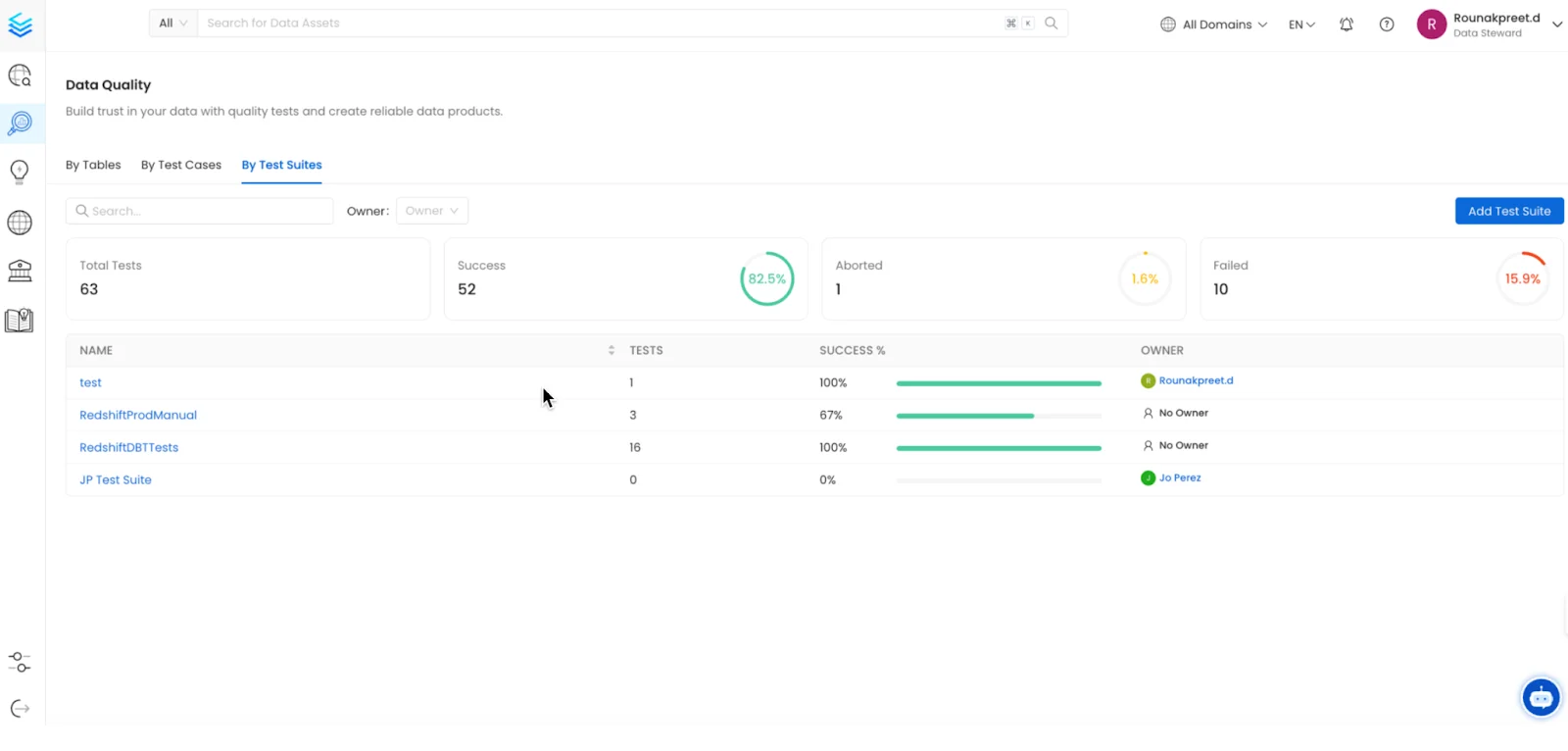

Test suites in OpenMetadata

Tests in OpenMetadata are grouped into test suites, which are run by pipelines. Test suites let you group related test cases, even ones that belong to different tables, which gives you a consistent view of the quality of your data assets. They also help streamline alerting and notifications, reducing unnecessary noise.
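As a hypothetical illustration of the noise-reduction idea (not OpenMetadata’s alerting engine), the Python sketch below sends one message per suite and channel summarizing all failing test cases in that suite, rather than one message per failing test. The result fields (suite, test_case, status) and the channel names are assumptions.

```python
from collections import defaultdict

def suite_notifications(results, channels):
    """Group failed results by suite so each channel gets one message per suite,
    instead of one message per failing test case."""
    failed_by_suite = defaultdict(list)
    for r in results:
        if r["status"] == "Failed":
            failed_by_suite[r["suite"]].append(r["test_case"])
    return [
        (channel, f"{suite}: {len(cases)} failed ({', '.join(sorted(cases))})")
        for suite, cases in sorted(failed_by_suite.items())
        for channel in channels
    ]

# Usage with made-up results
results = [
    {"suite": "revenue", "test_case": "orders_id_not_null", "status": "Failed"},
    {"suite": "revenue", "test_case": "payments_amount_positive", "status": "Failed"},
    {"suite": "revenue", "test_case": "orders_row_count", "status": "Success"},
    {"suite": "customers", "test_case": "email_format", "status": "Failed"},
]
for channel, message in suite_notifications(results, ["slack"]):
    print(channel, message)
# slack customers: 1 failed (email_format)
# slack revenue: 2 failed (orders_id_not_null, payments_amount_positive)
```

Three failing tests produce two messages here; with many tests per suite, the reduction in alert volume grows accordingly.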

OpenMetadata offers two types of test suites:

- Logical test suite: A logical test suite gives you a consolidated view of related test cases without requiring you to run them as a single unit. It isn’t associated with a pipeline. Note: A pipeline in OpenMetadata is a scheduler that runs your test suite.

- Executable test suite: An executable test suite is associated with a single table. It ensures that all the tests for that table are stored and executed together, which helps you test the table’s integrity and functionality.
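The difference between the two suite types can be sketched as a small data model. This is a hypothetical Python illustration, not OpenMetadata’s entity schema: an executable suite is bound to one table (and may have a pipeline), while a logical suite only references existing test cases from any tables and has no pipeline. The class names and the pipeline name are invented.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass(frozen=True)
class CaseRef:
    """Hypothetical reference to a test case and the table it targets."""
    name: str
    table: str

@dataclass
class ExecutableSuite:
    """Bound to exactly one table; every test case must target that table."""
    table: str
    cases: List[CaseRef] = field(default_factory=list)
    pipeline: Optional[str] = None  # name of the (hypothetical) scheduler that runs it

    def add(self, case: CaseRef) -> None:
        if case.table != self.table:
            raise ValueError(f"{case.name} targets {case.table}, not {self.table}")
        self.cases.append(case)

@dataclass
class LogicalSuite:
    """Groups existing test cases from any tables for a consolidated view; no pipeline."""
    name: str
    cases: List[CaseRef] = field(default_factory=list)

    def tables(self) -> List[str]:
        return sorted({c.table for c in self.cases})

# Usage
orders_suite = ExecutableSuite(table="orders", pipeline="orders_dq_daily")
orders_suite.add(CaseRef("orders_id_not_null", "orders"))
payments_suite = ExecutableSuite(table="payments")
payments_suite.add(CaseRef("payments_amount_positive", "payments"))

revenue_view = LogicalSuite("revenue_checks", orders_suite.cases + payments_suite.cases)
print(revenue_view.tables())  # ['orders', 'payments']
```

Adding a payments test case to orders_suite raises ValueError, which captures the "one table per executable suite" rule.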

Running tests on a schedule in OpenMetadata

Whether you want to run an individual test or a test suite, you can run it manually or on a schedule. To run tests on a schedule, they must be associated with a pipeline.

You can create a pipeline from the Profiler & Data Quality tab and schedule it using a cron expression. The results of pipeline runs appear in the test cases section.
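OpenMetadata interprets the cron expression itself; the Python sketch below only illustrates standard five-field cron semantics (minute, hour, day of month, month, day of week) so you can sanity-check what an expression such as "0 2 * * *" means. It supports *, single values, ranges, steps, and comma-separated lists, and it simplifies one rule, noted in the code. The function names are hypothetical.

```python
from datetime import datetime

def _field_matches(expr, value, low, high):
    """Match one cron field: '*', 'n', 'a-b', '*/step', 'a-b/step', or comma lists."""
    for part in expr.split(","):
        base, _, step = part.partition("/")
        step = int(step) if step else 1
        if base == "*":
            start, end = low, high
        elif "-" in base:
            start, end = (int(x) for x in base.split("-"))
        else:
            start = end = int(base)
        if start <= value <= end and (value - start) % step == 0:
            return True
    return False

def cron_matches(expression, when):
    """Return True if `when` (to the minute) matches a five-field cron expression.
    Simplification: all fields are ANDed, unlike standard cron, which ORs
    day-of-month and day-of-week when both are restricted."""
    minute, hour, dom, month, dow = expression.split()
    cron_dow = (when.weekday() + 1) % 7  # cron: 0 = Sunday; Python weekday(): 0 = Monday
    return (_field_matches(minute, when.minute, 0, 59)
            and _field_matches(hour, when.hour, 0, 23)
            and _field_matches(dom, when.day, 1, 31)
            and _field_matches(month, when.month, 1, 12)
            and _field_matches(dow, cron_dow, 0, 6))

# Usage: "0 2 * * *" means every day at 02:00
print(cron_matches("0 2 * * *", datetime(2024, 5, 6, 2, 0)))        # True
print(cron_matches("0 2 * * *", datetime(2024, 5, 6, 3, 0)))        # False
# Every 15 minutes on weekdays; 2024-05-06 is a Monday
print(cron_matches("*/15 * * * 1-5", datetime(2024, 5, 6, 9, 30)))  # True
```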

Visualizing tests in OpenMetadata

There are two ways in which you can visualize test results in OpenMetadata:

- Using the Quality page

Visualizing test results in OpenMetadata - Source: OpenMetadata docs.

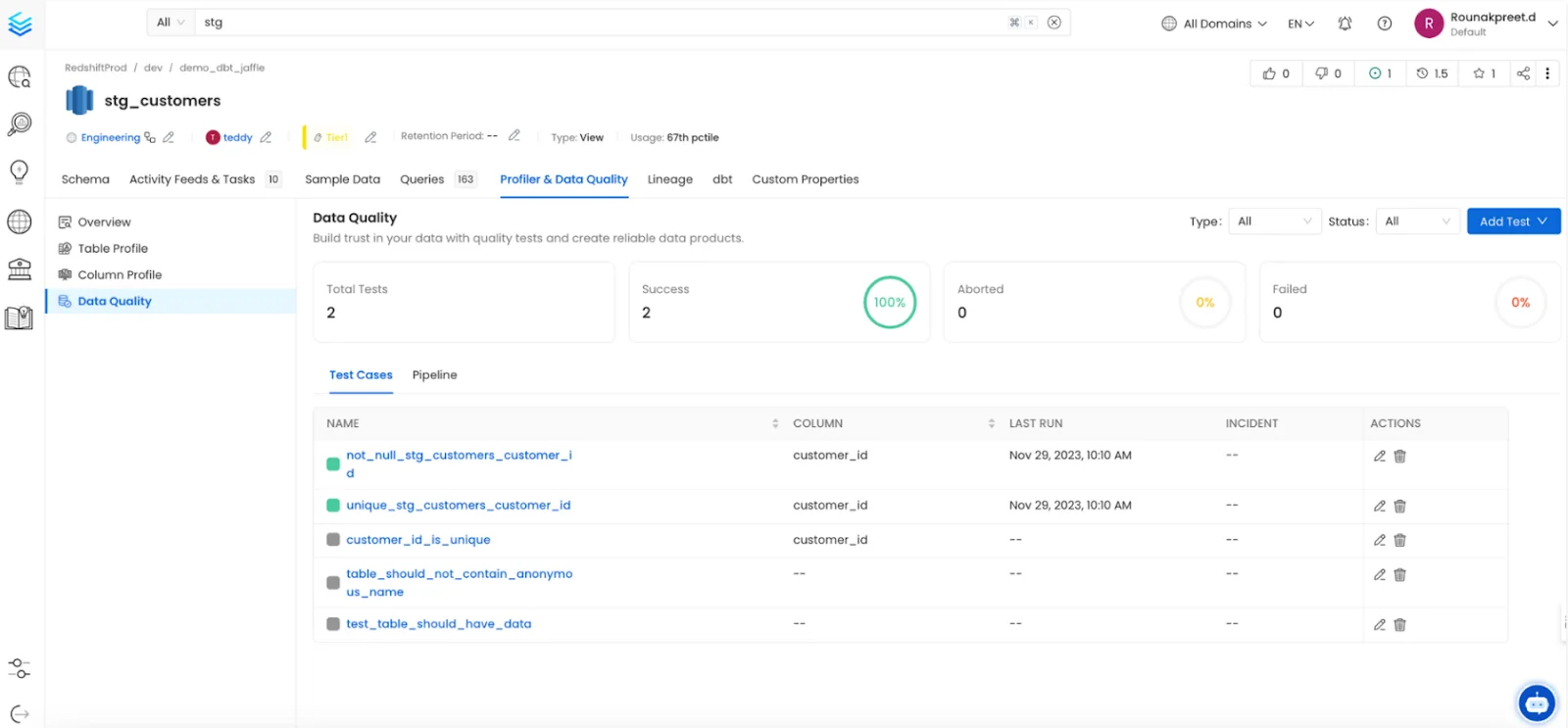

- Using the Profiler & Data Quality tab (under the table entity)

Another way to visualize test results in OpenMetadata - Source: OpenMetadata docs.

You can search and filter test cases by table or entity, test case, test suite, and test owner. You can also see a summary of the test runs by looking at the Total Tests, Success, Aborted, and Failed counts.
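The summary counts and filters can be illustrated with a short Python sketch. This is a hypothetical model of what the UI displays, not OpenMetadata code: each result is assumed to be a dict with table, suite, owner, and status fields, and the summary keys mirror the labels above.

```python
from collections import Counter

def summarize(results):
    """Count results by status, mirroring the Total/Success/Aborted/Failed summary."""
    counts = Counter(r["status"] for r in results)
    return {
        "Total": len(results),
        "Success": counts["Success"],
        "Aborted": counts["Aborted"],
        "Failed": counts["Failed"],
    }

def filter_results(results, **criteria):
    """Keep results whose fields equal every given criterion, e.g. owner="raj"."""
    return [r for r in results if all(r.get(k) == v for k, v in criteria.items())]

# Usage with made-up results
results = [
    {"test_case": "orders_id_not_null", "table": "orders", "suite": "revenue", "owner": "ana", "status": "Success"},
    {"test_case": "orders_row_count", "table": "orders", "suite": "revenue", "owner": "ana", "status": "Failed"},
    {"test_case": "payments_amount_positive", "table": "payments", "suite": "revenue", "owner": "raj", "status": "Aborted"},
    {"test_case": "email_format", "table": "customers", "suite": "crm", "owner": "raj", "status": "Success"},
]
print(summarize(results))
# {'Total': 4, 'Success': 2, 'Aborted': 1, 'Failed': 1}
print(summarize(filter_results(results, owner="raj")))
# {'Total': 2, 'Success': 1, 'Aborted': 1, 'Failed': 0}
```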

Other data quality and observability features

Beyond the core data quality features, OpenMetadata offers several other features you can use to monitor your data, such as:

- Data Profiler

- Observability Alerts

- Incident Manager
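The Data Profiler computes metrics about your tables and columns. As a rough illustration of what column-level profiling involves, the Python sketch below computes a few common metrics for a list of values. The metric names and their selection are assumptions for illustration, not the profiler’s actual output schema.

```python
def profile_column(values):
    """Compute simple profiling metrics for one column's values (None = null)."""
    non_null = [v for v in values if v is not None]
    total = len(values)
    return {
        "values_count": total,
        "null_count": total - len(non_null),
        "null_proportion": (total - len(non_null)) / total if total else None,
        "distinct_count": len(set(non_null)),
        "min": min(non_null) if non_null else None,
        "max": max(non_null) if non_null else None,
        "mean": sum(non_null) / len(non_null) if non_null else None,
    }

# Usage with a made-up numeric column
print(profile_column([10, None, 20, 30]))
# {'values_count': 4, 'null_count': 1, 'null_proportion': 0.25, 'distinct_count': 3,
#  'min': 10, 'max': 30, 'mean': 20.0}
```

Metrics like these are also what column-level tests typically assert on, for example that the null proportion stays below a threshold.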

How organizations are making the most of their data using Atlan

The recently published Forrester Wave report compared all the major enterprise data catalogs and positioned Atlan as the market leader. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Making the data ecosystem AI- and automation-first

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as their core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time spent by data engineers and analysts on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data, provided it is discoverable and trustworthy: in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve its compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information and propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.

Book your personalized demo today to find out how Atlan can help your organization establish and scale data governance programs.

Summary

This article took you through some of OpenMetadata’s data quality features, how they work, and how you can extend them through native integrations with external tools like Great Expectations and dbt, among many others.

OpenMetadata also pairs the data quality features covered in this article with several governance and observability features, such as test suite ownership, PII tagging, and observability alerts. Together, these give you an integrated data quality, observability, and governance layer.

Since release 1.4, there have been improvements and upgrades in data quality, especially relating to specific database connectors for Redshift and DynamoDB, UI performance improvements, data profiler improvements, and so on. You can check out the details on OpenMetadata’s public roadmap.

OpenMetadata Data Quality: Related reads

- OpenMetadata: Design Principles, Architecture & More

- OpenMetadata vs. DataHub: Which One to Choose in 2025

- Alation vs. OpenMetadata vs. Collibra vs. Atlan: Find the Right Fit

- OpenMetadata vs. OpenLineage: Features, Architecture & More

- OpenMetadata + dbt: All Your Data Assets in One Place

- What is Data Quality?: Causes, Detection, and Fixes

- Data Quality Metrics: How to Monitor the Health of Your Data Estate

- 6 Popular Open Source Data Quality Tools To Know in 2025: Overview, Features & Resources

- Forrester on Data Quality: Approach, Challenges, and Best Practices

- Gartner Magic Quadrant for Data Quality: Overview, Capabilities, Criteria

- Measurable Goals for Data Quality: Ensuring Reliable Insights and Growth

- How To Improve Data Quality In 12 Actionable Steps?

- Data Quality in Data Governance: The Crucial Link That Ensures Data Accuracy and Integrity

- Data Quality vs Data Governance: Learn the Differences & Relationships!

- 9 Components to Build the Best Data Quality Framework

- 10 Steps to Improve Data Quality in Healthcare

- 11 Steps to Build an API-Driven Data Quality Framework

- The Evolution of Data Quality: From the Archives to the New Age

- Data Quality Issues: Steps to Assess and Resolve Effectively

- Who is a Data Quality Analyst and How to Become One?

- Data Quality Strategy: 10 Steps to Excellence!

- Data Quality Dimensions: Do They Matter in 2025 & Beyond?

- Data Quality Culture: Everything You Need to Know in 2025!

- Data Quality and Observability: Key Differences & Relationships!

- Data Quality Testing: Key to Ensuring Accurate Insights!

- Data Quality Management: The Ultimate Guide

- Data Quality Fundamentals: Why It Matters in 2025!

- How to Fix Your Data Quality Issues: 6 Proven Solutions!

- Predictive Data Quality: What is It & How to Go About It

- Data Quality is Everyone’s Problem, but Who is Responsible?

- Top 10 Data Quality Best Practices to Improve Data Performance

- Data Quality Problems? 5 Ways to Fix Them in 2025!

- Is Atlan compatible with data quality tools?
